Machine Learning, Data Mining, Genetic Algorithms, Neural ...

- 1. Machine Learning, Data Mining, Genetic Algorithms, Neural Networks ISYS370 Dr. R. Weber

- 2. Concept Learning is a Form of Inductive Learning. The learner uses positive examples (instances that ARE examples of a concept) and negative examples (instances that ARE NOT examples of a concept).

- 3. Concept Learning needs empirical validation. Whether the data are dense or sparse determines the quality of different methods.

- 4. Validation of Concept Learning (i): The learned concept should be able to correctly classify new instances of the concept. When it succeeds on a real instance of the concept, it finds a true positive. When it fails on a real instance of the concept, it produces a false negative.

- 5. Validation of Concept Learning (ii): The learned concept should be able to correctly classify new instances of the concept. When it succeeds on a counterexample, it finds a true negative. When it fails on a counterexample, it produces a false positive.
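
The four outcomes on slides 4 and 5 can be tallied with a few lines of code. This is a minimal sketch, not part of the original deck: the function name `confusion_counts` and the label lists are hypothetical and chosen only for illustration.

```python
def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for a learned concept.

    actual[i] is True when instance i really is an example of the concept;
    predicted[i] is True when the learned concept classifies it as one.
    """
    counts = {"TP": 0, "FN": 0, "TN": 0, "FP": 0}
    for is_member, says_member in zip(actual, predicted):
        if is_member:
            # Real instance of the concept: success is TP, failure is FN.
            counts["TP" if says_member else "FN"] += 1
        else:
            # Counterexample: success is TN, failure is FP.
            counts["FP" if says_member else "TN"] += 1
    return counts


# Hypothetical labels, for illustration only.
actual = [True, True, True, False, False, False]
predicted = [True, True, False, False, True, False]
counts = confusion_counts(actual, predicted)
print(counts)
```

Keeping the four counts separate, rather than a single accuracy figure, shows whether the learned concept errs mostly on real instances or mostly on counterexamples.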

- 6. Rule Learning: Learning widely used in data mining. Version Space Learning is a search method to learn rules. Decision Trees.

- 7. Decision trees: Knowledge representation formalism. Represent mutually exclusive rules (disjunction). A way of breaking up a data set into classes or categories. Classification rules that determine, for each instance with attribute values, whether it belongs to one or another class. Not incremental.

- 8. Decision trees: leaf nodes (classes); decision nodes (tests on attribute values); from decision nodes, branches grow for each possible outcome of the test. From Cawsey, 1997.

- 9. Decision tree induction: Goal is to correctly classify all example data. Several algorithms induce decision trees: ID3 (Quinlan 1979), CLS, ACLS, ASSISTANT, IND, C4.5. Constructs a decision tree from past data. Attempts to find the simplest tree (not guaranteed because it is based on heuristics).

- 10. ID3 algorithm: From a set of target classes and training data containing objects of more than one class, ID3 uses tests to refine the training data set into subsets that contain objects of only one class each. Choosing the right test is the key.

- 11. How does ID3 choose tests? Information gain or ‘minimum entropy’: maximizing information gain corresponds to minimizing entropy. Predictive features (good indicators of the outcome).

- 12. Choosing tests: Information gain or ‘minimum entropy’: maximizing information gain corresponds to minimizing entropy. Predictive features (good indicators of the outcome).

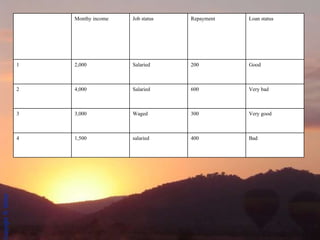
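
The link between information gain and entropy can be made concrete with a small sketch. It uses the standard Shannon entropy and the usual ID3-style gain (entropy before the split minus the weighted entropy after it). The records, attribute names, and helper names below are hypothetical and are not taken from the slides.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Entropy of the target before the test minus weighted entropy after it."""
    base = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in {r[attribute] for r in rows}:
        subset = [r[target] for r in rows if r[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder


# Hypothetical training records, for illustration only.
rows = [
    {"job": "salaried", "income": "high", "loan": "good"},
    {"job": "salaried", "income": "low", "loan": "good"},
    {"job": "waged", "income": "high", "loan": "bad"},
    {"job": "waged", "income": "low", "loan": "bad"},
]

# Pick the test (attribute) with the highest information gain.
best = max(["job", "income"], key=lambda a: information_gain(rows, a, "loan"))
print(best)
```

In this toy data the `job` test splits the records into subsets of a single class each, so its remaining entropy is zero and its gain is maximal, while `income` leaves the classes as mixed as before.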

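- 13. Example records:

  | No. | Monthly income | Job status | Repayment | Loan status |
  |-----|----------------|------------|-----------|-------------|
  | 1   | 2,000          | Salaried   | 200       | Good        |
  | 2   | 4,000          | Salaried   | 600       | Very bad    |
  | 3   | 3,000          | Waged      | 300       | Very good   |
  | 4   | 1,500          | Salaried   | 400       | Bad         |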

- 14. Data mining tasks (ii): Link analysis: rules (association generation), relationships between entities. Deviation detection: how things change over time, trends.

- 15. KDD applications: Fraud detection: telecom (calling cards, cell phones), credit cards, health insurance. Loan approval. Investment analysis. Marketing and sales data analysis: identify potential customers, effectiveness of sales campaign, store layout.

- 16. Text mining The problem starts with a query and the solution is a set of information (e.g., patterns, connections, profiles, trends) contained in several different texts that are potentially relevant to the initial query.

- 17. Text mining applications. IBM Text Navigator: clusters documents by content; each document is annotated by the 2 most frequently used words in the cluster. Concept Extraction (Los Alamos): text analysis of medical records; uses a clustering approach based on trigram representation; documents as vectors, cosine for comparison.
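
The "documents in vectors, cosine for comparison" idea can be sketched as follows. The slide does not say whether the trigrams are over characters or words, so character trigrams are an assumption here. The function names and sample texts are hypothetical and do not describe the Los Alamos system.

```python
from collections import Counter
from math import sqrt


def trigram_vector(text):
    """Count overlapping character trigrams in lower-cased text (assumed representation)."""
    text = text.lower()
    return Counter(text[i:i + 3] for i in range(len(text) - 2))


def cosine(u, v):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = sqrt(sum(c * c for c in u.values())) * sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


# Hypothetical document snippets, for illustration only.
a = trigram_vector("patient reports chest pain")
b = trigram_vector("patient has chest pains")
c = trigram_vector("invoice for office supplies")

print(cosine(a, b), cosine(a, c))
```

A clustering step would then group documents whose pairwise cosine is high; that step is omitted here.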

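- 18. Problem solving methods and reasoning types:

  | Problem solving method | Reasoning type       |
  |------------------------|----------------------|
  | rule-based ES          | deductive reasoning  |
  | case-based reasoning   | analogical reasoning |
  | inductive ML, NN       | inductive reasoning  |
  | algorithms             | search               |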

- 20. Genetic algorithms (i): learn by experimentation; based on human genetics, it originates new solutions; representational restrictions; good to improve quality of other methods, e.g., search algorithms, CBR; evolutionary algorithms (broader).

- 21. Genetic algorithms (ii): requires an evaluation function to guide the process; a population of genomes represents possible solutions; operations are applied over these genomes; operations can be mutation, crossover; operations produce new offspring; an evaluation function tests how fit an offspring is; the fittest will survive to mate again.
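
The loop described on slide 21 can be sketched on a toy problem. Everything specific here is an assumption made for illustration: the bit-string genome, the "count the ones" evaluation function, single-point crossover, the mutation rate, and the rule that the fitter half of the population survives.

```python
import random

GENOME_LENGTH = 20  # hypothetical genome size


def fitness(genome):
    """Evaluation function: number of 1 bits (a toy objective)."""
    return sum(genome)


def crossover(a, b, rng):
    """Single-point crossover of two parent genomes."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(genome, rng, rate=0.02):
    """Flip each bit independently with a small probability."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(generations=40, population_size=30, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # The fittest half survives to mate again.
        parents = population[:population_size // 2]
        offspring = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + offspring
    population.sort(key=fitness, reverse=True)
    return population[0], best_per_generation


best, history = evolve()
print(fitness(best), history[0])
```

Because the surviving parents are carried over unchanged, the best fitness in this sketch never decreases from one generation to the next.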

- 22. Genetic Algorithms ii http://ai.bpa.arizona.edu/~mramsey/ga.html You can change parameters http://www.rennard.org/alife/english/gavgb.html Steven Thompson presented

- 24. The evidence: training vision, ≈ 2nd to 5th week

- 25. The evidence: training vision, ≈ 2nd to 5th week

- 26. The evidence: training vision, ≈ 2nd to 5th week

- 27. The evidence: training vision, ≈ 2nd to 5th week

- 28. NN: model of brains: input, output, neurons, synapses, electric transmissions

- 29. Elements: input nodes, output nodes, links, weights

- 30. Terminology: input and output nodes (or units) are connected by links; each link has a numeric weight; weights store information; networks are trained on training sets (examples) and afterwards tested on test sets to assess the networks’ accuracy; learning/training takes place as weights are updated to reflect the input/output behavior.

- 31. The concept (1 = Yes, 0 = No):

  | 4 legs | fly | lay eggs | class  |
  |--------|-----|----------|--------|
  | 0      | 1   | 1        | bird   |
  | 1      | 0   | 0        | mammal |
  | 1      | 1   | 0        | mammal |

- 32. The concept (1 = Yes, 0 = No): (0, 1, 1) => bird; (1, 0, 0) => mammal; (1, 1, 0) => mammal, for the features 4 legs, fly, lay eggs.

- 33. The concept (1 = Yes, 0 = No): (0, 1, 1) => bird; (1, 0, 0) => mammal; (1, 1, 0) => mammal, with weights 0.5, 0.5, 0.5 on the features 4 legs, fly, lay eggs.

- 34. Weights 0.5, 0.5, 0.5 on 4 legs, fly, lay eggs:
  - (0, 1, 1) => bird: 0*0.5 + 1*0.5 + 1*0.5 = 1
  - (1, 0, 0) => mammal: 1*0.5 + 0*0.5 + 0*0.5 = 0.5
  - (1, 1, 0) => mammal: 1*0.5 + 1*0.5 + 0*0.5 = 1

  Goal is to have weights that recognize different representations of mammals and birds as such.

- 35. Weights 0.5, 0.5, 0.5 on 4 legs, fly, lay eggs:
  - (0, 1, 1) => bird: 0*0.5 + 1*0.5 + 1*0.5 = 1
  - (1, 0, 0) => mammal: 1*0.5 + 0*0.5 + 0*0.5 = 0.5
  - (1, 1, 0) => mammal: 1*0.5 + 1*0.5 + 0*0.5 = 1

  Suppose we want bird to be greater than 0.5 and mammal to be equal to or less than 0.5.

- 36. Weights 0.25, 0.25, 0.5 on 4 legs, fly, lay eggs:
  - (0, 1, 1) => bird: 0*0.25 + 1*0.25 + 1*0.5 = 0.75
  - (1, 0, 0) => mammal: 1*0.25 + 0*0.25 + 0*0.5 = 0.25
  - (1, 1, 0) => mammal: 1*0.25 + 1*0.25 + 0*0.5 = 0.5

  Suppose we want bird to be greater than 0.5 and mammal to be equal to or less than 0.5.
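
The arithmetic on slides 34 to 36 can be checked mechanically. The sketch below encodes the three examples from the slides (features: 4 legs, fly, lay eggs) and the rule "bird if the weighted sum is greater than 0.5, otherwise mammal". The function names are hypothetical.

```python
# (4 legs, fly, lay eggs) -> class, with 1 = Yes and 0 = No, as on the slides.
examples = [((0, 1, 1), "bird"), ((1, 0, 0), "mammal"), ((1, 1, 0), "mammal")]


def weighted_sum(features, weights):
    """Sum of feature values multiplied by their link weights."""
    return sum(f * w for f, w in zip(features, weights))


def classify(features, weights, threshold=0.5):
    """Bird if the weighted sum exceeds the threshold, otherwise mammal."""
    return "bird" if weighted_sum(features, weights) > threshold else "mammal"


def all_correct(weights):
    """True when every example is classified as its stated class."""
    return all(classify(f, weights) == label for f, label in examples)


print(all_correct((0.5, 0.5, 0.5)))    # weights from slides 34 and 35
print(all_correct((0.25, 0.25, 0.5)))  # weights from slide 36
```

With all weights at 0.5 the flying mammal (1, 1, 0) sums to 1 and is misclassified as a bird; the weights 0.25, 0.25, 0.5 bring it down to 0.5, which satisfies the rule.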

- 37. The training: Output = Step(Σ w_ij f_ij), with i = 1, 2, 3 and j = 1, 2, 3. Inputs: 4 legs, flies, eggs (example: 0, 1, 1); outputs: mammal (1), bird (0). Learning takes place as weights are updated to reflect the input/output behavior. Goal: minimize the error between the representation of the expected and the actual outcome. [Figure: grid of 0/1 values]
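
One simple way to update weights so that a step output matches the expected outcome is the classic perceptron learning rule. The slide does not name the update rule used in the lecture, so the rule, the bias term, the learning rate, and the function names below are all assumptions. The examples and the encoding (mammal = 1, bird = 0) follow the slides.

```python
def step(x):
    """Step activation: 1 when the weighted input is positive, else 0."""
    return 1 if x > 0 else 0


def predict(features, weights, bias):
    return step(sum(w * f for w, f in zip(weights, features)) + bias)


def train(examples, learning_rate=0.5, epochs=50):
    """Perceptron rule (assumed): nudge weights by the error on each example."""
    weights = [0.0, 0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for features, target in examples:
            error = target - predict(features, weights, bias)
            if error:
                errors += 1
                weights = [w + learning_rate * error * f
                           for w, f in zip(weights, features)]
                bias += learning_rate * error
        if errors == 0:  # every example already gives the expected outcome
            break
    return weights, bias


# (4 legs, flies, eggs) -> target, with mammal = 1 and bird = 0.
examples = [((0, 1, 1), 0), ((1, 0, 0), 1), ((1, 1, 0), 1)]
weights, bias = train(examples)
print(weights, bias)
```

The three examples are linearly separable, so this rule stops changing the weights once all of them are classified as expected.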

- 38. NN demo…..

- 39. Characteristics: NN implement inductive learning algorithms (through generalization); therefore, they require several training examples to learn. NN do not provide an explanation of why the task was performed the way it was: no explicit knowledge; uses data. Tasks: classification (pattern recognition), clustering, diagnosis, optimization, forecasting (prediction), modeling, reconstruction, routing.

- 40. Where are NN applicable? Where they can form a model from training data alone; when there may be an algorithm, but it is not known or has too many variables; when there are enough examples available; when it is easier to let the network learn from examples; when other inductive learning methods may not be as accurate.

- 41. Applications (i): predict movement of stocks, currencies, etc., from previous data; compare signatures made (e.g., in a bank) with those stored; monitor the state of aircraft engines (by monitoring vibration levels and sound, early warning of engine problems can be given); British Rail has been testing an application to monitor diesel engines.

- 42. Applications (ii): Pronunciation (rules with many exceptions). Handwritten character recognition (a network with 200,000 weights is impossible to train; the final network had 9,760 weights and used 7,300 examples to train and 2,000 to test, with 99% accuracy). Learn brain patterns to control and activate limbs, as in the “Rats control a robot by thought alone” article. Credit assignment.

- 43. CMU Driving: ALVINN learns from human drivers how to steer a vehicle along a single lane on a highway. ALVINN is implemented in two vehicles equipped with computer-controlled steering, acceleration, and braking. Cars can reach 70 mph with ALVINN; programs that consider the whole problem environment reach only 4 mph.

- 44. Why use NN for the driving task? There is no good theory of driving, but it is easy to collect training samples. Training data is obtained with a human* driving the vehicle: 5 min of training, 10 min for the algorithm to run. Driving is continuous and noisy. Almost all features contribute useful information. *Humans are not very good generators of training instances when they behave too regularly without making mistakes.

- 45. The neural network. INPUT: a video camera generates a 30x32 grid of input nodes. OUTPUT: a layer of 30 nodes corresponding to steering directions; the vehicle steers in the direction of the output node with the highest activation.
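
The input/output shape on slide 45 can be sketched as below. This is only an illustration of "30x32 inputs, 30 outputs, steer toward the most active output": the weights are random and untrained, the single linear layer is an assumption (any hidden layer is omitted), and all names are hypothetical, not ALVINN's actual implementation.

```python
import random

ROWS, COLS, OUTPUTS = 30, 32, 30  # 30x32 input grid, 30 steering outputs


def make_weights(rng):
    """One weight per (output node, input pixel); random and untrained here."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(ROWS * COLS)]
            for _ in range(OUTPUTS)]


def output_activations(image, weights):
    """Weighted sum of all pixels for each of the 30 output nodes."""
    pixels = [p for row in image for p in row]
    return [sum(w * p for w, p in zip(unit, pixels)) for unit in weights]


def steering_index(activations):
    """Index of the output node with the highest activation."""
    return max(range(len(activations)), key=activations.__getitem__)


rng = random.Random(0)
weights = make_weights(rng)
# Hypothetical camera frame: a 30x32 grid of pixel intensities in [0, 1).
image = [[rng.random() for _ in range(COLS)] for _ in range(ROWS)]
activations = output_activations(image, weights)
direction = steering_index(activations)
print(direction)
```

Training would adjust `weights` so that the most active output matches the steering direction chosen by the human driver for the same frame.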

- 46. Resources http://www.cs.stir.ac.uk/~lss/NNIntro/InvSlides.html#what http://www.ri.cmu.edu/projects/project_160.html http://www.txtwriter.com/Onscience/Articles/ratrobot.html

Editor's Notes

- #15: What is predictive modeling? Predictive modeling uses demographic, medical and pharmacy claims information to determine the range and intensity of medical problems for a given population of insured persons. This assessment of risk allows health plans, payers and provider groups to plan, evaluate and fund health care management programs more effectively. From: http://www.dxcgrisksmart.com/faq.html

- #20: TELL THE CAT STORY

- #24: TELL THE CAT STORY