Description

When I try to generate an engine file from an ONNX model, the process is terminated with the message `Killed`. This happens when host (CPU) memory runs out. The error only occurs with `--fp16` on an Ampere GPU.

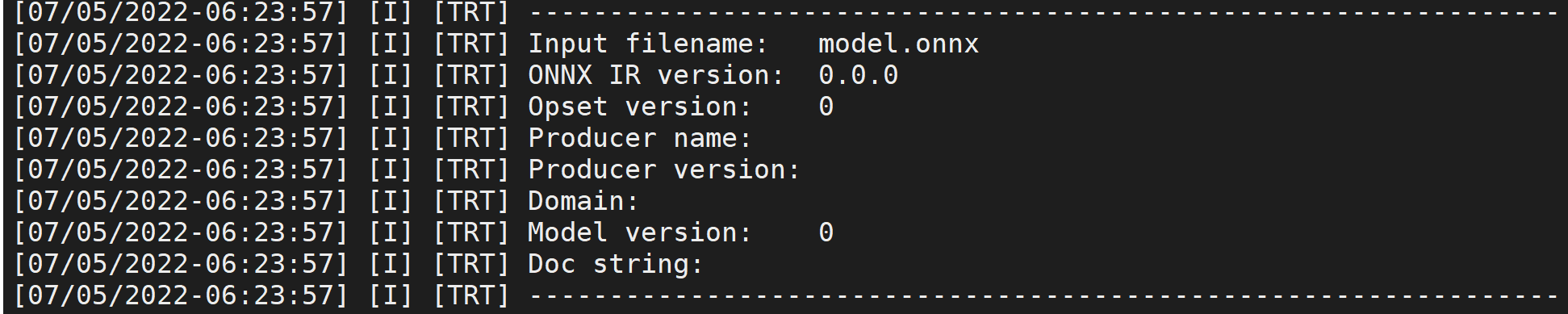
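To check whether `Killed` really comes from memory exhaustion, a small wrapper can report how `trtexec` exited. This is a minimal sketch: the function name `run_and_classify` is hypothetical, and exit status 137 (128 + 9) only shows that the process received SIGKILL. The Linux OOM killer sends SIGKILL, but it is not the only possible source.

```shell
#!/usr/bin/env bash
# Run a command and classify how it ended. A child killed by SIGKILL yields
# exit status 137 (128 + 9), and bash prints "Killed" for it. The OOM killer
# uses SIGKILL, but so can other sources, so confirm with the kernel log.
run_and_classify() {
  local status=0
  "$@" || status=$?   # capture the status without tripping `set -e`
  if [ "$status" -eq 0 ]; then
    echo "ok"
  elif [ "$status" -eq 137 ]; then
    echo "killed by SIGKILL (possibly the OOM killer)"
  else
    echo "failed with exit status $status"
  fi
  return "$status"
}
```

For example, `run_and_classify trtexec --onnx=/models/models.onnx --fp16 --verbose`. If the OOM killer was responsible, `dmesg` on the host typically contains an out-of-memory entry naming the killed process.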

The last lines of the verbose log output:

```
[07/01/2022-05:42:58] [V] [TRT] --------------- Timing Runner: PWN(fc.2.weight + (Unnamed Layer* 404) [Shuffle], PRelu_397) (PointWise)
[07/01/2022-05:42:58] [V] [TRT] Tactic: 128 Time: 0.004172
[07/01/2022-05:42:58] [V] [TRT] Tactic: 256 Time: 0.004424
[07/01/2022-05:42:58] [V] [TRT] Tactic: 512 Time: 0.004096
[07/01/2022-05:42:58] [V] [TRT] Tactic: -32 Time: 0.033792
[07/01/2022-05:42:58] [V] [TRT] Tactic: -64 Time: 0.01856
[07/01/2022-05:42:58] [V] [TRT] Tactic: -128 Time: 0.011264
[07/01/2022-05:42:58] [V] [TRT] Fastest Tactic: 512 Time: 0.004096
[07/01/2022-05:42:58] [V] [TRT] >>>>>>>>>>>>>>> Chose Runner Type: PointWiseV2 Tactic: 1
[07/01/2022-05:42:58] [V] [TRT] *************** Autotuning format combination: Half(256,1) -> Half(256,1) ***************
[07/01/2022-05:42:58] [V] [TRT] --------------- Timing Runner: PWN(fc.2.weight + (Unnamed Layer* 404) [Shuffle], PRelu_397) (PointWiseV2)
[07/01/2022-05:42:58] [V] [TRT] Tactic: 0 Time: 0.00316
[07/01/2022-05:42:58] [V] [TRT] Tactic: 1 Time: 0.003384
[07/01/2022-05:42:59] [V] [TRT] Tactic: 2 Time: 0.003116
[07/01/2022-05:42:59] [V] [TRT] Tactic: 3 Time: 0.00406
[07/01/2022-05:42:59] [V] [TRT] Tactic: 4 Time: 0.003312
[07/01/2022-05:42:59] [V] [TRT] Tactic: 5 Time: 0.003072
[07/01/2022-05:43:00] [V] [TRT] Tactic: 6 Time: 0.004404
[07/01/2022-05:43:00] [V] [TRT] Tactic: 7 Time: 0.004036
[07/01/2022-05:43:00] [V] [TRT] Tactic: 8 Time: 0.003328
[07/01/2022-05:43:00] [V] [TRT] Tactic: 9 Time: 0.003072
[07/01/2022-05:43:01] [V] [TRT] Tactic: 28 Time: 0.003168
[07/01/2022-05:43:01] [V] [TRT] Fastest Tactic: 5 Time: 0.003072
[07/01/2022-05:43:01] [V] [TRT] --------------- Timing Runner: PWN(fc.2.weight + (Unnamed Layer* 404) [Shuffle], PRelu_397) (PointWise)
[07/01/2022-05:43:01] [V] [TRT] Tactic: 128 Time: 0.00684
[07/01/2022-05:43:01] [V] [TRT] Tactic: 256 Time: 0.008576
[07/01/2022-05:43:01] [V] [TRT] Tactic: 512 Time: 0.007624
[07/01/2022-05:43:01] [V] [TRT] Tactic: -32 Time: 0.032864
[07/01/2022-05:43:01] [V] [TRT] Tactic: -64 Time: 0.01842
[07/01/2022-05:43:01] [V] [TRT] Tactic: -128 Time: 0.011392
[07/01/2022-05:43:01] [V] [TRT] Fastest Tactic: 128 Time: 0.00684
[07/01/2022-05:43:01] [V] [TRT] >>>>>>>>>>>>>>> Chose Runner Type: PointWiseV2 Tactic: 5
Killed
```
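Since the log simply stops, it can help to extract the last layer TensorRT was timing. The helper below is a sketch that assumes the verbose output was redirected to a file and that timing lines keep the `Timing Runner: <layer> (<runner>)` shape seen above. The function name `last_timed_layer` and the file name `build.log` are hypothetical.

```shell
#!/usr/bin/env bash
# Print the layer named in the last "Timing Runner:" line of a verbose trtexec
# log. This is only the last layer being timed when output stopped; it does
# not prove that this layer caused the memory growth.
last_timed_layer() {
  grep 'Timing Runner:' "$1" | tail -n 1 \
    | sed -E 's/.*Timing Runner: (.*) \([A-Za-z0-9]+\)$/\1/'
}
```

For example, `trtexec --onnx=/models/models.onnx --fp16 --verbose > build.log 2>&1; last_timed_layer build.log`. For the log above it would print `PWN(fc.2.weight + (Unnamed Layer* 404) [Shuffle], PRelu_397)`.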

Environment

- TensorRT Version: 8.2.5.1
- GPU Type: NVIDIA GeForce RTX 3070
- NVIDIA Driver Version: 510.73.05
- CUDA Version: 11.6
- Baremetal or Container (if container, which image + tag): nvcr.io/nvidia/tensorrt:22.05-py3

Relevant Files

Steps To Reproduce

- Start the container: `docker run -it --rm --gpus=all -v $(pwd)/models:/models nvcr.io/nvidia/tensorrt:22.05-py3 /bin/bash`
- Inside the container, build the engine: `trtexec --onnx=/models/models.onnx --fp16 --verbose`
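To confirm that only the `--fp16` build runs out of memory, the same model can be built twice inside the container, once without and once with `--fp16`. This sketch reuses only the `trtexec` flags from the steps above; `build_engine`, `compare_precisions`, the `MODEL` variable and the `build_<label>.log` file names are hypothetical choices, not part of `trtexec`.

```shell
#!/usr/bin/env bash
# Build the same ONNX model in FP32 and then with --fp16, writing each verbose
# log to its own file and printing each exit status (137 means SIGKILL).
# MODEL defaults to the path from the steps above; override it as needed.
MODEL="${MODEL:-/models/models.onnx}"

build_engine() {
  # $1 is a label for the log file; remaining arguments are extra trtexec flags.
  local label="$1"
  shift
  local status=0
  trtexec --onnx="$MODEL" --verbose "$@" > "build_${label}.log" 2>&1 || status=$?
  echo "${label}: exit status ${status}"
}

compare_precisions() {
  build_engine fp32
  build_engine fp16 --fp16
}
```

Calling `compare_precisions` prints one line per build. An `fp16` status of 137 alongside an `fp32` status of 0 would match the behaviour described in this issue.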