
- 2. Lecture Notes in Computer Science 3624 Commenced Publication in 1973 Founding and Former Series Editors: Gerhard Goos, Juris Hartmanis, and Jan van Leeuwen Editorial Board David Hutchison Lancaster University, UK Takeo Kanade Carnegie Mellon University, Pittsburgh, PA, USA Josef Kittler University of Surrey, Guildford, UK Jon M. Kleinberg Cornell University, Ithaca, NY, USA Friedemann Mattern ETH Zurich, Switzerland John C. Mitchell Stanford University, CA, USA Moni Naor Weizmann Institute of Science, Rehovot, Israel Oscar Nierstrasz University of Bern, Switzerland C. Pandu Rangan Indian Institute of Technology, Madras, India Bernhard Steffen University of Dortmund, Germany Madhu Sudan Massachusetts Institute of Technology, MA, USA Demetri Terzopoulos New York University, NY, USA Doug Tygar University of California, Berkeley, CA, USA Moshe Y. Vardi Rice University, Houston, TX, USA Gerhard Weikum Max-Planck Institute of Computer Science, Saarbruecken, Germany

- 3. Chandra Chekuri Klaus Jansen José D.P. Rolim Luca Trevisan (Eds.) Approximation, Randomization and Combinatorial Optimization Algorithms and Techniques 8th International Workshop on Approximation Algorithms for Combinatorial Optimization Problems, APPROX 2005 and 9th International Workshop on Randomization and Computation, RANDOM 2005 Berkeley, CA, USA, August 22-24, 2005 Proceedings 1 3

- 4. Volume Editors

Chandra Chekuri, Lucent Bell Labs, 600 Mountain Avenue, Murray Hill, NJ 07974, USA

Klaus Jansen, University of Kiel, Institute for Computer Science, Olshausenstr. 40, 24098 Kiel, Germany

José D.P. Rolim, Université de Genève, Centre Universitaire d'Informatique, 24, Rue Général Dufour, 1211 Genève 4, Suisse

Luca Trevisan, University of California, Computer Science Department, 679 Soda Hall, Berkeley, CA 94720-1776, USA

Library of Congress Control Number: 2005930720. CR Subject Classification (1998): F.2, G.2, G.1. ISSN 0302-9743. ISBN-10 3-540-28239-4 Springer Berlin Heidelberg New York. ISBN-13 978-3-540-28239-6 Springer Berlin Heidelberg New York.

This work is subject to copyright. All rights are reserved, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, re-use of illustrations, recitation, broadcasting, reproduction on microfilms or in any other way, and storage in data banks. Duplication of this publication or parts thereof is permitted only under the provisions of the German Copyright Law of September 9, 1965, in its current version, and permission for use must always be obtained from Springer. Violations are liable to prosecution under the German Copyright Law.

Springer is a part of Springer Science+Business Media, springeronline.com. © Springer-Verlag Berlin Heidelberg 2005. Printed in Germany. Typesetting: Camera-ready by author, data conversion by Olgun Computergrafik. Printed on acid-free paper. SPIN: 11538462 06/3142 5 4 3 2 1 0

- 5. Preface

This volume contains the papers presented at the 8th International Workshop on Approximation Algorithms for Combinatorial Optimization Problems (APPROX 2005) and the 9th International Workshop on Randomization and Computation (RANDOM 2005), which took place concurrently at the University of California in Berkeley, on August 22–24, 2005. APPROX focuses on algorithmic and complexity issues surrounding the development of efficient approximate solutions to computationally hard problems, and APPROX 2005 was the eighth in the series after Aalborg (1998), Berkeley (1999), Saarbrücken (2000), Berkeley (2001), Rome (2002), Princeton (2003), and Cambridge (2004). RANDOM is concerned with applications of randomness to computational and combinatorial problems, and RANDOM 2005 was the ninth workshop in the series following Bologna (1997), Barcelona (1998), Berkeley (1999), Geneva (2000), Berkeley (2001), Harvard (2002), Princeton (2003), and Cambridge (2004).

Topics of interest for APPROX and RANDOM are: design and analysis of approximation algorithms, hardness of approximation, small space and data streaming algorithms, sub-linear time algorithms, embeddings and metric space methods, mathematical programming methods, coloring and partitioning, cuts and connectivity, geometric problems, game theory and applications, network design and routing, packing and covering, scheduling, design and analysis of randomized algorithms, randomized complexity theory, pseudorandomness and derandomization, random combinatorial structures, random walks/Markov chains, expander graphs and randomness extractors, probabilistic proof systems, random projections and embeddings, error-correcting codes, average-case analysis, property testing, computational learning theory, and other applications of approximation and randomness.

The volume contains 20 contributed papers selected by the APPROX Program Committee out of 50 submissions, and 21 contributed papers selected by the RANDOM Program Committee out of 51 submissions. We would like to thank all of the authors who submitted papers, the members of the program committees

- 6. VI Preface APPROX 2005 Matthew Andrews, Lucent Bell Labs Avrim Blum, CMU Moses Charikar, Princeton University Chandra Chekuri, Lucent Bell Labs (Chair) Julia Chuzhoy, MIT Uriel Feige, Microsoft Research and Weizmann Institute Naveen Garg, IIT Delhi Howard Karloff, AT&T Labs – Research Stavros Kolliopoulos, University of Athens Adam Meyerson, UCLA Seffi Naor, Technion Santosh Vempala, MIT RANDOM 2005 Dorit Aharonov, Hebrew University Boaz Barak, IAS and Princeton University Funda Ergun, Simon Fraser University Johan Håstad, KTH Stockholm Chi-Jen Lu, Academia Sinica Milena Mihail, Georgia Institute of Technology Robert Krauthgamer, IBM Almaden Dana Randall, Georgia Institute of Technology Amin Shokrollahi, EPF Lausanne Angelika Steger, ETH Zurich Luca Trevisan, UC Berkeley (Chair) and the external subreferees Scott Aaronson, Dimitris Achlioptas, Mansoor Alicherry, Andris Ambainis, Aaron Archer, Nikhil Bansal, Tugkan Batu, Gerard Ben Arous, Michael Ben-Or, Eli Ben-Sasson, Petra Berenbrink, Randeep Bhatia, Nayantara Bhatnagar, Niv Buchbinder, Shuchi Chawla, Joseph Cheriyan, Roee Engelberg, Lance Fortnow, Tom Friedetzky, Mikael Goldmann, Daniel Gottesman, Sam Greenberg, Anupam Gupta, Venkat Guruswami, Tom Hayes, Monika Henzinger, Danny Hermelin, Nicole Immorlica, Piotr Indyk, Adam Kalai, Julia Kempe, Claire Kenyon, Jordan Kerenidis, Sanjeev Khanna, Amit Kumar, Ravi Kumar, Nissan Lev-Tov, Liane Lewin, Laszlo Lovasz, Elitza Maneva, Michael Mitzenmacher, Cris Moore, Michele Mosca, Kamesh Munagala, Noam Nisan, Ryan O’Donnell, Martin Pal, Vinayaka Pandit, David Peleg, Yuval Rabani, Dror Rawitz, Danny Raz, Adi Rosen, Ronitt Rubinfeld, Cenk Sahinalp, Alex Samorodnitsky, Gabi Scalosub, Leonard Schulman, Roy Schwartz, Pranab Sen, Mehrdad Shahshahani, Amir Shpilka, Anastasios Sidiropoulos, Greg Sorkin, Adam Smith, Ronen Shaltiel, Maxim Sviridenko, Amnon-Ta-Shma, Emre Telatar, Alex Vardy, Eric Vigoda, Da-Wei Wang, Ronald de Wolf, David Woodruff, Hsin-Lung Wu, and Lisa Zhang.

- 7. We gratefully acknowledge the support from the Lucent Bell Labs of Murray Hill, the Computer Science Division of the University of California at Berkeley, the Institute of Computer Science of the Christian-Albrechts-Universität zu Kiel and the Department of Computer Science of the University of Geneva. We also thank Ute Iaquinto and Parvaneh Karimi Massouleh for their help.

August 2005

Chandra Chekuri and Luca Trevisan, Program Chairs

Klaus Jansen and José D.P. Rolim, Workshop Chairs

- 8. Table of Contents

  Contributed Talks of APPROX

  - The Network as a Storage Device: Dynamic Routing with Bounded Buffers (Stanislav Angelov, Sanjeev Khanna, and Keshav Kunal)
  - Rounding Two and Three Dimensional Solutions of the SDP Relaxation of MAX CUT (Adi Avidor and Uri Zwick)
  - What Would Edmonds Do? Augmenting Paths and Witnesses for Degree-Bounded MSTs (Kamalika Chaudhuri, Satish Rao, Samantha Riesenfeld, and Kunal Talwar)
  - A Rounding Algorithm for Approximating Minimum Manhattan Networks (Victor Chepoi, Karim Nouioua, and Yann Vaxès)
  - Packing Element-Disjoint Steiner Trees (Joseph Cheriyan and Mohammad R. Salavatipour)
  - Approximating the Bandwidth of Caterpillars (Uriel Feige and Kunal Talwar)
  - Where's the Winner? Max-Finding and Sorting with Metric Costs (Anupam Gupta and Amit Kumar)
  - What About Wednesday? Approximation Algorithms for Multistage Stochastic Optimization (Anupam Gupta, Martin Pál, Ramamoorthi Ravi, and Amitabh Sinha)
  - The Complexity of Making Unique Choices: Approximating 1-in-k SAT (Venkatesan Guruswami and Luca Trevisan)
  - Approximating the Distortion (Alexander Hall and Christos Papadimitriou)
  - Approximating the Best-Fit Tree Under Lp Norms (Boulos Harb, Sampath Kannan, and Andrew McGregor)
  - Beating a Random Assignment (Gustav Hast)

- 9. Table of Contents (continued)

  - Scheduling on Unrelated Machines Under Tree-Like Precedence Constraints (V.S. Anil Kumar, Madhav V. Marathe, Srinivasan Parthasarathy, and Aravind Srinivasan)
  - Approximation Algorithms for Network Design and Facility Location with Service Capacities (Jens Maßberg and Jens Vygen)
  - Finding Graph Matchings in Data Streams (Andrew McGregor)
  - A Primal-Dual Approximation Algorithm for Partial Vertex Cover: Making Educated Guesses (Julián Mestre)
  - Efficient Approximation of Convex Recolorings (Shlomo Moran and Sagi Snir)
  - Approximation Algorithms for Requirement Cut on Graphs (Viswanath Nagarajan and Ramamoorthi Ravi)
  - Approximation Schemes for Node-Weighted Geometric Steiner Tree Problems (Jan Remy and Angelika Steger)
  - Towards Optimal Integrality Gaps for Hypergraph Vertex Cover in the Lovász-Schrijver Hierarchy (Iannis Tourlakis)

  Contributed Talks of RANDOM

  - Bounds for Error Reduction with Few Quantum Queries (Sourav Chakraborty, Jaikumar Radhakrishnan, and Nandakumar Raghunathan)
  - Sampling Bounds for Stochastic Optimization (Moses Charikar, Chandra Chekuri, and Martin Pál)
  - An Improved Analysis of Mergers (Zeev Dvir and Amir Shpilka)
  - Finding a Maximum Independent Set in a Sparse Random Graph (Uriel Feige and Eran Ofek)
  - On the Error Parameter of Dispersers (Ronen Gradwohl, Guy Kindler, Omer Reingold, and Amnon Ta-Shma)
  - Tolerant Locally Testable Codes (Venkatesan Guruswami and Atri Rudra)

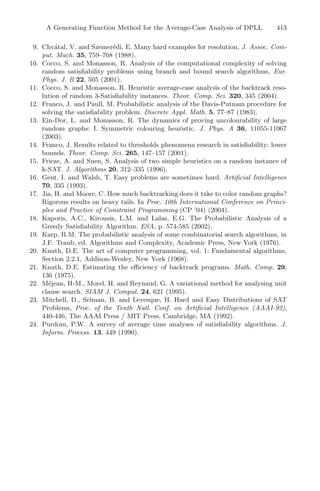

- 10. Table of Contents (continued)

  - A Lower Bound on List Size for List Decoding (Venkatesan Guruswami and Salil Vadhan)
  - A Lower Bound for Distribution-Free Monotonicity Testing (Shirley Halevy and Eyal Kushilevitz)
  - On Learning Random DNF Formulas Under the Uniform Distribution (Jeffrey C. Jackson and Rocco A. Servedio)
  - Derandomized Constructions of k-Wise (Almost) Independent Permutations (Eyal Kaplan, Moni Naor, and Omer Reingold)
  - Testing Periodicity (Oded Lachish and Ilan Newman)
  - The Parity Problem in the Presence of Noise, Decoding Random Linear Codes, and the Subset Sum Problem (Vadim Lyubashevsky)
  - The Online Clique Avoidance Game on Random Graphs (Martin Marciniszyn, Reto Spöhel, and Angelika Steger)
  - A Generating Function Method for the Average-Case Analysis of DPLL (Rémi Monasson)
  - A Continuous-Discontinuous Second-Order Transition in the Satisfiability of Random Horn-SAT Formulas (Cristopher Moore, Gabriel Istrate, Demetrios Demopoulos, and Moshe Y. Vardi)
  - Mixing Points on a Circle (Dana Randall and Peter Winkler)
  - Derandomized Squaring of Graphs (Eyal Rozenman and Salil Vadhan)
  - Tight Bounds for String Reconstruction Using Substring Queries (Dekel Tsur)
  - Reconstructive Dispersers and Hitting Set Generators (Christopher Umans)
  - The Tensor Product of Two Codes Is Not Necessarily Robustly Testable (Paul Valiant)
  - Fractional Decompositions of Dense Hypergraphs (Raphael Yuster)

  Author Index

- 11. The Network as a Storage Device: Dynamic Routing with Bounded Buffers

Stanislav Angelov, Sanjeev Khanna, and Keshav Kunal

University of Pennsylvania, Philadelphia, PA 19104, USA, {angelov,sanjeev,kkunal}@cis.upenn.edu

Abstract. We study dynamic routing in store-and-forward packet networks where each network link has bounded buffer capacity for receiving incoming packets and is capable of transmitting a fixed number of packets per unit of time. At any moment in time, packets are injected at various network nodes, with each packet specifying its destination node. The goal is to maximize the throughput, defined as the number of packets delivered to their destinations. In this paper, we make some progress in understanding what is achievable on various network topologies. For line networks, Nearest-to-Go (NTG), a natural greedy algorithm, was shown to be $O(n^{2/3})$-competitive by Aiello et al. [1]. We show that NTG is $\tilde{O}(\sqrt{n})$-competitive, essentially matching the lower bound shown in [1] for any greedy algorithm. We show that if we allow the online routing algorithm to make centralized decisions, there is indeed a randomized $\mathrm{polylog}(n)$-competitive algorithm for line networks as well as for rooted tree networks, where each packet is destined for the root of the tree. For grid graphs, we show that NTG has a performance ratio of $\tilde{\Theta}(n^{2/3})$, while no greedy algorithm can achieve a ratio better than $\Omega(\sqrt{n})$. Finally, for an arbitrary network with $m$ edges, we show that NTG is $\tilde{\Theta}(m)$-competitive, improving upon an earlier bound of $O(mn)$ [1].

1 Introduction

The problem of dynamically routing packets is central to traffic management on large-scale data networks. Packet data networks typically employ store-and-forward routing, where network links (or routers) store incoming packets and schedule them to be forwarded in a suitable manner. The Internet is a well-known example of such a network.

Supported in part by NSF Career Award CCR-0093117, NSF Award ITR 0205456 and NIGMS Award 1-P20-GM-6912-1.

Supported in part by an NSF Career Award CCR-0093117, NSF Award CCF-0429836, and a US-Israel Binational Science Foundation Grant.

Supported in part by an NSF Career Award CCR-0093117 and NSF Award CCF-0429836.

C. Chekuri et al. (Eds.): APPROX and RANDOM 2005, LNCS 3624, pp. 1–13, 2005. © Springer-Verlag Berlin Heidelberg 2005

In this paper, we consider routing algorithms for store-and-forward networks in a dynamic setting where packets continuously arrive in an arbitrary manner over a period of time and each packet specifies its

- 12. destination. The goal is to maximize the throughput, defined as the number of packets that are delivered to their destinations. This model, known as the Competitive Network Throughput (CNT) model, was introduced by Aiello et al. [1]. The model allows for both adaptive and non-adaptive routing of packets, where in the latter case each packet also specifies a path to its destination node. Note that there is no distinction between the two settings on line and tree networks.

Aiello et al. analyzed greedy algorithms, which are characterized as ones that accept and forward packets whenever possible. For the non-adaptive setting, they showed that Nearest-to-Go (NTG), a natural greedy algorithm that favors packets whose remaining distance is the shortest, is $O(mn)$-competitive¹ on any network with $n$ nodes and $m$ edges. On line networks, NTG was shown to be $O(n^{2/3})$-competitive provided each link has buffer capacity at least 2.

Our Results. Designing routing algorithms in the CNT model is challenging since buffer space is limited at any individual node, and a good algorithm should use all nodes to buffer packets and not just the nodes where packets are injected. It is like viewing the entire network as a storage device where new information is injected at locations chosen by the adversary. Regions in the network with a high injection rate need to continuously move packets to intermediate network regions with low activity. However, if many nodes simultaneously send packets to the same region, the resulting congestion would lead to buffer overflows. Worse still, the adversary may suddenly raise the packet injection rate in the region of low activity. In general, an adversary can employ different attack strategies in different parts of the network. How should a routing algorithm coordinate the movement of packets in the presence of buffer constraints?
This turns out to be a difficult question even on simple networks such as lines and trees. In this paper, we continue the thread of research started in [1]. Throughout the remainder of this paper, we will assume that all network links have a uniform buffer size $B$ and bandwidth 1. We obtain the following results:

Line Networks: For line networks with $B > 1$, we show that NTG is $\tilde{O}(\sqrt{n})$-competitive, essentially matching an $\Omega(\sqrt{n})$ lower bound of [1]. A natural question is whether non-greedy algorithms can perform better. For $B = 1$, we show that any deterministic algorithm is $\Omega(n)$-competitive, strengthening a result of [1], and give a randomized $\tilde{O}(\sqrt{n})$-competitive algorithm. For $B > 1$, however, no super-constant lower bounds are known, leaving a large gap between known lower bounds and what is achievable by greedy algorithms. We show that centralized decisions can improve performance exponentially by designing a randomized $O(\log^3 n)$-competitive algorithm, referred to as Merge and Deliver.

¹ The exact bound shown in [1] is $O(mD)$, where $D$ is the maximum length of any given path in the network. We state here the worst-case bound with $D = \Omega(n)$.

Tree Networks: For tree networks of height $h$, it was shown in [1] that any greedy algorithm has a competitive ratio of $\Omega(n/h)$. Building on the ideas used

- 13. in the line algorithm, we give an $O(\log^2 n)$-competitive algorithm when all packets are destined for the root. Such tree networks are motivated by the work on buffer overflows of merging streams of packets by Kesselman et al. [2] and the work on information gathering by Kothapalli and Scheideler [3] and Azar and Zachut [4]. The result extends to a randomized $O(h \log^2 n)$-competitive algorithm when packet destinations are arbitrary.

Grid Networks: For the adaptive setting in grid networks, we show that NTG with one-bend routing is $\tilde{\Theta}(n^{2/3})$-competitive. We establish a lower bound of $\Omega(\sqrt{n})$ on the competitive ratio for greedy algorithms with shortest path routing.

General Networks: Finally, for arbitrary network topologies, we show that any greedy algorithm is $\Omega(m)$-competitive and prove that NTG is, in fact, $\tilde{\Theta}(m)$-competitive. These results hold for both adaptive and non-adaptive settings, where NTG routes on a shortest path in the adaptive case.

Table 1. Summary of results; the bounds obtained in this paper are in boldface.

| Algorithm | Line | Tree | Grid | General |
|---|---|---|---|---|
| Greedy | $\Omega(\sqrt{n})$ [1] | $\Omega(n)$ [1] | **$\Omega(\sqrt{n})$** | **$\Omega(m)$** |
| NTG | **$\tilde{O}(\sqrt{n})$** | **$\tilde{O}(n)$** | **$\tilde{\Theta}(n^{2/3})$** | **$\tilde{O}(m)$** |
| Previous bounds | $O(n^{2/3})$ [1] | $O(nh)$ [1] | - | $O(mn)$ [1] |
| Merge and Deliver (MD) | **$O(\log^3 n)$** | **$O(h \log^2 n)$** | - | - |

Related Work. Dynamic store-and-forward routing networks have been studied extensively. An excellent survey of packet drop policies in communication networks can be found in [5]. Much of the earlier work has focused on the issue of stability, with packets being injected in either a probabilistic or an adversarial manner. In stability analysis the goal is to understand how the buffer size at each link needs to grow as a function of the packet injection rate so that packets are never dropped. A stable protocol is one where the maximum buffer size does not grow with time. For work in the probabilistic setting, see [6–10].
Adversarial queuing theory, introduced by Borodin et al. [11], has also been used to study the stability of protocols, and it has been shown in [11, 12] that certain greedy algorithms are unstable, that is, they require unbounded buffer sizes. In particular, NTG is unstable.

The idea of using the entire network to store packets effectively has been used in [13], but in their model packets cannot be buffered while in transit and the performance measure is the time required to deliver all packets. Moreover, packets never get dropped because there is no limit on the number of packets which can be stored at their respective source nodes.

Throughput competitiveness was highlighted as a network performance measure by Awerbuch et al. in [14]. They used adversarial traffic to analyze store-and-forward

- 14. routing algorithms in [15], but they compared their throughput to an adversary restricted to a certain class of strategies and with smaller buffers. Kesselman et al. [2] study the throughput competitiveness of work-conserving algorithms on line and tree networks when the adversary is a work-conserving algorithm too. Work-conserving algorithms always forward a packet if possible but, unlike greedy algorithms, they need not accept packets when there is space in the buffer. However, they do not make assumptions about uniform link bandwidths or buffer sizes as in [1] and our model. They also consider the case when packets have different weights. Non-preemptive policies for packets with different values but with unit-sized buffers have been analyzed in [16, 17].

In a recent parallel work, Azar and Zachut [4] also obtain centralized algorithms with $\mathrm{polylog}(n)$ competitive ratios on lines. They first obtain an $O(\log n)$-competitive deterministic algorithm for the special case when all packets have the same destination (which is termed information gathering) and then show that it can be extended to a randomized algorithm with an $O(\log^2 n)$ competitive ratio for the general case. Their result can be extended to get an $O(h \log n)$ competitive ratio on trees. The information gathering problem is similar to our notion of balanced instances (see Section 2.3), though their techniques are very different from ours: they construct an online reduction from the fractional buffers packet routing with bounded delay problem to fractional information gathering, and the former is solved by an extension of the work of Awerbuch et al. [14] for the discrete version. The fractional algorithm for information gathering is then transformed to a discrete one.

2 Preliminaries

2.1 Model and Problem Statement

We model the network as a directed graph on $n$ nodes and $m$ links.
A node has at most $I$ traffic ports where new packets can be injected, at most one at each port. Each link has an output port at its tail with a buffer of capacity $B > 0$, and an input port at its head that can store 1 packet. We assume a uniform buffer size $B$ at each link and the bandwidth of each link to be 1. Time is synchronous and each time step consists of forwarding and switching sub-steps. During the forwarding phase, each link selects at most one packet from its output buffer according to an output scheduling policy and forwards it to its input buffer. During the switching phase, a node clears all packets from its traffic ports and from the input ports of its incoming link(s). It delivers packets if the node is their destination, or assigns them to the output port of the outgoing link on their respective paths. When more than $B$ packets are assigned to a link's output buffer, packets are discarded based on a contention-resolution policy. We consider preemptive contention-resolution policies that can replace packets already stored at the buffer with new packets. A routing protocol specifies the output scheduling and contention-resolution policy. We are interested in online policies, which make decisions with no knowledge of future packet arrivals.

Each injected packet comes with a specified destination. We assume that the destination is different from the source; otherwise the packet is routed optimally

- 15. by any algorithm and does not interfere with other packets. The goal of the routing algorithm is to maximize throughput, that is, the total number of packets delivered by it. We distinguish between two types of algorithms, namely centralized and distributed. A centralized algorithm makes coordinated decisions at each node taking into account the state of the entire network, while a distributed algorithm requires that each node make its decisions based on local information only. Distributed algorithms are of great practical interest for large networks. Centralized algorithms, on the other hand, give us insight into the inherent complexity of the problem due to the online nature of the input.

2.2 Useful Background Results and Definitions

The following lemma, which gives an upper bound on the packets that can be absorbed over a time interval, will be a recurrent idea while analyzing algorithms.

Lemma 1. [1] In a network with $m$ links, the number of packets that can be delivered and buffered in a time interval of length $T$ units by any algorithm is $O(mT/d + mB)$, where $d > 0$ is a lower bound on the number of links in the shortest path to the destination for each injected packet.

The proof bounds the available bandwidth on all links and compares it against the minimum bandwidth required to deliver a packet injected during the time interval. The $O(mB)$ term accounts for packets buffered at the beginning and the end of the interval, which can be arbitrarily close to their destination.

An important class of distributed algorithms is greedy algorithms, where each link always accepts an incoming packet if there is available buffer space and always forwards a packet if its buffer is non-empty. Based on how contention is resolved when receiving and forwarding packets, we obtain different algorithms.
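The counting behind Lemma 1 can be spelled out as a short worked bound. This is a sketch of the argument described above, not the proof from [1]; the symbols $N_{\mathrm{new}}$ (packets injected during the interval and delivered within it), $N_{\mathrm{start}}$ (packets already stored in the network when the interval begins) and $N_{\mathrm{end}}$ (packets injected during the interval and still stored when it ends) are our own notation, and the constants are not optimized.

```latex
\begin{align*}
\text{total link capacity over the interval} &= m \cdot T \cdot 1 = mT, \\
d \cdot N_{\mathrm{new}} \le mT
  \quad&\Longrightarrow\quad N_{\mathrm{new}} \le \frac{mT}{d}, \\
N_{\mathrm{start}},\; N_{\mathrm{end}} &\le m(B+1)
  \qquad \text{(each link stores at most $B$ packets plus one at its input port)}, \\
N_{\mathrm{new}} + N_{\mathrm{start}} + N_{\mathrm{end}}
  &\le \frac{mT}{d} + 2m(B+1) = O\!\left(\frac{mT}{d} + mB\right).
\end{align*}
```

The second line uses the fact that every packet injected during the interval must cross at least $d$ links, each crossing consuming one unit of link capacity.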
Nearest-To-Go (NTG) is a natural greedy algorithm which always selects a shortest path to route a packet and prefers a packet that has the shortest remaining distance to travel, both when choosing packets to accept and when choosing packets to forward.

Line and tree networks, where there is a unique path between every source and destination pair, are of special interest to us. A line network on $n$ nodes is a directed path with nodes labeled $1, 2, \ldots, n$ and a link from node $i$ to $i + 1$, for $i \in [1, n)$. A tree network is a rooted tree with links directed towards the root. Note that there is a one-to-one correspondence between links and nodes (for all but one node) on lines and trees. For simplicity, whenever we refer to a node's buffer, we mean the output buffer of its unique outgoing link. A simple useful property of any greedy algorithm is as follows:

Lemma 2. [1] If at some time $t$, a greedy algorithm on a line network has buffered $k \le nB$ packets, then it delivers at least $k$ packets by time $t + (n - 1)B$.

At times it will be useful to geometrically group packets into classes in the following manner. A packet belongs to class $j$ if the length of the path on which it is routed by the optimal algorithm is in the range $[2^j, 2^{j+1})$. In the case when paths are unique or specified, the class of a packet can be determined exactly.
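To make the definitions above concrete, the following is a minimal sketch of NTG on a line network together with the packet-class computation. It is an illustration under stated assumptions rather than the authors' implementation: the function names, the injection format (one list of `(source, destination)` pairs per time step), the default number of extra drain steps, and the choice to ignore the per-node limit of $I$ traffic ports are all ours. On a line, a packet's remaining distance at node `v` is `dest - v`, so "nearest to go" means the smallest destination in the buffer.

```python
def packet_class(path_length):
    """Return j such that 2**j <= path_length < 2**(j + 1)."""
    if path_length < 1:
        raise ValueError("path length must be at least 1")
    return path_length.bit_length() - 1


def simulate_ntg_line(n, B, injections, drain_steps=None):
    """Simulate NTG on the line 1 -> 2 -> ... -> n with buffer size B.

    injections[t] lists the (source, dest) pairs injected at step t,
    with 1 <= source < dest <= n. Returns (delivered, dropped).
    Hypothetical helper: the I-port limit of the model is not enforced.
    """
    if drain_steps is None:
        drain_steps = (n - 1) * (B + 1)  # assumption: extra quiet steps
    buffers = {v: [] for v in range(1, n)}  # output buffer of link v -> v+1
    delivered = dropped = 0
    schedule = list(injections) + [[] for _ in range(drain_steps)]
    for arrivals in schedule:
        # Forwarding sub-step: each link sends its nearest-to-go packet.
        incoming = {v: [] for v in range(1, n + 1)}
        for v in range(1, n):
            if buffers[v]:
                nearest = min(buffers[v])
                buffers[v].remove(nearest)
                incoming[v + 1].append(nearest)
        # Newly injected packets wait at the traffic ports of their source.
        for src, dest in arrivals:
            if not 1 <= src < dest <= n:
                raise ValueError("need 1 <= source < dest <= n")
            incoming[src].append(dest)
        # Switching sub-step: deliver, or move into the output buffer.
        for v in range(1, n + 1):
            for dest in incoming[v]:
                if dest == v:
                    delivered += 1
                else:
                    buffers[v].append(dest)
            if v < n and len(buffers[v]) > B:
                # Preemptive contention resolution: keep the B nearest.
                buffers[v].sort()
                dropped += len(buffers[v]) - B
                del buffers[v][B:]
    return delivered, dropped
```

For instance, with `n = 4` and `B = 1`, injecting packets `(1, 4)` and `(1, 2)` at the same step forces node 1 to keep only the packet bound for node 2; with `B = 2` both packets fit and are eventually delivered.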

- 16. 2.3 Balanced Instances for Line Networks

At each time step, a node makes two decisions: which packets to accept (and which to drop if the buffer overflows) and which packets to forward. When all packets have the same destination, the choice essentially comes down to whether a node should forward a packet or not. Motivated by this, we define the notion of a balanced instance, so that an algorithm can focus only on deciding when to forward packets. In a balanced instance, each released packet travels a distance of $\Theta(n)$. We establish a theorem that shows that, with a logarithmic loss in competitive ratio, any algorithm on balanced instances can be converted to one on arbitrary instances. Azar and Zachut [4] prove a similar theorem that it suffices to do so for information gathering.

Theorem 1. An $\alpha$-competitive algorithm for balanced instances gives an $O(\alpha \log n)$-competitive randomized algorithm for any instance on a line network.

Proof. It suffices to randomly partition the line into disjoint networks of length $\ell$ each such that (i) the online algorithm only accepts packets whose origin and destination are contained inside one of the smaller networks, (ii) each accepted packet travels a distance of $\Omega(\ell)$, and (iii) an $\Omega(1/\log n)$-fraction of the packets routed by the optimal algorithm are expected to satisfy conditions (i) and (ii). We first pick a random integer $i \in [0, \log n)$. We next look at three distinct ways to partition the line network into intervals of length $3d$ each, starting from the node with index $1$, $d + 1$, or $2d + 1$, where $d = 2^i$. The online algorithm chooses one of the three partitionings at random and only routes class $i$ packets whose end-points lie completely inside an interval. It is not hard to see that the process decomposes the problem into line routing instances satisfying (iii). The above partitioning can be done by releasing $O(1)$ packets initially as a part of the network setup process.
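The random partitioning in the proof of Theorem 1 can be sketched as follows. This is an illustrative sketch, not the authors' code: the function names are hypothetical, and we assume that the nodes preceding the chosen starting index form one shorter interval of their own, a detail the proof does not spell out.

```python
import random


def sample_partition(n, rng):
    """Pick a random class i and one of the three starting offsets."""
    if n < 2:
        raise ValueError("a line network needs at least 2 nodes")
    num_classes = (n - 1).bit_length()  # classes 0 .. floor(log2(n - 1))
    i = rng.randrange(num_classes)
    d = 2 ** i
    offset = rng.choice([1, d + 1, 2 * d + 1])
    return i, offset


def interval_index(node, offset, d):
    """Index of the length-3d interval containing node.

    Nodes before offset get index -1 (assumed to be one short interval).
    """
    return (node - offset) // (3 * d)


def accepts(src, dst, i, offset):
    """True if the packet is of class i and lies inside a single interval."""
    d = 2 ** i
    if not d <= dst - src < 2 * d:
        return False  # not a class-i packet
    return interval_index(src, offset, d) == interval_index(dst, offset, d)
```

A class $i$ packet spans fewer than $2d$ links, so it crosses at most two of the candidate boundaries $1, d + 1, 2d + 1, \ldots$, and consecutive boundaries belong to different partitionings. Hence at least one of the three offsets keeps the packet inside a single interval, which is why a random choice retains a constant fraction of the class $i$ packets.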
It can be used by distributed algorithms too.

3 Nearest-to-Go Protocol

In this section we prove asymptotically tight bounds (up to logarithmic factors) on the performance of Nearest-To-Go (NTG) on lines, show their extension to grids, and then prove bounds on general graphs.

For a sequence $\sigma$ of packets, let $P_j$ denote the packets of class $j$. Also, let OPT be the set of packets delivered by an optimal algorithm. Let class $i$ be the class from which the optimal algorithm delivers the most packets, so that $|P_i \cap \mathrm{OPT}| = \Omega(|\mathrm{OPT}| / \log n)$. We will compare the performance of NTG to an optimal algorithm on $P_i$, which we denote by $\mathrm{OPT}_i$. It is easy to see that if NTG is $\alpha$-competitive against $\mathrm{OPT}_i$ then it is $O(\alpha \log n)$-competitive against OPT.

3.1 Line Networks

We show that NTG is $O(\sqrt{n} \log n)$-competitive on line networks with $B > 1$. This complements the $\Omega(\sqrt{n})$ lower bound on the competitive ratio of any greedy algorithm and improves the $O(n^{2/3})$ competitive ratio of NTG obtained in [1].

- 17. The Network as a Storage Device: Dynamic Routing with Bounded Buffers 7

We prove that NTG maintains competitiveness w.r.t. $\mathrm{OPT}_i$ on a suitable collection of instances, referred to as virtual instances.

Virtual Instances. Let class i be defined as above. Consider the three ways of partitioning defined in Section 2.3 with i as the chosen class. Instead of randomly choosing a partitioning, we pick one containing at least $|P_i \cap \mathrm{OPT}|/3$ packets. For each interval in this chosen partitioning, we create a new virtual instance consisting of the packets (of all classes) injected within the interval as well as any additional packets that travel some portion of the interval during the NTG execution on the original sequence σ. Let us fix any such instance. W.l.o.g. we relabel the nodes by integers 1 through 3d. We also add two special nodes of index 0 (to model packets incoming from earlier instances) and 3d + 1 (to model packets destined beyond the current instance). We divide the packets traveling through the instance into good and bad, where a packet is good iff its destination is not the node labeled 3d + 1. Due to the NTG property, bad packets never delay good packets or cause them to be dropped. Hence while analyzing our virtual instance, we will only consider good packets. We also let n = 3d + 2.

Analysis. For each virtual instance, we compare the number of good packets delivered by NTG to the number of class i packets contained within the interval that $\mathrm{OPT}_i$ delivered. The analysis (and not the algorithm) proceeds in rounds where each round has 2 phases. Phase 1 terminates when $\mathrm{OPT}_i$ absorbs nB packets for the first time, and Phase 2 has a fixed duration of (n − 1)B time units. Packets are given a unique index and credits to account for dropped packets. We swap packets (meaning we swap their index and credits) when either of two events occurs: (i) a new packet causes another to be dropped, or (ii) a moving packet is delayed by another packet.
The rules respectively ensure that a packet is (virtually) never dropped after being accepted and is (virtually) never delayed at a node once it starts moving. Recall that NTG drops packets at a node only when the node has B packets. For every node, mark the first time it overflows and give a charge of 2 to each of its packets. We do not charge that node for the next B time steps. Note that a charge of 2 is enough since $\mathrm{OPT}_i$ could have moved away at most B packets from that node during that period and have B packets in its buffers. Repeat the process till the end of Phase 1.

Lemma 3. NTG absorbs $\Omega(\sqrt{n}\,B)$ packets by the end of Phase 1.

Proof. Let 2c be the maximum charge accumulated by a packet in Phase 1. NTG absorbed at least $nB/(2c)$ packets since $\mathrm{OPT}_i$ absorbed at least nB packets. Now consider the rightmost packet with charge 2c. Split its charge into two parts: 2s, the charge accumulated while static at its source node, and 2m, the charge accumulated while moving. Since the packet was charged 2s, it stayed idle for at least B(s − 1) time units and hence at least that many packets crossed over it. It is never in the same buffer with these packets again. On the other hand, while the packet was moving, it left behind m(B − 1) packets which never catch up with it again. Since s + m = c, these two groups contain at least B(s − 1) + m(B − 1) ≥ (c − 1)(B − 1) packets. Therefore, NTG absorbs at least $\max\{nB/(2c),\ (c-1)(B-1)\} = \Omega(\sqrt{n}\,B)$ packets.
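The last step of the proof of Lemma 3 balances the two lower bounds on the number of absorbed packets. One way to see it is the following case analysis, sketched under the assumption that B ≥ 2 is an integer, so that B − 1 ≥ B/2:

```latex
\[
\text{If } c \le \sqrt{n}: \qquad \frac{nB}{2c} \;\ge\; \frac{nB}{2\sqrt{n}} \;=\; \frac{\sqrt{n}\,B}{2}.
\]
\[
\text{If } c > \sqrt{n}: \qquad (c-1)(B-1) \;\ge\; (\sqrt{n}-1)\,\frac{B}{2}.
\]
\[
\text{In both cases} \qquad \max\Bigl\{\frac{nB}{2c},\; (c-1)(B-1)\Bigr\} \;=\; \Omega(\sqrt{n}\,B).
\]
```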

- 18. By the end of the round, $\mathrm{OPT}_i$ has buffered and delivered O(nB) class i packets, since it absorbed at most 2nB packets in Phase 1 and, by Lemma 1, O(nB) packets during Phase 2. By Lemmas 2 and 3, NTG delivers $\Omega(\sqrt{n}\,B)$ packets by the end of Phase 2. Thus we obtain an $O(\sqrt{n})$ competitive ratio. Note that we can clear the charge of packets in NTG buffers and consider the $\mathrm{OPT}_i$ buffers to be empty at the end of the round.

Theorem 2. For B > 1, NTG is an $O(\sqrt{n} \log n)$-competitive algorithm on line networks.

Remark 1. We note here that one can show that allowing the greedy algorithm a buffer space that is α times larger cannot improve the competitive ratio to $o(\sqrt{n}/\alpha)$.

3.2 Grid Networks

We consider 2-dimensional regular grids on n nodes, that is, with $\sqrt{n}$ rows and columns, where nodes are connected by directed links to the neighbors in the same row and column. Since it is no longer true that there is a unique shortest path between a source-destination pair on a grid, we restrict NTG to one-bend routing: every packet first moves along the row in which it is injected to its destination's column and then moves along that column. It is not hard to see that, since the distance between any two nodes is $O(\sqrt{n})$, any greedy algorithm that routes packets on shortest paths is $\Omega(\sqrt{n})$-competitive. An adversary releases O(nB) packets at all links, all with the same destination. By time $O(\sqrt{n}\,B)$, the greedy algorithm will deliver $O(\sqrt{n}\,B)$ packets and drop the remaining ones, while an optimal algorithm can deliver all packets. The theorem below gives tight bounds on the performance of NTG with one-bend routing on grid networks.

Theorem 3. For B > 1, NTG with one-bend routing is a $\tilde{\Theta}(n^{2/3})$-competitive algorithm on grid networks.

3.3 General Networks

The following theorem improves the O(mn) upper bound on the performance of NTG on general graphs obtained in [1].

Theorem 4. NTG is Θ̃(m)-competitive on any network for both adaptive and non-adaptive settings.
Moreover, every greedy algorithm is Ω(m)-competitive.

4 The Merge and Deliver Algorithm

In this section, we present a randomized, centralized polylog(n)-competitive algorithm, referred to as Merge and Deliver (MD), for line and tree networks.

- 19. 4.1 Line Networks

By Theorem 1, it suffices to obtain a polylog(n)-competitive algorithm on balanced instances. A balanced instance allows us to treat all packets as equal and focus on deciding whether some buffered packet should be forwarded or not, which is where we feel the real complexity of the problem lies. Centralized algorithms make it possible to take a global view of the network and spread packets all around to overcome some of the challenges discussed in Section 1.

The MD algorithm never drops accepted packets and forwards them in such a manner that it creates a small number of contiguous sequences of nodes with full buffers, which we call segments. A key observation is that once the adversary has filled the buffers in a segment, it can absorb only one extra packet per time unit over the entire segment! This follows from the fact that there is unit bandwidth out of the segment. The algorithm tries to decrease the number of such segments by merging segments of similar length. After a few rounds of merges, MD accumulates enough packets without dropping too many. It then delivers them greedily, knowing (from Lemma 1) that the adversary cannot get much leverage during that time.

We divide the execution of Merge and Deliver into rounds where each round has 2 phases and starts with empty buffers. Assume w.l.o.g. that the OPT buffers are also empty; otherwise we view the stored packets as delivered.

1. Phase 1 is the receiving phase. The phase ends when the number of packets absorbed by MD exceeds nB/p(n) for a suitable p(n) = polylog(n). We show that OPT absorbs O(nB) packets in the phase.
2. Phase 2 is the delivery phase and consists of (n − 1)B time steps. In the phase, MD greedily forwards packets without accepting any new packets. By Proposition 2 it delivers all packets that are buffered by the end of Phase 1. We show that OPT absorbs O(nB) packets in this phase.
For clarity of exposition, we first describe and analyze the algorithm for buffers of size 2. We then briefly sketch the extension to any B > 2.

Algorithm for Receiving Phase. At a given time step, a node can be empty, single, or double, where the terms denote the number of packets in its buffer. We define a segment to be a sequence (not necessarily maximal) of double nodes. Segments are assigned to classes, and a new double node (implying it received at least one new packet) is assigned to class 0. We ensure that a segment progresses to a higher class after some duration of time which depends on its current class. It is instructive to think of the packets as forming two layers on the nodes. We call the bottom layer the transport layer. Now, consider a pair of segments s.t. there are only single nodes in between. It is possible to merge them, that is, to make the top layers of both segments adjacent to each other, using the transport layer, in time proportional to the length of the left segment. Since segments might be separated by a large distance, it is not possible to physically move all those packets. Instead, we only require that the profile of packets changes

- 20. as if the segments had actually become adjacent. MD accomplishes this by forwarding, at each time step, a packet from the right end of the left segment and from all the intermediate single nodes. We call this process teleporting; a teleport from node u to v is essentially forwarding a packet at each node in [u, v − 1].

We will only merge segments of the same class. The merged segment is then assigned to the next higher class. We ensure that segments of the same class have similar lengths, or at least have as many credits as other segments of the same class. Thus we will be able to account for dropped packets in each class separately and will show that the class of a segment is bounded during the phase. Note that there could be merging segments of other classes in between two segments of a given class. To overcome this, we divide time into blocks of log n time units. At the ith time unit of each block, only class i segments are active. Also, each class i starts its own clock at the beginning of Phase 1, with period $2^i$. This clock ticks only during the time units of class i.

Fix a class i, and consider its period and time steps, and the class i segments. At the beginning of the period, segments are numbered from left to right starting from 1. MD merges each odd-numbered segment with the segment that follows it. The (at most one) unpaired segment is merged into the transport layer. At the end of the period, a merged pair becomes one segment of class i + 1 and waits idle until the next class i + 1 period begins. We now formally define the segment merging operation.

Segment Merging. Consider a pair of segments such that, at the current time step, the first segment ends at node e and the second segment starts at node s + 1. We teleport a packet from e to s. We distinguish the following special cases:
(i) If e = s or one of the segments has length 0, the merge is completed.
(ii) If s > e is already a double node, we teleport the packet to s′, where s′ ≥ e is the minimum index s.t. nodes [s′, s] are double. The profile changes as if we moved all segments in [s′, s], which are of a different class and therefore inactive, backward by one index and teleported the packet to s.
(iii) If the two segments are not linked by a transport layer, that is, there is an empty node to the right of the odd segment or the odd segment is the last segment of class i, we teleport the packet to the first empty node to its right.

Analysis. We first note that the online algorithm can accept one packet (and drop at most one packet) at a single node. Hence the number of packets which the online algorithm receives plus the number of packets dropped at double nodes is an upper bound on the number of packets it drops. We need to count only the packets dropped at double nodes to compare the packets in the optimum to those in the online algorithm. The movement strategy in Phase 1 ensures that there is never a net outflow of packets from the transport layer to the upper layer. Therefore we can treat packets in the transport layer as good as delivered and devise a charging scheme which only draws credits from packets in the top layer to account for dropped packets.
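The effect of a teleport on the buffer profile can be illustrated with a small sketch. The function name and the 0-indexed list representation of buffer occupancies are assumptions made for this example; only the basic case (all nodes in [u, v − 1] hold a packet and node v has room) is modelled.

```python
def teleport(buffers, u, v):
    """Forward one packet at every node in [u, v-1] at the same time step.

    `buffers[x]` is the number of packets at node x (0-indexed, hypothetical
    representation). Returns the new occupancy profile.
    """
    if not 0 <= u < v < len(buffers):
        raise ValueError("need 0 <= u < v < len(buffers)")
    if any(buffers[x] < 1 for x in range(u, v)):
        raise ValueError("every node in [u, v-1] must hold a packet to forward")
    new = list(buffers)
    for x in range(u, v):
        new[x] -= 1      # node x sends one packet to its right neighbour ...
        new[x + 1] += 1  # ... which receives it in the same step
    return new

# Two double-node segments (nodes 0-1 and 5-6) joined by single nodes 2-4, B = 2.
profile = [2, 2, 1, 1, 1, 2, 2]
after = teleport(profile, 1, 4)  # e = 1 is the right end of the left segment, s = 4
```

In this example `after` is `[2, 1, 1, 1, 2, 2, 2]`: intermediate nodes are unchanged, the left segment shrinks by one node and the right segment grows by one, even though no single packet travelled the whole distance.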

- 21. Since packets could join the transport layer during merging, we cannot ensure that segment lengths grow geometrically, but the following lemma, which follows easily by induction, gives an upper bound on their lengths.

Lemma 4. At the start of a class i period, the length of a class i segment is at most $2^i$.

We note that each merge operation decreases the length of the left segment of a merging segment pair by 1. The next lemma follows.

Lemma 5. The merge of two class i segments needs at most $2^i$ ticks and can be accomplished in a class i period.

Corollary 1. If a class i segment is present at the beginning of a class i period, then by the end of the period its packets either merge with another class i segment to form a class i + 1 segment, or join the transport layer.

We assign $O(\log^2 n)$ credits to each packet, which are absorbed as follows:
– $\log^2 n + 3 \log n$ units of merging credits, which are distributed equally over all classes, that is, a credit of log n + 3 in each class.
– $\log^2 n + 2 \log n$ units of idling credits, which are distributed equally over all classes, that is, a credit of log n + 2 in each class.

When a new segment of class 0 is created, it gets the credit of the new packet injected in the top layer. When two segments of class i are merged, the unused credits of the segments are transferred to the new segment of class i + 1. If a packet gets delivered or joins the transport layer, it gives its credit to the segment it was trying to merge into. The following lemma establishes that a class i segment has enough credit irrespective of its length.

Lemma 6. A class i segment has $2^i(\log n + 3)$ merging credits and $2^i(\log n + 2)$ idling credits. The class of a segment is bounded by log n.

Proof. The first part follows by induction. If a class i segment has $2^i(\log n + 3)$ merging credits, there must have been $2^i$ class 0 segments to begin with.
Since we stop the phase right after n/p(n) packets are absorbed, i < log n.

We now analyze the packets dropped by segments in each class. Consider a segment of length $l \le 2^i$ in class i. It could have been promoted to class i in the middle of a class i period, but since the period spans $2^i$ blocks of time, it stays idle for at most $2^i \log n$ time units. The number of packets dropped is bounded by B = 2 times the segment length plus the total bandwidth out of the segment, which is at most $2 \cdot 2^i + 2^i \log n$. This can be paid by the idling credit of the segment. At the beginning of a new class i period, it starts merging with another segment of length, say, $l' \le 2^i$ to its right. Using a similar argument, the total number of packets dropped in both segments is bounded by 2 times a sum of three segment lengths, each at most $2^i$, plus $2 \cdot 2^i \log n$, that is, by $6 \cdot 2^i + 2 \cdot 2^i \log n$. This can be paid by the merging credit of the segments.

We let $p(n) = 2 \log^2 n + 5 \log n$ and note that the number of packets dropped by the online algorithm during Phase 1 can be bounded by the credit assigned to all absorbed packets. Using Lemma 1 we show that OPT can only deliver and buffer O(n) packets during Phase 2. Since MD delivers at least n/p(n) packets in the round, a competitive ratio of $O(p(n)) = O(\log^2 n)$ is established.
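The credit bookkeeping can be checked by direct arithmetic. The lines below are a sketch, assuming log n classes and the per-class credits stated for each packet; they show that the per-packet credit adds up to p(n) and that the credits of Lemma 6 match the two drop bounds exactly.

```latex
\begin{align*}
(\log n + 3) + (\log n + 2) &= 2\log n + 5
  && \text{(merging + idling credit per class)} \\
\log n \cdot (2\log n + 5) &= 2\log^2 n + 5\log n = p(n)
  && \text{(summed over $\log n$ classes)} \\
2^i(\log n + 2) &= 2\cdot 2^i + 2^i \log n
  && \text{(idling credit of one class $i$ segment)} \\
2\cdot 2^i(\log n + 3) &= 6\cdot 2^i + 2\cdot 2^i \log n
  && \text{(merging credit of two class $i$ segments)}
\end{align*}
```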

- 22. Generalization to B > 2. We can generalize MD to B > 2 by redefining the concept of a double node and segment. Also, we increase the credits assigned to a packet by a factor of B/(B − 1) ≤ 2, scaling p(n) accordingly.

Theorem 5. For B > 1, Merge and Deliver is a randomized $O(\log^3 n)$-competitive algorithm on a line network.

4.2 Tree Networks

The Merge and Deliver algorithm extends naturally to tree networks. We consider rooted tree networks where each edge is directed towards the root node. It is known that on trees with n nodes and height h, a greedy algorithm is Ω(n/h)-competitive [1]. We show the following:

Theorem 6. For B > 1, there is an $O(\log^2 n)$-competitive centralized deterministic algorithm for trees when packets have the same destination. When destinations are arbitrary, there is a randomized $O(h \log^2 n)$-competitive algorithm.

5 Line Networks with Unit Buffers

For unit buffer sizes, Aiello et al. [1] give a lower bound of Ω(n) on the competitive ratio of any greedy algorithm. We show that the lower bound holds for any deterministic algorithm. However, with randomization, we can beat this bound. We show that the following simple strategy is $\tilde{O}(\sqrt{n})$-competitive. The online algorithm chooses uniformly at random one of two strategies: NTG, or a modified NTG, call it NTG′, that forwards packets at every other time step. The intuition is that if in NTG there is a packet that collides with c other packets, then Ω(c) packets are absorbed by NTG′.

Theorem 7. For B = 1, any deterministic online algorithm is Ω(n)-competitive on line networks. There is a randomized $\tilde{O}(\sqrt{n})$-competitive algorithm for the problem.

6 Conclusion

Our results establish a strong separation between the performance of natural greedy algorithms and centralized routing algorithms even on the simple line topology.
An interesting open question is to prove or disprove the existence of distributed polylog(n)-competitive algorithms for line networks.

References

1. Aiello, W., Ostrovsky, R., Kushilevitz, E., Rosén, A.: Dynamic routing on networks with fixed-size buffers. In: Proceedings of the 14th SODA. (2003) 771–780

- 23. 2. Kesselman, A., Mansour, Y., Lotker, Z., Patt-Shamir, B.: Buffer overflows of merging streams. In: Proceedings of the 15th SPAA. (2003) 244–245
3. Kothapalli, K., Scheideler, C.: Information gathering in adversarial systems: lines and cycles. In: SPAA '03: Proceedings of the fifteenth annual ACM symposium on Parallel algorithms and architectures. (2003) 333–342
4. Azar, Y., Zachut, R.: Packet routing and information gathering in lines, rings and trees. In: Proceedings of 15th ESA. (2005)
5. Labrador, M., Banerjee, S.: Packet dropping policies for ATM and IP networks. IEEE Communications Surveys 2 (1999)
6. Broder, A.Z., Frieze, A.M., Upfal, E.: A general approach to dynamic packet routing with bounded buffers. In: Proceedings of the 37th FOCS. (1996) 390
7. Broder, A., Upfal, E.: Dynamic deflection routing on arrays. In: Proceedings of the 28th annual ACM Symposium on Theory of Computing. (1996) 348–355
8. Mihail, M.: Conductance and convergence of Markov chains: a combinatorial treatment of expanders. In: Proceedings of the 30th FOCS. (1989) 526–531
9. Mitzenmacher, M.: Bounds on the greedy routing algorithm for array networks. Journal of Computer and System Sciences 53 (1996) 317–327
10. Stamoulis, G., Tsitsiklis, J.: The efficiency of greedy routing in hypercubes and butterflies. IEEE Transactions on Communications 42 (1994) 3051–208
11. Borodin, A., Kleinberg, J., Raghavan, P., Sudan, M., Williamson, D.P.: Adversarial queueing theory. In: Proceedings of the 28th STOC. (1996) 376–385
12. Andrews, M., Mansour, Y., Fernández, A., Kleinberg, J., Leighton, T., Liu, Z.: Universal stability results for greedy contention-resolution protocols. In: Proceedings of the 37th FOCS. (1996) 380–389
13. Busch, C., Magdon-Ismail, M., Mavronicolas, M., Spirakis, P.G.: Direct routing: Algorithms and complexity. In: ESA. (2004) 134–145
14. Awerbuch, B., Azar, Y., Plotkin, S.: Throughput competitive on-line routing. In: Proceedings of 34th FOCS. (1993) 32–40
15. Scheideler, C., Vöcking, B.: Universal continuous routing strategies. In: Proceedings of the 8th SPAA. (1996) 142–151
16. Kesselman, A., Mansour, Y.: Harmonic buffer management policy for shared memory switches. Theor. Comput. Sci. 324 (2004) 161–182
17. Lotker, Z., Patt-Shamir, B.: Nearly optimal FIFO buffer management for DiffServ. In: Proceedings of the 21st PODC. (2002) 134–143

- 24. Rounding Two and Three Dimensional Solutions of the SDP Relaxation of MAX CUT

Adi Avidor and Uri Zwick
School of Computer Science, Tel-Aviv University, Tel-Aviv 69978, Israel
{adi,zwick}@tau.ac.il

Abstract. Goemans and Williamson obtained an approximation algorithm for the MAX CUT problem with a performance ratio of $\alpha_{GW} \simeq 0.87856$. Their algorithm starts by solving a standard SDP relaxation of MAX CUT and then rounds the optimal solution obtained using a random hyperplane. In some cases, the optimal solution of the SDP relaxation happens to lie in a low dimensional space. Can an improved performance ratio be obtained for such instances? We show that the answer is yes in dimensions two and three and conjecture that this is also the case in any higher fixed dimension. In two dimensions an optimal $\frac{32}{25+5\sqrt{5}}$-approximation algorithm was already obtained by Goemans. (Note that $\frac{32}{25+5\sqrt{5}} \simeq 0.884458$.) We obtain an alternative derivation of this result using Gegenbauer polynomials. Our main result is an improved rounding procedure for SDP solutions that lie in $\mathbb{R}^3$ with a performance ratio of about 0.8818. The rounding procedure uses an interesting yin-yang coloring of the three dimensional sphere. The improved performance ratio obtained resolves, in the negative, an open problem posed by Feige and Schechtman [STOC'01]. They asked whether there are MAX CUT instances with integrality ratios arbitrarily close to $\alpha_{GW} \simeq 0.87856$ that have optimal embeddings, i.e., optimal solutions of their SDP relaxations, that lie in $\mathbb{R}^3$.

1 Introduction

An instance of the MAX CUT problem is an undirected graph G = (V, E) with non-negative weights $w_{ij}$ on the edges (i, j) ∈ E. The goal is to find a subset of vertices S ⊆ V that maximizes the weight of the edges of the cut (S, S̄). MAX CUT is one of Karp's original NP-complete problems, and has been extensively studied during the last few decades.
Goemans and Williamson [GW95] used semidefinite programming and the random hyperplane rounding technique to obtain an approximation algorithm for the MAX CUT problem with a performance guarantee of $\alpha_{GW} \simeq 0.87856$. Håstad [Hås01] showed that MAX CUT does not have a (16/17 + ε)-approximation algorithm, for any ε > 0, unless P = NP. Recently, Khot et al. [KKMO04] showed that a certain plausible conjecture implies that MAX CUT does not have an ($\alpha_{GW}$ + ε)-approximation, for any ε > 0.

This research was supported by the ISRAEL SCIENCE FOUNDATION (grant no. 246/01).
C. Chekuri et al. (Eds.): APPROX and RANDOM 2005, LNCS 3624, pp. 14–25, 2005. © Springer-Verlag Berlin Heidelberg 2005

The algorithm of Goemans and Williamson starts by solving a standard SDP relaxation of the MAX CUT instance. The value of the optimal solution obtained is an

- 25. upper bound on the size of the maximum cut. The ratio between these two quantities is called the integrality ratio of the instance. The infimum of the integrality ratios of all the instances is the integrality ratio of the relaxation.

The study of the integrality ratio of the standard SDP relaxation was started by Delorme and Poljak [DP93a, DP93b]. The worst instance they found was the 5-cycle $C_5$, which has an integrality ratio of $\frac{32}{25+5\sqrt{5}} \simeq 0.884458$. The optimal solution of the SDP relaxation of $C_5$, given in Figure 1, happens to lie in $\mathbb{R}^2$. Feige and Schechtman [FS01] showed that the integrality ratio of the standard SDP relaxation of MAX CUT is exactly $\alpha_{GW}$. More specifically, they showed that for every ε > 0 there exists a graph with integrality ratio of at most $\alpha_{GW}$ + ε. The optimal solution of the SDP relaxation of this graph lies in R^Θ(√1/ε log 1/ε). An interesting open question, raised by Feige and Schechtman, was whether it is possible to find instances of MAX CUT with integrality ratio arbitrarily close to $\alpha_{GW} \simeq 0.87856$ that have optimal embeddings, i.e., optimal solutions of their SDP relaxations, that lie in $\mathbb{R}^3$. We supply a negative answer to this question.

Fig. 1. An optimal solution of the SDP relaxation of $C_5$. Graph nodes lie on the unit circle.

Improved approximation algorithms for instances of MAX CUT that do not have large cuts were obtained by Zwick [Zwi99], Feige and Langberg [FL01] and Charikar and Wirth [CW04]. These improvements are obtained by modifying the random hyperplane rounding procedure of Goemans and Williamson [GW95]. Zwick [Zwi99] uses outward rotations. Feige and Langberg [FL01] introduced the RPR² rounding technique. Other rounding procedures, developed for use in MAX 2-SAT and MAX DI-CUT algorithms, were suggested by Feige and Goemans [FG95], Matuura and Matsui [MM01a, MM01b] and Lewin et al. [LLZ02].
In this paper we study the approximation of MAX CUT instances that have a low dimensional optimal solution of their SDP relaxation. For instances with a solution that lies in the plane, Goemans (unpublished) achieved a performance ratio of $\frac{32}{25+5\sqrt{5}} = \frac{4}{5\sin^2(2\pi/5)} \simeq 0.884458$. We describe an alternative way of getting such a performance ratio using a random procedure that uses Gegenbauer polynomials. This result is optimal in the sense that it matches the integrality ratio obtained by Delorme and Poljak [DP93a, DP93b].

Unfortunately, the use of the Gegenbauer polynomials does not yield improved approximation algorithms in dimensions higher than 2. To obtain an improved ratio in dimension three we use an interesting yin-yang-like coloring of the unit sphere in $\mathbb{R}^3$. The question whether a similar coloring can be used in four or more dimensions remains an interesting open problem.

The rest of this extended abstract is organized as follows. In the next section we describe our notation and review the approximation algorithm of Goemans and

- 26. Williamson. In Section 3 we introduce the Gegenbauer polynomials rounding technique. In Section 4 we give our Gegenbauer polynomials based algorithm for the plane and compare it to the algorithm of Goemans. In Section 5 we introduce the 2-coloring rounding. Finally, in Section 6 we present our 2-coloring based algorithm for the three dimensional case.

2 Preliminaries

Let G = (V, E) be an undirected graph with non-negative weights attached to its edges. We let $w_{ij}$ be the weight of an edge (i, j) ∈ E. We may assume, w.l.o.g., that E = V × V. (If an edge is missing, we can add it and give it a weight of 0.) We also assume V = {1, . . ., n}. We denote by $S^{d-1}$ the unit sphere in $\mathbb{R}^d$. We define:

$$\mathrm{sgn}(x) = \begin{cases} 1 & \text{if } x \ge 0 \\ -1 & \text{otherwise} \end{cases}$$

The Goemans and Williamson algorithm embeds the vertices of the input graph on the unit sphere $S^{n-1}$ by solving a semidefinite program, and then rounds the vectors to integral values using random hyperplane rounding.

MAX CUT Semidefinite Program: MAX CUT may be formulated as the following integer quadratic program:

$$\max \sum_{i,j} w_{ij}\,\frac{1 - x_i x_j}{2} \quad \text{s.t. } x_i \in \{-1, 1\}$$

Here, each vertex i is associated with a variable $x_i \in \{-1, 1\}$, where −1 and 1 may be viewed as the two sides of the cut. For each edge (i, j) ∈ E the expression $\frac{1 - x_i x_j}{2}$ is 0 if the two endpoints of the edge are on the same side of the cut and 1 if the two endpoints are on different sides.

The latter program may be relaxed by replacing each variable $x_i$ with a unit vector $v_i$ in $\mathbb{R}^n$. The product of the two variables $x_i$ and $x_j$ is replaced by an inner product between the vectors $v_i$ and $v_j$. The following semidefinite programming relaxation of MAX CUT is obtained:

$$\max \sum_{i,j} w_{ij}\,\frac{1 - v_i \cdot v_j}{2} \quad \text{s.t. } v_i \in S^{n-1}$$

This semidefinite program can be solved in polynomial time up to any specified precision. The value of the program is an upper bound on the value of the maximal cut in the graph.
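As a concrete illustration of the two objectives, the sketch below evaluates both on the unweighted 5-cycle, using the planar embedding of $C_5$ that the paper quotes in Section 4. It does not solve the semidefinite program; it only plugs the quoted embedding into the relaxed objective. The helper names are hypothetical, and vertices are indexed 0 to 4 for convenience.

```python
import math
from itertools import product

def cut_value(edges, x):
    """Integer objective: sum over edges of w_ij * (1 - x_i * x_j) / 2."""
    return sum(w * (1 - x[i] * x[j]) / 2 for i, j, w in edges)

def relaxed_value(edges, vectors):
    """Relaxed objective at a given unit-vector embedding (no SDP solver involved)."""
    def dot(a, b):
        return sum(p * q for p, q in zip(a, b))
    return sum(w * (1 - dot(vectors[i], vectors[j])) / 2 for i, j, w in edges)

# Unweighted 5-cycle; each edge is listed once.
edges = [(k, (k + 1) % 5, 1.0) for k in range(5)]

# Brute force over all 2^5 sign assignments gives the true maximum cut.
max_cut = max(cut_value(edges, x) for x in product((-1, 1), repeat=5))

# Embedding v_k = (cos(4*pi*k/5), sin(4*pi*k/5)): adjacent vertices are 4*pi/5 apart.
embedding = [(math.cos(4 * math.pi * k / 5), math.sin(4 * math.pi * k / 5))
             for k in range(5)]
ratio = max_cut / relaxed_value(edges, embedding)
```

Here `ratio` agrees numerically with $32/(25+5\sqrt{5})$, the integrality ratio of $C_5$ reported by Delorme and Poljak.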
Intuitively, the semidefinite program embeds the input graph G on the unit sphere $S^{n-1}$, such that adjacent vertices in G are far apart in $S^{n-1}$.

Random Hyperplane Rounding: The random hyperplane rounding procedure chooses a uniformly distributed unit vector r on the sphere $S^{n-1}$. Then, $x_i$ is set to $\mathrm{sgn}(v_i \cdot r)$. We will use the following simple lemma, taken from [GW95]:

Lemma 1. If $u, v \in S^{n-1}$, then $\Pr_{r \in S^{n-1}}[\mathrm{sgn}(u \cdot r) \ne \mathrm{sgn}(v \cdot r)] = \frac{\arccos(u \cdot v)}{\pi}$.
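Lemma 1 is easy to check empirically. The following is a minimal Monte Carlo sketch with hypothetical helper names; it draws the random direction as a Gaussian vector, whose direction is uniform on the sphere, and skips normalization because only the sign of the inner product matters.

```python
import math
import random

def sgn(x):
    return 1 if x >= 0 else -1

def random_direction(dim, rng):
    """Standard Gaussian vector; its direction is uniformly distributed on the sphere."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def hyperplane_round(vectors, rng):
    """Round every vector with one shared random hyperplane through the origin."""
    r = random_direction(len(vectors[0]), rng)
    return [sgn(sum(a * b for a, b in zip(v, r))) for v in vectors]

def separation_frequency(u, v, trials, rng):
    """Fraction of trials in which u and v receive different signs."""
    hits = 0
    for _ in range(trials):
        xu, xv = hyperplane_round([u, v], rng)
        hits += xu != xv
    return hits / trials

# Two unit vectors in R^3 at angle 2.0 radians.
u = (1.0, 0.0, 0.0)
v = (math.cos(2.0), math.sin(2.0), 0.0)
estimate = separation_frequency(u, v, 20000, random.Random(1))
```

With enough trials, `estimate` should be close to arccos(u · v)/π = 2.0/π, as the lemma predicts.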

- 27. 3 Gegenbauer Polynomials Rounding

For any m ≥ 2 and k ≥ 0, the Gegenbauer (or ultraspherical) polynomials $G_k^{(m)}(x)$ are defined by the following recurrence relations:

$$G_0^{(m)}(x) = 1, \qquad G_1^{(m)}(x) = x,$$
$$G_k^{(m)}(x) = \frac{1}{k+m-3}\left[(2k+m-4)\,x\,G_{k-1}^{(m)}(x) - (k-1)\,G_{k-2}^{(m)}(x)\right] \quad \text{for } k \ge 2.$$

$G_k^{(2)}$ are Chebyshev polynomials of the first kind, $G_k^{(3)}$ are Legendre polynomials of the first kind, and $G_k^{(4)}$ are Chebyshev polynomials of the second kind. The following interesting theorem is due to Schoenberg [Sch42]:

Theorem 1. A polynomial f is a sum of Gegenbauer polynomials $G_k^{(m)}$, for k ≥ 0, with non-negative coefficients, i.e., $f(x) = \sum_{k \ge 0} \alpha_k G_k^{(m)}(x)$ with $\alpha_k \ge 0$, if and only if for any $v_1, \ldots, v_n \in S^{m-1}$ the matrix $(f(v_i \cdot v_j))_{ij}$ is positive semidefinite.

Note that $G_k^{(m)}(1) = 1$ for all m ≥ 2 and k ≥ 0. Let $v_1, \ldots, v_n \in S^{m-1}$ and let f be a convex sum of the $G_k^{(m)}$ with respect to k, i.e., $f(x) = \sum_{k \ge 0} \alpha_k G_k^{(m)}(x)$, where $\alpha_k \ge 0$ and $\sum_k \alpha_k = 1$. Then, there exist unit vectors $v'_1, \ldots, v'_n \in S^{n-1}$ such that $v'_i \cdot v'_j = f(v_i \cdot v_j)$, for all 1 ≤ i, j ≤ n.

In this setting, we define the Gegenbauer polynomials rounding technique with parameter f as:
1. Find vectors $v'_i$ satisfying $v'_i \cdot v'_j = f(v_i \cdot v_j)$, where 1 ≤ i, j ≤ n (this can be done by solving a semidefinite program)
2. Let r be a vector uniformly distributed on the unit sphere $S^{n-1}$
3. Set $x_i = \mathrm{sgn}(v'_i \cdot r)$, for 1 ≤ i ≤ n

Note that the vectors $v'_1, \ldots, v'_n$ do not necessarily lie on the unit sphere $S^{m-1}$. Therefore, the vector r is chosen from $S^{n-1}$. Note also that the random hyperplane rounding of Goemans and Williamson is a special case of the Gegenbauer polynomials rounding technique, as f can be taken to be $f = G_1^{(m)}$. The next corollary is an immediate result of Lemma 1:

Corollary 1. If $v_1, \ldots, v_n$ are rounded using Gegenbauer polynomials rounding with polynomial f, then $\Pr[x_i \ne x_j] = \frac{\arccos(f(v_i \cdot v_j))}{\pi}$, where 1 ≤ i, j ≤ n.

Alon and Naor [AN04] also studied a rounding technique which applies a polynomial to the elements of an inner products matrix. In their technique the polynomial may be arbitrary. However, the matrix must be of the form $(v_i \cdot u_j)_{ij}$, where the $v_i$'s and $u_j$'s are distinct sets of vectors.

4 An Optimal Algorithm in the Plane

In this section we use Gegenbauer polynomials to round solutions of the semidefinite programming relaxation of MAX CUT that happen to lie in the plane. Note that as the

- 28. solution lies in $\mathbb{R}^2$, the suitable polynomials to apply are only convex combinations of the $G_k^{(2)}$'s.

Algorithm [MaxCut – Gege]:
1. Solve the MAX CUT semidefinite program
2. Run random hyperplane rounding with probability β and Gegenbauer polynomials rounding with the polynomial $G_4^{(2)}(x) = 8x^4 - 8x^2 + 1$ with probability 1 − β

We choose $\beta = \frac{4}{5}\left(\frac{\pi}{5}\cot\frac{2\pi}{5} + 1\right) \simeq 0.963322$. The next theorem, together with the fact that the value of the MAX CUT semidefinite program is an upper bound on the value of the optimal cut, implies that our algorithm is a $\frac{32}{25+5\sqrt{5}}$-approximation algorithm.

Theorem 2. If the solution of the program lies in the plane, then the expected cut size produced by the algorithm is at least a fraction $\frac{32}{25+5\sqrt{5}}$ of the value of the MAX CUT semidefinite program.

As the expected size of the cut produced by the algorithm equals $\sum_{i,j} w_{ij} \Pr[x_i \ne x_j]$, we may derive Theorem 2 from the next lemma:

Lemma 2. $\Pr[x_i \ne x_j] \ge \frac{32}{25+5\sqrt{5}} \cdot \frac{1 - v_i \cdot v_j}{2}$, for all 1 ≤ i, j ≤ n.

Proof. Let $\theta_{ij} = \arccos(v_i \cdot v_j)$. By Lemma 1 and Corollary 1 we get that

$$\Pr[x_i \ne x_j] = \beta\,\frac{\theta_{ij}}{\pi} + (1 - \beta)\,\frac{\arccos(G_4^{(2)}(v_i \cdot v_j))}{\pi}.$$

As the $G_k^{(2)}(x)$ are Chebyshev polynomials of the first kind, $G_k^{(2)}(\cos\theta) = \cos(k\theta)$. Therefore,

$$\Pr[x_i \ne x_j] = \beta\,\frac{\theta_{ij}}{\pi} + (1 - \beta)\,\frac{\arccos(\cos(4\theta_{ij}))}{\pi}.$$

By Lemma 3 in Appendix A, the latter value is at least $\frac{32}{25+5\sqrt{5}} \cdot \frac{1 - \cos\theta_{ij}}{2}$, and equality holds if and only if $\theta_{ij} = \frac{4\pi}{5}$ or $\theta_{ij} = 0$.

The last argument in the proof of Lemma 2 shows that the worst instance in our framework is when $\theta_{ij} = \frac{4\pi}{5}$ for all (i, j) ∈ E. The 5-cycle $C_5$, with an optimal solution $v_k = (\cos(\frac{4\pi}{5}k), \sin(\frac{4\pi}{5}k))$, for 1 ≤ k ≤ 5, is such an instance. (See Figure 1.)

In our framework, Gegenbauer polynomials rounding performs better than the Goemans-Williamson algorithm whenever the Gegenbauer polynomials transformation increases the expected angle between adjacent vertices.
Denote the ‘worst’ angle of the Goemans-Williamson algorithm by $\theta_0 \approx 2.331122$. In order to achieve an improved approximation, the Gegenbauer polynomial transformation should increase the angle between adjacent vertices with inner product $\cos\theta_0$. It can be shown that for any dimension $m \ge 3$ and any $k \ge 2$, $G_k^{(m)}(\cos\theta_0) > \cos\theta_0$, so the transformation does not increase this angle. Hence, in higher dimensions other techniques are needed in order to attain an improved approximation algorithm.
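The tightness claim for the 5-cycle can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the original paper: the names `separation_probability`, `sdp_value` and `expected_cut` are introduced here for illustration, and unit edge weights are assumed for $C_5$.

```python
import math

# Constants as defined in the text (Section 4 and Lemma 3).
ALPHA_GEGE = 32 / (25 + 5 * math.sqrt(5))
BETA = 0.8 * ((math.pi / 5) / math.tan(2 * math.pi / 5) + 1)


def separation_probability(theta, beta=BETA):
    """Pr[x_i != x_j] under the mixed rounding, using G_4^(2)(cos t) = cos(4t)."""
    hyperplane = theta / math.pi
    gegenbauer = math.acos(math.cos(4 * theta)) / math.pi
    return beta * hyperplane + (1 - beta) * gegenbauer


# Planar SDP solution of C_5 quoted in the text: v_k at angle 4*pi*k/5.
vectors = [(math.cos(4 * math.pi * k / 5), math.sin(4 * math.pi * k / 5))
           for k in range(1, 6)]
edges = [(k, (k + 1) % 5) for k in range(5)]  # assumed unit-weight cycle edges

inner = [vectors[i][0] * vectors[j][0] + vectors[i][1] * vectors[j][1]
         for i, j in edges]
sdp_value = sum((1 - d) / 2 for d in inner)
expected_cut = sum(separation_probability(math.acos(max(-1.0, min(1.0, d))))
                   for d in inner)

print(f"SDP value    = {sdp_value:.6f}")
print(f"expected cut = {expected_cut:.6f}")
print(f"ratio        = {expected_cut / sdp_value:.6f}")
print(f"alpha_GEGE   = {ALPHA_GEGE:.6f}")
```

Every edge of this solution has angle $4\pi/5$, where both rounding procedures separate the endpoints with probability $4/5$. The expected cut therefore equals 4, the size of a maximum cut of $C_5$, and the printed ratio agrees with $\frac{32}{25+5\sqrt{5}}$.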

5 Rounding Using 2-Coloring of the Sphere

Definition 1. A 2-coloring $C$ of the unit sphere $S^{m-1}$ is a function $C : S^{m-1} \to \{-1, +1\}$.

Let $v_1, \ldots, v_n \in S^{m-1}$ and let $C$ be a 2-coloring of the unit sphere $S^{m-1}$. In the sphere 2-coloring rounding method with respect to a 2-coloring $C$, the 2-colored unit sphere is randomly rotated and then each variable $x_i$ is set to $C(v_i)$. We give below a more formal definition of the 2-coloring rounding with parameter $C$. In the definition, it is more convenient to rotate $v_1, \ldots, v_n$ than to rotate the 2-colored sphere.

1. Choose a uniformly distributed rotation (orthonormal) matrix $R$
2. Set $x_i = C(Rv_i)$, for $1 \le i \le n$

The matrix $R$ in the first step may be chosen using the following procedure, which employs the Gram-Schmidt process:

1. Choose independently $m$ vectors $r_1, \ldots, r_m \in \mathbb{R}^m$ according to the $m$-dimensional standard normal distribution
2. Iteratively, set $r_i \leftarrow r_i - \sum_{j=1}^{i-1} (r_i \cdot r_j) r_j$, and then $r_i \leftarrow r_i / \|r_i\|$
3. Let $R$ be the matrix whose columns are the vectors $r_i$

Note that when 2-coloring rounding is used, the probability $\Pr[x_i \neq x_j]$ depends only on the angle between $v_i$ and $v_j$.

2-coloring rounding relates to Gegenbauer polynomials rounding by the following argument: Let WINDMILL$_k$ be the 2-coloring of $S^1$ in which a point $(\cos\theta, \sin\theta) \in S^1$ is colored black ($-1$) if $\theta \in \left[\frac{2\ell\pi}{k}, \frac{(2\ell+1)\pi}{k}\right)$, and white ($+1$) otherwise (where $0 \le \ell < k$). The 2-coloring WINDMILL$_4$, for example, is shown in Figure 2.

Fig. 2. WINDMILL$_4$ 2-coloring

Denote the angle between $v_i$ and $v_j$ by $\theta_{ij}$. Also denote by $\Pr_{WM}[x_i \neq x_j]$ the probability that $x_i \neq x_j$ if 2-coloring rounding with WINDMILL$_k$ is used, and by $\Pr_{GEGE}[x_i \neq x_j]$ the probability that $x_i \neq x_j$ if Gegenbauer polynomials rounding with the polynomial $G_k^{(2)}$ is used. Then,

$$\Pr\nolimits_{WM}[x_i \neq x_j] = \begin{cases} \dfrac{\theta_{ij} - 2\ell\pi/k}{\pi/k} & \text{if } \theta_{ij} \in \left[\frac{2\ell\pi}{k}, \frac{(2\ell+1)\pi}{k}\right) \text{ where } 0 \le \ell < k \\[2ex] \dfrac{(2\ell+2)\pi/k - \theta_{ij}}{\pi/k} & \text{if } \theta_{ij} \in \left[\frac{(2\ell+1)\pi}{k}, \frac{(2\ell+2)\pi}{k}\right) \text{ where } 0 \le \ell < k \end{cases}$$

In other words, $\Pr_{WM}[x_i \neq x_j] = \frac{\arccos(\cos(k\theta_{ij}))}{\pi}$. On the other hand, by Corollary 1, $\Pr_{GEGE}[x_i \neq x_j] = \frac{\arccos\left(G_k^{(2)}(\cos\theta_{ij})\right)}{\pi}$. As the $G_k^{(2)}$ are Chebyshev polynomials of the first kind, $G_k^{(2)}(\cos\theta_{ij}) = \cos(k\theta_{ij})$. Hence, $\Pr_{WM}[x_i \neq x_j] = \Pr_{GEGE}[x_i \neq x_j]$. Goemans' unpublished result was obtained in this way, with $k = 4$.

6 An Algorithm for Three Dimensions

Our improved approximation algorithm uses 2-coloring rounding, combined with random hyperplane rounding. A ‘good’ 2-coloring would be one that improves on the ratio achieved by random hyperplane rounding at the so-called ‘bad’ angles.

In our approximation algorithm for three dimensions, we use a simple 2-coloring we call YINYAN. We describe YINYAN in the spherical coordinates $(\theta, \varphi)$, where $0 \le \theta \le 2\pi$ and $-\frac{\pi}{2} \le \varphi \le \frac{\pi}{2}$ (in our notation, the cartesian representation of $(\theta, \varphi)$ is $(\cos\theta\cos\varphi, \sin\theta\cos\varphi, \sin\varphi)$).

$$\mathrm{YINYAN}(\theta, \varphi) = \begin{cases} +1 & \text{if } 4\varphi > \pm\sqrt{\sin(4\theta)} \\ -1 & \text{otherwise} \end{cases}$$

Here $\pm\sqrt{x} = \mathrm{sgn}(x)\sqrt{|x|}$. Figure 3 shows the YINYAN 2-coloring. Note that the coloring of the equator in YINYAN is identical to the coloring WINDMILL$_4$, which was used by Goemans in the plane.

Fig. 3. YINYAN 2-coloring

Algorithm [MaxCut – YINYAN]:
1. Solve the MAX CUT semidefinite program
2. Let CUT1 be a cut obtained by running random hyperplane rounding
3. Let CUT2 be a cut obtained by running 2-coloring rounding with the YINYAN 2-coloring
4. Return the larger of CUT1 and CUT2
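The 2-coloring rounding step used in the algorithm above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the authors' implementation: the function names (`random_rotation`, `rotate`, `yinyan`, `windmill`, `two_coloring_rounding`) are hypothetical, and the comparison in `yinyan` is assumed to be `>`, the direction that makes the equator agree with WINDMILL$_4$. The Gram-Schmidt loop subtracts projections one at a time, which is equivalent in exact arithmetic to the formula given in Section 5.

```python
import math
import random


def random_rotation(m, rng):
    """Random orthonormal matrix, returned as a list of its m columns."""
    cols = []
    for _ in range(m):
        r = [rng.gauss(0.0, 1.0) for _ in range(m)]
        for q in cols:  # remove components along earlier, normalised vectors
            d = sum(a * b for a, b in zip(r, q))
            r = [a - d * b for a, b in zip(r, q)]
        norm = math.sqrt(sum(a * a for a in r))
        cols.append([a / norm for a in r])
    return cols


def rotate(cols, v):
    """Compute R v, where cols holds the columns of R."""
    m = len(cols)
    return [sum(cols[j][i] * v[j] for j in range(m)) for i in range(m)]


def signed_sqrt(x):
    """sgn(x) * sqrt(|x|), the signed square root used by YINYAN."""
    return math.copysign(math.sqrt(abs(x)), x)


def yinyan(p):
    """YINYAN color of a unit vector p in R^3 (comparison direction assumed)."""
    x, y, z = p
    theta = math.atan2(y, x)
    phi = math.asin(max(-1.0, min(1.0, z)))
    return 1 if 4 * phi > signed_sqrt(math.sin(4 * theta)) else -1


def windmill(k, theta):
    """WINDMILL_k color of the point (cos theta, sin theta) on the circle."""
    arc = math.floor((theta % (2 * math.pi)) / (math.pi / k))
    return -1 if arc % 2 == 0 else 1


def two_coloring_rounding(vectors, coloring, rng):
    """Rotate all vectors by one random rotation, then read off their colors."""
    cols = random_rotation(len(vectors[0]), rng)
    return [coloring(rotate(cols, v)) for v in vectors]


if __name__ == "__main__":
    unit_vectors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    print(two_coloring_rounding(unit_vectors, yinyan, random.Random(0)))
```

Calling `two_coloring_rounding` with a different `coloring` function gives the rounding for any other 2-coloring of the sphere.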

We denote the weight of a cut CUT by $wt(\mathrm{CUT})$, the size of the optimal cut by $OPT$, and the expected weight of the cut produced by the algorithm by $ALG$. In addition, we denote by $\Pr_{GW}[x_i \neq x_j]$ the probability that $x_i \neq x_j$ if the random hyperplane rounding of Goemans and Williamson is used, and by $\Pr_{YY}[x_i \neq x_j]$ the probability that $x_i \neq x_j$ if 2-coloring rounding with the 2-coloring YINYAN is used. If the angle between $v_i$ and $v_j$ is $\theta_{ij}$, we write the latter also as $\Pr_{YY}[\theta_{ij}]$. In this notation, for any $0 \le \beta \le 1$,

$$ALG = E[\max\{wt(\mathrm{CUT}_1), wt(\mathrm{CUT}_2)\}] \ge \beta E[wt(\mathrm{CUT}_1)] + (1 - \beta) E[wt(\mathrm{CUT}_2)] = \beta \sum_{i,j} w_{ij} \Pr\nolimits_{GW}[x_i \neq x_j] + (1 - \beta) \sum_{i,j} w_{ij} \Pr\nolimits_{YY}[x_i \neq x_j].$$

Thus, we have the following lower bound on the performance ratio of the algorithm, for any $0 \le \beta \le 1$:

$$\frac{ALG}{OPT} \ge \frac{\beta \sum_{i,j} w_{ij} \Pr_{GW}[x_i \neq x_j] + (1 - \beta) \sum_{i,j} w_{ij} \Pr_{YY}[x_i \neq x_j]}{\sum_{i,j} w_{ij} \frac{1 - v_i \cdot v_j}{2}} \ge \inf_{0 < \theta \le \pi} \frac{\beta \frac{\theta}{\pi} + (1 - \beta) \Pr_{YY}[\theta]}{\frac{1 - \cos\theta}{2}}.$$

The probability $\Pr_{YY}[\theta]$ may be written as a three dimensional integral. However, this integral does not seem to have an analytical representation. We numerically calculated a lower bound on the latter integral. A lower bound of 0.8818 on the ratio may be obtained by choosing $\beta = 0.46$.

It is possible to obtain a rigorous proof for the approximation ratio using a tool such as REALSEARCH (see [Zwi02]). However, this would require a tremendous amount of work. An easy way for the reader to validate that using YINYAN yields a better than $\alpha_{GW}$ approximation is to compute $\Pr_{YY}[\theta]$ for angles around the ‘bad’ angle $\theta_0 \approx 2.331122$. This can be done using the simple, easy-to-check MATLAB code given in Appendix B.

An improved algorithm is also obtained using, say, the coloring

$$\mathrm{SINCOL}(\theta, \varphi) = \begin{cases} +1 & \text{if } 2\varphi > \sin(4\theta) \\ -1 & \text{otherwise} \end{cases}$$

However, a better ratio is obtained by taking YINYAN instead of SINCOL or any version of SINCOL with adjusted constants. There is no reason to believe that the current function is optimal.
We were mainly interested in showing that a ratio strictly larger than the ratio of Goemans and Williamson can be obtained.
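The numerical check suggested above can also be sketched in Python. The following is a rough Monte-Carlo estimate, not a rigorous bound and not the authors' code: the names `pr_yy` and `ratio` are hypothetical, the comparison direction in `yinyan` is an assumption (reversing it swaps the two colors and leaves the separation probability unchanged), and the sample size and seed are arbitrary choices.

```python
import math
import random

THETA_0 = 2.331122  # the 'bad' angle quoted in the text
BETA = 0.46         # the mixing weight quoted in the text


def signed_sqrt(x):
    """sgn(x) * sqrt(|x|)."""
    return math.copysign(math.sqrt(abs(x)), x)


def yinyan(p):
    """YINYAN color of a unit vector in R^3 (comparison direction assumed)."""
    x, y, z = p
    theta = math.atan2(y, x)
    phi = math.asin(max(-1.0, min(1.0, z)))
    return 1 if 4 * phi > signed_sqrt(math.sin(4 * theta)) else -1


def random_pair(angle, rng):
    """Two unit vectors in R^3 at the given angle, in a random orientation."""
    u = [rng.gauss(0.0, 1.0) for _ in range(3)]
    n = math.sqrt(sum(a * a for a in u))
    u = [a / n for a in u]
    w = [rng.gauss(0.0, 1.0) for _ in range(3)]
    d = sum(a * b for a, b in zip(u, w))
    w = [a - d * b for a, b in zip(w, u)]  # make w orthogonal to u
    n = math.sqrt(sum(a * a for a in w))
    w = [a / n for a in w]
    c, s = math.cos(angle), math.sin(angle)
    return u, [c * a + s * b for a, b in zip(u, w)]


def pr_yy(angle, trials, rng):
    """Monte-Carlo estimate of the YINYAN separation probability."""
    separated = 0
    for _ in range(trials):
        u, v = random_pair(angle, rng)
        separated += yinyan(u) != yinyan(v)
    return separated / trials


def ratio(theta, p_yy, beta):
    """The quantity inside the infimum, evaluated at a single angle theta."""
    return (beta * theta / math.pi + (1 - beta) * p_yy) / ((1 - math.cos(theta)) / 2)


p_estimate = pr_yy(THETA_0, 100_000, random.Random(2005))
ratio_estimate = ratio(THETA_0, p_estimate, BETA)
print(f"Pr_YY[theta_0] ~ {p_estimate:.4f}")
print(f"ratio at theta_0 with beta = {BETA}: ~ {ratio_estimate:.4f}")
```

Evaluating `ratio` over a range of angles rather than only at $\theta_0$ gives a rough picture of where the infimum is attained, though only up to sampling error.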

7 Concluding Remarks

We presented two new techniques for rounding solutions of semidefinite programming relaxations. We used the first technique to give an approximation algorithm that matches the integrality gap of MAX CUT for instances embedded in the plane. We used the second method to obtain an approximation algorithm which is better than the Goemans-Williamson approximation algorithm for instances that have a solution in $\mathbb{R}^3$. The latter approximation algorithm rules out the existence of a graph with integrality ratio arbitrarily close to $\alpha_{GW}$ and an optimal solution in $\mathbb{R}^3$, thus resolving an open problem raised by Feige and Schechtman [FS01]. We conjecture that the 2-coloring rounding method can be used to obtain an approximation algorithm with a performance ratio strictly larger than $\alpha_{GW}$ for any fixed dimension.

It can be shown that adding “triangle constraints” to the MAX CUT semidefinite programming relaxation rules out a non-integral solution in the plane. It would be interesting to derive similar approximation ratios for three (or higher) dimensional solutions that satisfy the “triangle constraints”.

Acknowledgments

We would like to thank Olga Sorkine for her help in rendering Figure 3.

References

[AN04] N. Alon and A. Naor. Approximating the cut-norm via Grothendieck’s inequality. In Proceedings of the 36th Annual ACM Symposium on Theory of Computing, Chicago, Illinois, pages 72–80, 2004.
[CW04] M. Charikar and A. Wirth. Maximizing Quadratic Programs: Extending Grothendieck’s Inequality. In Proceedings of the 45th Annual IEEE Symposium on Foundations of Computer Science, Rome, Italy, pages 54–60, 2004.
[DP93a] C. Delorme and S. Poljak. Combinatorial properties and the complexity of a max-cut approximation. European Journal of Combinatorics, 14(4):313–333, 1993.
[DP93b] C. Delorme and S. Poljak. Laplacian eigenvalues and the maximum cut problem. Mathematical Programming, 62(3, Ser. A):557–574, 1993.
[FG95] U. Feige and M. X. Goemans. Approximating the value of two prover proof systems, with applications to MAX-2SAT and MAX-DICUT. In Proceedings of the 3rd Israel Symposium on Theory and Computing Systems, Tel Aviv, Israel, pages 182–189, 1995.
[FL01] U. Feige and M. Langberg. The RPR2 rounding technique for semidefinite programs. In Proceedings of the 28th Int. Coll. on Automata, Languages and Programming, Crete, Greece, pages 213–224, 2001.
[FS01] U. Feige and G. Schechtman. On the integrality ratio of semidefinite relaxations of MAX CUT. In Proceedings of the 33rd Annual ACM Symposium on Theory of Computing, Crete, Greece, pages 433–442, 2001.
[GW95] M. X. Goemans and D. P. Williamson. Improved Approximation Algorithms for Maximum Cut and Satisfiability Problems Using Semidefinite Programming. Journal of the ACM, 42:1115–1145, 1995.

[Hås01] J. Håstad. Some optimal inapproximability results. Journal of the ACM, 48(4):798–859, 2001.
[KKMO04] S. Khot, G. Kindler, E. Mossel, and R. O’Donnell. Optimal inapproximability results for MAX-CUT and other 2-variable CSPs? In Proceedings of the 45th Annual IEEE Symposium on Foundations of Computer Science, Rome, Italy, pages 146–154, 2004.
[LLZ02] M. Lewin, D. Livnat, and U. Zwick. Improved rounding techniques for the MAX 2-SAT and MAX DI-CUT problems. In Proceedings of the 9th IPCO, Cambridge, Massachusetts, pages 67–82, 2002.
[MM01a] S. Matuura and T. Matsui. 0.863-approximation algorithm for MAX DICUT. In Approximation, Randomization and Combinatorial Optimization: Algorithms and Techniques, Proceedings of APPROX-RANDOM’01, Berkeley, California, pages 138–146, 2001.
[MM01b] S. Matuura and T. Matsui. 0.935-approximation randomized algorithm for MAX 2SAT and its derandomization. Technical Report METR 2001-03, Department of Mathematical Engineering and Information Physics, the University of Tokyo, Japan, September 2001.
[Sch42] I. J. Schoenberg. Positive definite functions on spheres. Duke Math J., 9:96–107, 1942.
[Zwi99] U. Zwick. Outward rotations: a tool for rounding solutions of semidefinite programming relaxations, with applications to MAX CUT and other problems. In Proceedings of the 31st Annual ACM Symposium on Theory of Computing, Atlanta, Georgia, pages 679–687, 1999.
[Zwi02] U. Zwick. Computer assisted proof of optimal approximability results. In Proceedings of the 13th Annual ACM-SIAM Symposium on Discrete Algorithms, San Francisco, California, pages 496–505, 2002.

Appendix A

Lemma 3. Let $\beta = \frac{4}{5}\left(\frac{\pi}{5}\cot\frac{2\pi}{5} + 1\right)$ and $\alpha_{GEGE} = \frac{4}{5\sin^2\left(\frac{2\pi}{5}\right)} = \frac{32}{25+5\sqrt{5}}$. Then, for every $\theta \in [0, \pi]$,

$$\beta \frac{\theta}{\pi} + (1 - \beta) \frac{\arccos(\cos 4\theta)}{\pi} \ge \alpha_{GEGE} \frac{1 - \cos\theta}{2},$$

and equality holds if and only if $\theta = 0$ or $\theta = \frac{4\pi}{5}$.

Proof.
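Define $h(\theta) = \beta \frac{\theta}{\pi} + (1 - \beta) \frac{\arccos(\cos 4\theta)}{\pi} - \alpha_{GEGE} \frac{1 - \cos\theta}{2}$. We show that the minimum of $h(\theta)$ is zero and is attained only at $\theta = 0$ and $\theta = \frac{4\pi}{5}$. Note that

$$\arccos(\cos 4\theta) = \begin{cases} 4\theta & \text{if } 0 \le \theta < \frac{\pi}{4} \\ 2\pi - 4\theta & \text{if } \frac{\pi}{4} \le \theta < \frac{\pi}{2} \\ 4\theta - 2\pi & \text{if } \frac{\pi}{2} \le \theta < \frac{3\pi}{4} \\ 4\pi - 4\theta & \text{if } \frac{3\pi}{4} \le \theta \le \pi \end{cases}$$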

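Hence, for $\theta \in [0, \pi] \setminus \{0, \frac{\pi}{4}, \frac{\pi}{2}, \frac{3\pi}{4}, \pi\}$,

$$h'(\theta) = \begin{cases} \frac{4 - 3\beta}{\pi} - \frac{1}{2}\alpha_{GEGE}\sin\theta & \text{if } 0 < \theta < \frac{\pi}{4} \text{ or } \frac{\pi}{2} < \theta < \frac{3\pi}{4} \\ \frac{5\beta - 4}{\pi} - \frac{1}{2}\alpha_{GEGE}\sin\theta & \text{if } \frac{\pi}{4} < \theta < \frac{\pi}{2} \text{ or } \frac{3\pi}{4} < \theta < \pi \end{cases}$$

and $h''(\theta) = -\frac{1}{2}\alpha_{GEGE}\cos\theta$. There are three cases:

Case I: $0 \le \theta \le \pi/2$

In this case $h''(\theta) < 0$ for $\theta \notin \{0, \pi/4, \pi/2\}$. Therefore, the minimum of $h(\theta)$ in the interval $0 \le \theta \le \pi/2$ may be attained only at $\theta = 0$, $\theta = \pi/4$ or $\theta = \pi/2$. Indeed, $h(0) = 0$ and the minimum is attained. In addition, $h(\pi/4) = 1 - \frac{3}{4}\beta - \alpha_{GEGE}\left(1 - \frac{\sqrt{2}}{2}\right)/2 = 0.14798\ldots > 0$, and $h(\pi/2) = (\beta - \alpha_{GEGE})/2 = 0.03942\ldots > 0$.

Case II: $\pi/2 \le \theta \le 3\pi/4$

As for all $\theta \in (\frac{\pi}{2}, \frac{3\pi}{4})$ the function $h''(\theta)$ is defined and positive, the minimum may be attained when $h'(\theta) = 0$, $\theta = \pi/2$ or $\theta = 3\pi/4$. The unique solution $\theta_0 \in (\frac{\pi}{2}, \frac{3\pi}{4})$ of the equation $0 = h'(\theta_0) = \frac{4 - 3\beta}{\pi} - \frac{1}{2}\alpha_{GEGE}\sin(\theta_0)$ is $\theta_0 = \pi - \arcsin\left(\frac{4 - 3\beta}{\pi} \cdot \frac{2}{\alpha_{GEGE}}\right)$. However, for this local minimum, $h(\theta_0) = 0.00146\ldots > 0$. In addition, as mentioned before, $h(\frac{\pi}{2}) > 0$. Moreover, $h(\frac{3\pi}{4}) = \frac{3}{4}\beta + (1 - \beta) - \frac{1}{2}\alpha_{GEGE}\left(1 + \frac{\sqrt{2}}{2}\right) = 0.00423\ldots > 0$.

Case III: $3\pi/4 \le \theta \le \pi$

Again, for all $\theta \in (\frac{3\pi}{4}, \pi)$ the function $h''(\theta)$ is defined and positive. Hence, the minimum may be attained when $h'(\theta) = 0$, $\theta = 3\pi/4$ or $\theta = \pi$. The unique solution $\theta_0 \in (\frac{3\pi}{4}, \pi)$ of the equation $0 = h'(\theta_0) = \frac{5\beta - 4}{\pi} - \frac{1}{2}\alpha_{GEGE}\sin(\theta_0)$ satisfies

$$\sin\theta_0 = \frac{5\beta - 4}{\pi} \cdot \frac{2}{\alpha_{GEGE}} = \frac{4}{5}\cot\left(\frac{2\pi}{5}\right) \cdot 2 \cdot \frac{5\sin^2\left(\frac{2\pi}{5}\right)}{4} = 2\sin\left(\frac{2\pi}{5}\right)\cos\left(\frac{2\pi}{5}\right) = \sin\left(\frac{4\pi}{5}\right),$$

so $\theta_0 = \frac{4\pi}{5}$. For this local minimum, $h(\theta_0) = 0$. In addition, as mentioned before, $h(\frac{3\pi}{4}) > 0$. Moreover, $h(\pi) = \beta - \alpha_{GEGE} > 0$.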
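The case analysis can be sanity-checked numerically. The sketch below is not a substitute for the proof: it evaluates $h$ on a uniform grid (the grid size is an arbitrary choice) and at the special points named in the three cases. The variable names are introduced here for illustration.

```python
import math

# Constants as defined in Lemma 3.
BETA = 0.8 * ((math.pi / 5) / math.tan(2 * math.pi / 5) + 1)
ALPHA_GEGE = 32 / (25 + 5 * math.sqrt(5))


def h(theta):
    """h(theta) from the proof of Lemma 3."""
    return (BETA * theta / math.pi
            + (1 - BETA) * math.acos(math.cos(4 * theta)) / math.pi
            - ALPHA_GEGE * (1 - math.cos(theta)) / 2)


# Smallest value of h on a uniform grid over [0, pi].
grid = [math.pi * i / 100_000 for i in range(100_001)]
h_min = min(h(t) for t in grid)

# Interior critical point of Case II, taken on the branch lying in (pi/2, 3*pi/4).
theta_case2 = math.pi - math.asin((4 - 3 * BETA) / math.pi * 2 / ALPHA_GEGE)

print(f"grid minimum       = {h_min:.3e}")
print(f"h(pi/4)            = {h(math.pi / 4):.5f}")
print(f"h(theta_0), Case II = {h(theta_case2):.5f}")
print(f"h(3*pi/4)          = {h(3 * math.pi / 4):.5f}")
print(f"h(4*pi/5)          = {h(4 * math.pi / 5):.3e}")
```

On the grid, $h$ stays non-negative and vanishes (up to floating-point error) only near $\theta = 0$ and $\theta = 4\pi/5$, in agreement with the lemma.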

Appendix B

A short MATLAB routine to compute the probability that two vectors on $S^2$ with a given angle between them are separated by rounding with the YINYAN 2-coloring. The routine uses Monte-Carlo with ntry trials. For example, for the angle 2.331122, by running yinyan(2.331122, 10000000) we get that the probability is ≈ 0.7472. This is strictly larger than the random hyperplane rounding separating probability of $\frac{2.331122}{\pi} \approx 0.7420$.

    function p = yinyan(angle,ntry)
    c = cos(angle); s = sin(angle);
    cnt = 0;
    for i=1:ntry
      % Choose two random vectors with the given angle.
      u = randn(1,3); u = u/sqrt(u*u');
      v = randn(1,3); v = v - (u*v')*u; v = v/sqrt(v*v');
      v = c*u + s*v;
      % Convert to polar coordinates
      [tet1,phi1] = cart2sph(u(1),u(2),u(3));
      [tet2,phi2] = cart2sph(v(1),v(2),v(3));
      % Check whether they are separated
      cnt = cnt + ((4*phi1>f(4*tet1))~=(4*phi2>f(4*tet2)));
    end
    p = cnt/ntry;

    % Signed square root of sin(x): sgn(sin x) * sqrt(|sin x|)
    function y = f(x)
    s = sin(x);
    y = sign(s)*sqrt(abs(s));