Concurrency and Parallelism, Asynchronous Programming, Network Programming

- 1. DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING VELAGAPUDI RAMAKRISHNA SIDDHARTHA ENGINEERING COLLEGE 20CSH4801A ADVANCED PYTHON PROGRAMMING UNIT 3 Lecture By, Prabu. U Assistant Professor, Department of Computer Science and Engineering.

- 2. UNIT 3: Concurrency and Parallelism: Introduction to Concurrency and Parallelism, Threading, Multiprocessing, Inter Thread/Process Synchronisation, Futures, Concurrency with Async-IO. Asynchronous Programming: Reactive Programming Introduction, RxPy Observables, Observers and Subjects, RxPy Operators. Network Programming: Introduction to Sockets, Sockets in Python.

- 3. 1. Introduction to Concurrency and Parallelism 2. Threading 3. Multiprocessing 4. Inter Thread/Process Synchronisation 5. Futures 6. Concurrency with AsyncIO CONCURRENCY AND PARALLELISM

- 4. 1. Introduction to Concurrency and Parallelism (i) Introduction (ii) Concurrency (iii) Parallelism (iv) Distribution (v) Grid Computing (vi) Concurrency and Synchronisation (vii)Object Orientation and Concurrency (viii)Threads versus Processes (ix) Some Terminology

- 5. (i) Introduction In this chapter we will introduce the concepts of concurrency and parallelism. We will also briefly consider the related topic of distribution. After this we will consider process synchronisation, why object-oriented approaches are well suited to concurrency and parallelism before finishing with a short discussion of threads versus processes.

- 6. (ii) Concurrency Concurrency is defined by the dictionary as two or more events or circumstances happening or existing at the same time. In Computer Science concurrency refers to the ability of different parts or units of a program, algorithm or problem to be executed at the same time, potentially on multiple processors or multiple cores. Here a processor refers to the central processing unit (or CPU) of a computer, while core refers to the idea that a CPU chip can have multiple cores or processors on it. Originally a CPU chip had a single core; that is, the CPU chip had a single processing unit on it.

- 7. However, over time, to increase computer performance, hardware manufacturers added additional cores or processing units to chips. Thus, a dual-core CPU chip has two processing units while a quad-core CPU chip has four processing units. This means that as far as the operating system of the computer is concerned, it has multiple CPUs on which it can run programs. Running processing at the same time, on multiple CPUs, can substantially improve the overall performance of an application. For example, let us assume that we have a program that will call three independent functions, these functions are: make a backup of the current data held by the program, print the data currently held by the program, run an animation using the current data.

- 8. Let us assume that these functions run sequentially, with the following timings: the backup function takes 13 s, the print function takes 15 s, the animation function takes 10 s. This would result in a total of 38 s to perform all three operations. This is illustrated graphically below: However, the three functions are all completely independent of each other. That is, they do not rely on each other for any results or behaviour; they do not need one of the other functions to complete before they can complete etc. Thus, we can run each function concurrently.

- 10. If the underlying operating system and program language being used support multiple processes, then we can potentially run each function in a separate process at the same time and obtain a significant speed up in overall execution time. If the application starts all three functions at the same time, then the maximum time before the main process can continue will be 15s, as that is the time taken by the longest function to execute. However, the main program may be able to continue as soon as all three functions are started as it also does not depend on the results from any of the functions; thus, the delay may be negligible (although there will typically be some small delay as each process is set up). This is shown graphically below:
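The timing argument above can be tried out with a minimal sketch. It is not taken from the lecture material: it uses threads rather than separate processes, simulates each function with a sleep, and scales the 13 s, 15 s and 10 s timings down by an assumed factor (`SCALE`) so that it finishes quickly. The names `task`, `run_sequentially` and `run_concurrently` are illustrative only.

```python
import time
from threading import Thread

SCALE = 0.01  # assumption: 1 s in the text is simulated as 0.01 s here


def task(seconds):
    """Stand-in for backup/print/animation: waits for the scaled time."""
    time.sleep(seconds * SCALE)


def run_sequentially(durations):
    start = time.perf_counter()
    for d in durations:
        task(d)
    return time.perf_counter() - start


def run_concurrently(durations):
    start = time.perf_counter()
    threads = [Thread(target=task, args=(d,)) for d in durations]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # the wait is bounded by the longest task
    return time.perf_counter() - start


durations = [13, 15, 10]  # backup, print, animation (seconds in the text)
sequential = run_sequentially(durations)
concurrent = run_concurrently(durations)
print(f"sequential: about {sequential / SCALE:.0f} simulated seconds")
print(f"concurrent: about {concurrent / SCALE:.0f} simulated seconds")
```

Run sequentially the simulated total is close to 13 + 15 + 10 = 38 s, while the concurrent run should take roughly as long as the slowest task (15 s) plus a small start-up overhead.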

- 12. (iii) Parallelism A distinction is often made in Computer Science between concurrency and parallelism. In concurrency, separate independent tasks are performed potentially at the same time. In parallelism, a large complex task is broken down into a set of subtasks. The subtasks represent part of the overall problem. Each subtask can be executed at the same time. Typically, it is necessary to combine the results of the subtasks together to generate an overall result.

- 13. These subtasks are also very similar if not functionally exactly the same (although in general each subtask invocation will have been supplied with different data). Thus, parallelism is when multiple copies of the same functionality are run at the same time, but on different data. Some examples of where parallelism can be applied include: A web search engine. Such a system may look at many, many web pages. Each time it does so it must send a request to the appropriate web site, receive the result and process the data obtained. These steps are the same whether it is the BBC web site, Microsoft’s web site or the web site of Cambridge University. Thus, the requests can be run sequentially or in parallel. Image Processing. A large image may be broken down into slices so that each slice can be analysed in parallel.

- 14. The following diagram illustrates the basic idea behind parallelism; a main program fires off three subtasks each of which runs in parallel. The main program then waits for all the subtasks to complete before combining together the results from the subtasks before it can continue.
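The fork-and-combine pattern just described might look like the following sketch. It is an illustration under assumptions, not code from the lecture: `parallel_sum` and `subtask` are hypothetical names, summing a list stands in for the real work, and threads are used only to show the structure (as discussed later, threads in CPython do not speed up this kind of CPU-bound work).

```python
from threading import Thread


def parallel_sum(data, n_subtasks=3):
    """Split data into slices, sum each slice in its own thread, then combine."""
    size = max(1, -(-len(data) // n_subtasks))  # ceiling division, at least 1
    slices = [data[i:i + size] for i in range(0, len(data), size)]
    partial = [0] * len(slices)  # one result slot per subtask

    def subtask(index, chunk):
        # Same functionality for every subtask, but different data
        partial[index] = sum(chunk)

    threads = [Thread(target=subtask, args=(i, s)) for i, s in enumerate(slices)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # the main program waits for all subtasks
    return sum(partial)  # combine the partial results


total = parallel_sum(list(range(1, 101)))
print(total)
```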

- 15. (iv) Distribution When implementing a concurrent or parallel solution, where the resulting processes run is typically an implementation detail. Conceptually these processes could run on the same processor, physical machine or on a remote or distributed machine. As such distribution, in which problems are solved or processes executed by sharing the work across multiple physical machines, is often related to concurrency and parallelism. However, there is no requirement to distribute work across physical machines, indeed in doing so extra work is usually involved.

- 16. To distribute work to a remote machine, data and in many cases code, must be transferred and made available to the remote machine. This can result in significant delays in running the code remotely and may offset any potential performance advantages of using a physically separate computer. As a result, many concurrent/ parallel technologies default to executing code in a separate process on the same machine.

- 17. (v) Grid Computing Grid Computing is based on the use of a network of loosely coupled computers, in which each computer can have a job submitted to it, which it will run to completion before returning a result. In many cases the grid is made up of a heterogeneous set of computers (rather than all computers being the same) and may be geographically dispersed. These computers may be comprised of both physical computers and virtual machines. A Virtual Machine is a piece of software that emulates a whole computer and runs on some underlying hardware that is shared with other virtual machines.

- 19. Each Virtual Machine thinks it is the only computer on the hardware; however the virtual machines all share the resources of the physical computer. Multiple virtual machines can thus run simultaneously on the same physical computer. Each virtual machine provides its own virtual hardware, including CPUs, memory, hard drives, network interfaces and other devices. The virtual hardware is then mapped to the real hardware on the physical machine which saves costs by reducing the need for physical hardware systems along with the associated maintenance costs, as well as reducing the power and cooling demands of multiple computers.

- 20. Within a grid, software is used to manage the grid nodes and to submit jobs to those nodes. Such software will receive the jobs to perform (programs to run and information about the environment such as libraries to use) from clients of the grid. These jobs are typically added to a job queue before a job scheduler submits them to a node within the grid. When any results are generated by the job they are collected from the node and returned to the client. This is illustrated below:

- 21. The use of grids can make distributing concurrent/parallel processes amongst a set of physical and virtual machines much easier.

- 22. (vi) Concurrency and Synchronisation Concurrency relates to executing multiple tasks at the same time. In many cases these tasks are not related to each other such as printing a document and refreshing the User Interface. In these cases, the separate tasks are completely independent and can execute at the same time without any interaction. In other situations, multiple concurrent tasks need to interact; for example, where one or more tasks produce data, and one or more other tasks consume that data. This is often referred to as a producer-consumer relationship.
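A producer-consumer relationship can be sketched with the standard library's `queue.Queue`, which is safe to share between threads. The function names, the `None` sentinel and the doubling step are assumptions made for this illustration.

```python
import queue
from threading import Thread


def producer(q, items):
    for item in items:
        q.put(item)
    q.put(None)  # sentinel: tells the consumer there is no more data


def consumer(q, results):
    while True:
        item = q.get()  # blocks until the producer supplies data
        if item is None:
            break
        results.append(item * 2)


q = queue.Queue()
results = []
threads = [
    Thread(target=producer, args=(q, [1, 2, 3])),
    Thread(target=consumer, args=(q, results)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(results)
```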

- 23. In other situations, all parallel processes must have reached the same point before some other behaviour is executed. Another situation that can occur is where we want to ensure that only one concurrent task executes a piece of sensitive code at a time; this code must therefore be protected from concurrent access. Concurrent and parallel libraries need to provide facilities that allow for such synchronisation to occur.
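As a small illustration of protecting sensitive code, the sketch below uses `threading.Lock` so that only one thread at a time updates a shared counter. The counter, the `increment` function and the thread count are assumptions chosen for the example.

```python
from threading import Lock, Thread

counter = 0
lock = Lock()


def increment(times):
    global counter
    for _ in range(times):
        with lock:  # only one thread at a time may run this block
            counter += 1


threads = [Thread(target=increment, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)
```

With the lock in place the final value is always 4 × 10,000 = 40,000; without it, updates from different threads could in principle be lost.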

- 24. (vii) Object Orientation and Concurrency The concepts behind object-oriented programming lend themselves particularly well to the concepts associated with concurrency. For example, a system can be described as a set of discrete objects communicating with one another when necessary. In Python, only one object may execute at any one moment in time within a single interpreter. However, conceptually at least, there is no reason why this restriction should be enforced. The basic concepts behind object orientation still hold, even if each object executes within a separate independent process.

- 25. Traditionally a message send is treated like a procedural call, in which the calling object’s execution is blocked until a response is returned. However, we can extend this model quite simply to view each object as a concurrently executable program, with activity starting when the object is created and continuing even when a message is sent to another object (unless the response is required for further processing). In this model, there may be very many (concurrent) objects executing at the same time. Of course, this introduces issues associated with resource allocation, etc. but no more so than in any concurrent system.

- 26. One implication of the concurrent object model is that objects are larger than in the traditional single execution thread approach, because of the overhead of having each object as a separate thread of execution. Overheads such as the need for a scheduler to handle these execution threads and resource allocation mechanisms mean that it is not feasible to have integers, characters, etc. as separate processes.

- 27. (viii) Threads versus Processes A process is an instance of a computer program that is being executed by the operating system. Any process has three key elements; the program being executed, the data used by that program (such as the variables used by the program) and the state of the process (also known as the execution context of the program). A (Python) Thread is a preemptive lightweight process. A Thread is considered to be pre-emptive because every thread has a chance to run as the main thread at some point.

- 28. When a thread gets to execute, it will run until one of the following occurs: it completes, it waits for some form of I/O (Input/Output), it sleeps for a period of time, or it has run for the interpreter's switch interval (5 ms by default in CPython 3; see sys.getswitchinterval()). If the thread has not completed when one of the above situations occurs, then it will give up being the executing thread and another thread will be run instead. This means that one thread can be interrupted in the middle of performing a series of related steps.

- 29. A thread is considered a lightweight process because it does not possess its own address space and it is not a separate process as far as the host operating system is concerned. Instead, it exists within a single machine process using the same address space. It is useful to get a clear idea of the difference between a thread (running within a single machine process) and a multi-process system that uses separate processes on the underlying hardware.

- 30. (ix) Some Terminology The world of concurrent programming is full of terminology that you may not be familiar with. Some of those terms and concepts are outlined below: Asynchronous versus Synchronous invocations: Most of the method, function or procedure invocations you will have seen in programming represent synchronous invocations. A synchronous method or function call is one which blocks the calling code from executing until it returns. Such calls are typically within a single thread of execution. Asynchronous calls are ones where the flow of control immediately returns to the caller, and the callee is able to execute in its own thread of execution, allowing both the caller and the callee to continue processing.
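The difference between the two kinds of invocation can be seen in a short sketch. It assumes a thread is used to make the call asynchronous; `slow_task` and the `events` list are hypothetical names used only to record the order in which things happen.

```python
import time
from threading import Thread

events = []


def slow_task():
    time.sleep(0.2)
    events.append("task finished")


# Synchronous: the caller is blocked until slow_task() returns.
slow_task()
events.append("after synchronous call")

# Asynchronous: start() returns at once; slow_task runs in its own thread.
t = Thread(target=slow_task)
t.start()
events.append("after asynchronous call")
t.join()
print(events)
```

In the synchronous case "task finished" is recorded before the caller continues; in the asynchronous case the caller records its entry first.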

- 31. Non-Blocking versus Blocking code: Blocking code is a term used to describe the code running in one thread of execution, waiting for some activity to complete, which causes one or more separate threads of execution to also be delayed. For example, if one thread is the producer of some data and other threads are the consumers of that data, then the consumer threads cannot continue until the producer generates the data for them to consume. In contrast, non-blocking means that no thread is able to indefinitely delay others.

- 32. Concurrent versus Parallel code: Concurrent code and parallel code are similar, but different in one significant aspect. Concurrency indicates that two or more activities are both making progress even though they might not be executing at the same point in time. This is typically achieved by continuously swapping competing processes between execution and non-execution. This process is repeated until at least one of the threads of execution (Threads) has completed its task. This may occur because two threads are sharing the same physical processor, with each being given a short time period in which to progress before the other gets a short time period to progress. The two threads are said to be sharing the processing time using a technique known as time slicing. Parallelism, on the other hand, implies that there are multiple processors available, allowing each thread to execute on its own processor simultaneously.

- 33. 2. Threading (i) Introduction (ii) Threads (iii) Thread States (iv) Creating a Thread (v) Instantiating the Thread Class (vi) The Thread Class (vii)The Threading Module Functions (viii)Passing Arguments to a Thread (ix) Extending the Thread Class

- 34. 2. Threading (x) Daemon Threads (xi) Naming Threads (xii) Thread Local Data (xiii) Timers (xiv) The Global Interpreter Lock

- 35. (i) Introduction Threading is one of the ways in which Python allows you to write programs that multitask; that is appearing to do more than one thing at a time. This chapter presents the threading module and uses a short example to illustrate how these features can be used.

- 36. (ii) Threads In Python the Thread class from the threading module represents an activity that is run in a separate thread of execution within a single process. These threads of execution are lightweight, pre-emptive execution threads. A thread is lightweight because it does not possess its own address space and it is not treated as a separate entity by the host operating system; it is not a process. Instead, it exists within a single machine process using the same address space as other threads.

- 37. (iii) Thread States When a thread object is first created it exists, but it is not yet runnable; it must be started. Once it has been started it is then runnable; that is, it is eligible to be scheduled for execution. It may switch back and forth between running and being runnable under the control of the scheduler. The scheduler is responsible for managing multiple threads that all wish to grab some execution time.

- 38. A thread object remains runnable or running until its run() method terminates; at which point it has finished its execution and it is now dead. All states between un-started and dead are considered to indicate that the Thread is alive (and therefore may run at some point). This is shown below:

- 39. A Thread may also be in the waiting state; for example, when it is waiting for another thread to finish its work before continuing (possibly because it needs the results produced by that thread to continue). This can be achieved using the join() method and is also illustrated above. Once the second thread completes the waiting thread will again become runnable. The thread which is currently executing is termed the active thread. There are a few points to note about thread states: A thread is considered to be alive unless its run() method terminates after which it can be considered dead. A live thread can be running, runnable, waiting, etc.

- 40. The runnable state indicates that the thread can be executed by the processor, but it is not currently executing. This is because an equal or higher priority process is already executing, and the thread must wait until the processor becomes free. Thus, the diagram shows that the scheduler can move a thread between the running and runnable state. In fact, this could happen many times as the thread executes for a while, is then removed from the processor by the scheduler and added to the waiting queue, before being returned to the processor again at a later date.
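Python does not expose the runnable/running distinction directly, but the un-started, alive and dead states can be observed through `is_alive()`. The following is a minimal sketch; the `work` function and its 0.2 s sleep are assumptions for the illustration.

```python
import time
from threading import Thread


def work():
    time.sleep(0.2)


t = Thread(target=work)
before_start = t.is_alive()   # created but not yet started
t.start()
while_running = t.is_alive()  # started; run() has not finished yet
t.join()                      # the calling thread waits here
after_join = t.is_alive()     # run() has terminated: the thread is dead
print(before_start, while_running, after_join)
```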

- 41. (iv) Creating Threads There are two ways in which to initiate a new thread of execution: Pass a reference to a callable object (such as a function or method) into the Thread class constructor. This reference acts as the target for the Thread to execute. Create a subclass of the Thread class and redefine the run() method to perform the set of actions that the thread is intended to do. We will look at both approaches.

- 42. As a thread is an object, it can be treated just like any other object: it can be sent messages, it can have instance variables and it can provide methods. Thus, the multi-threaded aspects of Python all conform to the object-oriented model. This greatly simplifies the creation of multi-threaded systems as well as the maintainability and clarity of the resulting software. Once a new instance of a thread is created, it must be started. Before it is started, it cannot run, although it exists.

- 43. (v) Instantiating the Thread Class The Thread class can be found in the threading module and therefore must be imported prior to use. The class Thread defines a single constructor that takes up to six optional arguments: class threading.Thread(group=None, target=None, name=None, args=(), kwargs={}, daemon=None)

- 44. The Thread constructor should always be called using keyword arguments; the meaning of these arguments is: group should be None; reserved for future extension when a ThreadGroup class is implemented. target is the callable object to be invoked by the run() method. Defaults to None, meaning nothing is called. name is the thread name. By default, a unique name is constructed of the form “Thread-N” where N is an integer. args is the argument tuple for the target invocation. Defaults to (). Each positional argument is an element within the tuple; a single argument must still be wrapped in a one-element tuple, for example args=(value,). kwargs is a dictionary of keyword arguments for the target invocation. Defaults to {}. daemon indicates whether this thread runs as a daemon thread or not. If not None, daemon explicitly sets whether the thread is daemonic. If None (the default), the daemonic property is inherited from the current thread.
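A brief sketch of how these constructor arguments fit together is shown here. The `greet` function and its parameters are hypothetical; only the `Thread` keyword arguments come from the signature above.

```python
from threading import Thread

received = []


def greet(greeting, name, punctuation="."):
    received.append(f"{greeting}, {name}{punctuation}")


# Two positional arguments supplied through args
t1 = Thread(target=greet, args=("Hello", "Ada"))

# One positional argument (a one-element tuple) plus keyword arguments
t2 = Thread(
    target=greet,
    name="greeter",
    args=("Hi",),
    kwargs={"name": "Bob", "punctuation": "!"},
)

for t in (t1, t2):
    t.start()
    t.join()  # run one after the other so the order is predictable
print(received, t2.name)
```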

- 45. Once a Thread is created it must be started to become eligible for execution using the Thread.start() method. The following illustrates a very simple program that creates a Thread that will run the simple_worker() function:

      from threading import Thread

      def simple_worker():
          print('hello')

      # Create a new thread and start it
      # The thread will run the function simple_worker
      t1 = Thread(target=simple_worker)
      t1.start()

  Refer SimpleWorkerEx.py

- 46. In this example, the thread t1 will execute the function simple_worker. The main code will be executed by the main thread that is present when the program starts; there are thus two threads used in the above program; main and t1.
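To make the two threads visible, a variation of the example can record which thread runs which piece of code. This is a sketch rather than the contents of SimpleWorkerEx.py; the `names` list and the explicit thread name `"t1"` are additions made for the illustration.

```python
from threading import Thread, current_thread

names = []


def simple_worker():
    # Executed by the new thread
    names.append(current_thread().name)


t1 = Thread(target=simple_worker, name="t1")
t1.start()
t1.join()

# Executed by the thread that started the program (normally "MainThread")
names.append(current_thread().name)
print(names)
```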

- 47. (vi) The Thread Class The Thread class defines all the facilities required to create an object that can execute within its own lightweight process. The key methods are: start() Start the thread’s activity. It must be called at most once per thread object. It arranges for the object’s run() method to be invoked in a separate thread of control. This method will raise a RuntimeError if called more than once on the same thread object. run() Method representing the thread’s activity. You may override this method in a subclass. The standard run() method invokes the callable object passed to the object’s constructor as the target argument, if any, with positional and keyword arguments taken from the args and kwargs arguments, respectively. You should not call this method directly.

- 48. join(timeout=None) Wait until the thread terminates. This blocks the calling thread until the thread whose join() method is called terminates. When the timeout argument is present and not None, it should be a floating-point number specifying a timeout for the operation in seconds (or fractions thereof). A thread can be join()ed many times. name A string used for identification purposes only. It has no semantics. Multiple threads may be given the same name. The initial name is set by the constructor. Giving a thread a name can be useful for debugging purposes. ident The ‘thread identifier’ of this thread or None if the thread has not been started. This is a nonzero integer.

- 49. is_alive() Return whether the thread is alive. This method returns True just before the run() method starts until just after the run() method terminates. The module function threading.enumerate() returns a list of all alive threads. daemon A boolean value indicating whether this thread is a daemon thread (True) or not (False). This must be set before start() is called, otherwise a RuntimeError is raised. Its default value is inherited from the creating thread. The entire Python program exits when no alive non-daemon threads are left. Refer ThreadMethodsEx.py Refer JoinMethodEx.py
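Several of these methods and attributes can be exercised together. The sketch below is not the referenced ThreadMethodsEx.py or JoinMethodEx.py; the `nap` function and the timings are assumptions chosen so that the first join() times out.

```python
import time
from threading import Thread


def nap():
    time.sleep(0.3)


t = Thread(target=nap)
ident_before = t.ident      # None: the thread has not been started
t.start()
t.join(timeout=0.05)        # gives up waiting after about 0.05 s
still_alive = t.is_alive()  # True: nap() is still sleeping

try:
    t.start()               # a thread object may only be started once
    second_start_error = None
except RuntimeError as exc:
    second_start_error = type(exc).__name__

t.join()                    # no timeout: wait until the thread terminates
print(ident_before, still_alive, second_start_error, t.is_alive())
```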

- 50. (vii) The Threading Module Functions There are a set of threading module functions which support working with threads; these functions include: threading.active_count() Return the number of Thread objects currently alive. The returned count is equal to the length of the list returned by enumerate(). threading.current_thread() Return the current Thread object, corresponding to the caller’s thread of control. If the caller’s thread of control was not created through the threading module, a dummy thread object with limited functionality is returned. threading.get_ident() Return the ‘thread identifier’ of the current thread. This is a nonzero integer. Thread identifiers may be recycled when a thread exits and another thread is created.

- 51. threading.enumerate() Return a list of all Thread objects currently alive. The list includes daemon threads, dummy thread objects created by current_thread() and the main thread. It excludes terminated threads and threads that have not yet been started. threading.main_thread() Return the main Thread object.
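The module-level functions can be tried out as follows. This is a small sketch; the `idle` function and the thread name `"idler"` are assumptions for the illustration.

```python
import threading
import time


def idle():
    time.sleep(0.2)


t = threading.Thread(target=idle, name="idler")
t.start()

alive_names = [th.name for th in threading.enumerate()]
count_matches = threading.active_count() == len(threading.enumerate())
same_ident = threading.get_ident() == threading.current_thread().ident
main_name = threading.main_thread().name

t.join()
names_after_join = [th.name for th in threading.enumerate()]
print(alive_names, names_after_join, main_name)
```

Once the thread has terminated it no longer appears in the list returned by `threading.enumerate()`.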

- 52. (viii) Passing Arguments to a Thread Many functions expect to be given a set of parameter values when they are run; these arguments still need to be passed to the function when it is run via a separate thread. These parameters can be passed to the function to be executed via the args parameter, for example: Refer ArgsToThread.py In this example, the worker function takes a message to be printed 10 times within a loop. Inside the loop the thread will print the message and then sleep for a second. This allows other threads to be executed, as the Thread must wait for the sleep timeout to finish before again becoming runnable.

- 53. Three threads t1, t2 and t3 are then created each with a different message. Note that the worker()function can be reused with each Thread as each invocation of the function will have its own parameter values passed to it. The three threads are then started. This means that at this point there is the main thread, and three worker threads that are Runnable (although only one thread will run at a time). The three worker threads each run the worker() function printing out either the letter A, B or C ten times. This means that once started each thread will print out a string, sleep for 1 s and then wait until it is selected to run again, this is illustrated in the following diagram:

- 55. Notice that the main thread is finished after the worker threads have only printed out a single letter each; however as long as there is at least one non-daemon thread running the program will not terminate; as none of these threads are marked as a daemon thread the program continues until the last thread has finished printing out the tenth of its letters. Also notice how each of the threads gets a chance to run on the processor before it sleeps again; thus, we can see the letters A, B and C all mixed in together.
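Since ArgsToThread.py is not reproduced here, the following sketch reconstructs the behaviour described above under some assumptions: the sleep is shortened from 1 s to an assumed `DELAY` of 0.01 s, the printed letters are also collected in an `output` list, and the main thread joins the workers (which the described program does not need to do) so that the final newline is printed last.

```python
import time
from threading import Thread

output = []
DELAY = 0.01  # assumption: shortened from the 1 s sleep described in the text


def worker(msg, delay):
    for _ in range(10):
        print(msg, end="", flush=True)
        output.append(msg)
        time.sleep(delay)  # lets the other threads run


# The same function is reused; each thread gets its own argument values
threads = [Thread(target=worker, args=(letter, DELAY)) for letter in "ABC"]
for t in threads:
    t.start()
for t in threads:
    t.join()
print()
```

The exact interleaving of A, B and C varies from run to run, but each letter is printed ten times.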

- 56. (ix) Extending the Thread Class The second approach to creating a Thread mentioned earlier was to subclass the Thread class. To do this you must 1. Define a new subclass of Thread. 2. Override the run() method. 3. Define a new __init__() method that calls the parent class __init__() method to pass the required parameters up to the Thread class constructor. This is illustrated below where the WorkerThread class passes the name, target and daemon parameters up to the Thread super class constructor. Refer ExtendingThreadEx.py

- 57. Note that it is common to call any subclasses of the Thread class, SomethingThread, to make it clear that it is a subclass of the Thread class and should be treated as if it was a Thread (which of course it is).
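The three steps could be sketched as below. This is not the referenced ExtendingThreadEx.py: the `log` attribute and the decision to call `super().run()` (so that the target is still invoked) are assumptions made for this illustration.

```python
from threading import Thread


class WorkerThread(Thread):
    def __init__(self, name=None, target=None, daemon=None):
        # Pass the required parameters up to the Thread constructor
        super().__init__(name=name, target=target, daemon=daemon)
        self.log = []

    def run(self):
        # Overridden run(): add behaviour around the standard one
        self.log.append(f"{self.name} starting")
        super().run()  # invokes the target, if one was given
        self.log.append(f"{self.name} done")


calls = []
t = WorkerThread(name="worker", target=lambda: calls.append("target"), daemon=False)
t.start()  # start() arranges for run() to be called in the new thread
t.join()
print(t.log, calls)
```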

- 58. (x) Daemon Threads A thread can be marked as a daemon thread by setting the daemon property to True either in the constructor or later via the accessor property. Refer DaemonThreadEx.py This creates a background daemon thread that will run the function worker(). Such threads are often used for housekeeping tasks (such as background data backups etc.). As mentioned above, a daemon thread is not enough on its own to keep the current program from terminating.

- 59. This means that the daemon thread will keep looping until the main thread finishes. As the main thread sleeps for 5 s that allows the daemon thread to print out about 5 strings before the main thread terminates. This is illustrated by the output below: Starting CCCCCDone
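A shortened sketch of this behaviour follows. It is not the referenced DaemonThreadEx.py: the timings are reduced from seconds to fractions of a second, and the worker records its letters in an assumed `ticks` list (printed by the main thread) instead of printing them itself.

```python
import time
from threading import Thread

ticks = []


def worker(msg, delay):
    while True:  # never returns on its own
        ticks.append(msg)
        time.sleep(delay)


print("Starting")
d = Thread(target=worker, args=("C", 0.05), daemon=True)
d.start()
time.sleep(0.25)  # the main thread is busy for a while
print("".join(ticks) + "Done")
# When the main thread ends here, the daemon thread does not keep
# the program alive even though its loop never finishes.
```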

- 60. (xi) Naming Threads Threads can be named; which can be very useful when debugging an application with multiple threads. In the following example, three threads have been created; two have been explicitly given a name related to what they are doing while the middle one has been left with the default name. We then start all three threads and use the threading.enumerate() function to loop through all the currently live threads printing out their names: Refer NamingThreadEx.py

- 61. As you can see in addition to the worker thread and the daemon thread there is a MainThread (that initiates the whole program) and Thread-1 which is the thread referenced by the variable t2 and uses the default thread name.
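A sketch along the lines of the description is given here; it is not the referenced NamingThreadEx.py. Note, as a caveat about newer interpreters, that recent Python versions may append the target's name to the default thread name (for example "Thread-1 (worker)"), so the sketch only relies on the "Thread-" prefix.

```python
import threading
import time


def worker():
    time.sleep(0.2)


t1 = threading.Thread(name="worker", target=worker)
t2 = threading.Thread(target=worker)  # left with the default name
t3 = threading.Thread(name="daemon", target=worker, daemon=True)

for t in (t1, t2, t3):
    t.start()

# Loop through all currently live threads and collect their names
names = [t.name for t in threading.enumerate()]
print(names)

for t in (t1, t2, t3):
    t.join()
```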

- 62. (xii) Thread Local Data In some situations, each Thread requires its own copy of the data it is working with; this means that the shared (heap) memory is difficult to use as it is inherently shared between all threads. To overcome this Python provides a concept known as Thread-Local data. Thread-local data is data whose values are associated with a thread rather than with the shared memory. This idea is illustrated below:

- 64. To create thread-local data it is only necessary to create an instance of threading.local (or a subclass of this) and store attributes into it. The instances will be thread specific; meaning that one thread will not see the values stored by another thread. Refer ThreadLocalEx.py The example presented above defines two functions. The first function, show_value(), attempts to access a value in the thread local data object. If the value is not present an exception is raised (AttributeError). The show_value() function catches the exception or successfully processes the data.

- 65. The worker function calls show_value() twice, once before it sets a value in the local data object and once after. As this function will be run by separate threads, the current thread's name is printed by the show_value() function. The main function creates a local data object using the local class from the threading library. It then calls show_value() itself. Next it creates two threads to execute the worker function, passing the local_data object into them; each thread is then started. Finally, it calls show_value() again. As can be seen from the output, one thread cannot see the data set by another thread in the local_data object (even when the attribute name is the same).
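The behaviour described can be reproduced with a compact sketch. It is not the referenced ThreadLocalEx.py: here `show_value()` returns the value (or None) instead of printing it, and the results are gathered in an assumed `seen` dictionary keyed by thread name.

```python
import threading


def show_value(data):
    try:
        return data.value
    except AttributeError:
        return None  # this thread has not stored a value yet


def worker(data, value, seen):
    before = show_value(data)  # nothing set by this thread so far
    data.value = value
    seen[threading.current_thread().name] = (before, show_value(data))


local_data = threading.local()
local_data.value = "main"  # visible to the main thread only
seen = {}

threads = [
    threading.Thread(target=worker, args=(local_data, n, seen), name=f"t{n}")
    for n in (1, 2)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(seen, show_value(local_data))
```

Each worker initially finds no value, even though the main thread has already set one under the same attribute name, and the main thread's value is unchanged afterwards.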

- 66. (xiii) Timers The Timer class represents an action (or task) to run after a certain amount of time has elapsed. The Timer class is a subclass of Thread and as such also functions as an example of creating custom threads. Timers are started, as with threads, by calling their start() method. The timer can be stopped (before its action has begun) by calling the cancel() method. The interval the timer will wait before executing its action may not be exactly the same as the interval specified by the user as another thread may be running when the timer wishes to start. The signature of the Timer class constructor is: Timer(interval, function, args = None, kwargs = None)

- 67. An example of using the Timer class is given below: Refer TimerClassEx.py In this case the Timer will run the hello function after an initial delay of 5 s.
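A minimal sketch of the Timer class is shown below. It is not the referenced TimerClassEx.py: the delay is shortened from 5 s to 0.1 s, the `hello` function takes an assumed `name` argument, and a second timer is added to illustrate cancel().

```python
from threading import Timer

fired = []


def hello(name):
    fired.append(f"hello {name}")


t = Timer(0.1, hello, args=("world",))  # run hello("world") after about 0.1 s
t.start()

cancelled = Timer(5.0, hello, args=("never",))
cancelled.start()
cancelled.cancel()  # stopped before its action has begun

t.join()            # Timer is a Thread subclass, so join() is available
cancelled.join()
print(fired)
```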

- 68. (xiv) The Global Interpreter Lock The Global Interpreter Lock (or the GIL) is a global lock within the underlying CPython interpreter that protects the interpreter's internal state (such as object reference counts) from being corrupted by concurrent access. It does so by preventing multiple threads from executing Python bytecode at the same time. For the most part you do not need to worry about the GIL as it is at a lower level than the programs you will be writing. However, it is worth noting that the GIL is controversial because it prevents multithreaded Python programs from taking full advantage of multiprocessor systems in certain situations.

- 69. This is because, in order to execute, a thread must obtain the GIL, and only one thread at a time can hold the GIL (that is, the lock it represents). This means that Python acts like a single CPU machine; only one thing can run at a time. A Thread will only give up the GIL if it sleeps, has to wait for something (such as some I/O) or it has held the GIL for a certain amount of time. If the maximum time that a thread can hold the GIL has been met, the GIL is released from that thread (resulting in it stopping execution and having to wait until it has the GIL returned to it) and another thread is selected to gain the GIL and start to execute. It is thus not possible for standard Python threads running CPU-bound Python code to take advantage of the multiple CPUs typically available on modern computer hardware.
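The "certain amount of time" a thread may hold the GIL is exposed by CPython as the switch interval. The short sketch below simply reads the value used by the running interpreter; it makes no assumption about what that value is.

```python
import sys

# Time (in seconds) after which a running thread is asked to give up the GIL
interval = sys.getswitchinterval()
print(f"switch interval: {interval * 1000:.1f} ms")
```

The value can be changed with `sys.setswitchinterval()`, although this is rarely necessary.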

- 70. 3. Multiprocessing (i) Introduction (ii) The Process Class (iii) Working with the Process Class (iv) Alternative Ways to Start a Process (v) Using a Pool (vi) Exchanging Data Between Processes (vii)Sharing State Between Processes (a) Process Shared Memory

- 71. (i) Introduction The multiprocessing library supports the generation of separate (operating system level) processes to execute behaviour (such as functions or methods) using an API that is similar to the Threading API presented in the last chapter. It can be used to avoid the limitation introduced by the Global Interpreter Lock (the GIL) by using separate operating system processes rather than lightweight threads (which run within a single process). This means that the multiprocessing library allows developers to fully exploit the multiple processor environment of modern computer hardware which typically has multiple processor cores allowing multiple operations/behaviours to run in parallel; this can be very significant for data analytics, image processing, animation and games applications.

- 72. The multiprocessing library also introduces some new features, most notably the Pool object for parallelising execution of a callable object (e.g. functions and methods) that has no equivalent within the Threading API.

- 73. (ii) The Process Class The Process class is the multiprocessing library’s equivalent to the Thread class in the threading library. It can be used to run a callable object such as a function in a separate process. To do this it is necessary to create a new instance of the Process class and then call the start() method on it. Methods such as join() are also available so that one process can wait for another process to complete before continuing. The main difference is that when a new Process is created it runs within a separate process on the underlying operating system (such as Windows, Linux or macOS).

- 74. In contrast a Thread runs within the same process as the original program. This means that the process is managed and executed directly by the operating system on one of the processors that are part of the underlying computer hardware. The up-side of this is that you are able to exploit the underlying parallelism inherent in the physical computer hardware. The downside is that a Process takes more work to set up than the lighter weight Threads. The constructor for the Process class provides the same set of arguments as the Thread class, namely: class multiprocessing.Process(group=None, target=None, name=None, args=(), kwargs={}, daemon=None)

- 75. group should always be None; it exists solely for compatibility with the Threading API. target is the callable object to be invoked by the run() method. It defaults to None, meaning nothing is called. name is the process name. args is the argument tuple for the target invocation. kwargs is a dictionary of keyword arguments for the target invocation. daemon argument sets the process daemon flag to True or False. If None (the default), this flag will be inherited from the creating process.

- 76. As with the Thread class, the Process constructor should always be called using keyword arguments. The Process class also provides a similar set of methods to the Thread class: start() Start the process’s activity. This must be called at most once per process object. It arranges for the object’s run() method to be invoked in a separate process. join([timeout]) If the optional argument timeout is None (the default), the method blocks until the joined process terminates. If timeout is a positive number, it blocks at most timeout seconds. Note that the method returns None both when its process terminates and when the method times out; check the process’s exitcode to tell the two cases apart. is_alive() Return whether the process is alive. Roughly, a process object is alive from the moment the start() method returns until the child process terminates.

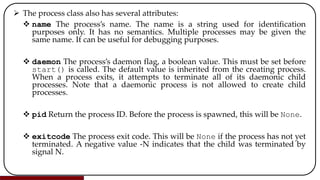

- 77. The process class also has several attributes: name The process’s name. The name is a string used for identification purposes only. It has no semantics. Multiple processes may be given the same name. It can be useful for debugging purposes. daemon The process’s daemon flag, a boolean value. This must be set before start() is called. The default value is inherited from the creating process. When a process exits, it attempts to terminate all of its daemonic child processes. Note that a daemonic process is not allowed to create child processes. pid Return the process ID. Before the process is spawned, this will be None. exitcode The process exit code. This will be None if the process has not yet terminated. A negative value -N indicates that the child was terminated by signal N.

- 78. In addition to these methods and attributes, the Process class also defines additional process related methods including terminate() Terminate the process. kill() Same as terminate() except that on Unix the SIGKILL signal is used instead of the SIGTERM signal. close() Close the Process object, releasing all resources associated with it. ValueError is raised if the underlying process is still running. Once close() returns successfully, most of the other methods and attributes of the Process object will raise a ValueError.

- 79. (iii) Working with the Process Class The following simple program creates three Process objects; each runs the function worker(), with the string arguments A, B and C respectively. These three process objects are then started using the start()method. Refer ProcessClassEx.py It is essentially the same as the equivalent program for threads but with the Process class being used instead of the Thread class. The main difference between the Thread and Process versions is that the Process version runs the worker function in separate processes whereas in the Thread version all the Threads share the same process.
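
ProcessClassEx.py is not shown on the slides; the sketch below is one way the three-process program described above could be written. The body of worker() and the `main` helper are assumptions for illustration.

```python
from multiprocessing import Process
import os

def worker(msg):
    # Runs in a child process; report which process is doing the work.
    print(f"worker {msg}: pid={os.getpid()}, parent pid={os.getppid()}")

def main():
    # One Process per label; always pass constructor arguments by keyword.
    processes = [Process(target=worker, args=(label,)) for label in ("A", "B", "C")]
    for p in processes:
        p.start()
    for p in processes:
        p.join()  # wait for each child to terminate
    return [p.exitcode for p in processes]

if __name__ == "__main__":
    print("main pid:", os.getpid())
    print("exit codes:", main())
```

The `if __name__ == "__main__":` guard matters here: with the 'spawn' start method the child re-imports the main module, and the guard stops it from starting further processes.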

- 80. (iv) Alternative Ways to Start a Process When the start() method is called on a Process, three different approaches to starting the underlying process are available. These approaches can be set using the multiprocessing.set_start_method() function, which takes a string indicating the approach to use. The actual process initiation mechanisms available depend on the underlying operating system: 'spawn' The parent process starts a fresh Python interpreter process. The child process will only inherit those resources necessary to run the process object's run() method. In particular, unnecessary file descriptors and handles from the parent process will not be inherited. Starting a process using this method is rather slow compared to using fork or forkserver. Available on Unix and Windows. This is the default on Windows and, since Python 3.8, on macOS.

- 81. 'fork' The parent process uses os.fork() to fork the Python interpreter. The child process, when it begins, is effectively identical to the parent process. All resources of the parent are inherited by the child process. Available only on Unix type operating systems. This was historically the default on Unix and Linux; on macOS the default has been 'spawn' since Python 3.8, and Python 3.14 changed the default on other POSIX platforms to 'forkserver'. 'forkserver' In this case a server process is started. From then on, whenever a new process is needed, the parent process connects to the server and requests that it fork a new process. The fork server process is single threaded, so it is safe for it to use os.fork(). No unnecessary resources are inherited. Available on Unix style platforms which support passing file descriptors over Unix pipes. The set_start_method() function should be used to set the start method (and this should only be called once within a program). Refer SetStartEx.py

- 82. Note that the parent process and current process ids are printed out for the worker() function, while the main() function prints out only its own id. This shows that the main application's process id is the same as the worker process's parent id. Alternatively, it is possible to use the get_context() function to obtain a context object. Context objects have the same API as the multiprocessing module and allow you to use multiple start methods in the same program, for example: ctx = multiprocessing.get_context('spawn') q = ctx.Queue() p = ctx.Process(target=foo, args=(q,))

- 83. (v) Using a Pool Creating Processes is expensive in terms of computer resources. It would therefore be useful to be able to reuse processes within an application. The Pool class provides such reusable processes. The Pool class represents a pool of worker processes that can be used to perform a set of concurrent, parallel operations. The Pool provides methods which allow tasks to be offloaded to these worker processes. The Pool class provides a constructor which takes a number of arguments: class multiprocessing.pool.Pool(processes, initializer, initargs, maxtasksperchild, context)

- 84. These represent: processes is the number of worker processes to use. If processes is None then the number returned by os.cpu_count() is used. initializer If initializer is not None then each worker process will call initializer(*initargs) when it starts. maxtasksperchild is the number of tasks a worker process can complete before it will exit and be replaced with a fresh worker process, to enable unused resources to be freed. The default maxtasksperchild is None, which means worker processes will live as long as the pool. context can be used to specify the context used for starting the worker processes. Usually a pool is created using the function multiprocessing.Pool(). Alternatively the pool can be created using the Pool() method of a context object. The Pool class provides a range of methods that can be used to submit work to the worker processes managed by the pool. Note that the methods of the Pool object should only be called by the process which created the pool.

- 85. The following diagram illustrates the effect of submitting some work or task to the pool. From the list of available processes, one process is selected, and the task is passed to the process. The process will then execute the task. On completion any results are returned, and the process is returned to the available list. If when a task is submitted to the pool, there are no available processes then the task will be added to a wait queue until such time as a process is available to handle the task.

- 87. The simplest of the methods provided by the Pool for work submission is the map method: pool.map(func, iterable, chunksize=None) This method returns a list of the results obtained by executing the function in parallel against each of the items in the iterable parameter. The func parameter is the callable object to be executed (such as a function or a method). The iterable supplies the values to pass to the function, one item per call. This method chops the iterable into a number of chunks which it submits to the process pool as separate tasks. The (approximate) size of these chunks can be specified by setting chunksize to a positive integer. The method blocks until the result is ready.

- 88. The following sample program illustrates the basic use of the Pool and the map() method. Refer PoolEx.py Note that the Pool object must be closed once you have finished with it; we are therefore using the ‘with as’ statement described earlier in this book to handle the Pool resource cleanly (it will ensure the Pool is closed when the block of code within the with as statement is completed). As can be seen from this output the map() function is used to run six different instances of the worker() function with the values provided by the list of integers. Each instance is executed by a worker process managed by the Pool.
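
PoolEx.py is not included on the slides. As a rough sketch of the pattern described, assuming a worker() that squares its argument and returns the result rather than printing it:

```python
from multiprocessing import Pool

def worker(x):
    # Hypothetical task: square the input value.
    return x * x

def main():
    # Four worker processes share six tasks; the with statement
    # cleans up the pool when the block ends.
    with Pool(processes=4) as pool:
        return pool.map(worker, [0, 1, 2, 3, 4, 5])

if __name__ == "__main__":
    print(main())  # [0, 1, 4, 9, 16, 25]
```

map() returns the results in the same order as the input list, regardless of which worker process finished first.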

- 89. However, note that the Pool only has 4 worker processes; this means that the last two instances of the worker function must wait until two of the worker Processes have finished the work they are doing and can be reused. This can act as a way of throttling, or controlling, how much work is done in parallel. A variant on the map() method is the imap_unordered() method. This method also applies a given function to an iterable but does not attempt to maintain the order of the results. The results are accessible via the iterable returned by the method. This may improve the performance of the resulting program. The following program modifies the worker() function to return its result rather than print it. These results are then accessible by iterating over them as they are produced via a for loop.
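
The program itself did not survive on the slides; a minimal sketch of the imap_unordered() variant, assuming the same squaring worker() as an illustrative task, might be:

```python
from multiprocessing import Pool

def worker(x):
    # Hypothetical task: return the result instead of printing it.
    return x * x

def main():
    results = []
    with Pool(processes=4) as pool:
        # Results arrive in completion order, not input order.
        for result in pool.imap_unordered(worker, [0, 1, 2, 3, 4, 5]):
            results.append(result)
    return results

if __name__ == "__main__":
    print(sorted(main()))
```

The results are sorted before printing only because their arrival order can differ from run to run.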

- 90. A further method available on the Pool class is the Pool.apply_async() method. This method allows operations/functions to be executed asynchronously allowing the method calls to return immediately. That is as soon as the method call is made, control is returned to the calling code which can continue immediately. Any results to be collected from the asynchronous operations can be obtained either by providing a callback function or by using the blocking get() method to obtain a result. Two examples are shown below, the first uses the blocking get() method. This method will wait until a result is available before continuing. The second approach uses a callback function. The callback function is called when a result is available; the result is passed into the function. Refer PoolAsync.py
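
PoolAsync.py is not reproduced here, so the following sketch only illustrates the two approaches described. The names `collected`, `collect_result` and the squaring worker() are assumptions for illustration.

```python
from multiprocessing import Pool

collected = []  # hypothetical store filled in by the callback

def worker(x):
    return x * x

def collect_result(result):
    # Callback: invoked in the parent process once a result is available.
    collected.append(result)

def main():
    with Pool(processes=2) as pool:
        # Approach 1: apply_async() returns at once; get() blocks for the result.
        async_result = pool.apply_async(worker, (6,))
        blocking_value = async_result.get(timeout=10)
        # Approach 2: the result is delivered to a callback function.
        pool.apply_async(worker, (4,), callback=collect_result)
        pool.close()  # no more tasks will be submitted
        pool.join()   # wait for outstanding work (and its callback)
    return blocking_value, list(collected)

if __name__ == "__main__":
    print(main())
```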

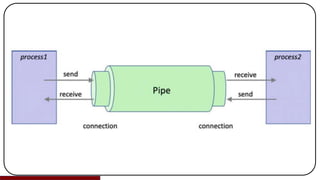

- 91. (vi) Exchanging Data Between Processes In some situations, it is necessary for two processes to exchange data. However, the two process objects do not share memory as they are running in separate operating system level processes. To get around this the multiprocessing library provides the Pipe() function. The Pipe() function returns a pair of connection.Connection objects connected by a pipe which by default is duplex (two-way). The two connection objects returned by Pipe() represent the two ends of the pipe. Each connection object has send() and recv() methods (among others). This allows one process to send data via the send() method of one connection object. In turn a second process can receive that data via the recv() method of the other connection object. This is illustrated below:

- 93. Once a program has finished with a connection it should be closed using close(). The following program illustrates how pipe connections are used: Refer DataExchange.py Note that data in a pipe may become corrupted if two processes try to read from or write to the same end of the pipe at the same time. However, there is no risk of corruption from processes using different ends of the pipe at the same time.
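
DataExchange.py is not shown on the slides. A small sketch of the idea, with a hypothetical sender() function and message text, could look like this:

```python
from multiprocessing import Pipe, Process

def sender(conn):
    # Child side: send one message through its end of the pipe, then close it.
    conn.send("Hello from the child")
    conn.close()

def main():
    parent_conn, child_conn = Pipe()  # duplex (two-way) by default
    p = Process(target=sender, args=(child_conn,))
    p.start()
    message = parent_conn.recv()      # blocks until data arrives
    p.join()
    parent_conn.close()
    return message

if __name__ == "__main__":
    print(main())
```

Each process uses only its own end of the pipe, which avoids the corruption risk mentioned above.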

- 94. (vii) Sharing State Between Processes In general, if it can be avoided, then you should not share state between separate processes. However, if it is unavoidable then the multiprocessing library provides two ways in which state (data) can be shared: Shared Memory (as supported by multiprocessing.Value and multiprocessing.Array) and a Server Process.

- 95. (a) Process Shared Memory Data can be stored in a shared memory map using a multiprocessing.Value or multiprocessing.Array. This data can be accessed by multiple processes. The constructor for the multiprocessing.Value type is: multiprocessing.Value (typecode_or_type, *args, lock = True) Where: typecode_or_type determines the type of the returned object: it is either a ctypes type or a one character typecode. For example, ‘d’ indicates a double precision float and ‘i’ indicates a signed integer.

- 96. *args is passed on to the constructor for the type. lock If lock is True (the default) then a new recursive lock object is created to synchronise access to the value. If lock is False then access to the returned object will not be automatically protected by a lock, so it will not necessarily be process-safe. The constructor for multiprocessing.Array is multiprocessing.Array(typecode_or_type, size_or_initializer, lock=True)

- 97. Where: typecode_or_type determines the type of the elements of the returned array. size_or_initializer If size_or_initializer is an integer, then it determines the length of the array, and the array will be initially zeroed. Otherwise, size_or_initializer is a sequence which is used to initialise the array and whose length determines the length of the array. lock If lock is True (the default) then a new lock object is created to synchronise access to the value. If lock is False then access to the returned object will not be automatically protected by a lock, so it will not necessarily be “process-safe”. Refer ProcessSharedMemoryEx.py
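
ProcessSharedMemoryEx.py is not included; the sketch below shows one plausible use of Value and Array. The worker() body and the initial values are illustrative assumptions.

```python
from multiprocessing import Array, Process, Value

def worker(counter, data):
    # Hold the Value's own lock so the read-modify-write is process-safe.
    with counter.get_lock():
        counter.value += 1
    # Double every element of the shared array in place.
    for i in range(len(data)):
        data[i] = data[i] * 2

def main():
    counter = Value('i', 0)             # shared signed integer
    data = Array('d', [1.0, 2.0, 3.0])  # shared array of doubles
    p = Process(target=worker, args=(counter, data))
    p.start()
    p.join()
    return counter.value, data[:]

if __name__ == "__main__":
    print(main())  # (1, [2.0, 4.0, 6.0])
```

The changes made in the child are visible in the parent because both objects live in shared memory rather than being copied.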

- 98. 4. Inter Thread/Process Synchronisation (i) Introduction (ii) Using a Barrier (iii) Event Signalling (iv) Synchronising Concurrent Code (v) Python Locks (vi) Python Conditions (vii) Python Semaphores (viii) The Concurrent Queue Class

- 99. (i) Introduction In this chapter we will look at several facilities supported by both the threading and multiprocessing libraries that allow for synchronisation and cooperation between Threads or Processes. Note that most of the facilities are mirrored between threading and multiprocessing so that the same basic ideas hold for both approaches with, in the main, very similar APIs. However, you should not mix and match threads and processes. If you are using Threads, then you should only use facilities from the threading library. In turn, if you are using Processes then you should only use facilities in the multiprocessing library. The examples given in this chapter will use one or other of the technologies but are relevant for both approaches.

- 100. (ii) Using a Barrier Using a threading.Barrier (or multiprocessing.Barrier) is one of the simplest ways in which the execution of a set of Threads (or Processes) can be synchronised. The threads or processes involved in the barrier are known as the parties that are taking part in the barrier. Each of the parties in the barrier can work independently until it reaches the barrier point in the code. The barrier represents an end point that all parties must reach before any further behaviour can be triggered.

- 101. At the point that all the parties reach the barrier it is possible to optionally trigger a post-phase action (also known as the barrier callback). This post-phase action represents some behaviour that should be run when all parties reach the barrier but before allowing those parties to continue. The post-phase action (the callback) executes in a single thread (or process). Once it is completed then all the parties are unblocked and may continue. This is illustrated in the following diagram. Threads t1, t2 and t3 are all involved in the barrier. When thread t1 reaches the barrier, it must wait until it is released by the barrier.

- 102. Similarly, when t2 reaches the barrier, it must wait. When t3 finally reaches the barrier, the callback is invoked. Once the callback has completed the barrier releases all three threads which are then able to continue.

- 103. An example of using a Barrier object is given below. Note that the function being invoked in each Thread must also cooperate in using the barrier as the code will run up to the barrier.wait() method and then wait until all other threads have also reached this point before being allowed to continue. The Barrier is a class that can be used to create a barrier object. When the Barrier class is instantiated, it can be provided with three parameters: Barrier(parties, action=None, timeout=None) Where, parties is the number of individual parties that will participate in the Barrier. action is a callable object (such as a function) which, when supplied, will be called after all the parties have entered the barrier and just prior to releasing them all.

- 104. timeout If a ‘timeout’ is provided, it is used as the default for all subsequent wait() calls on the barrier. Thus, in the following code b = Barrier(3, action=callback) Indicates that there will be three parties involved in the Barrier and that the callback function will be invoked when all three reach the barrier (however the timeout is left as the default value None). The Barrier object is created outside of the Threads (or Processes) but must be made available to the function being executed by the Thread (or Process). The easiest way to handle this is to pass the barrier into the function as one of the parameters; this means that the function can be used with different barrier objects depending upon the context.

- 105. An example using the Barrier class with a set of Threads is given below: Refer BarrierEx.py From this you can see that the print_it() function is run three times concurrently; all three invocations reach the barrier.wait() statement but in a different order to that in which they were started. Once the three have reached this point the callback function is executed before the print_it() function invocations can proceed. The Barrier class itself provides several methods used to manage or find out information about the barrier:

- 106. A Barrier object can be reused any number of times for the same number of Threads.

- 107. The above example could easily be changed to run using Process by altering the import statement and creating a set of Processes instead of Threads: from multiprocessing import Barrier, Process ... print('Main - Starting') b = Barrier(3, callback) t1 = Process(target=print_it, args=('A', b)) Note that you should only use threads with a threading.Barrier. In turn you should only use Processes with a multiprocessing.Barrier.
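
BarrierEx.py is not reproduced on the slides. A thread-based sketch along the lines described, in which the shared `log` list is an added assumption used to record the order of events, might be:

```python
import threading

def callback():
    # Post-phase action: runs once, in a single thread, before any release.
    print("Callback: all parties have reached the barrier")

def print_it(msg, barrier, log):
    log.append(f"{msg} waiting")
    barrier.wait()  # blocks until all three parties arrive
    log.append(f"{msg} released")

def main():
    log = []
    barrier = threading.Barrier(3, action=callback)
    threads = [threading.Thread(target=print_it, args=(label, barrier, log))
               for label in ("A", "B", "C")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log

if __name__ == "__main__":
    for line in main():
        print(line)
```

All three "waiting" entries always appear before any "released" entry, because no thread can pass barrier.wait() until the last party has arrived.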

- 108. (iii) Event Signalling Although the point of using multiple Threads or Processes is to execute separate operations concurrently, there are times when it is important to be able to allow two or more Threads or Processes to cooperate on the timing of their behaviour. The Barrier object presented above is a relatively high-level way to do this; however, in some cases finer grained control is required. The threading.Event or multiprocessing.Event classes can be used for this purpose. An Event manages an internal flag that callers can either set() or clear().

- 109. Other threads can wait() for the flag to be set(), effectively blocking their own progress until allowed to continue by the Event. The internal flag is initially set to False, which ensures that if a task gets to the Event before it is set then it must wait. You can in fact invoke wait() with an optional timeout. If you do not include the optional timeout, then wait() will wait forever, while wait(timeout) will wait up to the timeout given in seconds. If the timeout is reached, then the wait method returns False; otherwise wait returns True. As an example, the following diagram illustrates two processes sharing an event object. The first process runs a function that waits for the event to be set. In turn the second process runs a function that will set the event and thus release the waiting process. Refer EventEx.py

- 111. To change this to use Threads we would merely need to change the import and to create two Threads: from threading import Thread, Event ... print('Starting') event = Event() t1 = Thread(target=wait_for_event, args=[event]) t1.start() t2 = Thread(target=set_event, args=[event]) t2.start() t1.join() print('Done')
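
EventEx.py is not shown, so the following is a self-contained thread-based sketch of the two cooperating functions. The function bodies, the `log` list and the delays are assumptions for illustration.

```python
import threading
import time

def wait_for_event(event, log):
    log.append("waiting")
    signalled = event.wait(timeout=5)  # True if set, False on timeout
    log.append(f"event received: {signalled}")

def set_event(event, log):
    time.sleep(0.1)  # simulate some work before signalling
    log.append("setting")
    event.set()      # releases every thread blocked in wait()

def main():
    log = []
    event = threading.Event()
    t1 = threading.Thread(target=wait_for_event, args=(event, log))
    t2 = threading.Thread(target=set_event, args=(event, log))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return log

if __name__ == "__main__":
    print(main())
```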

- 112. (iv) Synchronising Concurrent Code It is not uncommon to need to ensure that critical regions of code are protected from concurrent execution by multiple Threads or Processes. These blocks of code typically involve the modification of, or access to, shared data. It is therefore necessary to ensure that only one Thread or Process is updating a shared object at a time and that consumer threads or processes are blocked while this update is occurring. This situation is most common where one or more Threads or Processes are the producers of data and one or more other Threads or Processes are the consumers of that data.

- 113. This is illustrated in the following diagram.

- 114. In this diagram the Producer is running in its own Thread (although it could also run in a separate Process) and places data onto some common shared data container. Subsequently a number of independent Consumers can consume that data when it is available and when they are free to process the data. However, there is no point in the consumers repeatedly checking the container for data as that would be a waste of resources (for example in terms of executing code on a processor and of context switching between multiple Threads or Processes). We therefore need some form of notification or synchronisation between the Producer and the Consumer to manage this situation. Python provides several classes in the threading (and also in the multiprocessing) library that can be used to manage critical code blocks. These classes include Lock, Condition and Semaphore.

- 115. (v) Python Locks The Lock class defined (both in the threading and the multiprocessing libraries) provides a mechanism for synchronising access to a block of code. The Lock object can be in one of two states locked and unlocked (with the initial state being unlocked). The Lock grants access to a single thread at a time; other threads must wait for the Lock to become free before progressing. The Lock class provides two basic methods for acquiring the lock (acquire()) and releasing (release()) the lock.

- 116. When the state of the Lock object is unlocked, then acquire() changes the state to locked and returns immediately. When the state is locked, acquire() blocks until a call to release() in another thread changes it to unlocked, then the acquire() call resets it to locked and returns. The release() method should only be called in the locked state; it changes the state to unlocked and returns immediately. If an attempt is made to release an unlocked lock, a RuntimeError will be raised. An example of using a Lock object is shown below: Refer LockEx.py

- 117. The SharedData class presented above uses locks to control access to critical blocks of code, specifically to the read_value() and the change_value() methods. The Lock object is held internally to the SharedData object and both methods acquire the lock before performing their behaviour and must release the lock after use. The read_value() method does this explicitly using try: finally: blocks while the change_value() method uses a with statement (as the Lock type supports the Context Manager Protocol). Both approaches achieve the same result but the with statement style is more concise. The SharedData class is used below with two simple functions. In this case the SharedData object has been defined as a global variable but it could also have been passed into the reader() and updater() functions as an argument.

- 118. Both the reader and updater functions loop, attempting to call the read_value() and change_value() methods on the shared_data object. As both methods use a lock to control access to the methods, only one thread can gain access to the locked area at a time. This means that the reader() function may start to read data before the updater() function has changed the data (or vice versa). This is indicated by the output where the reader thread accesses the value ‘0’ twice before the updater records the value ‘1’. However, the updater() function runs a second time before the reader gains access to locked block of code which is why the value 2 is missed. Depending upon the application this may or may not be an issue.

- 119. Lock objects are not re-entrant: a Lock can only be held once. If a thread attempts to acquire a Lock that it already holds, no exception is raised; instead the second acquire() call blocks forever (a deadlock), because the only thread that could release the lock is the one that is waiting. If it is necessary for the same thread to re-acquire a lock then the threading.RLock class should be used. This is a Re-entrant Lock and allows the same Thread (or Process) to acquire a lock multiple times. The code must however release the lock as many times as it has acquired it.
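
LockEx.py is not reproduced on the slides. The sketch below reconstructs a SharedData class in the spirit of the description (one method using try/finally, the other a with statement) and adds a small RLock demonstration. The updater() signature, thread counts and `reentrant_demo` helper are illustrative assumptions.

```python
import threading

class SharedData:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def read_value(self):
        # Explicit acquire/release, with release guaranteed by finally.
        self._lock.acquire()
        try:
            return self.value
        finally:
            self._lock.release()

    def change_value(self):
        # Same protection, expressed with the Context Manager Protocol.
        with self._lock:
            self.value += 1

def updater(shared, count):
    for _ in range(count):
        shared.change_value()

def main():
    shared = SharedData()
    threads = [threading.Thread(target=updater, args=(shared, 1000)) for _ in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared.read_value()

def reentrant_demo():
    # An RLock may be acquired again by the thread that already holds it.
    rlock = threading.RLock()
    with rlock:
        with rlock:
            return True

if __name__ == "__main__":
    print(main())  # 4000
    print(reentrant_demo())
```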

- 120. (vi) Python Conditions Conditions can be used to synchronise the interaction between two or more Threads or Processes. Conditions objects support the concept of a notification model; ideal for a shared data resource being accessed by multiple consumers and producers. A Condition can be used to notify one or all of the waiting Threads or Processes that they can proceed (for example to read data from a shared resource).

- 121. The methods available that support this are: notify(), which notifies one waiting thread which can then continue; notify_all(), which notifies all waiting threads that they can continue; and wait(), which causes a thread to wait until it has been notified that it can continue. A Condition is always associated with an internal lock, which must be held by the calling thread whenever the wait() and notify() methods are called. The Condition supports the Context Manager Protocol and can therefore be used via a with statement (which is the most typical way to use a Condition) to obtain this lock.

- 122. For example, to obtain the condition lock and call the wait method we might write: with condition: condition.wait() print('Now we can proceed') The condition object is used in the following example to illustrate how a producer thread and two consumer threads can cooperate. A DataResource class has been defined which will hold an item of data that will be shared between a producer and a set of consumers. It also (internally) defines a Condition attribute. Note that this means that the Condition is completely internalised to the DataResource class; external code does not need to know, or be concerned with, the Condition and its use.

- 123. Instead external code can merely call the consumer() and producer() methods in separate Threads as required. The consumer() method uses a with statement to obtain the (internal) lock on the Condition object before waiting to be notified that the data is available. In turn the producer() method also uses a with statement to obtain a lock on the condition object before generating the data attribute value and then notifying anything waiting on the condition that they can proceed. Note that although the consumer method obtains a lock on the condition object, if it has to wait it will release the lock and re-obtain the lock once it is notified that it can continue. This is a subtlety that is often missed. Refer ConditionsEx.py
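
ConditionsEx.py is not included on the slides. A minimal sketch of a DataResource class along the lines described follows; the loop around wait(), the value 42 and the `main` helper are assumptions added for illustration. The loop guards against a consumer starting to wait only after the producer has already notified.

```python
import threading

class DataResource:
    def __init__(self):
        self.data = None
        self._condition = threading.Condition()  # internal to the class

    def consumer(self):
        with self._condition:
            # wait() releases the lock while blocked and re-acquires it
            # before returning; re-check the data after each wake-up.
            while self.data is None:
                self._condition.wait()
            return self.data

    def producer(self, value):
        with self._condition:
            self.data = value
            self._condition.notify_all()  # wake every waiting consumer

def main():
    resource = DataResource()
    results = []

    def consume():
        results.append(resource.consumer())

    consumers = [threading.Thread(target=consume) for _ in range(2)]
    for t in consumers:
        t.start()
    producer = threading.Thread(target=resource.producer, args=(42,))
    producer.start()
    for t in consumers + [producer]:
        t.join()
    return results

if __name__ == "__main__":
    print(main())  # [42, 42]
```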

- 124. (vii) Python Semaphores The Python Semaphore class implements Dijkstra’s counting semaphore model. In general, a semaphore is like an integer variable, its value is intended to represent a number of available resources of some kind. There are typically two operations available on a semaphore; these operations are acquire() and release() (although in some libraries Dijkstra’s original names of p() and v() are used, these operation names are based on the original Dutch phrases).

- 125. The acquire() operation subtracts one from the value of the semaphore, unless the value is 0, in which case it blocks the calling thread until the semaphore’s value increases above 0 again. The release() operation adds one to the value, indicating a new instance of the resource has been added to the pool. Both the threading.Semaphore and the multiprocessing.Semaphore classes also support the Context Manager Protocol. An optional parameter used with the Semaphore constructor gives the initial value for the internal counter; it defaults to 1. If the value given is less than 0, ValueError is raised. The following example illustrates 5 different Threads all running the same worker() function.

- 126. The worker() function attempts to acquire a semaphore; if it succeeds then it continues into the with statement block; if it does not, it waits until it can acquire it. As the semaphore is initialised to 2, only two threads can hold the Semaphore at a time. The sample program, however, starts up five threads; this means that the first 2 running Threads will acquire the semaphore and the remaining three will have to wait to acquire the semaphore. Once the first two release the semaphore a further two can acquire it and so on. Refer SemaphoreEx.py
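
SemaphoreEx.py is not reproduced here. The following sketch mirrors the description (five threads, a semaphore initialised to 2); the `state` dictionary and its extra lock are assumptions added to measure how many threads are inside the protected block at once.

```python
import threading
import time

def worker(semaphore, state, state_lock):
    with semaphore:  # at most two threads get past this point at a time
        with state_lock:
            state["active"] += 1
            state["peak"] = max(state["peak"], state["active"])
        time.sleep(0.05)  # simulate using the limited resource
        with state_lock:
            state["active"] -= 1

def main():
    semaphore = threading.Semaphore(2)
    state = {"active": 0, "peak": 0}
    state_lock = threading.Lock()
    threads = [threading.Thread(target=worker, args=(semaphore, state, state_lock))
               for _ in range(5)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["peak"]

if __name__ == "__main__":
    print("peak concurrent workers:", main())
```

The reported peak never exceeds 2, whatever order the five threads happen to run in.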

- 127. (viii) The Concurrent Queue Class As might be expected, the model where a producer Thread or Process generates data to be processed by one or more Consumer Threads or Processes is so common that a higher-level abstraction is provided in Python than the use of Locks, Conditions or Semaphores; this is the blocking queue model implemented by the queue.Queue class (for Threads) and the multiprocessing.Queue class (for Processes). queue.Queue is thread safe and multiprocessing.Queue is both thread and process safe. That is, they work appropriately (using internal locks) to manage data access from concurrent Threads or Processes. An example of using a Queue to exchange data between a worker process and the main process is shown below.

- 128. The worker process executes the worker() function, sleeping for 2 s before putting the string ‘Hello World’ on the queue. The main application function sets up the queue and creates the process. The queue is passed into the process as one of its arguments. The process is then started. The main process then waits until data is available on the queue via the (blocking) get() method. Once the data is available it is retrieved and printed out before the main process terminates. Refer QueueEx.py
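
QueueEx.py is not included here. As a hedged illustration of the same hand-off, the sketch below uses a Thread and queue.Queue rather than a Process and multiprocessing.Queue, so that it runs the same way on every platform. The shortened delay and the `main` helper are assumptions. The multiprocessing version has the same shape, with `multiprocessing.Process` and `multiprocessing.Queue` swapped in and the start-up code kept under an `if __name__ == "__main__":` guard.

```python
import queue
import threading
import time


def worker(q, delay=0.2):
    """Producer: pause (standing in for the 2 s sleep), then publish a message."""
    time.sleep(delay)
    q.put("Hello World")


def main():
    q = queue.Queue()                       # thread-safe blocking queue
    t = threading.Thread(target=worker, args=(q,))
    t.start()
    message = q.get()                       # blocks until the worker calls put()
    t.join()
    return message


if __name__ == "__main__":
    print(main())
```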

- 129. However, this does not make it that clear how the execution of the two processes interweaves. The following diagram illustrates this graphically:

- 130. In the above diagram the main process waits for a result to be returned from the queue following the call to the get() method; while it is waiting it is blocked and is not consuming processor time. In turn the worker process sleeps for two seconds before putting some data onto the queue (via put(‘Hello World’)). After this value is sent to the Queue, it is returned to the main process, which is woken up (moved out of the waiting state) and can continue to process the rest of the main function.

- 131. 5. Futures (i) Introduction (ii) The Need for a Future (iii) Futures in Python (a) Future Creation (b) Simple Example Future (iv) Running Multiple Futures (a) Waiting for All Futures to Complete (b) Processing Results as Completed (v) Processing Future Results Using a Callback

- 132. (i) Introduction A future is a thread (or process) that promises to return a value in the future, once the associated behaviour has completed. It is thus a future value. It provides a very simple way of firing off behaviour that will either be time consuming to execute or may be delayed by expensive operations such as Input/Output, and which could otherwise slow down the execution of other elements of a program.

- 133. (ii) The Need for a Future In a normal method or function invocation, the method or function is executed in line, with the invoking code (the caller) having to wait until the function or method (the callee) returns. Only after this is the caller able to continue to the next line of code and execute that. In many (most) situations this is exactly what you want, as the next line of code may depend on a result returned from the previous line of code. However, in some situations the next line of code is independent of the previous line of code. For example, let us assume that we are populating a User Interface (UI).

- 134. The first line of code may read the name of the user from some external data source (such as a database) and then display it within a field in the UI. The next line of code may then add today's date to another field in the UI. These two lines of code are independent of each other and could be run concurrently/in parallel with each other. In this situation we could use either a Thread or a Process to run the two lines of code independently of the caller, thus achieving a level of concurrency and allowing the caller to carry on to the third line of code. However, neither the Thread nor the Process by default provides a simple mechanism for obtaining a result from such an independent operation.

- 135. This may not be a problem as operations may be self-contained; for example, they may obtain data from the database or from today’s date and then update a UI. However, in many situations the calculation will return a result which needs to be handled by the original invoking code (the caller). This could involve performing a long running calculation and then using the result returned to generate another value or update another object. A Future is an abstraction that simplifies the definition and execution of such concurrent tasks. Futures are available in many different languages, including Python, Java, Scala and C++.

- 136. When using a Future, a callable object (such as a function) is passed to the Future, which executes the behaviour either as a separate Thread or as a separate Process and can then return a result once it is generated. The result can either be handled by a callback function (that is invoked when the result is available) or by using an operation that will wait for a result to be provided.

- 137. (iii) Futures in Python The concurrent.futures library was introduced into Python in version 3.2 (a backport for Python 2 is also available as a separate package). The concurrent.futures library provides the Future class and a high-level API for working with Futures. The concurrent.futures.Future class encapsulates the asynchronous execution of a callable object (e.g. a function or method). The Future class provides a range of methods that can be used to obtain information about the state of the future, retrieve results or cancel the future: cancel(): Attempt to cancel the Future. If the Future is currently being executed and cannot be cancelled then the method will return False, otherwise the call will be cancelled and the method will return True.

- 138. cancelled(): Returns True if the Future was successfully cancelled. running(): Returns True if the Future is currently being executed and cannot be cancelled. done(): Returns True if the Future was successfully cancelled or finished running. result(timeout=None): Returns the value returned by the Future. If the Future hasn’t yet completed then this method will wait up to timeout seconds. If the call hasn’t completed in timeout seconds, then a TimeoutError will be raised. timeout can be an int or float. If timeout is not specified or None, there is no limit to the wait time. If the future is cancelled before completing then a CancelledError will be raised. If the call raised an exception, this method will raise the same exception. It should be noted, however, that Future instances should not be created directly; rather they should be created via the submit method of an appropriate executor.
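
The methods listed above can be exercised with a short sketch. It is an illustration only: `slow_square`, `failing`, `demo` and the timings are hypothetical names and values, not part of the lecture code. A single-worker pool is used so that the second task is still queued, and therefore cancellable, while the first is running.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError
import time


def slow_square(x, delay=0.3):
    time.sleep(delay)                 # simulate a slow operation
    return x * x


def failing():
    raise ValueError("boom")


def demo():
    seen = {}
    with ThreadPoolExecutor(max_workers=1) as pool:
        first = pool.submit(slow_square, 4)
        second = pool.submit(slow_square, 5)    # queued behind `first`

        # `second` has not started yet, so it can still be cancelled.
        seen["second_cancel_returned"] = second.cancel()
        seen["second_cancelled"] = second.cancelled()

        # `first` needs about 0.3 s, so a very short timeout expires.
        try:
            first.result(timeout=0.01)
        except TimeoutError:
            seen["timed_out"] = True

        seen["first_result"] = first.result()   # no timeout: wait until finished
        seen["first_done"] = first.done()

        # An exception raised in the callable is re-raised by result().
        bad = pool.submit(failing)
        try:
            bad.result()
        except ValueError as exc:
            seen["error"] = str(exc)
    return seen


if __name__ == "__main__":
    print(demo())
```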

- 139. (a) Future Creation Futures are created and executed by Executors. An Executor provides two methods that can be used to execute a Future (or Futures), submit() and map(), and one to shut down the executor, shutdown(). At the root of the executor class hierarchy is the concurrent.futures.Executor abstract class. It has two subclasses: the ThreadPoolExecutor and the ProcessPoolExecutor. The ThreadPoolExecutor uses threads to execute the futures while the ProcessPoolExecutor uses separate processes. You can therefore choose how you want the Future to be executed by specifying one or other of these executors.
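
As a rough sketch of the executor interface, the snippet below calls the three Executor methods, submit(), map() and shutdown(), on a ThreadPoolExecutor. The `square` function and the pool size are assumptions chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x):
    return x * x


def main():
    pool = ThreadPoolExecutor(max_workers=2)
    try:
        # submit() schedules one call and returns a Future for it.
        single = pool.submit(square, 3).result()
        # map() schedules one call per item and yields results in input order.
        many = list(pool.map(square, [1, 2, 3, 4]))
    finally:
        # shutdown() releases the pool's threads once pending work is done.
        pool.shutdown(wait=True)
    return single, many


if __name__ == "__main__":
    print(main())
```

In practice the executor is often used in a with statement, which calls shutdown() automatically on exit.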

- 140. (b) Simple Example Future To illustrate these ideas, we will look at a very simple example of using a Future. To do this we will use a simple worker function; similar to that used in the previous chapters: Refer FutureEx.py The only difference with this version of worker is that it also returns a result which is the number of times that the worker printed out the message.

- 141. We can make the invocation of this method into a Future. To do this we use a ThreadPoolExecutor imported from the concurrent.futures module. We will then submit the worker function to the pool for execution. This returns a reference to a Future which we can use to obtain the result: Refer ThreadPoolExecuterEx.py

- 142. Notice how the output from the main program and the worker is interwoven with two ‘A’s being printed out before the message starting ‘Obtained a…’. In this case a new ThreadPoolExecutor is being created with one thread in the pool (typically there would be multiple threads in the pool but one is being used here for illustrative purposes). The submit() method is then used to submit the function worker with the parameter ‘A’ to the ThreadPoolExecutor for it to schedule execution of the function. The submit() method returns a Future object. The main program then waits for the future object to return a result (by calling the result() method on the future). This method can also take a timeout. To change this example to use Processes rather than Threads all that is needed is to change the pool executor to a ProcessPoolExecutor:
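
The referenced files are not reproduced in these notes. The following is a minimal sketch of what such a program might look like. The body of `worker`, its `repeats` parameter and the sleep interval are assumptions based only on the description above (print a message several times, return the count).

```python
from concurrent.futures import ThreadPoolExecutor
import time


def worker(msg, repeats=5):
    """Print msg `repeats` times and return how many times it was printed."""
    for _ in range(repeats):
        time.sleep(0.05)
        print(msg, end="", flush=True)
    return repeats


def main():
    print("Starting")
    with ThreadPoolExecutor(max_workers=1) as pool:   # one thread in the pool
        future = pool.submit(worker, "A")             # returns immediately
        print("\nObtained a", type(future).__name__)
        result = future.result()                      # blocks until worker returns
    print("\nResult:", result)
    return result


if __name__ == "__main__":
    main()
```

How the ‘A’s interleave with the ‘Obtained a…’ line depends on thread scheduling and may differ from run to run.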

- 143. Refer ProcessPoolExecuter.py The only difference is that in this particular run the message starting ‘Obtained a..’ is printed out before any of the ‘A’s are printed; this may be due to the fact that a Process initially takes longer to set up than a Thread.

- 144. (iv) Running Multiple Futures Both the ThreadPoolExecutor and the ProcessPoolExecutor can be configured to support multiple Threads/Processes via the pool. Each task that is submitted to the pool will then run within a separate Thread/Process. If more tasks are submitted than there are Threads/Processes available, then the submitted task will wait for the first available Thread/Process and then be executed. This can act as a way of managing the amount of concurrent work being done.

- 145. In the following example, the worker() function is submitted to the pool four times, but the pool is configured to use three threads. Thus the fourth worker will need to wait until one of the first three completes before it is able to execute: Refer MultipleFuturesEx.py When this runs we can see that the Futures for A, B and C all run concurrently but D must wait until one of the others finishes. The main thread also waits for future4 to finish, as it requests the result, which is a blocking call that will only return once the future has completed and generated a result. Again, to use Processes rather than Threads all we need to do is to replace the ThreadPoolExecutor with the ProcessPoolExecutor: Refer MultipleFuturesEx1.py
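
A hedged sketch of this situation follows. MultipleFuturesEx.py is not shown here, so the timestamp bookkeeping (`started`, `finished`) and the task duration are assumptions, added only to make the waiting visible.

```python
from concurrent.futures import ThreadPoolExecutor
import threading
import time

started = {}                     # task name -> time it began running
finished = {}                    # task name -> time it stopped running
record_lock = threading.Lock()


def worker(name, duration=0.2):
    with record_lock:
        started[name] = time.monotonic()
    time.sleep(duration)         # simulate work
    with record_lock:
        finished[name] = time.monotonic()
    return name


def main():
    with ThreadPoolExecutor(max_workers=3) as pool:       # three threads only
        futures = [pool.submit(worker, name) for name in "ABCD"]
        last = futures[3].result()                         # blocks until D is done
    return last


if __name__ == "__main__":
    print("last result:", main())
    first_free = min(finished[n] for n in "ABC")
    print("D waited for a free thread:", started["D"] >= first_free)
```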

- 146. (a) Waiting for All Futures to Complete It is possible to wait for all futures to complete before progressing. In the previous section it was assumed that future4 would be the last future to complete; but in many cases it may not be possible to know which future will be the last to complete. In such situations it is very useful to be able to wait for all the futures to complete before continuing. This can be done using the concurrent.futures.wait function. This function takes a collection of futures and optionally a timeout and a return_when indicator.

- 147. wait(fs, timeout=None, return_when=ALL_COMPLETED) where: timeout can be used to control the maximum number of seconds to wait before returning. timeout can be an int or float. If timeout is not specified or None, there is no limit to the wait time. return_when indicates when this function should return. It must be one of the following constants: FIRST_COMPLETED The function will return when any future finishes or is cancelled. FIRST_EXCEPTION The function will return when any future finishes by raising an exception. If no future raises an exception, then it is equivalent to ALL_COMPLETED. ALL_COMPLETED The function will return when all futures finish or are cancelled.

- 148. The wait() function returns two sets, done and not_done. The first set contains the futures that completed (finished or were cancelled) before the wait completed. The second set, not_done, contains uncompleted futures. We can use the wait() function to modify our previous example so that we no longer rely on future4 finishing last: Refer FutureWaitEx.py Note how each future is added to the list of futures which is then passed to the wait() function.
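
A minimal sketch of this use of wait() is given below. The task names and durations are hypothetical, chosen so that the last task submitted is not the last to finish.

```python
from concurrent.futures import ThreadPoolExecutor, wait, ALL_COMPLETED
import time


def worker(name, duration):
    time.sleep(duration)
    return name


def main():
    durations = {"A": 0.3, "B": 0.1, "C": 0.2, "D": 0.05}
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = [pool.submit(worker, n, d) for n, d in durations.items()]
        # Block until every future has finished or been cancelled.
        done, not_done = wait(futures, return_when=ALL_COMPLETED)
    return sorted(f.result() for f in done), len(not_done)


if __name__ == "__main__":
    names, pending = main()
    print("completed:", names, "still pending:", pending)
```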

- 149. (b) Processing Results as Completed What if we want to process each of the results returned by our collection of futures? We could loop through the futures list in the previous section once all the results have been generated. However, this means that we would have to wait for them all to complete before processing the list. In many situations we would like to process the results as soon as they are generated, without being concerned whether that is the first, third, last or second. The concurrent.futures.as_completed() function does precisely this; it will serve up each future in turn as soon as it is completed, with all futures eventually being returned but without guaranteeing the order (just that as soon as a future has finished generating a result it will be immediately available).

- 150. In the following example, the is_even() function sleeps for a random number of seconds (ensuring that different invocations of this function will take different durations) and then calculates a result: Refer AsCompletedEx.py As you can see from this output, although the six futures were started in sequence, the results are returned in a different order (in this run the returned order was 1, 4, 5, 3, 2 and finally 6).
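
AsCompletedEx.py is not reproduced here. The sketch below is one way it could be written. The return value of `is_even` (a tuple of the number and a boolean) and the sleep range are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
import random
import time


def is_even(n):
    time.sleep(random.uniform(0.01, 0.2))    # random delay per call
    return n, n % 2 == 0


def main():
    results = []
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = [pool.submit(is_even, n) for n in range(1, 7)]
        # Futures are yielded as each one finishes, not in submission order.
        for future in as_completed(futures):
            n, even = future.result()
            print(f"{n} is even: {even}")
            results.append((n, even))
    return results


if __name__ == "__main__":
    main()
```

The order of the printed lines will usually vary between runs because of the random delays.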

- 151. (v) Processing Future Results Using a Callback An alternative to the as_completed() approach is to provide a function that will be called once a result has been generated. This has the advantage that the main program is never paused; it can continue doing whatever is required of it. The function called once the result is generated is typically known as a callback function; that is, the future calls back to this function when the result is available. Each future can have a separate callback, as the function to invoke is set on the future using the add_done_callback() method. This method takes the name of the function to invoke.

- 152. For example, in this modified version of the previous example, we specify a callback function that will be used to print the future's result. This callback function is called print_future_result(). It takes the future that has completed as its argument: Refer FutureResultEx.py When we run this, we can see that the callback function is called after the main thread has completed. Again, the order is unspecified as the is_even() function still sleeps for a random amount of time.
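
A sketch of the callback approach, under the same assumptions about `is_even` as before, is given below. The `collected` list and the final shutdown(wait=True) call are additions made only so that the sketch can report which callbacks ran. They are not implied by the original example.

```python
from concurrent.futures import ThreadPoolExecutor
import random
import threading
import time

collected = []                        # numbers whose callbacks have run
collected_lock = threading.Lock()


def is_even(n):
    time.sleep(random.uniform(0.01, 0.1))
    return n, n % 2 == 0


def print_future_result(future):
    """Callback: receives the completed future as its only argument."""
    n, even = future.result()
    with collected_lock:
        collected.append(n)
    print(f"{n} is even: {even}")


def main():
    collected.clear()
    pool = ThreadPoolExecutor(max_workers=3)
    for n in range(1, 7):
        future = pool.submit(is_even, n)
        future.add_done_callback(print_future_result)
    print("main: all tasks submitted, carrying on")
    pool.shutdown(wait=True)          # wait here only to tally the callbacks
    return sorted(collected)


if __name__ == "__main__":
    main()
```

With a ThreadPoolExecutor the callback normally runs in the worker thread that completed the future, which is why the shared list is guarded by a lock.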

- 153. 6. Concurrency with AsyncIO (i) Introduction (ii) Asynchronous IO (iii) Async IO Event Loop (iv) The Async and Await Keywords (a) Using Async and Await (v) Async IO Tasks (vi) Running Multiple Tasks (a) Collating Results from Multiple Tasks (b) Handling Task Results as They Are Made Available

- 154. (i) Introduction The Async IO facilities in Python are relatively recent additions, originally introduced in Python 3.4 and evolving up to and including Python 3.7. They comprise (as of Python 3.7) two keywords, async and await (introduced in Python 3.5 and made reserved keywords in Python 3.7), and the asyncio Python package.