Query based summarization

- 1. Query-Based Summarization Mariana Damova 30.07.2010

- 2. Outline Definition of the task DUC evaluation criteria General purpose approaches Application tailored systems Conclusion

- 3. The task of query-based summarization Producing a summary from a document or a set of documents satisfying a request for information expressed by a query. The summary is a sequence of sentences, which can be extracted from the documents, or produced with NLP techniques.

- 4. Types of summaries Summary construction methods Abstractive vs. Extractive Number of sources for the summary Single-document summaries vs. Multi-document summaries Summary trigger Generic vs. query-based Indicative Informative

- 5. Steps in the query-based summarization process Identification of relevant sections from the documents Generation of the summary

- 6. Evaluation of DUC Recall-Oriented Understudy for Gisting Evaluation (ROUGE) DUC conferences starting 2001 run by the National Institute of Standards and Technology (NIST) Coverage score: $C = \dfrac{(\text{Number of MUs marked}) \cdot E}{\text{Total number of MUs in the model summary}}$ where $E$, the ratio of completeness, ranges from 1 to 0: 1 for all, 3/4 for most, 1/2 for some, 1/4 for hardly any, and 0 for none.
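The coverage score on this slide can be computed directly from its three inputs. The sketch below is only an illustration: the function name, the dictionary of completeness labels, and the reading of MU as "model unit" are assumptions, not part of the DUC tooling.

```python
# Completeness ratios E as listed on the slide.
COMPLETENESS = {
    "all": 1.0,
    "most": 0.75,
    "some": 0.5,
    "hardly any": 0.25,
    "none": 0.0,
}


def coverage_score(marked_mus: int, total_model_mus: int, completeness: str) -> float:
    """C = (number of MUs marked) * E / (total number of MUs in the model summary)."""
    if total_model_mus <= 0:
        raise ValueError("the model summary must contain at least one MU")
    return marked_mus * COMPLETENESS[completeness] / total_model_mus
```

For instance, 4 marked MUs out of 8, judged to express "most" of the content, give a coverage of 0.375.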

- 7. Approaches based on Document graphs Ahmed A. Mohamed, Sanguthevar Rajasekaran Query-Based Summarization Based on Document Graphs (2006) The document graph is produced from a plain text document by tokenizing and parsing it into NPs. Relations of the types ISA and related_to are generated following heuristic rules. A centric graph is produced from all source documents and guides the summarizer in its search for candidate sentences to be added to the output summary. Summarization: (a) The centric graph is compared with the concepts in the query (b) The graph of the document and a graph of the query are generated, and the similarity between each sentence and the query is measured (c) A query modification technique is used by adding the graph of a selected sentence to the query graph

- 8. Approaches based on Document graphs Wauter Bosma (2005). Query-Based Summarization using Rhetorical Structure Theory Shows how answers to questions can be improved by extracting more information about the topic with summarization techniques for single-document extracts. RST (Rhetorical Structure Theory) is used to create a graph representation of the document: a weighted graph in which each node represents a sentence and the weight of an edge represents the distance between two sentences. If a sentence is relevant to an answer, a second sentence is evaluated as relevant too, based on the weight of the path between the two sentences. Two-step approach: Relations between sentences are defined in a discourse graph A graph search algorithm is used to extract the most salient sentences from the graph for the summary.
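The path-weight idea on this slide can be illustrated with a shortest-path search over a small sentence graph. This is a minimal sketch, not Bosma's implementation: Dijkstra's algorithm is swapped in as the graph search, the threshold `max_weight` is an assumed parameter, and the example graph and its weights are hypothetical.

```python
import heapq

# Hypothetical discourse graph: sentence id -> {neighbour id: edge weight (distance)}.
EXAMPLE_GRAPH = {
    "s1": {"s2": 1.0, "s3": 4.0},
    "s2": {"s1": 1.0, "s3": 1.5},
    "s3": {"s1": 4.0, "s2": 1.5, "s4": 5.0},
    "s4": {"s3": 5.0},
}


def path_weights(graph, start):
    """Dijkstra: weight of the cheapest path from `start` to each reachable sentence."""
    dist = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, weight in graph.get(node, {}).items():
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    return dist


def salient_sentences(graph, answer, max_weight):
    """Sentences whose path weight from the answer sentence is within `max_weight`."""
    dist = path_weights(graph, answer)
    close = [s for s, d in dist.items() if d <= max_weight]
    return sorted(close, key=lambda s: dist[s])
```

Calling `salient_sentences(EXAMPLE_GRAPH, "s1", 3.0)` keeps the answer sentence and the two sentences closest to it, and drops `s4`, whose cheapest path weighs 7.5.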

- 9. Approaches using linguistics John M. Conroy, Judith D. Schlesinger, Jade Goldstein Stewart (2005). CLASSY Query-Based Multi-Document Summarization. HMM (Hidden Markov Model) for sentence selection within a document and a question answering algorithm for generation of a multi-document summary Patterns with lexical cues for sentence and phrase elimination Typographic cues (title, paragraph, etc.) to detect the topic description and obtain question-answering capability Named entity identifier run on all document sets generates lists of entities for the categories of location, person, date, organization, and evaluates each topic description based on keywords After all linguistic processing is done and the query terms are generated, the HMM is used to score the individual sentences as summary or non-summary ones

- 10. Approaches using linguistics Liang Zhou, Chin-Yew Lin, Eduard Hovy (2006). Summarizing Answers for Complicated Questions. Query interpretation is used to analyze the given user profile and topic narrative for document clusters, then the summary is created The analysis is based on basic elements, a head-modifier-relation triple representation of the document content produced from a syntactic parse tree, and a set of ‘cutting rules’ that extract just the valid basic elements from the tree Scores are assigned to the sentences based on their basic elements Filtering and redundancy removal techniques are applied before generating the summary The summary consists of the top-ranked sentences, added until the required sentence limit is reached

- 11. Machine-learning approaches Jagadeesh J, Prasad Pingali, Vasudeva Varma (2007). Capturing Sentence Prior for Query-Based Multi-Document Summarization. Information retrieval techniques combined with summarization techniques New notion of sentence importance, independent of the query, incorporated into the final scoring Sentences are scored using a set of features; each feature is normalized by its maximum score over all sentences, and the final score of a sentence is calculated using a weighted linear combination of individual feature values Information measure A query-dependent ranking of a document/sentence Explicit notion of importance of a document/sentence

- 12. Machine-learning approaches Frank Schilder, Ravikumar Kondadadi (2008). FastSum: Fast and accurate query-based multi-document summarization. Word-frequency features of clusters, documents and topics Summary sentences are ranked by a regression Support Vector Machine Sentence splitting Filtering candidate sentences Computing the word frequencies in the documents of a cluster Topic title (a list of key words and phrases) Topic description (the query or queries) The features used are word-based and sentence-based Least Angle Regression: minimal set of features, fast processing times

- 13. Application Tailored Systems Subject domain ontology based approach Opinion Summarization

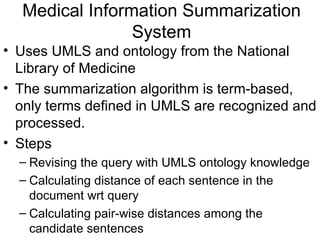

- 14. Medical Information Summarization System Uses UMLS and ontology from the National Library of Medicine The summarization algorithm is term-based: only terms defined in UMLS are recognized and processed. Steps Revising the query with UMLS ontology knowledge Calculating the distance of each sentence in the document with respect to the query Calculating pair-wise distances among the candidate sentences

- 15. Opinion summarization Sentiment summarization in the legal domain Input: opinion-related questions about a target and a set of documents that contain the answers; output: a summary for each target that summarizes the answers to the questions Semi-automatic Web blog search module FastSum Sentiment integration (sentiment tagger based on unigram term lookup, using gazetteers of positive and negative polarity-indicating terms based on the General Inquirer)

- 16. Conclusion CLASSY and FastSum score highest on the ROUGE criteria: top 4 and top 7 and 6

Editor's Notes

- #4: Automatic summarization is the process by which the information in a source text is expressed in a more concise fashion, with a minimal loss of information.

- #5: Abstractive summaries produce generated text from the important parts of the documents; extractive summaries identify important sections of the text and use them in the summary as they are. Single-document summaries represent a single document. Multi-document summaries are produced from multiple documents and have to deal with three major problems: recognizing and coping with redundancy; identifying important differences among documents; ensuring summary coherence. Generic summaries present the main topics of a given text in a concise manner; query-based summaries are constructed as an answer to an information need expressed by a user’s query. Indicative summaries point to information in the document, which helps the user decide whether the document should be read or not; informative summaries provide all the relevant information to represent the original document.

- #7: ROUGE is based on MT evaluation. In this approach human-made summaries are compared with automatic summaries based on n-gram co-occurrence statistics. Gist: "the choicest or most essential or most vital part of some idea or experience". The product of machine translation is sometimes called a "gisting translation". MT will often produce only a rough translation that will at best allow the reader to "get the gist" of the source text, but is unlikely to convey a complete understanding of it. To evaluate system performance, NIST assessors who created the "ideal" written summaries did pairwise comparisons of their summaries to the system-generated summaries, other assessors' summaries, and baseline summaries. They used the Summary Evaluation Environment. DUC evaluation: provide sets of documents and their human-made summaries, and sets of unseen documents; the ideal summary is created; pairwise comparison of the summaries. Recall at different compression ratios has been used in summarization research to measure how well an automatic system retains important content of the original documents. However, the simple sentence recall measure cannot differentiate system performance appropriately. Instead of a pure sentence recall score, we use the coverage score C.
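The n-gram co-occurrence comparison behind ROUGE can be sketched as a recall computation over reference n-grams. This is a simplified illustration, not the official ROUGE toolkit: the function names are made up here, and whitespace tokenization with lowercasing is an assumption.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate: str, references: list[str], n: int = 2) -> float:
    """Share of reference n-grams that also occur in the candidate (clipped counts)."""
    cand = ngrams(candidate.lower().split(), n)
    matched = 0
    total = 0
    for reference in references:
        ref_counts = ngrams(reference.lower().split(), n)
        total += sum(ref_counts.values())
        # Clip each match so a repeated candidate n-gram is not over-counted.
        matched += sum(min(count, cand[gram]) for gram, count in ref_counts.items())
    return matched / total if total else 0.0
```

With the reference "the cat was on the mat" and the candidate "the cat sat on the mat", three of the five reference bigrams are matched, so the bigram recall is 0.6.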

- #9: RST - a text has a kind of unity that arbitrary collections of sentences generally lack. RST offers an explanation of the coherence of texts. For every part of a coherent text, there is some function, some plausible reason for its presence, evident to readers. RST is intended to describe texts. It posits a range of possibilities of structure -- various sorts of "building blocks" which can be observed to occur in texts. These "blocks" are at two levels, the principal one dealing with "nuclearity" and "relations" (often called coherence relations in the linguistic literature).

- #10: A hidden Markov model (HMM) is a statistical model in which the system being modeled is assumed to be a Markov process with unobserved state. An HMM can be considered the simplest dynamic Bayesian network. In a regular Markov model, the state is directly visible to the observer, and therefore the state transition probabilities are the only parameters. In a hidden Markov model, the state is not directly visible, but output, dependent on the state, is visible. Each state has a probability distribution over the possible output tokens.
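How hidden states and visible outputs interact can be shown with the forward algorithm, which sums over all hidden state sequences to get the probability of an observed output sequence. The two states and every probability below are hypothetical values chosen for illustration; they are not the parameters of CLASSY.

```python
# Hypothetical two-state model: is a sentence a summary sentence or not?
STATES = ("summary", "other")
START = {"summary": 0.6, "other": 0.4}
TRANS = {
    "summary": {"summary": 0.7, "other": 0.3},
    "other": {"summary": 0.4, "other": 0.6},
}
# Each state has its own distribution over the visible output tokens.
EMIT = {
    "summary": {"query_term": 0.7, "no_query_term": 0.3},
    "other": {"query_term": 0.2, "no_query_term": 0.8},
}


def forward(observations, states=STATES, start_p=START, trans_p=TRANS, emit_p=EMIT):
    """Probability of the observation sequence, summed over all hidden state paths."""
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: emit_p[s][obs] * sum(alpha[prev] * trans_p[prev][s] for prev in states)
            for s in states
        }
    return sum(alpha.values())
```

A useful sanity check on any such model is that the probabilities of all possible observation sequences of a given length add up to 1.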

- #11: Redundancy Removal. This will identify any information repetition in the source (input) texts, thus minimising any redundant or repetitive content in the final summary. Definition of Basic Elements: (a) the head of a major syntactic constituent, expressed as a single item, (b) a relation between a head basic element and a single dependent, expressed as a triple (head | modifier | relation). Basic elements can be created by using a parser to produce a syntactic parse tree and a set of ‘cutting rules’ to extract just the valid basic elements from the tree. With basic elements represented as triples one can quite easily decide whether any two units match or not. The query-based basic elements summarizer includes four major stages: (a) query interpretation; (b) identify important basic elements; (c) identify important sentences; (d) generate summaries.
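Because basic elements are triples, matching two of them reduces to tuple equality, and sentences can be scored from the elements they contain. The sketch below assumes the triples have already been extracted by a parser; using raw frequency across sentences as the importance of a basic element is a stand-in chosen here, not the scoring used by the original system.

```python
from collections import Counter
from typing import NamedTuple


class BasicElement(NamedTuple):
    """A (head | modifier | relation) triple."""
    head: str
    modifier: str
    relation: str


def score_sentences(sentence_bes):
    """Score each sentence by the summed frequency of its distinct basic elements."""
    frequency = Counter(be for bes in sentence_bes.values() for be in set(bes))
    return {
        sid: sum(frequency[be] for be in set(bes))
        for sid, bes in sentence_bes.items()
    }


def top_sentences(sentence_bes, limit):
    """Highest-scoring sentence ids, up to the required sentence limit."""
    scores = score_sentences(sentence_bes)
    return sorted(scores, key=lambda sid: (-scores[sid], sid))[:limit]
```

Two `BasicElement` values compare equal exactly when head, modifier and relation all match, which is the easy matching the note refers to.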

- #12: Capturing Sentence Prior for Query-Based Multi-Document Summarization achieves the generation of a fixed-length multi-document summary which satisfies a specific information need by topic-oriented informative multi-document summarization. Information retrieval techniques have been explored to improve the relevance scoring of sentences towards the information need. A measure to capture the notion of importance or prior of a sentence has been identified. Following the Probability Ranking Principle, the calculated importance/prior is incorporated into the final sentence scoring by weighted linear combination. The system has outperformed all systems at the DUC 2006 challenge in terms of ROUGE scores with a significant margin over the next best system. The information need or topic consists of mainly two components. The first is the title of the topic; the second is the actual information need, expressed as multiple questions. In this approach information retrieval techniques have been combined with summarization techniques in producing the extracts. The system that performs the described task consists of the following stages: information need enrichment, content selection and summary generation. Using the Conditional Sampling assumption that the query words are independent of each other while keeping their dependencies on w intact, it computes the required joint probability. Most of the current query-based summarization systems concentrate only on features that measure the relevance of sentences towards the query. They do not explicitly attempt to capture the centrality/prior knowledge carried by a sentence pertaining to a domain. The approach defines a new measure which captures the sentence importance based on the distribution of its constituent words in the domain corpus. An entropy measure has been used to compute the information content of a sentence based on a unigram model learned from the document corpus.
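The combination of a query-independent prior with a query-dependent relevance score can be sketched as follows. The exact entropy formula and weights of the original system are not given in these notes, so the formula in `sentence_prior`, the default `weight`, and all function names are assumptions made for illustration.

```python
import math
from collections import Counter


def unigram_model(corpus_tokens):
    """Maximum-likelihood unigram probabilities learned from a token list."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def sentence_prior(tokens, model):
    """Assumed entropy-style measure: -sum p(w) * log2 p(w) over known sentence words."""
    return -sum(model[w] * math.log2(model[w]) for w in tokens if w in model)


def final_score(relevance, prior, weight=0.5):
    """Weighted linear combination of query relevance and sentence prior."""
    return weight * relevance + (1 - weight) * prior
```

With a corpus of `["a", "a", "b", "c"]`, the sentence `["a", "b"]` gets a prior of 1.0 under this measure; how that value is balanced against relevance depends entirely on the chosen weight.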

- #13: FastSum is based solely on word-frequency features of clusters, documents and topics. Summary sentences are ranked by a regression SVM. The summarizer does not use any expensive NLP techniques. Because of a detailed feature analysis using Least Angle Regression, FastSum can rely on a minimal set of features leading to fast processing times, e.g. 1250 news documents per 60 seconds. The method only involves sentence splitting, filtering candidate sentences and computing the word frequencies in the documents of a cluster, the topic description and the topic title. A machine learning technique called regression SVM is used, and for the feature selection a new model selection technique is adopted, called Least Angle Regression (LARS). The focus is on selecting the minimal set of features that are computationally less expensive than other features. The approach ranks all sentences in the topic cluster for summarizability. Features are mainly based on word frequencies of words in the clusters, documents and topics. A cluster contains 25 documents and is associated with a topic. The topic contains a topic title and a topic description. The topic title is a list of key words or phrases describing the topic; the topic description contains the actual query or queries. The features used are word-based and sentence-based. Word-based features are computed based on the probability of words for the different containers. Sentence-based features include the length and position of the sentence in the document.
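The word-based and sentence-based features described here can be sketched with plain frequency counts. This is a hedged illustration only: the feature names and the averaging of word probabilities are assumptions, and the regression SVM that would rank sentences from these features is left out.

```python
from collections import Counter


def word_probabilities(tokens):
    """Relative frequency of each word within one container."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def sentence_features(sentence, position, containers):
    """Sentence-based features plus one averaged word-frequency feature per container.

    `containers` maps a name (e.g. "cluster", "title") to that container's tokens.
    """
    features = {"length": len(sentence), "position": position}
    for name, tokens in containers.items():
        probs = word_probabilities(tokens)
        total = sum(probs.get(word, 0.0) for word in sentence)
        features[f"{name}_freq"] = total / len(sentence) if sentence else 0.0
    return features
```

Each sentence then becomes a small numeric vector, which is the kind of input a regression model needs to rank sentences.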

- #15: A query-based Medical Information Summarization System Using Ontology Knowledge proposes a technique using UMLS (Unified Medical Language System) and ontology from the National Library of Medicine. The ontology-based approach performs clearly better than the keyword-only approach. A general web search engine tries to serve as an information access agent. It retrieves and ranks information according to a user’s query. A document summarization system specialized for the medical domain is presented, which retrieves and summarizes up-to-date medical information from trustworthy online sources according to users’ queries. Summaries that a user wants need to be generated on the fly based on his query keywords in a web context. The summarization algorithm is term-based, i.e. only terms defined in UMLS will be recognized and processed. The summarization procedure is as follows: (a) revise the query with UMLS ontology knowledge; (b) calculate the distance of each sentence in the document to the finalized query. The distance function is a metric satisfying d(x,x)=0, symmetry, and the triangle inequality. If the distance is smaller than the threshold, the sentence will be a candidate to be included in the summary; (c) calculate pair-wise distances among the candidate sentences, then divide the candidate sentences into groups based on a threshold and select the highest-ranked one from each group. To determine which sentences will be included in the summary, three different “scores” are generated: a) simply count the number of matched original keywords and select the sentences with many matching keywords; b) if a sentence contains an original keyword, assign weight 1 to it. If a sentence contains an expanded keyword, assign weight 0.5 to this keyword. Add all the weights together to get the score for each sentence. Sentences with high scores are selected; c) after the scores are obtained, normalize the score by the length of the sentence. Sentences with high normalized scores are selected.
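The weighted and length-normalized scores described above can be sketched in a few lines. Counting each distinct keyword once and measuring sentence length in terms are assumptions made here; the example terms are hypothetical and are not taken from UMLS.

```python
def keyword_score(sentence_terms, original, expanded):
    """Weight 1 for each original query keyword, 0.5 for each expanded keyword."""
    score = 0.0
    for term in set(sentence_terms):  # assumption: each distinct keyword counts once
        if term in original:
            score += 1.0
        elif term in expanded:
            score += 0.5
    return score


def normalized_score(sentence_terms, original, expanded):
    """Keyword score divided by sentence length (number of terms)."""
    if not sentence_terms:
        return 0.0
    return keyword_score(sentence_terms, original, expanded) / len(sentence_terms)
```

A four-term sentence containing one original keyword and one expanded keyword scores 1.5, or 0.375 after normalization, so shorter sentences with the same matches rank higher.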

- #16: Each summary has to address a set of complex questions about the target, where the question cannot be answered simply with a named entity. The input to the summarization task comprises a target, some opinion-related questions about the target, and a set of documents that contain answers to the questions. The output is a summary for each target that summarizes the answers to the questions. It has been discovered that users have a strong preference for summarizers that model sentiment over non-sentiment baselines. A filtering component identifies sentences that are unlikely to be in a good summary. Another filter is concerned with the sentiment of a sentence. The system performs the following steps: A. Preprocessing, B. Question sentiment and target analyzer, C. Filtering (C1 Sentiment tagger, C2 Target overlap), D. Feature extraction, E. Sentence ranker, F. Redundancy removal. Several preprocessing steps take place before Web-based blog entries are introduced to the FastSum engine. These include translating the original legal opinion topics into queries and identifying any target entities or concepts within those queries, running the queries through the blog search engine and aggregating the top-ranked results, and passing those results through a “marginal relevance filter” in order to ensure that the entries serving as FastSum input data surpass a minimum relevance criterion.
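The sentiment tagger in the filtering step is described on the opinion-summarization slide as a unigram lookup against gazetteers of positive and negative terms. A minimal sketch of that idea follows; the two tiny word lists are hypothetical placeholders, not entries taken from the General Inquirer, and the majority-vote rule is an assumption.

```python
# Hypothetical gazetteers; a real system would load much larger lists.
POSITIVE = frozenset({"good", "fair", "excellent"})
NEGATIVE = frozenset({"bad", "unfair", "poor"})


def tag_sentiment(sentence: str) -> str:
    """Label a sentence by counting unigram hits in each gazetteer."""
    tokens = [token.strip(".,;:!?\"'").lower() for token in sentence.split()]
    positive_hits = sum(token in POSITIVE for token in tokens)
    negative_hits = sum(token in NEGATIVE for token in tokens)
    if positive_hits > negative_hits:
        return "positive"
    if negative_hits > positive_hits:
        return "negative"
    return "neutral"


def filter_by_sentiment(sentences, wanted):
    """Keep only the sentences whose tag matches the sentiment the question asks for."""
    return [s for s in sentences if tag_sentiment(s) == wanted]
```

A question asking for criticism of a target would, under this sketch, keep only the sentences tagged "negative" before ranking.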