Writing Continuous Applications with Structured Streaming Python APIs in Apache Spark

2 likes4,186 views

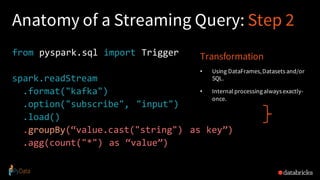

The document discusses the use of Apache Spark for building continuous streaming applications using structured streaming in PySpark, highlighting its benefits, challenges, and integration capabilities. It explains how Spark unifies various data processing needs and provides a detailed overview of constructing a streaming application with example code snippets. The session also covers topics such as data ingestion from sources like Kafka, processing data transformations, and writing results to output sinks while ensuring fault tolerance and efficient querying.

1 of 49

Downloaded 70 times

![Traditional ETL

• Raw, dirty, un/semi-structured is data dumped as files

• Periodic jobs run every few hours to convert raw data to structured

data ready for further analytics

• Hours of delay before taking decisions on latest data

• Problem: Unacceptable when time is of essence

• [intrusion , anomaly or fraud detection,monitoringIoT devices, etc.]

37

file

dump

seconds hours

table

10101010](https://image.slidesharecdn.com/pysparkstructuredstreamingfinal-190112225329/85/Writing-Continuous-Applications-with-Structured-Streaming-Python-APIs-in-Apache-Spark-37-320.jpg)

![Reading from Kafka

raw_data_df = spark.readStream

.format("kafka")

.option("kafka.boostrap.servers",...)

.option("subscribe", "topic")

.load()

rawData dataframe has

the following columns

key value topic partition offset timestamp

[binary] [binary] "topicA" 0 345 1486087873

[binary] [binary] "topicB" 3 2890 1486086721](https://image.slidesharecdn.com/pysparkstructuredstreamingfinal-190112225329/85/Writing-Continuous-Applications-with-Structured-Streaming-Python-APIs-in-Apache-Spark-40-320.jpg)

Ad

Recommended

Combining Machine Learning Frameworks with Apache Spark

Combining Machine Learning Frameworks with Apache SparkDatabricks This document discusses combining machine learning frameworks with Apache Spark. It provides an overview of Apache Spark and MLlib, describes how to distribute TensorFlow computations using Spark, and discusses managing machine learning workflows with Spark through features like cross validation, persistence, and distributed data sources. The goal is to make machine learning easy, scalable, and integrate with existing workflows.

Large-Scale Data Science in Apache Spark 2.0

Large-Scale Data Science in Apache Spark 2.0Databricks This document discusses the enhancements in Apache Spark 2.0, focusing on its scalability for large-scale data science and AI through improved hardware and user scalability. It emphasizes the use of structured APIs for efficient data manipulation and introduces new features for deep learning and integration with existing Python and R libraries. The content highlights Spark's capabilities in parallelizing computations and the ease of building complex data science models with high-level APIs.

Spark Machine Learning: Adding Your Own Algorithms and Tools with Holden Kara...

Spark Machine Learning: Adding Your Own Algorithms and Tools with Holden Kara...Databricks The document discusses extending Spark ML estimators and transformers, focusing on implementing pipelines, estimators, and transformers in Apache Spark. It introduces key contributors, outlines the Spark Technology Center's mission, and details the process of loading data and training machine learning models using Spark SQL and various classes like StringIndexer and DecisionTreeClassifier. Additionally, it highlights how to create custom estimators and transformers, manage pipeline stages, and the advantages of utilizing ML pipelines for machine learning tasks.

Robust and Scalable ETL over Cloud Storage with Apache Spark

Robust and Scalable ETL over Cloud Storage with Apache SparkDatabricks The document discusses the implementation of ETL (Extract, Transform, Load) processes on cloud storage using Databricks and highlights the challenges of reliability and performance with cloud-native storage compared to HDFS. It examines the trade-offs regarding costs, availability, durability, and metadata performance, proposing solutions such as the Databricks commit service to ensure atomic commits and manage eventual consistency. Overall, the document advocates for leveraging cloud storage while addressing its limitations to achieve efficient and reliable ETL operations.

Elasticsearch And Apache Lucene For Apache Spark And MLlib

Elasticsearch And Apache Lucene For Apache Spark And MLlibJen Aman This document summarizes a presentation about using Elasticsearch and Lucene for text processing and machine learning pipelines in Apache Spark. Some key points:

- Elasticsearch provides text analysis capabilities through Lucene and can be used to clean, tokenize, and vectorize text for machine learning tasks.

- Elasticsearch integrates natively with Spark through Java/Scala APIs and allows indexing and querying data from Spark.

- A typical machine learning pipeline for text classification in Spark involves tokenization, feature extraction (e.g. hashing), and a classifier like logistic regression.

- The presentation proposes preparing text analysis specifications in Elasticsearch once and reusing them across multiple Spark pipelines to simplify the workflows and avoid data movement between systems

What's New in Apache Spark 2.3 & Why Should You Care

What's New in Apache Spark 2.3 & Why Should You CareDatabricks The document discusses the major features and enhancements in Apache Spark 2.3, including improvements in continuous processing, stream-stream joins, and PySpark performance. Key elements include the new structured streaming execution mode that offers low latency, support for various data formats, and integration with Kubernetes. The emphasis is placed on building robust streaming applications and the advantages of using Spark's unified analytics platform.

Spark DataFrames: Simple and Fast Analytics on Structured Data at Spark Summi...

Spark DataFrames: Simple and Fast Analytics on Structured Data at Spark Summi...Databricks The document presents insights on Spark SQL and DataFrames, highlighting its capabilities in processing structured data efficiently and effectively. It details the evolution of Spark SQL since its inception in April 2014, emphasizing features like multi-version support, various bindings, and a unified interface for reading and writing data in multiple formats. Additionally, it explores the optimization of data processing pipelines, integration with BI tools, and high-level operations for analytics while showcasing performance improvements with DataFrames compared to traditional RDDs.

Performance Optimization Case Study: Shattering Hadoop's Sort Record with Spa...

Performance Optimization Case Study: Shattering Hadoop's Sort Record with Spa...Databricks The performance optimization case study showcases how Spark and Scala shattered Hadoop's sorting records by utilizing efficient in-memory processing and advanced engineering techniques. Key advancements included a sort-based shuffle, native network transport with Netty, and clever application-level optimizations to enhance cache and garbage collection performance. This effort culminated in achieving a new sorting record for 100TB and 1PB datasets, marking a significant milestone in big data processing.

Apache Spark 2.0: Faster, Easier, and Smarter

Apache Spark 2.0: Faster, Easier, and SmarterDatabricks Apache Spark 2.0 enhances performance and usability with key features such as Project Tungsten for speed improvements of 5-20x, a unified API integrating datasets, dataframes, and SQL, and structured streaming for real-time analytics. The update focuses on maintaining backward compatibility while introducing richer semantics and optimizations. Users can explore Spark 2.0 through the Databricks Community Edition, with extensive improvements set to address around 2000 issues.

Composable Parallel Processing in Apache Spark and Weld

Composable Parallel Processing in Apache Spark and WeldDatabricks The document discusses composable parallel processing in Apache Spark and introduces the Weld runtime, emphasizing the need for efficient composition of libraries in big data processing. It highlights Spark's goals to provide a unified engine and API for batch, interactive, and streaming applications, as well as the benefits of structured APIs in Spark 2.0 for performance and programmability. Additionally, it addresses challenges regarding data representation and the inefficiencies of traditional library composition, proposing new composition interfaces like Weld to optimize data movement and execution across various workloads.

Introduction to Apache Spark Developer Training

Introduction to Apache Spark Developer TrainingCloudera, Inc. The document outlines a training program for developers focused on Apache Spark, covering its components, APIs, and distributed data processing concepts. No prior knowledge of Spark, Hadoop, or distributed programming is needed, but basic Linux familiarity and intermediate programming skills in Scala or Python are required. The course includes practical applications and culminates in certified training to equip participants with the necessary skills for big data analysis.

Spark Community Update - Spark Summit San Francisco 2015

Spark Community Update - Spark Summit San Francisco 2015Databricks This document summarizes Spark community updates from June 2014 to June 2015. It notes that Spark has become the most active open source project for data processing, with the number of contributors and lines of code doubling over the past year. New features in Spark include support for the R programming language and machine learning pipelines inspired by scikit-learn. The document outlines ongoing work to improve the Spark engine and platform APIs, as well as previews upcoming developments through Spark 1.5.

ETL with SPARK - First Spark London meetup

ETL with SPARK - First Spark London meetupRafal Kwasny The document discusses how Spark can be used to supercharge ETL workflows by running them faster and with less code compared to traditional Hadoop approaches. It provides examples of using Spark for tasks like sessionization of user clickstream data. Best practices are covered like optimizing for JVM issues, avoiding full GC pauses, and tips for deployment on EC2. Future improvements to Spark like SQL support and Java 8 are also mentioned.

Strata NYC 2015: What's new in Spark Streaming

Strata NYC 2015: What's new in Spark StreamingDatabricks Spark Streaming allows processing of live data streams at scale. Recent improvements include:

1) Enhanced fault tolerance through a write-ahead log and replay of unprocessed data on failure.

2) Dynamic backpressure to automatically adjust ingestion rates and ensure stability.

3) Visualization tools for debugging and monitoring streaming jobs.

4) Support for streaming machine learning algorithms and integration with other Spark components.

Introduction to Spark ML

Introduction to Spark MLHolden Karau This document provides an introduction and overview of machine learning with Spark ML. It discusses the speaker and TAs, previews the topics that will be covered which include Spark's ML APIs, running an example with one API, model save/load, and serving options. It also briefly describes the different pieces of Spark including SQL, streaming, languages APIs, MLlib, and community packages. The document provides examples of loading data with Spark SQL and Spark CSV, constructing a pipeline with transformers and estimators, training a decision tree model, adding more features to the tree, and cross validation. Finally, it discusses serving models and exporting models to PMML format.

Easy, Scalable, Fault-tolerant stream processing with Structured Streaming in...

Easy, Scalable, Fault-tolerant stream processing with Structured Streaming in...DataWorks Summit The document discusses the complexities of building robust stream processing applications using Apache Spark's Structured Streaming, which simplifies processing complex data and workloads. It outlines the conceptual model of treating data streams as unbounded tables, provides technical insights into querying data, managing state, checkpointing, and watermarking for fault tolerance, and explains how to perform real-time analytics with structured data. Additionally, it covers various use cases and APIs for connecting to sources like Kafka and Kinesis, enabling efficient processing and querying of event-time data.

Recent Developments In SparkR For Advanced Analytics

Recent Developments In SparkR For Advanced AnalyticsDatabricks The document discusses the SparkR package, which bridges the gap between R and big data analytics, providing descriptive and predictive analytics on large datasets. It outlines the architecture, features, and implementation of SparkR, including data frame operations, statistical computations, and integration with Spark's machine learning library. Future directions for SparkR include improving API consistency, enhancing R formula support, and integrating more machine learning algorithms.

Microservices and Teraflops: Effortlessly Scaling Data Science with PyWren wi...

Microservices and Teraflops: Effortlessly Scaling Data Science with PyWren wi...Databricks PyWren is a serverless framework that allows data scientists to easily scale Python code across AWS Lambda. It uses Lambda to parallelize work by mapping Python functions to a large dataset. The functions and data are serialized and uploaded to S3, which then triggers Lambda. Results are stored in S3. This allows data science problems that take minutes or hours to be solved to complete in seconds by parallelizing across thousands of Lambda instances. PyWren aims to abstract away the complexity of serverless infrastructure so data scientists can focus on their code instead of operations.

Getting Ready to Use Redis with Apache Spark with Dvir Volk

Getting Ready to Use Redis with Apache Spark with Dvir VolkSpark Summit The document outlines a presentation on using Redis for real-time machine learning, focusing on Redis-ML integrated with Apache Spark. It covers the capabilities of Redis as a key-value store, the functionalities of Redis-ML for model serving, and a case study involving a recommendation system. The concluding remarks emphasize the advantages of combining Spark for training with Redis for efficient model serving, highlighting reduced resource costs and simplified machine learning lifecycle.

A Journey into Databricks' Pipelines: Journey and Lessons Learned

A Journey into Databricks' Pipelines: Journey and Lessons LearnedDatabricks The document discusses Databricks' development of a next-generation data pipeline utilizing Apache Spark, highlighting challenges like fault tolerance and scalability. It outlines the architecture of their data pipeline, including real-time and batch processing capabilities, and shares lessons learned regarding efficiency and cost management. The conclusion emphasizes the benefits of Databricks and Apache Spark as a unified platform for ETL, data warehousing, and analytics.

Time Series Analytics with Spark: Spark Summit East talk by Simon Ouellette

Time Series Analytics with Spark: Spark Summit East talk by Simon OuelletteSpark Summit This document provides an overview of spark-timeseries, an open source time series library for Apache Spark. It discusses the library's design choices around representing multivariate time series data, partitioning time series data for distributed processing, and handling operations like lagging and differencing on irregular time series data. It also presents examples of using the library to test for stationarity, generate lagged features, and perform Holt-Winters forecasting on seasonal passenger data.

Designing Distributed Machine Learning on Apache Spark

Designing Distributed Machine Learning on Apache SparkDatabricks This document summarizes Joseph Bradley's presentation on designing distributed machine learning on Apache Spark. Bradley is a committer and PMC member of Apache Spark and works as a software engineer at Databricks. He discusses how Spark provides a unified engine for distributed workloads and libraries like MLlib make it possible to perform scalable machine learning. Bradley outlines different methods for distributing ML algorithms, using k-means clustering as an example of reorganizing an algorithm to fit the MapReduce framework in a way that minimizes communication costs.

Extreme Apache Spark: how in 3 months we created a pipeline that can process ...

Extreme Apache Spark: how in 3 months we created a pipeline that can process ...Josef A. Habdank The document outlines the process of creating a high-capacity data processing pipeline using Apache Spark, capable of handling up to 8 billion records per day. It details a successful implementation achieved in three months, using low-cost cloud servers, while discussing Spark's core functionalities and best practices for high throughput coding. The presentation also addresses the challenges faced and solutions found while scaling data operations and optimizing performance.

Foundations for Scaling ML in Apache Spark by Joseph Bradley at BigMine16

Foundations for Scaling ML in Apache Spark by Joseph Bradley at BigMine16BigMine The document discusses the foundations for scaling machine learning (ML) within Apache Spark, highlighting its capabilities for big data computing through features like Resilient Distributed Datasets (RDDs), DataFrames, and the ML library. It addresses challenges faced with RDDs for scalability while presenting the advantages of transitioning to DataFrames, which optimize performance and simplify algorithm development. The future of ML in Spark focuses on efficient scaling and improved usability through better resource management and optimization techniques.

Spark Summit EU 2016: The Next AMPLab: Real-time Intelligent Secure Execution

Spark Summit EU 2016: The Next AMPLab: Real-time Intelligent Secure ExecutionDatabricks The document outlines the mission of Berkeley's Amplab to create a next-generation open-source data analytics stack for real-time decision-making with strong security. It emphasizes the importance of making faster, accurate decisions based on live data to combat issues like zero-day attacks and improve various applications, from fraud detection to real-time analytics. The future research directions focus on reducing latency, enhancing machine learning robustness, and ensuring data privacy and integrity within decision systems.

Apache Spark Performance: Past, Future and Present

Apache Spark Performance: Past, Future and PresentDatabricks This document discusses improving performance instrumentation in Apache Spark. It summarizes that existing instrumentation focuses on blocked times in the main task thread, but opportunities exist to better instrument read/write times and machine-level resource utilization. The author proposes combining per-task I/O metrics with machine utilization data to provide complete metrics about time spent using each resource on a per-task basis, improving performance clarity for users. More details are available at the listed website.

Memory Management in Apache Spark

Memory Management in Apache SparkDatabricks The document discusses memory management in Apache Spark, highlighting its critical importance for performance. It outlines three main challenges: memory arbitration between execution and storage, across parallel tasks, and between operators within a task, along with solutions for each. The presentation provides insights into unified memory management introduced in Spark 1.6 and various optimization techniques for efficient memory use.

Clipper: A Low-Latency Online Prediction Serving System

Clipper: A Low-Latency Online Prediction Serving SystemDatabricks Clipper is a low-latency online prediction serving system designed to support various machine learning models and frameworks, addressing challenges such as high throughput and low-latency serving. It aims to decouple applications from models, using container-based deployment for better resource isolation and functionality. Clipper supports easy integration and deployment of models, particularly from Spark and other frameworks, through a unified interface and adaptive batching strategies.

Writing Continuous Applications with Structured Streaming PySpark API

Writing Continuous Applications with Structured Streaming PySpark APIDatabricks The document discusses the development of continuous applications using Structured Streaming in Apache Spark, highlighting its benefits, complexities, and capabilities in handling diverse data. It explains the architecture of Structured Streaming, including the process of reading, transforming, and writing data streams, as well as the importance of features like checkpointing for fault tolerance. The presentation includes tutorials and emphasizes the unification of batch and streaming processing to enable real-time data handling and analytics.

Writing Continuous Applications with Structured Streaming in PySpark

Writing Continuous Applications with Structured Streaming in PySparkDatabricks The document discusses the use of Apache Spark for building continuous applications with structured streaming, emphasizing the challenges of stream processing and advantages of using Spark. It covers various components such as data integration, transformation, and real-time analytics, showcasing the power of structured streaming in dealing with complex data. The talk includes a demonstration of continuous applications and resources for further exploration of Spark's capabilities.

More Related Content

What's hot (20)

Apache Spark 2.0: Faster, Easier, and Smarter

Apache Spark 2.0: Faster, Easier, and SmarterDatabricks Apache Spark 2.0 enhances performance and usability with key features such as Project Tungsten for speed improvements of 5-20x, a unified API integrating datasets, dataframes, and SQL, and structured streaming for real-time analytics. The update focuses on maintaining backward compatibility while introducing richer semantics and optimizations. Users can explore Spark 2.0 through the Databricks Community Edition, with extensive improvements set to address around 2000 issues.

Composable Parallel Processing in Apache Spark and Weld

Composable Parallel Processing in Apache Spark and WeldDatabricks The document discusses composable parallel processing in Apache Spark and introduces the Weld runtime, emphasizing the need for efficient composition of libraries in big data processing. It highlights Spark's goals to provide a unified engine and API for batch, interactive, and streaming applications, as well as the benefits of structured APIs in Spark 2.0 for performance and programmability. Additionally, it addresses challenges regarding data representation and the inefficiencies of traditional library composition, proposing new composition interfaces like Weld to optimize data movement and execution across various workloads.

Introduction to Apache Spark Developer Training

Introduction to Apache Spark Developer TrainingCloudera, Inc. The document outlines a training program for developers focused on Apache Spark, covering its components, APIs, and distributed data processing concepts. No prior knowledge of Spark, Hadoop, or distributed programming is needed, but basic Linux familiarity and intermediate programming skills in Scala or Python are required. The course includes practical applications and culminates in certified training to equip participants with the necessary skills for big data analysis.

Spark Community Update - Spark Summit San Francisco 2015

Spark Community Update - Spark Summit San Francisco 2015Databricks This document summarizes Spark community updates from June 2014 to June 2015. It notes that Spark has become the most active open source project for data processing, with the number of contributors and lines of code doubling over the past year. New features in Spark include support for the R programming language and machine learning pipelines inspired by scikit-learn. The document outlines ongoing work to improve the Spark engine and platform APIs, as well as previews upcoming developments through Spark 1.5.

ETL with SPARK - First Spark London meetup

ETL with SPARK - First Spark London meetupRafal Kwasny The document discusses how Spark can be used to supercharge ETL workflows by running them faster and with less code compared to traditional Hadoop approaches. It provides examples of using Spark for tasks like sessionization of user clickstream data. Best practices are covered like optimizing for JVM issues, avoiding full GC pauses, and tips for deployment on EC2. Future improvements to Spark like SQL support and Java 8 are also mentioned.

Strata NYC 2015: What's new in Spark Streaming

Strata NYC 2015: What's new in Spark StreamingDatabricks Spark Streaming allows processing of live data streams at scale. Recent improvements include:

1) Enhanced fault tolerance through a write-ahead log and replay of unprocessed data on failure.

2) Dynamic backpressure to automatically adjust ingestion rates and ensure stability.

3) Visualization tools for debugging and monitoring streaming jobs.

4) Support for streaming machine learning algorithms and integration with other Spark components.

Introduction to Spark ML

Introduction to Spark MLHolden Karau This document provides an introduction and overview of machine learning with Spark ML. It discusses the speaker and TAs, previews the topics that will be covered which include Spark's ML APIs, running an example with one API, model save/load, and serving options. It also briefly describes the different pieces of Spark including SQL, streaming, languages APIs, MLlib, and community packages. The document provides examples of loading data with Spark SQL and Spark CSV, constructing a pipeline with transformers and estimators, training a decision tree model, adding more features to the tree, and cross validation. Finally, it discusses serving models and exporting models to PMML format.

Easy, Scalable, Fault-tolerant stream processing with Structured Streaming in...

Easy, Scalable, Fault-tolerant stream processing with Structured Streaming in...DataWorks Summit The document discusses the complexities of building robust stream processing applications using Apache Spark's Structured Streaming, which simplifies processing complex data and workloads. It outlines the conceptual model of treating data streams as unbounded tables, provides technical insights into querying data, managing state, checkpointing, and watermarking for fault tolerance, and explains how to perform real-time analytics with structured data. Additionally, it covers various use cases and APIs for connecting to sources like Kafka and Kinesis, enabling efficient processing and querying of event-time data.

Recent Developments In SparkR For Advanced Analytics

Recent Developments In SparkR For Advanced AnalyticsDatabricks The document discusses the SparkR package, which bridges the gap between R and big data analytics, providing descriptive and predictive analytics on large datasets. It outlines the architecture, features, and implementation of SparkR, including data frame operations, statistical computations, and integration with Spark's machine learning library. Future directions for SparkR include improving API consistency, enhancing R formula support, and integrating more machine learning algorithms.

Microservices and Teraflops: Effortlessly Scaling Data Science with PyWren wi...

Microservices and Teraflops: Effortlessly Scaling Data Science with PyWren wi...Databricks PyWren is a serverless framework that allows data scientists to easily scale Python code across AWS Lambda. It uses Lambda to parallelize work by mapping Python functions to a large dataset. The functions and data are serialized and uploaded to S3, which then triggers Lambda. Results are stored in S3. This allows data science problems that take minutes or hours to be solved to complete in seconds by parallelizing across thousands of Lambda instances. PyWren aims to abstract away the complexity of serverless infrastructure so data scientists can focus on their code instead of operations.

Getting Ready to Use Redis with Apache Spark with Dvir Volk

Getting Ready to Use Redis with Apache Spark with Dvir VolkSpark Summit The document outlines a presentation on using Redis for real-time machine learning, focusing on Redis-ML integrated with Apache Spark. It covers the capabilities of Redis as a key-value store, the functionalities of Redis-ML for model serving, and a case study involving a recommendation system. The concluding remarks emphasize the advantages of combining Spark for training with Redis for efficient model serving, highlighting reduced resource costs and simplified machine learning lifecycle.

A Journey into Databricks' Pipelines: Journey and Lessons Learned

A Journey into Databricks' Pipelines: Journey and Lessons LearnedDatabricks The document discusses Databricks' development of a next-generation data pipeline utilizing Apache Spark, highlighting challenges like fault tolerance and scalability. It outlines the architecture of their data pipeline, including real-time and batch processing capabilities, and shares lessons learned regarding efficiency and cost management. The conclusion emphasizes the benefits of Databricks and Apache Spark as a unified platform for ETL, data warehousing, and analytics.

Time Series Analytics with Spark: Spark Summit East talk by Simon Ouellette

Time Series Analytics with Spark: Spark Summit East talk by Simon OuelletteSpark Summit This document provides an overview of spark-timeseries, an open source time series library for Apache Spark. It discusses the library's design choices around representing multivariate time series data, partitioning time series data for distributed processing, and handling operations like lagging and differencing on irregular time series data. It also presents examples of using the library to test for stationarity, generate lagged features, and perform Holt-Winters forecasting on seasonal passenger data.

Designing Distributed Machine Learning on Apache Spark

Designing Distributed Machine Learning on Apache SparkDatabricks This document summarizes Joseph Bradley's presentation on designing distributed machine learning on Apache Spark. Bradley is a committer and PMC member of Apache Spark and works as a software engineer at Databricks. He discusses how Spark provides a unified engine for distributed workloads and libraries like MLlib make it possible to perform scalable machine learning. Bradley outlines different methods for distributing ML algorithms, using k-means clustering as an example of reorganizing an algorithm to fit the MapReduce framework in a way that minimizes communication costs.

Extreme Apache Spark: how in 3 months we created a pipeline that can process ...

Extreme Apache Spark: how in 3 months we created a pipeline that can process ...Josef A. Habdank The document outlines the process of creating a high-capacity data processing pipeline using Apache Spark, capable of handling up to 8 billion records per day. It details a successful implementation achieved in three months, using low-cost cloud servers, while discussing Spark's core functionalities and best practices for high throughput coding. The presentation also addresses the challenges faced and solutions found while scaling data operations and optimizing performance.

Foundations for Scaling ML in Apache Spark by Joseph Bradley at BigMine16

Foundations for Scaling ML in Apache Spark by Joseph Bradley at BigMine16BigMine The document discusses the foundations for scaling machine learning (ML) within Apache Spark, highlighting its capabilities for big data computing through features like Resilient Distributed Datasets (RDDs), DataFrames, and the ML library. It addresses challenges faced with RDDs for scalability while presenting the advantages of transitioning to DataFrames, which optimize performance and simplify algorithm development. The future of ML in Spark focuses on efficient scaling and improved usability through better resource management and optimization techniques.

Spark Summit EU 2016: The Next AMPLab: Real-time Intelligent Secure Execution

Spark Summit EU 2016: The Next AMPLab: Real-time Intelligent Secure ExecutionDatabricks The document outlines the mission of Berkeley's Amplab to create a next-generation open-source data analytics stack for real-time decision-making with strong security. It emphasizes the importance of making faster, accurate decisions based on live data to combat issues like zero-day attacks and improve various applications, from fraud detection to real-time analytics. The future research directions focus on reducing latency, enhancing machine learning robustness, and ensuring data privacy and integrity within decision systems.

Apache Spark Performance: Past, Future and Present

Apache Spark Performance: Past, Future and PresentDatabricks This document discusses improving performance instrumentation in Apache Spark. It summarizes that existing instrumentation focuses on blocked times in the main task thread, but opportunities exist to better instrument read/write times and machine-level resource utilization. The author proposes combining per-task I/O metrics with machine utilization data to provide complete metrics about time spent using each resource on a per-task basis, improving performance clarity for users. More details are available at the listed website.

Memory Management in Apache Spark

Memory Management in Apache SparkDatabricks The document discusses memory management in Apache Spark, highlighting its critical importance for performance. It outlines three main challenges: memory arbitration between execution and storage, across parallel tasks, and between operators within a task, along with solutions for each. The presentation provides insights into unified memory management introduced in Spark 1.6 and various optimization techniques for efficient memory use.

Clipper: A Low-Latency Online Prediction Serving System

Clipper: A Low-Latency Online Prediction Serving SystemDatabricks Clipper is a low-latency online prediction serving system designed to support various machine learning models and frameworks, addressing challenges such as high throughput and low-latency serving. It aims to decouple applications from models, using container-based deployment for better resource isolation and functionality. Clipper supports easy integration and deployment of models, particularly from Spark and other frameworks, through a unified interface and adaptive batching strategies.

Similar to Writing Continuous Applications with Structured Streaming Python APIs in Apache Spark (20)

Writing Continuous Applications with Structured Streaming PySpark API

Writing Continuous Applications with Structured Streaming PySpark APIDatabricks The document discusses the development of continuous applications using Structured Streaming in Apache Spark, highlighting its benefits, complexities, and capabilities in handling diverse data. It explains the architecture of Structured Streaming, including the process of reading, transforming, and writing data streams, as well as the importance of features like checkpointing for fault tolerance. The presentation includes tutorials and emphasizes the unification of batch and streaming processing to enable real-time data handling and analytics.

Writing Continuous Applications with Structured Streaming in PySpark

Writing Continuous Applications with Structured Streaming in PySparkDatabricks The document discusses the use of Apache Spark for building continuous applications with structured streaming, emphasizing the challenges of stream processing and advantages of using Spark. It covers various components such as data integration, transformation, and real-time analytics, showcasing the power of structured streaming in dealing with complex data. The talk includes a demonstration of continuous applications and resources for further exploration of Spark's capabilities.

Kafka Summit NYC 2017 - Easy, Scalable, Fault-tolerant Stream Processing with...

Kafka Summit NYC 2017 - Easy, Scalable, Fault-tolerant Stream Processing with...confluent Structured Streaming provides a scalable and fault-tolerant stream processing framework on Spark SQL. It allows users to write streaming jobs using simple batch-like SQL queries that Spark will automatically optimize for efficient streaming execution. This includes handling out-of-order and late data, checkpointing to ensure fault-tolerance, and providing end-to-end exactly-once guarantees. The talk discusses how Structured Streaming represents streaming data as unbounded tables and executes queries incrementally to produce streaming query results.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Anyscale The document discusses the complexities and solutions involved in building robust stream processing applications using Apache Spark's structured streaming. It emphasizes the ease of writing simple, batch-like queries that Spark can automatically convert for streaming use, along with features like fault tolerance, event time processing, and integration with various data sources. The presentation also introduces various APIs and the benefits of streaming ETL, providing an example of data processing from Kafka to Parquet format.

What's new with Apache Spark's Structured Streaming?

What's new with Apache Spark's Structured Streaming?Miklos Christine Structured Streaming in Apache Spark allows users to write streaming applications as batch-style queries on static or streaming data sources. It treats streams as continuous unbounded tables and allows batch queries written on DataFrames/Datasets to be automatically converted into incremental execution plans to process streaming data in micro-batches. This provides a simple yet powerful API for building robust stream processing applications with end-to-end fault tolerance guarantees and integration with various data sources and sinks.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Databricks The document discusses structured streaming with Apache Spark, focusing on its ease of use, scalability, and fault tolerance for stream processing applications. It highlights key features such as data integration from various sources, checkpointing for fault tolerance, and advanced transformations to handle complex workloads and data types. The presentation also covers practical examples of implementing streaming queries, event-time aggregations, and stateful processing with benefits for real-time analytics and decision-making.

Making Structured Streaming Ready for Production

Making Structured Streaming Ready for ProductionDatabricks The document discusses structured streaming with Apache Spark, highlighting its capabilities for building robust stream processing applications and managing complex data. It elaborates on the model of treating streams as unbounded tables and introduces features like event time processing, checkpointing for fault tolerance, and seamless integration with various data sources. Additionally, it covers advanced functionalities such as stateful processing and watermarking for late data handling, showcasing Spark's performance enhancements over traditional ETL processes.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Databricks The document discusses the complexities of building scalable and fault-tolerant stream processing applications using Structured Streaming with Apache Spark. It highlights key features such as handling diverse data formats and storage systems, ensuring exactly-once processing semantics, and leveraging Spark SQL for incremental execution. The presentation covers practical examples and concepts like triggers, output modes, and watermarking to efficiently manage state and late data in streaming queries.

Apache Spark 2.0: A Deep Dive Into Structured Streaming - by Tathagata Das

Apache Spark 2.0: A Deep Dive Into Structured Streaming - by Tathagata Das Databricks The document discusses structured streaming in Apache Spark, focusing on its development, features, and enhancements introduced in Spark 2.0 and beyond. It highlights the transition from traditional streaming apps to a unified model that simplifies the handling of batch and streaming data while offering advanced capabilities like continuous processing and fault tolerance. The presentation emphasizes practical use cases in IoT and machine learning, showcasing how structured streaming improves analytics and query management.

Real-Time Data Pipelines Made Easy with Structured Streaming in Apache Spark.pdf

Real-Time Data Pipelines Made Easy with Structured Streaming in Apache Spark.pdfnilanjan172nsvian Real-Time Data Pipelines Made Easy with Structured Streaming in Apache Spark.pdf

Data Council

Continuous Application with Structured Streaming 2.0

Continuous Application with Structured Streaming 2.0Anyscale The document discusses the advancements in Apache Spark 2.0, particularly focusing on structured streaming which unifies batch, interactive, and streaming queries. It highlights the importance of handling complex streaming requirements such as event-time processing, state management, and real-time analytics through a high-level API. Additionally, it presents use cases, advantages, and practical examples, emphasizing the continuous and incremental execution capabilities of Spark structured streaming.

A Deep Dive into Structured Streaming in Apache Spark

A Deep Dive into Structured Streaming in Apache Spark Anyscale This document provides an overview of Structured Streaming in Apache Spark. It begins with a brief history of streaming in Spark and outlines some of the limitations of the previous DStream API. It then introduces the new Structured Streaming API, which allows for continuous queries to be expressed as standard Spark SQL queries against continuously arriving data. It describes the new processing model and how queries are executed incrementally. It also covers features like event-time processing, windows, joins, and fault-tolerance guarantees through checkpointing and write-ahead logging. Overall, the document presents Structured Streaming as providing a simpler way to perform streaming analytics by allowing streaming queries to be expressed using the same APIs as batch queries.

A Deep Dive into Structured Streaming: Apache Spark Meetup at Bloomberg 2016

A Deep Dive into Structured Streaming: Apache Spark Meetup at Bloomberg 2016 Databricks The document discusses advancements in structured streaming in Apache Spark, highlighting its features such as fault-tolerant state management and unified APIs for batch and streaming data. It outlines the importance of real-time analytics, and various use cases, particularly in IoT device monitoring. The presentation emphasizes the advantages of structured streaming over traditional DStreams, focusing on data processing efficiency and integrating batch, interactive, and streaming workloads seamlessly.

Leveraging Azure Databricks to minimize time to insight by combining Batch an...

Leveraging Azure Databricks to minimize time to insight by combining Batch an...Microsoft Tech Community The document outlines a collaborative analytics platform based on Apache Spark optimized for Azure, specifically Databricks, featuring streamlined workflows for data scientists and analysts. It emphasizes integration with various Azure services and capabilities for real-time data processing, including event-time processing and exactly-once guarantees. The platform's advanced functionalities include support for complex queries, stateful processing, and built-in support for diverse data formats and sources.

Taking Spark Streaming to the Next Level with Datasets and DataFrames

Taking Spark Streaming to the Next Level with Datasets and DataFramesDatabricks Structured Streaming provides a simple way to perform streaming analytics by treating unbounded, continuous data streams similarly to static DataFrames and Datasets. It allows for event-time processing, windowing, joins, and other SQL operations on streaming data. Under the hood, it uses micro-batch processing to incrementally and continuously execute queries on streaming data using Spark's SQL engine and Catalyst optimizer. This allows for high-level APIs as well as end-to-end guarantees like exactly-once processing and fault tolerance through mechanisms like offset tracking and a fault-tolerant state store.

Spark Structured Streaming

Spark Structured Streaming Revin Chalil Spark Structured Streaming is Spark's API for building end-to-end streaming applications. It allows expressing streaming computations as standard SQL queries and executes them continuously as new data arrives. Key features include built-in input sources like Kafka, transformations using DataFrames/SQL, output sinks, triggers to control batching, and checkpointing for fault tolerance. The presentation demonstrated a sample Structured Streaming application reading from Kafka and writing to the console using Spark on AWS.

A Deep Dive into Stateful Stream Processing in Structured Streaming with Tath...

A Deep Dive into Stateful Stream Processing in Structured Streaming with Tath...Databricks The document details stateful stream processing in Apache Spark's Structured Streaming, highlighting its fast, scalable, and fault-tolerant capabilities with high-level APIs. It covers the anatomy of streaming queries, including sources, transformations, and sinks, while emphasizing the importance of state management, watermarking, and fault recovery mechanisms. Additionally, it discusses various operations such as streaming aggregation, deduplication, and user-defined stateful processing using APIs like mapGroupsWithState.

Productizing Structured Streaming Jobs

Productizing Structured Streaming JobsDatabricks The document discusses the operational aspects of productizing structured streaming jobs using Apache Spark, emphasizing testing, monitoring, deploying, and updating these jobs. It outlines strategies for testing with a focus on leveraging Spark's stream test harness, and the importance of maintaining a staging environment to ensure reliable deployments. Additionally, it covers monitoring techniques to track query progress and performance, and deployment considerations for handling multiple streaming sources efficiently.

Deep Dive into Stateful Stream Processing in Structured Streaming with Tathag...

Deep Dive into Stateful Stream Processing in Structured Streaming with Tathag...Databricks Structured Streaming provides stateful stream processing capabilities in Spark SQL through built-in operations like aggregations and joins as well as user-defined stateful transformations. It handles state automatically through watermarking to limit state size by dropping old data. For arbitrary stateful logic, MapGroupsWithState requires explicit state management by the user.

Designing Structured Streaming Pipelines—How to Architect Things Right

Designing Structured Streaming Pipelines—How to Architect Things RightDatabricks The document outlines the architecture and processes of structured streaming using Spark, focusing on its capabilities for distributed stream processing, high throughput, and fault tolerance. It details various streaming query patterns, including ETL processes, stateful aggregations, and change data capture, with examples of handling data from sources like Kafka. The document emphasizes the design patterns for efficient data pipeline development and the importance of latency and throughput considerations in those designs.

Leveraging Azure Databricks to minimize time to insight by combining Batch an...

Leveraging Azure Databricks to minimize time to insight by combining Batch an...Microsoft Tech Community

Ad

More from Databricks (20)

DW Migration Webinar-March 2022.pptx

DW Migration Webinar-March 2022.pptxDatabricks The document discusses migrating a data warehouse to the Databricks Lakehouse Platform. It outlines why legacy data warehouses are struggling, how the Databricks Platform addresses these issues, and key considerations for modern analytics and data warehousing. The document then provides an overview of the migration methodology, approach, strategies, and key takeaways for moving to a lakehouse on Databricks.

Data Lakehouse Symposium | Day 1 | Part 1

Data Lakehouse Symposium | Day 1 | Part 1Databricks The document discusses the concept of a data lakehouse, highlighting the integration of structured, textual, and analog/IOT data. It emphasizes the importance of common identifiers and universal connectors for meaningful analytics across different data types, ultimately aiming to improve healthcare and manufacturing outcomes through effective data analysis. The presentation outlines the challenges of managing diverse data formats and the potential for data-driven insights to enhance quality of life.

Data Lakehouse Symposium | Day 1 | Part 2

Data Lakehouse Symposium | Day 1 | Part 2Databricks The document compares data lakehouses and data warehouses, outlining their similarities and differences. Both serve analytical processing and contain vetted, historical data, but the data lakehouse handles a much larger volume of machine-generated data and features fundamentally different structures from transaction-based data warehouses. Ultimately, they are presented as related yet distinct entities in the realm of data management.

Data Lakehouse Symposium | Day 2

Data Lakehouse Symposium | Day 2Databricks The Data Lakehouse Symposium held in February 2022 discussed the evolution of data management from data warehouses to lakehouses, emphasizing the integration of governance and metadata. It highlighted the challenges companies face in utilizing various types of data, particularly unstructured textual data, and the importance of adding context for effective analysis. The presentation also examined strategies for transforming unstructured data into structured formats to enable better decision-making and analytical processes.

Data Lakehouse Symposium | Day 4

Data Lakehouse Symposium | Day 4Databricks The document discusses the challenges of modern data, analytics, and AI workloads. Most enterprises struggle with siloed data systems that make integration and productivity difficult. The future of data lies with a data lakehouse platform that can unify data engineering, analytics, data warehousing, and machine learning workloads on a single open platform. The Databricks Lakehouse platform aims to address these challenges with its open data lake approach and capabilities for data engineering, SQL analytics, governance, and machine learning.

5 Critical Steps to Clean Your Data Swamp When Migrating Off of Hadoop

5 Critical Steps to Clean Your Data Swamp When Migrating Off of HadoopDatabricks The document outlines the challenges and considerations for migrating from Hadoop to Databricks, emphasizing the complexities of the Hadoop ecosystem and the advantages of a modern cloud-based data architecture. It provides a comprehensive migration plan that includes internal assessments, technical planning, and execution while addressing key topics such as data migration, security, and SQL integration. Specific tools and methodologies for effective transition and enhanced performance in data analytics are also discussed.

Democratizing Data Quality Through a Centralized Platform

Democratizing Data Quality Through a Centralized PlatformDatabricks Zillow's Data Governance Platform team addresses data quality challenges by creating a centralized platform that enhances visibility and standardizes data quality rules. The platform includes self-service capabilities and integrates with data lineage, allowing for built-in alerting and scalable onboarding. Key takeaways emphasize the importance of early alerting, collaboration, and the shared responsibility for maintaining high-quality data to improve decision-making.

Learn to Use Databricks for Data Science

Learn to Use Databricks for Data ScienceDatabricks The document outlines the challenges and workflows involved in data science, emphasizing the need for proper setup and resource management. It highlights the importance of sharing results with stakeholders and describes how Databricks' lakehouse platform simplifies these processes by integrating data sources and providing essential tools for data analysis. Overall, the goal is to help data scientists focus on their core analytical work rather than dealing with setup complexities.

Why APM Is Not the Same As ML Monitoring

Why APM Is Not the Same As ML MonitoringDatabricks The document discusses the distinctions between application performance monitoring (APM) and machine learning (ML) monitoring, emphasizing the unique challenges of ML monitoring, such as the need for intelligent detection and alerting. It outlines the essential components of ML monitoring, including statistical summarization, distribution comparison, and actionable alerts based on model performance. Additionally, it introduces Verta's end-to-end MLOps platform designed to meet the specialized needs of ML monitoring throughout the entire model lifecycle.

The Function, the Context, and the Data—Enabling ML Ops at Stitch Fix

The Function, the Context, and the Data—Enabling ML Ops at Stitch FixDatabricks Elijah Ben Izzy, a Data Platform Engineer at Stitch Fix, discusses building abstractions for machine learning operations to optimize workflows and enhance the separation of concerns between data science and platform engineering. The presentation highlights the importance of a custom-built model envelope for seamless integration and management of ML models, as well as advancements in deployment and inference processes. Future directions include enhanced production monitoring and sophisticated feature integration to further streamline data science workflows.

Stage Level Scheduling Improving Big Data and AI Integration

Stage Level Scheduling Improving Big Data and AI IntegrationDatabricks The document discusses stage-level scheduling and resource allocation in Apache Spark to enhance big data and AI integration. It outlines various resource requirements such as executors, memory, and accelerators, while presenting benefits like improved hardware utilization and simplified application pipelines. Additionally, it introduces the RAPIDS Accelerator for Spark and distributed deep learning with Horovod, emphasizing performance optimizations and future enhancements.

Simplify Data Conversion from Spark to TensorFlow and PyTorch

Simplify Data Conversion from Spark to TensorFlow and PyTorchDatabricks The document discusses the importance of data conversion between Spark and deep learning frameworks like TensorFlow and PyTorch. It highlights key pain points, such as challenges in migrating from single-node to distributed training and the complexity of saving and loading data. Additionally, it introduces the Spark Dataset Converter, which simplifies data handling while training deep learning models and offers best practices for efficient usage.

Scaling your Data Pipelines with Apache Spark on Kubernetes

Scaling your Data Pipelines with Apache Spark on KubernetesDatabricks This document discusses the integration of Apache Spark with Kubernetes on Google Cloud, highlighting its advantages for running data engineering and machine learning workloads within existing infrastructure. It outlines benefits such as improved cost optimization, faster scaling, and enhanced resource management through Google Kubernetes Engine (GKE) and Dataproc, while detailing implementation steps and monitoring options. Additionally, it covers the compatibility with big data ecosystem tools, job execution, and enterprise security features.

Scaling and Unifying SciKit Learn and Apache Spark Pipelines

Scaling and Unifying SciKit Learn and Apache Spark PipelinesDatabricks The document discusses the integration and scaling of AI/ML pipelines using Ray, aiming to unify Scikit-learn and Spark pipelines. Key features include Python functions as computation units, data exchange capabilities, and support for advanced execution strategies. It concludes with contact information for collaboration and emphasizes the importance of feedback from the community.

Sawtooth Windows for Feature Aggregations

Sawtooth Windows for Feature AggregationsDatabricks The document discusses the Sawtooth Windows Zipline, a feature engineering framework focusing on machine learning with structured data. It emphasizes the importance of real-time, stable, and consistent features for model training and serving, while highlighting the challenges of data sources and the intricacies of aggregations. Key topics include model complexity, data quality, and various types of windowed aggregations for efficient data processing.

Redis + Apache Spark = Swiss Army Knife Meets Kitchen Sink

Redis + Apache Spark = Swiss Army Knife Meets Kitchen SinkDatabricks The document discusses the integration of Redis with Apache Spark for managing long-running batch jobs and distributed counters. It outlines the challenges faced in submitting queries and the inefficiencies of existing solutions, proposing a system that utilizes Redis for queuing and job status communication. Key workflows and code views are provided to demonstrate the proposed solutions for efficient query handling and data processing.

Re-imagine Data Monitoring with whylogs and Spark

Re-imagine Data Monitoring with whylogs and SparkDatabricks The document discusses the challenges of monitoring machine learning data, emphasizing how traditional data analysis techniques fall short in addressing issues in ML data pipelines. It introduces the open-source library Whylogs for data logging, highlighting its lightweight profiling methods suitable for large datasets and integration with Apache Spark. Key topics include data quality problems, the need for scalable monitoring, and approaches for logging and analyzing ML data effectively.

Raven: End-to-end Optimization of ML Prediction Queries

Raven: End-to-end Optimization of ML Prediction QueriesDatabricks The document discusses Raven, an optimizer for machine learning prediction queries at Microsoft, focusing on its ability to improve the performance of SQL-based ML operations. It details how Raven integrates with Azure data engines, utilizing techniques like model projection pushdown and model-to-SQL translation to enhance query efficiency. Performance evaluations indicate that Raven significantly outperforms existing ML runtimes in various scenarios, achieving speed increases of up to 44 times compared to traditional approaches.

Processing Large Datasets for ADAS Applications using Apache Spark

Processing Large Datasets for ADAS Applications using Apache SparkDatabricks The document outlines the use of Spark for processing large datasets in automated driving applications, focusing on semantic segmentation and the challenges of moving from prototype to production. It presents the architecture of the system, covering ETL processes, model training, and inference, while addressing design considerations like scaling, security, and governance. Key takeaways emphasize the importance of leveraging cloud-based solutions and effective workflow management to enhance the development of perception software for autonomous vehicles.

Massive Data Processing in Adobe Using Delta Lake

Massive Data Processing in Adobe Using Delta LakeDatabricks The document discusses massive data processing at Adobe using Delta Lake, highlighting various aspects such as data representation, schema evolution, and challenges in data ingestion. It emphasizes the performance benefits of utilizing Delta Lake for handling large-scale data efficiently, while considering issues like schema management and replication lag. Key features like ACID transactions and lazy schema on-read approaches are also outlined to address the complexities of multi-tenant data architecture.

Ad

Recently uploaded (20)

Plooma is a writing platform to plan, write, and shape books your way

Plooma is a writing platform to plan, write, and shape books your wayPlooma Plooma is your all in one writing companion, designed to support authors at every twist and turn of the book creation journey. Whether you're sketching out your story's blueprint, breathing life into characters, or crafting chapters, Plooma provides a seamless space to organize all your ideas and materials without the overwhelm. Its intuitive interface makes building rich narratives and immersive worlds feel effortless.

Packed with powerful story and character organization tools, Plooma lets you track character development and manage world building details with ease. When it’s time to write, the distraction-free mode offers a clean, minimal environment to help you dive deep and write consistently. Plus, built-in editing tools catch grammar slips and style quirks in real-time, polishing your story so you don’t have to juggle multiple apps.

What really sets Plooma apart is its smart AI assistant - analyzing chapters for continuity, helping you generate character portraits, and flagging inconsistencies to keep your story tight and cohesive. This clever support saves you time and builds confidence, especially during those complex, detail packed projects.

Getting started is simple: outline your story’s structure and key characters with Plooma’s user-friendly planning tools, then write your chapters in the focused editor, using analytics to shape your words. Throughout your journey, Plooma’s AI offers helpful feedback and suggestions, guiding you toward a polished, well-crafted book ready to share with the world.

With Plooma by your side, you get a powerful toolkit that simplifies the creative process, boosts your productivity, and elevates your writing - making the path from idea to finished book smoother, more fun, and totally doable.

Get Started here: https://www.plooma.ink/

Generative Artificial Intelligence and its Applications

Generative Artificial Intelligence and its ApplicationsSandeepKS52 The exploration of generative AI begins with an overview of its fundamental concepts, highlighting how these technologies create new content and ideas by learning from existing data. Following this, the focus shifts to the processes involved in training and fine-tuning models, which are essential for enhancing their performance and ensuring they meet specific needs. Finally, the importance of responsible AI practices is emphasized, addressing ethical considerations and the impact of AI on society, which are crucial for developing systems that are not only effective but also beneficial and fair.

DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

DevOps for AI: running LLMs in production with Kubernetes and KubeFlowAarno Aukia Presented by Aarno Aukia on June 4th, 2025, at Kubernetes Community Days KCD New York

Agentic Techniques in Retrieval-Augmented Generation with Azure AI Search

Agentic Techniques in Retrieval-Augmented Generation with Azure AI SearchMaxim Salnikov Discover how Agentic Retrieval in Azure AI Search takes Retrieval-Augmented Generation (RAG) to the next level by intelligently breaking down complex queries, leveraging full conversation history, and executing parallel searches through a new LLM-powered query planner. This session introduces a cutting-edge approach that delivers significantly more accurate, relevant, and grounded answers—unlocking new capabilities for building smarter, more responsive generative AI applications.

Traditional Retrieval-Augmented Generation (RAG) pipelines work well for simple queries—but when users ask complex, multi-part questions or refer to previous conversation history, they often fall short. That’s where Agentic Retrieval comes in: a game-changing advancement in Azure AI Search that brings LLM-powered reasoning directly into the retrieval layer.

This session unveils how agentic techniques elevate your RAG-based applications by introducing intelligent query planning, subquery decomposition, parallel execution, and result merging—all orchestrated by a new Knowledge Agent. You’ll learn how this approach significantly boosts relevance, groundedness, and answer quality, especially for sophisticated enterprise use cases.

Key takeaways:

- Understand the evolution from keyword and vector search to agentic query orchestration

- See how full conversation context improves retrieval accuracy

- Explore measurable improvements in answer relevance and completeness (up to 40% gains!)

- Get hands-on guidance on integrating Agentic Retrieval with Azure AI Foundry and SDKs

- Discover how to build scalable, AI-first applications powered by this new paradigm

Whether you're building intelligent copilots, enterprise Q&A bots, or AI-driven search solutions, this session will equip you with the tools and patterns to push beyond traditional RAG.

Transmission Media. (Computer Networks)

Transmission Media. (Computer Networks)S Pranav (Deepu) INTRODUCTION:TRANSMISSION MEDIA

• A transmission media in data communication is a physical path between the sender and

the receiver and it is the channel through which data can be sent from one location to

another. Data can be represented through signals by computers and other sorts of

telecommunication devices. These are transmitted from one device to another in the

form of electromagnetic signals. These Electromagnetic signals can move from one

sender to another receiver through a vacuum, air, or other transmission media.

Electromagnetic energy mainly includes radio waves, visible light, UV light, and gamma

ra

Open Source Software Development Methods

Open Source Software Development MethodsVICTOR MAESTRE RAMIREZ Open Source Software Development Methods

Shell Skill Tree - LabEx Certification (LabEx)

Shell Skill Tree - LabEx Certification (LabEx)VICTOR MAESTRE RAMIREZ Shell Skill Tree - LabEx Certification (LabEx)

Software Engineering Process, Notation & Tools Introduction - Part 3

Software Engineering Process, Notation & Tools Introduction - Part 3Gaurav Sharma Software Engineering Process, Notation & Tools Introduction

Integrating Survey123 and R&H Data Using FME

Integrating Survey123 and R&H Data Using FMESafe Software West Virginia Department of Transportation (WVDOT) actively engages in several field data collection initiatives using Collector and Survey 123. A critical component for effective asset management and enhanced analytical capabilities is the integration of Geographic Information System (GIS) data with Linear Referencing System (LRS) data. Currently, RouteID and Measures are not captured in Survey 123. However, we can bridge this gap through FME Flow automation. When a survey is submitted through Survey 123 for ArcGIS Portal (10.8.1), it triggers FME Flow automation. This process uses a customized workbench that interacts with a modified version of Esri's Geometry to Measure API. The result is a JSON response that includes RouteID and Measures, which are then applied to the feature service record.

Step by step guide to install Flutter and Dart

Step by step guide to install Flutter and DartS Pranav (Deepu) Flutter is basically Google’s portable user

interface (UI) toolkit, used to build and

develop eye-catching, natively-built

applications for mobile, desktop, and web,

from a single codebase. Flutter is free, open-

sourced, and compatible with existing code. It

is utilized by companies and developers

around the world, due to its user-friendly

interface and fairly simple, yet to-the-point

commands.

Meet You in the Middle: 1000x Performance for Parquet Queries on PB-Scale Dat...

Meet You in the Middle: 1000x Performance for Parquet Queries on PB-Scale Dat...Alluxio, Inc. Alluxio Webinar

June 10, 2025

For more Alluxio Events: https://www.alluxio.io/events/

Speaker:

David Zhu (Engineering Manager @ Alluxio)

Storing data as Parquet files on cloud object storage, such as AWS S3, has become prevalent not only for large-scale data lakes but also as lightweight feature stores for training and inference, or as document stores for Retrieval-Augmented Generation (RAG). However, querying petabyte-to-exabyte-scale data lakes directly from S3 remains notoriously slow, with latencies typically ranging from hundreds of milliseconds to several seconds.

In this webinar, David Zhu, Software Engineering Manager at Alluxio, will present the results of a joint collaboration between Alluxio and a leading SaaS and data infrastructure enterprise that explored leveraging Alluxio as a high-performance caching and acceleration layer atop AWS S3 for ultra-fast querying of Parquet files at PB scale.

David will share:

- How Alluxio delivers sub-millisecond Time-to-First-Byte (TTFB) for Parquet queries, comparable to S3 Express One Zone, without requiring specialized hardware, data format changes, or data migration from your existing data lake.

- The architecture that enables Alluxio’s throughput to scale linearly with cluster size, achieving one million queries per second on a modest 50-node deployment, surpassing S3 Express single-account throughput by 50x without latency degradation.

- Specifics on how Alluxio offloads partial Parquet read operations and reduces overhead, enabling direct, ultra-low-latency point queries in hundreds of microseconds and achieving a 1,000x performance gain over traditional S3 querying methods.

MOVIE RECOMMENDATION SYSTEM, UDUMULA GOPI REDDY, Y24MC13085.pptx

MOVIE RECOMMENDATION SYSTEM, UDUMULA GOPI REDDY, Y24MC13085.pptxMaharshi Mallela Movie recommendation system is a software application or algorithm designed to suggest movies to users based on their preferences, viewing history, or other relevant factors. The primary goal of such a system is to enhance user experience by providing personalized and relevant movie suggestions.

Smart Financial Solutions: Money Lender Software, Daily Pigmy & Personal Loan...

Smart Financial Solutions: Money Lender Software, Daily Pigmy & Personal Loan...Intelli grow Explore innovative tools tailored for modern finance with our Money Lender Software Development, efficient Daily Pigmy Collection Software, and streamlined Personal Loan Software. This presentation showcases how these solutions simplify loan management, boost collection efficiency, and enhance customer experience for NBFCs, microfinance firms, and individual lenders.

FME as an Orchestration Tool - Peak of Data & AI 2025

FME as an Orchestration Tool - Peak of Data & AI 2025Safe Software Processing huge amounts of data through FME can have performance consequences, but as an orchestration tool, FME is brilliant! We'll take a look at the principles of data gravity, best practices, pros, cons, tips and tricks. And of course all spiced up with relevant examples!

GDG Douglas - Google AI Agents: Your Next Intern?

GDG Douglas - Google AI Agents: Your Next Intern?felipeceotto Presentation done at the GDG Douglas event for June 2025.

A first look at Google's new Agent Development Kit.

Agent Development Kit is a new open-source framework from Google designed to simplify the full stack end-to-end development of agents and multi-agent systems.

Async-ronizing Success at Wix - Patterns for Seamless Microservices - Devoxx ...

Async-ronizing Success at Wix - Patterns for Seamless Microservices - Devoxx ...Natan Silnitsky In a world where speed, resilience, and fault tolerance define success, Wix leverages Kafka to power asynchronous programming across 4,000 microservices. This talk explores four key patterns that boost developer velocity while solving common challenges with scalable, efficient, and reliable solutions:

1. Integration Events: Shift from synchronous calls to pre-fetching to reduce query latency and improve user experience.

2. Task Queue: Offload non-critical tasks like notifications to streamline request flows.

3. Task Scheduler: Enable precise, fault-tolerant delayed or recurring workflows with robust scheduling.

4. Iterator for Long-running Jobs: Process extensive workloads via chunked execution, optimizing scalability and resilience.

For each pattern, we’ll discuss benefits, challenges, and how we mitigate drawbacks to create practical solutions

This session offers actionable insights for developers and architects tackling distributed systems, helping refine microservices and adopting Kafka-driven async excellence.

Smadav Pro 2025 Rev 15.4 Crack Full Version With Registration Key

Smadav Pro 2025 Rev 15.4 Crack Full Version With Registration Keyjoybepari360 ➡️ 🌍📱👉COPY & PASTE LINK👉👉👉

https://crackpurely.site/smadav-pro-crack-full-version-registration-key/

Who will create the languages of the future?

Who will create the languages of the future?Jordi Cabot Will future languages be created by language engineers?

Can you "vibe" a DSL?

In this talk, we will explore the changing landscape of language engineering and discuss how Artificial Intelligence and low-code/no-code techniques can play a role in this future by helping in the definition, use, execution, and testing of new languages. Even empowering non-tech users to create their own language infrastructure. Maybe without them even realizing.

Women in Tech: Marketo Engage User Group - June 2025 - AJO with AWS

Women in Tech: Marketo Engage User Group - June 2025 - AJO with AWSBradBedford3 Creating meaningful, real-time engagement across channels is essential to building lasting business relationships. Discover how AWS, in collaboration with Deloitte, set up one of Adobe's first instances of Journey Optimizer B2B Edition to revolutionize customer journeys for B2B audiences.

This session will share the use cases the AWS team has the implemented leveraging Adobe's Journey Optimizer B2B alongside Marketo Engage and Real-Time CDP B2B to deliver unified, personalized experiences and drive impactful engagement.

They will discuss how they are positioning AJO B2B in their marketing strategy and how AWS is imagining AJO B2B and Marketo will continue to work together in the future.

Whether you’re looking to enhance customer journeys or scale your B2B marketing efforts, you’ll leave with a clear view of what can be achieved to help transform your own approach.

Speakers:

Britney Young Senior Technical Product Manager, AWS

Erine de Leeuw Technical Product Manager, AWS

Writing Continuous Applications with Structured Streaming Python APIs in Apache Spark

- 1. https://dbricks.co/tutorial-pydata-miami 1 Enter your cluster name Use DBR 5.0 and Apache Spark 2.4, Scala 2.11 Choose Python 3 WiFi: CIC or CIC-A

- 2. Writing Continuous Applications with Structured Streaming in PySpark Jules S. Damji PyData, Miami, FL Jan 11, 2019

- 3. I have used Apache Spark 2.x Before…

- 4. Apache Spark Community & DeveloperAdvocate@ Databricks DeveloperAdvocate@ Hortonworks Software engineering @Sun Microsystems, Netscape, @Home, VeriSign, Scalix, Centrify, LoudCloud/Opsware, ProQuest Program Chair Spark + AI Summit https://www.linkedin.com/in/dmatrix @2twitme

- 5. DATABRICKS WORKSPACE Databricks Delta ML Frameworks DATABRICKS CLOUD SERVICE DATABRICKS RUNTIME Reliable & Scalable Simple & Integrated Databricks Unified Analytics Platform APIs Jobs Models Notebooks Dashboards End to end ML lifecycle

- 6. Agenda for Today’s Talk • What and Why Apache Spark • Why Streaming Applications are Difficult • What’s Structured Streaming • Anatomy of a Continunous Application • Tutorials & Demo • Q & A

- 7. How to think about data in 2019 - 2020 “Data is the new oil"

- 8. What’s Apache Spark & Why

- 9. What is Apache Spark? • General cluster computing engine that extends MapReduce • Rich set of APIs and libraries • Unified Engine • Large community: 1000+ orgs, clusters up to 8000 nodes Apache Spark, Spark and Apache are trademarks of the Apache Software Foundation SQLStreaming ML Graph … DL

- 10. Unique Thing about Spark • Unification: same engine and same API for diverse use cases • Streaming, batch, or interactive • ETL, SQL, machine learning, or graph

- 11. Why Unification?

- 12. Why Unification? • MapReduce: a general engine for batch processing

- 13. MapReduce Generalbatch processing Pregel Dremel Millwheel Drill Giraph ImpalaStorm S4 . . . Specialized systems for newworkloads Big Data Systems Yesterday Hard to manage, tune, deployHard to combine in pipelines

- 14. MapReduce Generalbatch processing Unified engine Big Data Systems Today ? Pregel Dremel Millwheel Drill Giraph ImpalaStorm S4 . . . Specialized systems for newworkloads

- 15. Faster, Easier to Use, Unified 15 First Distributed Processing Engine Specialized Data Processing Engines Unified Data Processing Engine

- 16. Benefits of Unification 1. Simpler to use and operate 2. Code reuse: e.g. only write monitoring, FT, etc once 3. New apps that span processing types: e.g. interactive queries on a stream, online machine learning

- 17. An Analogy Specialized devices Unified device New applications

- 18. Why Streaming Applications are Inherently Difficult?

- 20. Complexities in stream processing COMPLEX DATA Diverse data formats (json, avro, txt, csv, binary, …) Data can be dirty, late, out-of-order COMPLEX SYSTEMS Diverse storage systems (Kafka, S3, Kinesis, RDBMS, …) System failures COMPLEX WORKLOADS Combining streaming with interactive queries Machine learning