Parallel programming model

- 1. Presented by ILLURU PHANI KUMAR (ME) Embedded &IOT 17MEI503 PRN:170861384003 Parallel Programming Models

- 2. The four basic types of Parallel Processing Models depending upon their level of abstraction are : Machine models : the lowest level of abstraction and consists of a description of hardware and operating system, e.g., the registers or the input and output buffers Architectural models : the next level of abstraction and include the interconnection network of parallel platforms, memory organization, synchronous or asynchronous processing, and execution mode of single instructions by SIMD or MIMD. Models for Parallel Systems

- 3. Computational models : the next higher level of abstraction and reflecting the time needed for the execution of an algorithm on the resources of a computer given by an architectural model. Thus, a computational model provides an analytical method for designing and evaluating algorithms. Programming models : the next higher level of abstraction and describes a parallel computing system in terms of the semantics of the programming language or programming environment used.

- 4. There are several criteria by which the parallel programming models can differ: the level of parallelism which is exploited in the parallel execution (instruction level, statement level, procedural level, or parallel loops); the implicit or user-defined explicit specification of parallelism; the way how parallel program parts are specified; the execution mode of parallel units (SIMD or SPMD, synchronous or asynchronous); Criteria of Parallel Programming Model

- 5. A parallel program specifies computations which can be executed in parallel. Depending on the programming model, the computations can be defined at different levels. A computation can be : A sequence of instructions performing arithmetic or logical operations A sequence of statements where each statement may capture several instructions. A function or method invocation which typically consists of several statements. Many parallel programming models provide the concept of parallel loops; the iterations of a parallel loop are independent of each other and can therefore be executed in parallel. Computations in a Parallel Program

- 6. Parallelization of Programs Reduce the program execution time as much as possible by using multiple processors or cores. Parallelization is performed in several steps: Decomposition of the computations: The computations of the sequential algorithm are decomposed into tasks, and dependencies between the tasks are determined. Identified at different execution levels: instruction level, data or functional parallelism Decomposition can be done at program start (static decomposition), or created dynamically during program execution. The goal of task decomposition is therefore to generate enough tasks to keep all cores busy at all times during program execution.

- 7. Assignment of tasks to processes or threads: The main goal of the assignment step is to assign the tasks such that a good load balancing results, i.e., each process or thread should have about the same number of computations to perform. For shared address space, it is useful to assign two tasks which work on the same data set to the same thread, since this leads to a good cache usage. The assignment of tasks to processes or threads is also called scheduling. For a static decomposition, the assignment can be done in the initialization phase at program start (static scheduling). But scheduling can also be done during program execution (dynamic scheduling). Parallelization of Programs

- 8. Mapping of processes or threads to physical processes or cores: In the simplest case, each process or thread is mapped to a separate processor or core. If less cores than threads are available, multiple threads must be mapped to a single core. This mapping can be done by the operating system, but it could also be supported by program statements. The main goal of the mapping step is to get an equal utilization of the processors or cores while keeping communication between the processors as small as possible. Parallelization of Programs

- 10. Levels of Parallelism The computations performed by a given program provide opportunities for parallel execution at different levels: Instruction level Statement level Loop level Function level.

- 11. Parallelism at Instruction Level Multiple instructions of a program can be executed in parallel at the same time, if they are independent of each other. In particular, the existence of one of the following data dependencies between instructions I1 and I2 inhibits their parallel execution: Flow dependency (also called true dependency): There is a flow dependency from instruction I1 to I2, if I1 computes a result value in a register or variable which is then used by I2 as operand. Anti-dependency: There is an anti-dependency from I1 to I2, if I1 uses a register or variable as operand which is later used by I2 to store the result of a computation. Output dependency: There is an output dependency from I1 to I2, if I1 and I2 use the same register or variable to store the result of a computation.

- 12. In all three cases, instructions I1 and I2 cannot be executed in opposite order or in parallel, since this would result in an erroneous computation: For the flow dependence, I2 would use an old value as operand if the order is reversed. For the anti-dependence, I1 would use the wrong value computed by I2 as operand, if the order is reversed. For the output dependence, the subsequent instructions would use a wrong value for R1, if the order is reversed. Parallelism at Instruction Level

- 13. The dependencies between instructions can be illustrated by a data dependency graph.

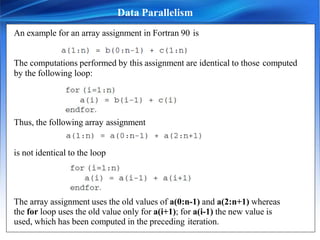

- 14. Data Parallelism In many programs, the same operation must be applied to different elements of a larger data structure. In the simplest case, this could be an array structure. If the operations to be applied are independent of each other, this could be used for parallel execution: The elements of the data structure are distributed evenly among the processors and each processor performs the operation on its assigned elements. Data parallelism and can be used in many areas, especially in scientific computing. It can be exploited for SIMD, MIMD, SPMD models It can be exploited for both shared and distributed address spaces.

- 15. An example for an array assignment in Fortran 90 is The computations performed by this assignment are identical to those computed by the following loop: Thus, the following array assignment is not identical to the loop The array assignment uses the old values of a(0:n-1) and a(2:n+1) whereas the for loop uses the old value only for a(i+1); for a(i-1) the new value is used, which has been computed in the preceding iteration. Data Parallelism

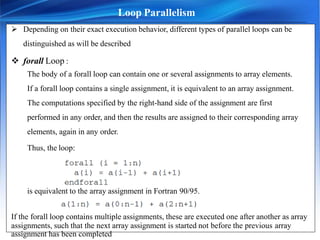

- 16. Loop Parallelism Many algorithms perform computations by iteratively traversing a large data structure. The iterative traversal is usually expressed by a loop provided by imperative programming languages. A loop is usually executed sequentially which means that the computations of the ith iteration are started not before all computations of the (i − 1)th iteration are completed. This execution scheme is called sequential loop in the following. If there are no dependencies between the iterations of a loop, the iterations can be executed in arbitrary order, and they can also be executed in parallel by different processors. Such a loop is then called a parallel loop.

- 17. Depending on their exact execution behavior, different types of parallel loops can be distinguished as will be described forall Loop : The body of a forall loop can contain one or several assignments to array elements. If a forall loop contains a single assignment, it is equivalent to an array assignment. The computations specified by the right-hand side of the assignment are first performed in any order, and then the results are assigned to their corresponding array elements, again in any order. Thus, the loop: is equivalent to the array assignment in Fortran 90/95. If the forall loop contains multiple assignments, these are executed one after another as array assignments, such that the next array assignment is started not before the previous array Loop Parallelism assignment has been completed.

- 18. dopar Loop : The body of a dopar loop may not only contain one or several assignments to array elements, but also other statements and even other loops. The iterations of a dopar loop are executed by multiple processors in parallel. Each processor executes its iterations in any order one after another. The instructions of each iteration are executed sequentially in program order, using the variable values of the initial state before the dopar loop is started. Thus, variable updates performed in one iteration are not visible to the other iterations. After all iterations have been executed, the updates of the single iterations are combined and a new global state is computed. If two different iterations update the same variable, one of the two updates becomes visible in the new global state, resulting in a non- deterministic behavior. The overall effect of forall and dopar loops with the same loop body may differ if the loop body contains more than one statement. Loop Parallelism

- 19. In the sequential for loop, the computation of b(i) uses the value of a(i-1) that hasbeen computed in the preceding iteration and the value of a(i+1) valid before the loop. The two statements in the forall loop are treated as separate array assignments. Thus, the computation of b(i) uses for both a(i-1) and a(i+1) the new value computed by the first statement. In the dopar loop, updates in one iteration are not visible to the other iterations. Since the computation of b(i) does not use the value of a(i) that is computed in the same iteration, the old values are used for a(i-1) and a(i+1). The following table shows an example for the values computed: Loop Parallelism

- 20. Functional Parallelism Task parallelism or Functional parallelism : Many sequential programs contain independent program parts can be executed in parallel. Independent program parts called Task- single statements, basic blocks, loops, or function calls. Task parallelism can be represented as a task graph where the nodes are the tasks and the edges represent the dependencies between the tasks. Tasks must be scheduled such that the dependencies between them is fulfilled. Typically, a task cannot be started before all tasks which it depends on are finished. The goal of a scheduling algorithm is to find a schedule that minimizes the overall execution time. Static and dynamic scheduling algorithms can be used.

- 21. Static Scheduling: A static scheduling algorithm determines the assignment of tasks to processors deterministically at program start or at compile time. The assignment may be based on an estimation of the execution time of the tasks, which might be obtained by runtime measurements or an analysis of the computational structure of the tasks. Dynamic Scheduling A dynamic scheduling algorithm determines the assignment of tasks to processors during program execution. Therefore, the schedule generated can be adapted to the observed execution times of the tasks. Static and Dynamic Scheduling

- 22. Explicit and Implicit Representation of Parallelism Parallel programming models can also be distinguished depending on whether the available parallelism, including the partitioning into tasks and specification of communication and synchronization, is represented explicitly in the program or not. The development of parallel programs is facilitated if no explicit representation is included, but in this case an advanced compiler must be available to produce efficient parallel programs. On the other hand, an explicit representation is more effort for program development, but the compiler can be much simpler.

- 23. Implicit Parallelism For the programmer, the simplest model results, when no explicit representation of parallelism is required. In this case, the program is mainly a specification of the computations to be performed, but no parallel execution order is given. In such a model, the programmer can concentrate on the details of the (sequential) algorithm to be implemented and does not need to care about the organization of the parallel execution. There are two approaches to it: Parallelizing compilers and Functional programming languages

- 24. Automatic Parallelization : Transforms a sequential program into an efficient parallel program by using appropriate compiler techniques. To generate the parallel program, the compiler must first analyze the dependencies between the computations to be performed. Based on this analysis, the computation can then be assigned to processors for execution such that a good load balancing results. Moreover, for a distributed address space, the amount of communication should be reduced as much as possible. In practice, automatic parallelization is difficult to perform because dependence analysis is difficult for pointer-based computations or indirect addressing and because the execution time of function calls or loops with unknown bounds is difficult to predict at compile time. Therefore, automatic parallelization often produces parallel programs with unsatisfactory runtime behavior and, hence, this approach is not often used in practice. Parallelizing compilers

- 25. Functional programming languages Describes the computations of a program as the evaluation of mathematical functions without side effects; this means the evaluation of a function has the only effect that the output value of the function is computed. Thus, calling a function twice with the same input argument values always produces the same output value. Higher-order functions can be used; these are functions which use other functions as arguments and yield functions as arguments. Iterative computations are usually expressed by recursion. The most popular functional programming language is Haskell. The advantage of using functional languages would be that new language constructs are not necessary to enable a parallel execution as is the case for non-functional programming languages.

- 26. Explicit Parallelism with Implicit Distribution This Model requires an explicit representation of parallelism in the program, but which do not demand an explicit distribution and assignment to processes or threads. Correspondingly, no explicit communication or synchronization is required. For the compiler, this approach has the advantage that the available degree of parallelism is specified in the program and does not need to be retrieved by a complicated data dependence analysis. This class of programming models includes parallel programming languages which extend sequential programming languages by parallel loops with independent iterations. The parallel loops specify the available parallelism, but the exact assignments of loop iterations to processors is not fixed. This approach has been taken by the library OpenMP where parallel loops can be specified by compiler directives. Another example is HPF(High Performance Fortran).

- 27. Explicit Distribution A third class of parallel programming models requires not only an explicit representation of parallelism, but also an explicit partitioning into tasks or an explicit assignment of work units to threads. The mapping to processors or cores as well as communication between processors is implicit and does not need to be specified. An example for this class is the BSP (bulk synchronous parallel) programming model.

- 28. Explicit Assignment to Processors This model requires an explicit partitioning into tasks or threads and also need an explicit assignment to processors. But the communication between the processors does not need to be specified. An example for this class is the coordination language Linda which replaces the usual point-to-point communication between processors by a tuple space concept. A tuple space provides a global pool of data in which data can be stored and fromwhich data can be retrieved. The following three operations are provided to access the tuple space: in: read and remove a tuple from the tuple space; read: read a tuple from the tuple space without removing it; out: write a tuple in the tuple space.

- 29. Explicit Communication and Synchronization The last class comprises programming models in which the programmer must specify all details of a parallel execution, including the required communication and synchronization operations. This has the advantage that a standard compiler can be used and that the programmer can control the parallel execution explicitly with all the details. This usually provides efficient parallel programs, but it also requires a significant amount of work for program development. Programming models belonging to this class are message-passing models like MPI.

- 30. Parallel Programming Patterns Parallel programming patterns provide specific coordination structures for processes or threads Creation of Processes or Threads Static :. In the static case, a fixed number of processes or threads is created at program start. These processes or threads exist during the entire execution of the parallel program and are terminated when program execution is finished. Dynamic : An alternative approach is to allow creation and termination of processes or threads dynamically at arbitrary points during program execution. At program start, a single process or thread is active and executes the main program.

- 31. Fork–Join : Using the concept, an existing thread T creates a number of child threads T1, . . . , Tm with a fork statement. The child threads work in parallel and execute a given program part or function. The creating parent thread T can execute the same or a different program part or function and can then wait for the termination of T1, . . . , Tm by using a joincall. The fork–join concept can be provided as a language construct or as a library function. The fork–join concept is, for example, used in OpenMP for the creation of threads executing a parallel loop. The spawn and exit operations provided by message-passing systems like MPI-2 , provide a similar action pattern as fork–join.

- 32. Parbegin–Parend A similar pattern as fork–join for thread creation and termination is provided by the parbegin–parend construct which is sometimes also called cobegin–coend. The construct allows the specification of a sequence of statements, including function calls, to be executed by a set of processors in parallel. When an executing thread reaches a parbegin–parend construct, a set of threads is created and the statements of the construct are assigned to these threads for execution. The statements following the parbegin–parend construct are executed not before all these threads have finished their work and have been terminated.

- 33. SPMD and SIMD : In the SIMD approach, the single instructions are executed synchronously by the different threads on different data. Synchronization is implicit In the SPMD approach, the different threads work asynchronously with each other and different threads may execute different parts of the parallel program. Need explicit synchronization .

Editor's Notes

- #4: A parallel programming model specifies the programmer’s view on parallel computer by defining how the programmer can code an algorithm. This view is influenced by the architectural design and the language, compiler, or the runtime libraries and, thus, there exist many different parallel programming models even for the same architecture.

- #5: the modes and pattern of communication among computing units for the exchange of information (explicit communication or shared variables); synchronization mechanisms to organize computation and communication between parallel units. Each parallel programming language or environment implements the criteria given above and may use a combination of the possibilities.

![Chorus - Distributed Operating System [ case study ]](https://cdn.slidesharecdn.com/ss_thumbnails/chorus-171117082036-181113144303-thumbnail.jpg?width=560&fit=bounds)

![Présentation_gestion[1] [Autosaved].pptx](https://cdn.slidesharecdn.com/ss_thumbnails/prsentationgestion1autosaved-250608153959-37fabfd3-thumbnail.jpg?width=560&fit=bounds)