Big data processing with Apache Spark and Oracle Database


Slides from the session on big data processing with Apache Spark and Oracle Database, presented at the BG OUG Autumn conference 2019, held in Riu Pravetz.



![Spark streaming. To define a Spark stream you need to create a JavaStreamingContext instance: SparkConf conf = new SparkConf().setMaster("local[4]").setAppName("CustomerItems"); JavaStreamingContext jssc = new JavaStreamingContext(conf, Durations.seconds(1));](https://image.slidesharecdn.com/bigdataprocessingwithapachesparkandoracledatabase-191202195344/85/Big-data-processing-with-Apache-Spark-and-Oracle-Database-24-320.jpg)
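In the slide's code, `local[4]` runs Spark locally with four worker threads, and `Durations.seconds(1)` sets the batch interval: data received in each one-second window is processed together as one batch. The sketch below is plain Java, not Spark code, and only models that grouping step. The `Event` class, the `toBatches` helper, and the sample arrival times are hypothetical names and values introduced for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MicroBatchSketch {

    /** Hypothetical event: a payload and its arrival time in milliseconds (assumed non-negative). */
    public static final class Event {
        final long arrivalMillis;
        final String payload;

        public Event(long arrivalMillis, String payload) {
            this.arrivalMillis = arrivalMillis;
            this.payload = payload;
        }
    }

    /**
     * Groups events into consecutive windows of batchMillis each,
     * keyed by the window's start time. Windows that received no
     * events get no entry in the result.
     */
    public static Map<Long, List<String>> toBatches(List<Event> events, long batchMillis) {
        Map<Long, List<String>> batches = new TreeMap<>();
        for (Event e : events) {
            // Round the arrival time down to the start of its window.
            long batchStart = (e.arrivalMillis / batchMillis) * batchMillis;
            batches.computeIfAbsent(batchStart, k -> new ArrayList<>()).add(e.payload);
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Event> events = Arrays.asList(
            new Event(100, "a"), new Event(950, "b"),
            new Event(1200, "c"), new Event(3100, "d"));
        // A 1000 ms window mirrors the Durations.seconds(1) interval on the slide.
        System.out.println(toBatches(events, 1000));
        // prints {0=[a, b], 1000=[c], 3000=[d]}
    }
}
```

With a real `JavaStreamingContext`, the grouping is done by Spark itself; the application only declares the interval, as on the slide.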



More Related Content

What's hot (20)

Deep Dive into Stateful Stream Processing in Structured Streaming with Tathag...

Deep Dive into Stateful Stream Processing in Structured Streaming with Tathag...Databricks Structured Streaming provides stateful stream processing capabilities in Spark SQL through built-in operations like aggregations and joins as well as user-defined stateful transformations. It handles state automatically through watermarking to limit state size by dropping old data. For arbitrary stateful logic, MapGroupsWithState requires explicit state management by the user.

How to Actually Tune Your Spark Jobs So They Work

How to Actually Tune Your Spark Jobs So They WorkIlya Ganelin This document summarizes a USF Spark workshop that covers Spark internals and how to optimize Spark jobs. It discusses how Spark works with partitions, caching, serialization and shuffling data. It provides lessons on using less memory by partitioning wisely, avoiding shuffles, using the driver carefully, and caching strategically to speed up jobs. The workshop emphasizes understanding Spark and tuning configurations to improve performance and stability.

Introduction to Apache Spark

Introduction to Apache SparkSamy Dindane Apache Spark is a fast distributed data processing engine that runs in memory. It can be used with Java, Scala, Python and R. Spark uses resilient distributed datasets (RDDs) as its main data structure. RDDs are immutable and partitioned collections of elements that allow transformations like map and filter. Spark is 10-100x faster than Hadoop for iterative algorithms and can be used for tasks like ETL, machine learning, and streaming.

Understanding and Improving Code Generation

Understanding and Improving Code GenerationDatabricks Code generation is integral to Spark’s physical execution engine. When implemented, the Spark engine creates optimized bytecode at runtime improving performance when compared to interpreted execution. Spark has taken the next step with whole-stage codegen which collapses an entire query into a single function.

Apache Spark in Depth: Core Concepts, Architecture & Internals

Apache Spark in Depth: Core Concepts, Architecture & InternalsAnton Kirillov Slides cover Spark core concepts of Apache Spark such as RDD, DAG, execution workflow, forming stages of tasks and shuffle implementation and also describes architecture and main components of Spark Driver. The workshop part covers Spark execution modes , provides link to github repo which contains Spark Applications examples and dockerized Hadoop environment to experiment with

Apache Spark overview

Apache Spark overviewDataArt This document provides an overview of Apache Spark, including how it compares to Hadoop, the Spark ecosystem, Resilient Distributed Datasets (RDDs), transformations and actions on RDDs, the directed acyclic graph (DAG) scheduler, Spark Streaming, and the DataFrames API. Key points covered include Spark's faster performance versus Hadoop through its use of memory instead of disk, the RDD abstraction for distributed collections, common RDD operations, and Spark's capabilities for real-time streaming data processing and SQL queries on structured data.

Real-Time Spark: From Interactive Queries to Streaming

Real-Time Spark: From Interactive Queries to StreamingDatabricks This document summarizes Michael Armbrust's presentation on real-time Spark. It discusses:

1. The goals of real-time analytics including having the freshest answers as fast as possible while keeping the answers up to date.

2. Spark 2.0 introduces unified APIs for SQL, DataFrames and Datasets to make developing real-time analytics simpler with powerful yet simple APIs.

3. Structured streaming allows running the same SQL queries on streaming data to continuously aggregate data and update outputs, unifying batch, interactive, and streaming queries into a single API.

How we got to 1 millisecond latency in 99% under repair, compaction, and flus...

How we got to 1 millisecond latency in 99% under repair, compaction, and flus...ScyllaDB Scylla is an open source reimplementation of Cassandra which performs up to 10X with drop in-replacement compatibility. At ScyllaDB, performance matters but even more importantly, stable performance under any circumstances.

A key factor for our consistent performance is our reliance on userspace schedulers. Scheduling in userspace allows the application, the database in our case to have better control on the different priorities each task has and to provide an SLA to selected operations. Scylla used to have an I/O scheduler and recently won a CPU scheduler.

At ScyllaDB, we make architectural decisions that provide not only low latencies but consistently low latencies at higher percentiles. This begins with our choice of language and key architectural decisions such as not using the Linux page-cache, and is fulfilled by autonomous database control, a set of algorithms, which guarantees that the system will adapt to changes in the workload. In the last year, we have made changes to Scylla that provide latencies that are consistent in every percentile. In this talk, Dor Laor will recap those changes and discuss what ScyllaDB is doing in the future.

Squirreling Away $640 Billion: How Stripe Leverages Flink for Change Data Cap...

Squirreling Away $640 Billion: How Stripe Leverages Flink for Change Data Cap...Flink Forward Flink Forward San Francisco 2022.

Being in the payments space, Stripe requires strict correctness and freshness guarantees. We rely on Flink as the natural solution for delivering on this in support of our Change Data Capture (CDC) infrastructure. We heavily rely on CDC as a tool for capturing data change streams from our databases without critically impacting database reliability, scalability, and maintainability. Data derived from these streams is used broadly across the business and powers many of our critical financial reporting systems totalling over $640 Billion in payment volume annually. We use many components of Flink’s flexible DataStream API to perform aggregations and abstract away the complexities of stream processing from our downstreams. In this talk, we’ll walk through our experience from the very beginning to what we have in production today. We’ll share stories around the technical details and trade-offs we encountered along the way.

by

Jeff Chao

Top 5 Mistakes to Avoid When Writing Apache Spark Applications

Top 5 Mistakes to Avoid When Writing Apache Spark ApplicationsCloudera, Inc. The document discusses 5 common mistakes people make when writing Spark applications:

1) Not properly sizing executors for memory and cores.

2) Having shuffle blocks larger than 2GB which can cause jobs to fail.

3) Not addressing data skew which can cause joins and shuffles to be very slow.

4) Not properly managing the DAG to minimize shuffles and stages.

5) Classpath conflicts from mismatched dependencies causing errors.

Gcp data engineer

Gcp data engineerNarendranath Reddy T The document provides an overview of the Google Cloud Platform (GCP) Data Engineer certification exam, including the content breakdown and question format. It then details several big data technologies in the GCP ecosystem such as Apache Pig, Hive, Spark, and Beam. Finally, it covers various GCP storage options including Cloud Storage, Cloud SQL, Datastore, BigTable, and BigQuery, outlining their key features, performance characteristics, data models, and use cases.

Kafka internals

Kafka internalsDavid Groozman This presentation contains our understanding of Kafka internals, its performance. We also present Nest Logic Active Data Profiling system.

Programming in Spark using PySpark

Programming in Spark using PySpark Mostafa This session covers how to work with PySpark interface to develop Spark applications. From loading, ingesting, and applying transformation on the data. The session covers how to work with different data sources of data, apply transformation, python best practices in developing Spark Apps. The demo covers integrating Apache Spark apps, In memory processing capabilities, working with notebooks, and integrating analytics tools into Spark Applications.

Optimizing Delta/Parquet Data Lakes for Apache Spark

Optimizing Delta/Parquet Data Lakes for Apache SparkDatabricks Matthew Powers gave a talk on optimizing data lakes for Apache Spark. He discussed community goals like standardizing method signatures. He advocated for using Spark helper libraries like spark-daria and spark-fast-tests. Powers explained how to build better data lakes using techniques like partitioning data on relevant fields to skip data and speed up queries significantly. He also covered modern Scala libraries, incremental updates, compacting small files, and using Delta Lakes to more easily update partitioned data lakes over time.

Apache Spark 101

Apache Spark 101Abdullah Çetin ÇAVDAR This document provides an overview of Apache Spark, including its goal of providing a fast and general engine for large-scale data processing. It discusses Spark's programming model, components like RDDs and DAGs, and how to initialize and deploy Spark on a cluster. Key aspects covered include RDDs as the fundamental data structure in Spark, transformations and actions, and storage levels for caching data in memory or disk.

Rds data lake @ Robinhood

Rds data lake @ Robinhood BalajiVaradarajan13 This document provides an overview and deep dive into Robinhood's RDS Data Lake architecture for ingesting data from their RDS databases into an S3 data lake. It discusses their prior daily snapshotting approach, and how they implemented a faster change data capture pipeline using Debezium to capture database changes and ingest them incrementally into a Hudi data lake. It also covers lessons learned around change data capture setup and configuration, initial table bootstrapping, data serialization formats, and scaling the ingestion process. Future work areas discussed include orchestrating thousands of pipelines and improving downstream query performance.

Memory Management in Apache Spark

Memory Management in Apache SparkDatabricks Memory management is at the heart of any data-intensive system. Spark, in particular, must arbitrate memory allocation between two main use cases: buffering intermediate data for processing (execution) and caching user data (storage). This talk will take a deep dive through the memory management designs adopted in Spark since its inception and discuss their performance and usability implications for the end user.

Apache Spark Core – Practical Optimization

Apache Spark Core – Practical OptimizationDatabricks Properly shaping partitions and your jobs to enable powerful optimizations, eliminate skew and maximize cluster utilization. We will explore various Spark Partition shaping methods along with several optimization strategies including join optimizations, aggregate optimizations, salting and multi-dimensional parallelism.

Intro to Cassandra

Intro to CassandraDataStax Academy Archaic database technologies just don't scale under the always on, distributed demands of modern IOT, mobile and web applications. We'll start this Intro to Cassandra by discussing how its approach is different and why so many awesome companies have migrated from the cold clutches of the relational world into the warm embrace of peer to peer architecture. After this high-level opening discussion, we'll briefly unpack the following:

• Cassandra's internal architecture and distribution model

• Cassandra's Data Model

• Reads and Writes

Node.js and the MySQL Document Store

Node.js and the MySQL Document StoreRui Quelhas This document provides an overview of using Node.js with the MySQL Document Store. It discusses how MySQL can be used as a NoSQL database with the new MySQL Document Store API. Key components that enable this include the X DevAPI, Router, X Plugin, and X Protocol. The API allows for schemaless data storage and retrieval using JSON documents stored in MySQL collections. Documents can be added, retrieved, modified, and removed from collections using the MySQL Connector/Node.js driver.

Similar to Big data processing with Apache Spark and Oracle Database (20)

Building highly scalable data pipelines with Apache Spark

Building highly scalable data pipelines with Apache SparkMartin Toshev This document provides a summary of Apache Spark, including:

- Spark is a framework for large-scale data processing across clusters that is faster than Hadoop MapReduce because it relies more on RAM and minimizes disk I/O.

- Spark transformations operate on resilient distributed datasets (RDDs) to manipulate data, while actions return results to the driver program.

- Spark can receive data from various sources such as files, databases, and sockets through its data source APIs, and can process both batch and streaming data.

- Spark Streaming divides streaming data into micro-batches, exposed through the DStream (discretized stream) abstraction, and integrates with messaging systems like Kafka. Structured Streaming is a newer API that works on DataFrames/Datasets.
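The split between transformations and actions can be illustrated without a cluster. The following is only a single-JVM analogy, assuming `java.util.stream` as a stand-in for an RDD pipeline: intermediate stream operations are lazy, much like Spark transformations, and nothing runs until a terminal operation (the counterpart of an action) is called. The class name `LazyPipelineSketch` and the counter are hypothetical and are not part of any Spark API.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Single-JVM analogy only: java.util.stream stands in for an RDD pipeline.
final class LazyPipelineSketch {

    // Counts how many elements the "transformation" has actually processed.
    static final AtomicInteger mapped = new AtomicInteger();

    // Builds the pipeline without running it (like chaining transformations).
    static Stream<Integer> transform(List<Integer> input) {
        return input.stream()
                .filter(n -> n % 2 == 0)   // keep even numbers
                .map(n -> {                // square them and record the work done
                    mapped.incrementAndGet();
                    return n * n;
                });
    }

    public static void main(String[] args) {
        Stream<Integer> pipeline = transform(List.of(1, 2, 3, 4, 5, 6));
        System.out.println("processed before the action: " + mapped.get());

        // The terminal operation plays the role of an action such as collect().
        List<Integer> result = pipeline.collect(Collectors.toList());
        System.out.println("result: " + result);
        System.out.println("processed after the action: " + mapped.get());
    }
}
```

In Spark itself the same shape would be expressed with RDD or Dataset methods, and the result of the action would be returned to the driver program.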

Spark Concepts - Spark SQL, Graphx, Streaming

Petr Zapletal: This document provides an overview of Apache Spark modules including Spark SQL, GraphX, and Spark Streaming. Spark SQL allows querying structured data using SQL, GraphX provides APIs for graph processing, and Spark Streaming enables scalable stream processing. The document discusses Resilient Distributed Datasets (RDDs), SchemaRDDs, querying data with SQLContext, GraphX property graphs and algorithms, StreamingContext, and input/output operations in Spark Streaming.

Spark from the Surface

Josi Aranda: Apache Spark is an open-source distributed processing engine that is up to 100 times faster than Hadoop for processing data stored in memory and 10 times faster for data stored on disk. It provides high-level APIs in Java, Scala, Python and SQL and supports batch processing, streaming, and machine learning. Spark runs on Hadoop, Mesos, Kubernetes or standalone and can access diverse data sources using its core abstraction called resilient distributed datasets (RDDs).

Apache Spark Components

Girish Khanzode: Spark Streaming allows processing live data streams using small batch sizes to provide low latency results. It provides a simple API to implement complex stream processing algorithms across hundreds of nodes. Spark SQL allows querying structured data using SQL or the Hive query language and integrates with Spark's batch and interactive processing. MLlib provides machine learning algorithms and pipelines to easily apply ML to large datasets. GraphX extends Spark with an API for graph-parallel computation on property graphs.
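The idea of processing a live stream in small batches can be sketched in plain Java. This is a minimal illustration, not Spark code: the `Event` type, the `toBatches` helper and the one-second window are assumptions made for the example, and a real Spark Streaming job would let the streaming context form the batches.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration of micro-batching; no Spark classes are involved.
final class MicroBatchSketch {

    // Hypothetical event: an arrival time in milliseconds plus a payload.
    static final class Event {
        final long arrivalMillis;
        final String payload;

        Event(long arrivalMillis, String payload) {
            this.arrivalMillis = arrivalMillis;
            this.payload = payload;
        }
    }

    // Groups events into consecutive windows of batchMillis, keyed by window start.
    // Assumes non-negative arrival times and a positive batch length.
    static Map<Long, List<String>> toBatches(List<Event> events, long batchMillis) {
        Map<Long, List<String>> batches = new TreeMap<>();
        for (Event e : events) {
            long windowStart = (e.arrivalMillis / batchMillis) * batchMillis;
            batches.computeIfAbsent(windowStart, k -> new ArrayList<>()).add(e.payload);
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Event> events = List.of(
                new Event(100, "a"), new Event(900, "b"),
                new Event(1200, "c"), new Event(2500, "d"));
        // One-second batches; each batch would then be processed as a small job.
        toBatches(events, 1000).forEach((start, payloads) ->
                System.out.println(start + " ms: " + payloads));
    }
}
```

A shorter batch length lowers latency but produces more, smaller jobs, which is the trade-off behind choosing a batch interval.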

Processing Large Data with Apache Spark -- HasGeek

Venkata Naga Ravi: Apache Spark presentation at HasGeek Fifth Elephant

https://fifthelephant.talkfunnel.com/2015/15-processing-large-data-with-apache-spark

Covering Big Data Overview, Spark Overview, Spark Internals and its supported libraries

Jump Start on Apache Spark 2.2 with Databricks

Anyscale: Apache Spark 2.0 and subsequent releases of Spark 2.1 and 2.2 have laid the foundation for many new features and functionality. Its three main themes (easier, faster, and smarter) are pervasive in its unified and simplified high-level APIs for structured data.

In this introductory part lecture and part hands-on workshop, you’ll learn how to apply some of these new APIs using Databricks Community Edition. In particular, we will cover the following areas:

Agenda:

• Overview of Spark Fundamentals & Architecture

• What’s new in Spark 2.x

• Unified APIs: SparkSessions, SQL, DataFrames, Datasets

• Introduction to DataFrames, Datasets and Spark SQL

• Introduction to Structured Streaming Concepts

• Four Hands-On Labs

Apache Spark Architecture | Apache Spark Architecture Explained | Apache Spar...

Simplilearn: This presentation on Spark architecture explains what Apache Spark is, its essential features, and its different components. Here, you will learn about Spark Core, Spark SQL, Spark Streaming, Spark MLlib, and GraphX. You will understand how Spark processes an application and runs it on a cluster with the help of its architecture. Finally, you will perform a demo on Apache Spark. So, let's get started with Apache Spark Architecture.

YouTube Video: https://www.youtube.com/watch?v=CF5Ewk0GxiQ

What is this Big Data Hadoop training course about?

The Big Data Hadoop and Spark developer course has been designed to impart in-depth knowledge of Big Data processing using Hadoop and Spark. The course is packed with real-life projects and case studies to be executed in the CloudLab.

What are the course objectives?

Simplilearn’s Apache Spark and Scala certification training is designed to:

1. Advance your expertise in the Big Data Hadoop Ecosystem

2. Help you master essential Apache Spark skills, such as Spark Streaming, Spark SQL, machine learning programming, GraphX programming and Shell Scripting Spark

3. Help you land a Hadoop developer job requiring Apache Spark expertise by giving you a real-life industry project coupled with 30 demos

What skills will you learn?

By completing this Apache Spark and Scala course you will be able to:

1. Understand the limitations of MapReduce and the role of Spark in overcoming these limitations

2. Understand the fundamentals of the Scala programming language and its features

3. Explain and master the process of installing Spark as a standalone cluster

4. Develop expertise in using Resilient Distributed Datasets (RDD) for creating applications in Spark

5. Master Structured Query Language (SQL) using SparkSQL

6. Gain a thorough understanding of Spark streaming features

7. Master and describe the features of Spark ML programming and GraphX programming

Who should take this Scala course?

1. Professionals aspiring for a career in the field of real-time big data analytics

2. Analytics professionals

3. Research professionals

4. IT developers and testers

5. Data scientists

6. BI and reporting professionals

7. Students who wish to gain a thorough understanding of Apache Spark

Learn more at https://www.simplilearn.com/big-data-and-analytics/apache-spark-scala-certification-training

Glint with Apache Spark

Venkata Naga Ravi: Spark Overview, Cluster Architecture, Elements, Spark Stack, Spark Streaming

Meetup Details of my presentation:

http://www.meetup.com/lspe-in/events/212250542/

http://www.meetup.com/devops-bangalore/events/222155834/

Unified Big Data Processing with Apache Spark (QCON 2014)

Databricks: This document discusses Apache Spark, a fast and general engine for big data processing. It describes how Spark generalizes the MapReduce model through its Resilient Distributed Datasets (RDDs) abstraction, which allows efficient sharing of data across parallel operations. This unified approach allows Spark to support multiple types of processing, like SQL queries, streaming, and machine learning, within a single framework. The document also outlines ongoing developments like Spark SQL and improved machine learning capabilities.

Apache Spark for Beginners

Anirudh: Spark is an open-source cluster computing framework that provides high performance for both batch and streaming data processing. It addresses limitations of other distributed processing systems like MapReduce by providing in-memory computing capabilities and supporting a more general programming model. Spark Core provides basic functionality and serves as the foundation for higher-level modules like Spark SQL, MLlib, GraphX, and Spark Streaming. RDDs are Spark's basic abstraction for distributed datasets, allowing immutable distributed collections to be operated on in parallel. Key benefits of Spark include speed through in-memory computing, ease of use through its APIs, and a unified engine supporting multiple workloads.

Apache Spark in Industry

Dorian Beganovic:

1. Apache Spark is an open source cluster computing framework for large-scale data processing. It is compatible with Hadoop and provides APIs for SQL, streaming, machine learning, and graph processing.

2. Over 3000 companies use Spark, including Microsoft, Uber, Pinterest, and Amazon. It can run on standalone clusters, EC2, YARN, and Mesos.

3. Spark SQL, Streaming, and MLlib allow for SQL queries, streaming analytics, and machine learning at scale using Spark's APIs which are inspired by Python/R data frames and scikit-learn.

Apache Spark Overview

Dharmjit Singh: This presentation is the first in a series of Apache Spark tutorials and covers the basics of the Spark framework. Subscribe to my YouTube channel for more updates: https://www.youtube.com/channel/UCNCbLAXe716V2B7TEsiWcoA

APACHE SPARK.pptx

DeepaThirumurugan: Spark is a fast, general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R for distributed tasks including SQL, streaming, and machine learning. Spark improves on MapReduce by keeping data in-memory, allowing iterative algorithms to run faster than disk-based approaches. Resilient Distributed Datasets (RDDs) are Spark's fundamental data structure, acting as a fault-tolerant collection of elements that can be operated on in parallel.

Apache Spark Overview @ ferret

Andrii Gakhov: Apache Spark is a fast and general engine for large-scale data processing. It was originally developed in 2009 and is now supported by Databricks. Spark provides APIs in Java, Scala, Python and can run on Hadoop, Mesos, standalone or in the cloud. It provides high-level APIs like Spark SQL, MLlib, GraphX and Spark Streaming for structured data processing, machine learning, graph analytics and stream processing.

Apache Spark - A High Level overview

Karan Alang: Apache Spark is an open-source unified analytics engine for large-scale data processing. It provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general computation graphs for data analysis. Some key components of Apache Spark include Resilient Distributed Datasets (RDDs), DataFrames, Datasets, and Spark SQL for structured data processing. Spark also supports streaming, machine learning via MLlib, and graph processing with GraphX.

Spark Saturday: Spark SQL & DataFrame Workshop with Apache Spark 2.3

Databricks: This introductory workshop is aimed at data analysts & data engineers new to Apache Spark and shows them how to analyze big data with Spark SQL and DataFrames.

In these partly instructor-led, partly self-paced labs, we will cover Spark concepts and you’ll do labs for Spark SQL and DataFrames in Databricks Community Edition.

Toward the end, you’ll get a glimpse into the newly minted Databricks Developer Certification for Apache Spark: what to expect & how to prepare for it.

* Apache Spark Basics & Architecture

* Spark SQL

* DataFrames

* Brief Overview of Databricks Certified Developer for Apache Spark

SparkPaper

Suraj Thapaliya: Apache Spark is an open source framework for large-scale data processing. It was originally developed at UC Berkeley and provides fast, easy-to-use tools for batch and streaming data. Spark features include SQL queries, machine learning, streaming, and graph processing. It is up to 100 times faster than Hadoop for iterative algorithms and interactive queries due to its in-memory processing capabilities. Spark uses Resilient Distributed Datasets (RDDs) that allow data to be reused across parallel operations.

Spark after Dark by Chris Fregly of Databricks

Data Con LA: Spark After Dark is a mock dating site that uses the latest Spark libraries, AWS Kinesis, Lambda Architecture, and Probabilistic Data Structures to generate dating recommendations.

There will be 5+ demos covering everything from basic data ETL to advanced data processing including Alternating Least Squares Machine Learning/Collaborative Filtering and PageRank Graph Processing.

There is heavy emphasis on Spark Streaming and AWS Kinesis.

Watch the video here

https://www.youtube.com/watch?v=g0i_d8YT-Bs

Spark After Dark - LA Apache Spark Users Group - Feb 2015

Chris Fregly: Spark After Dark is a mock dating site that uses the latest Spark libraries including Spark SQL, BlinkDB, Tachyon, Spark Streaming, MLlib, and GraphX to generate high-quality dating recommendations for its members and blazing fast analytics for its operators.

We begin with a brief overview of Spark, Spark Libraries, and Spark Use Cases. In addition, we'll discuss the modern day Lambda Architecture that combines real-time and batch processing into a single system. Lastly, we present best practices for monitoring and tuning a highly-available Spark and Spark Streaming cluster.

There will be many live demos covering everything from basic topics such as ETL and data ingestion to advanced topics such as streaming, sampling, approximations, machine learning, textual analysis, and graph processing.


More from Martin Toshev (20)

Jdk 10 sneak peek

Martin Toshev: JDK 10 is the first release with a 6-month cadence. It includes new features like local variable type inference with the var keyword and Application Class-Data Sharing to reduce memory usage. Performance improvements were made like parallel full GC for G1. JDK 10 also adds experimental support for Graal as a Java-based JIT compiler and improves container awareness. Future releases will continue adding new features from projects like Panama, Valhalla, and Loom.
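Local variable type inference is easiest to see in a few lines of code. The sketch below assumes JDK 10 or later; the class `VarSketch` and its sample data are hypothetical. The compiler infers each `var` from the initializer, so the variables remain statically typed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Requires JDK 10+: 'var' is only allowed for local variables with an initializer.
final class VarSketch {

    // Formats each map entry as "key -> value".
    static List<String> describe(Map<String, Integer> values) {
        var lines = new ArrayList<String>();          // inferred as ArrayList<String>
        for (var entry : values.entrySet()) {         // inferred as Map.Entry<String, Integer>
            var line = entry.getKey() + " -> " + entry.getValue();  // inferred as String
            lines.add(line);
        }
        return lines;
    }

    public static void main(String[] args) {
        var values = new TreeMap<String, Integer>();  // TreeMap keeps keys sorted
        values.put("a", 1);
        values.put("b", 2);
        describe(values).forEach(System.out::println);
    }
}
```

Fields, method parameters and return types still need explicit types, which keeps inference local to a method body.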

Semantic Technology In Oracle Database 12c

Martin Toshev: Presentation from the BG OUG Autumn conference, November 2017 at Pravetz, Bulgaria.

Demos:

SPARQL/SPARUL

=============

Using Virtuoso/Blazegraph as a triple store

// insert triple

INSERT {

GRAPH <data.bgoug.online/graph> {

<http://data.bgoug.online/people/MartinToshev>

<http://data.bgoug.online/attends>

<http://data.bgoug.online/events/100>

}

}

// query triple

SELECT ?s ?p ?o FROM <data.bgoug.online/graph> WHERE { ?s ?p ?o }

// insert another record

INSERT {

GRAPH <data.bgoug.online/graph> {

<http://data.bgoug.online/people/IvanIvanov>

<http://data.bgoug.online/attends>

<http://data.bgoug.online/events/100>

}

}

// try to insert triple multiple times

// delete triples for Ivan Ivanov

DELETE {GRAPH <data.bgoug.online/graph> {<http://data.bgoug.online/people/IvanIvanov> ?p ?o} }

WHERE { <http://data.bgoug.online/people/IvanIvanov> ?p ?o }

// show in blazegraph (use INSERT DATA) for INSERT

Oracle Semantic technology

==========================

-- create tablespace (under sys)

CREATE TABLESPACE rdf_tblspace

DATAFILE 'D:\software\Oracle12c\oradata\SampeDB\rdf_tblspace.dat' SIZE 1024M REUSE

AUTOEXTEND ON NEXT 256M MAXSIZE UNLIMITED

SEGMENT SPACE MANAGEMENT AUTO;

EXECUTE SEM_APIS.CREATE_SEM_NETWORK('rdf_tblspace');

-- create semantic tables and do SQL queries

CREATE TABLE conference_rdf_table (id NUMBER, triple SDO_RDF_TRIPLE_S);

EXECUTE SEM_APIS.CREATE_SEM_MODEL('conference', 'conference_rdf_table', 'triple');

INSERT INTO conference_rdf_table VALUES (1,

SDO_RDF_TRIPLE_S ('conference','<http://data.bgoug.online/people/MartinToshev>',

'<http://data.bgoug.online/attends>','<http://data.bgoug.online/events/100>'));

SELECT SEM_APIS.GET_MODEL_ID('conference') AS conference FROM DUAL;

SELECT SEM_APIS.IS_TRIPLE(

'conference','<http://data.bgoug.online/people/MartinToshev>',

'<http://data.bgoug.online/attends>','<http://data.bgoug.online/events/100>') as is_triple from DUAL;

SELECT c.triple.GET_SUBJECT() AS subject,

c.triple.GET_PROPERTY() AS property,

c.triple.GET_OBJECT() AS object

FROM conference_rdf_table c;

SELECT x attendee, y event

FROM TABLE(SEM_MATCH(

'{?x <http://data.bgoug.online/attends> ?y}',

SEM_Models('conference'),

null,

SEM_ALIASES(SEM_ALIAS('','<http://data.bgoug.online/conference>')),

null));

Practical security In a modular world

Martin Toshev: This document discusses security aspects of Java modules and compares them to OSGi bundles. It explains that the Java module system brings improved security while fitting into the existing security architecture. Modules introduce another layer of access control and stronger encapsulation for application code. Both modules and bundles define protection domains and can be signed, but modules lack OSGi's notion of local bundle permissions. The new module system enhances security while modularizing the Java platform.

Java 9 Security Enhancements in Practice

Martin Toshev: This document discusses security enhancements in Java 9, including TLS and DTLS support. TLS 1.0-1.2 and the upcoming TLS 1.3 are supported via the JSSE API. DTLS 1.0-1.2 is also now supported to provide security for unreliable transports like UDP. The TLS Application-Layer Protocol Negotiation extension allows identifying the application protocol during the TLS handshake. Other enhancements include OCSP stapling, PKCS12 as the default keystore, and SHA-3 hash algorithm support.
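Two of the listed enhancements can be tried with the standard library alone. The sketch below assumes JDK 9 or later and the default security providers; the class `Java9SecuritySketch` and its helper are hypothetical. It computes a SHA-3 digest through `MessageDigest` and prints the default keystore type, which can differ if the `keystore.type` security property has been changed.

```java
import java.nio.charset.StandardCharsets;
import java.security.KeyStore;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Requires JDK 9+, where the SHA3-* message digests were added.
final class Java9SecuritySketch {

    // Returns the SHA3-256 digest of the text as lowercase hex.
    static String sha3Hex(String text) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA3-256");
            byte[] hash = digest.digest(text.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder();
            for (byte b : hash) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // Thrown on runtimes without a SHA-3 provider (for example JDK 8).
            throw new IllegalStateException("SHA3-256 is not available", e);
        }
    }

    public static void main(String[] args) {
        System.out.println("SHA3-256: " + sha3Hex("hello"));
        // Reads the keystore.type security property of the running JVM.
        System.out.println("default keystore type: " + KeyStore.getDefaultType());
    }
}
```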

Java 9 sneak peek

Martin Toshev: Slides for the "Java 9 sneak peek" session.

Code samples: https://github.com/martinfmi/java_9_sneak_peek

Writing Stored Procedures in Oracle RDBMS

Martin Toshev: This document discusses writing Java stored procedures with Oracle RDBMS. It covers the differences between PL/SQL and Java stored procedures, how to write Java stored procedures, manage them, and new features in Oracle Database 12c. Key points include: Java procedures use a JVM inside Oracle RDBMS, writing procedures involves loading Java classes and mapping methods, and managing procedures includes debugging, monitoring the JVM, and setting compiler options.

Spring RabbitMQ

Martin Toshev: This document provides an overview of Spring RabbitMQ, which is a framework for integrating Java applications with the RabbitMQ message broker. It discusses messaging basics and RabbitMQ concepts like exchanges, queues, bindings and message routing. It then summarizes how Spring RabbitMQ can be used to configure RabbitMQ infrastructure like connections, templates, listeners and administrators either directly in Java code or using Spring configuration. It also briefly mentions how Spring Integration and Spring Boot can be used to build messaging applications on RabbitMQ.

Security Architecture of the Java platform

Martin Toshev: The document discusses the evolution of the Java security model from JDK 1.0 to recent versions. It started with a simple sandbox model separating trusted and untrusted code. Over time, features like applet signing, fine-grained permissions, JAAS roles and principals, and module-based security were added to enhance the security and flexibility of granting access to untrusted code. The model aims to safely execute code from various sources while preventing unauthorized access.

Oracle Database 12c Attack Vectors

Martin Toshev: This presentation covers attack vectors against Oracle Database 12c. It begins with real-world examples of attacks, such as privilege escalation exploits. It then discusses potential attack vectors, including those originating from unauthorized users, authorized users with limited privileges, and SYSDBA users. Finally, it outlines approaches for discovering new vulnerabilities and recommends tools for testing Oracle database security.

JVM++: The Graal VM

Martin Toshev: Presentation on the Graal VM at the BG OUG (http://www.bgoug.org/) summer conference, 3-5 June 2016, Borovetz, Bulgaria

RxJS vs RxJava: Intro

Martin Toshev: Introductory presentation for the Clash of Technologies: RxJS vs RxJava event organized by SoftServe @ betahouse (17.01.2015). Comparison document with questions & answers available here: https://docs.google.com/document/d/1VhuXJUcILsMSP4_6pCCXBP0X5lEVTsmLivKHcUkFvFY/edit#.

Security Architecture of The Java Platform

Martin Toshev: The document summarizes the evolution of the Java security model from JDK 1.0 to the current version. It discusses how the security model started with a simple trusted/untrusted code separation and gradually evolved to support signed applets, fine-grained access control, and role-based access using JAAS. It also discusses how the security model will be applied to modules in future versions. Additionally, it covers some of the key security APIs available outside the sandbox like JCA, PKI, JSSE, and best practices for secure coding.

Spring RabbitMQ

Martin Toshev: This document provides an overview of Spring RabbitMQ. It discusses messaging basics and RabbitMQ concepts like exchanges, queues, bindings. It then summarizes the Spring AMQP and Spring Integration frameworks for integrating RabbitMQ in Spring applications. Spring AMQP provides the RabbitAdmin, listener container and RabbitTemplate for declaring and interacting with RabbitMQ components. The document contains code examples for configuring RabbitMQ and consuming/producing messages using Spring AMQP.

Writing Stored Procedures with Oracle Database 12c

Martin Toshev: This document discusses writing Java stored procedures with Oracle Database 12c. It begins by comparing PL/SQL and Java stored procedures, noting that both can be executed directly in the database and recompiled when source code changes. It then covers writing Java stored procedures by loading Java classes into the database, mapping Java methods to PL/SQL functions/procedures, and invoking them. Managing procedures includes monitoring with JMX and debugging with JDBC. New features in Oracle 12c include support for multiple JDK versions, JNDI, and improved debugging support.
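The load, map and invoke steps can be sketched with a single static method. This is a minimal illustration and not code from the slides: the class `GreeterProcedure`, the function name `greet` and the sample call are hypothetical. The class is kept package-private here so the snippet compiles in any file; Oracle's own examples declare such classes `public`.

```java
// Hypothetical class. On the database side it would typically be loaded with
// the loadjava utility and published through a call specification such as:
//
//   CREATE OR REPLACE FUNCTION greet(name VARCHAR2) RETURN VARCHAR2
//   AS LANGUAGE JAVA
//   NAME 'GreeterProcedure.greet(java.lang.String) return java.lang.String';
//
// after which it can be invoked from SQL:
//
//   SELECT greet('BG OUG') FROM DUAL;
class GreeterProcedure {

    // A method published to PL/SQL must be public and static.
    public static String greet(String name) {
        // A SQL NULL argument arrives as a Java null reference.
        if (name == null || name.isEmpty()) {
            return "Hello!";
        }
        return "Hello, " + name + "!";
    }

    // Lets the logic be checked outside the database before loading the class.
    public static void main(String[] args) {
        System.out.println(greet("BG OUG"));
        System.out.println(greet(null));
    }
}
```

Keeping the method free of database-specific types makes it possible to unit test the logic in a normal JVM first.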

Concurrency Utilities in Java 8

Martin Toshev: This document discusses concurrency utilities introduced in Java 8 including parallel tasks, parallel streams, parallel array operations, CompletableFuture, ConcurrentHashMap, scalable updatable variables, and StampedLock. It provides examples and discusses when and how to properly use these utilities as well as potential performance issues. Resources for further reading on Java concurrency are also listed.
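A few of these utilities fit into one short sketch. The class `ConcurrencySketch` and its methods are hypothetical examples, not taken from the slides: a parallel stream combined with `ConcurrentHashMap.merge`, a `LongAdder` as a scalable updatable variable, and a two-step `CompletableFuture` chain.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;

final class ConcurrencySketch {

    // Counts word occurrences from a parallel stream; merge() updates each key atomically.
    static Map<String, Integer> countWords(List<String> words) {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        words.parallelStream().forEach(w -> counts.merge(w, 1, Integer::sum));
        return counts;
    }

    // Accumulates from several threads; LongAdder reduces contention compared to one atomic.
    static long parallelSum(int[] values) {
        LongAdder adder = new LongAdder();
        Arrays.stream(values).parallel().forEach(adder::add);
        return adder.sum();
    }

    // Runs a computation asynchronously, transforms its result, then waits for it.
    static int asyncLengthDoubled(String text) {
        return CompletableFuture.supplyAsync(text::length)
                .thenApply(len -> len * 2)
                .join();
    }

    public static void main(String[] args) {
        System.out.println(countWords(Arrays.asList("spark", "oracle", "spark")));
        System.out.println(parallelSum(new int[] {1, 2, 3, 4}));
        System.out.println(asyncLengthDoubled("spark"));
    }
}
```

For inputs this small the parallel versions are likely slower than sequential code; they pay off only when the per-element work or the data volume is large enough to outweigh the coordination cost.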

The RabbitMQ Message Broker

Martin Toshev: RabbitMQ is an open source message broker that implements the AMQP protocol. It provides various messaging patterns using different exchange types and supports clustering for scalability and high availability. Administration of RabbitMQ includes managing queues, exchanges, bindings and other components. Integrations exist for protocols like STOMP, MQTT and frameworks like Spring, while security features include authentication, authorization, and SSL/TLS encryption.

Security Architecture of the Java Platform (BG OUG, Plovdiv, 13.06.2015)

Martin Toshev: Presentation on the security architecture of the Java platform held during a BG OUG conference, June 2015

Modularity of The Java Platform Javaday (http://javaday.org.ua/)

Martin Toshev: Project Jigsaw aims to provide modularity for the Java platform by defining modules for the JDK and restructuring it. This will address problems caused by the current monolithic design and dependency mechanism. OSGi also provides modularity on top of Java by defining bundles and a runtime. Project Penrose explores interoperability between Jigsaw and OSGi modules.

Writing Java Stored Procedures in Oracle 12c

Martin Toshev: The session discusses the inner workings of the Oracle Aurora JVM, the process of writing and maintaining Java stored procedures, and the new features in Oracle Database 12c.

KDB database (EPAM tech talks, Sofia, April, 2015)

Martin Toshev: KDB is an in-memory, column-oriented database that provides high performance for large volumes of real-time and historical data. It is used widely in the financial industry. KDB supports the Q programming language for querying and manipulating data, and can be deployed in a distributed environment. The Java API provides simple connection and query methods to access a KDB database. KDB is well-suited for use cases involving capturing market data feeds and analyzing FIX messages.


👉 Learn more: https://www.rejigdigital.com/blog/continuous-monitoring-prevent-blowouts-well-control-issues/

Trends Artificial Intelligence - Mary Meeker

Trends Artificial Intelligence - Mary MeekerClive Dickens Mary Meeker’s 2024 AI report highlights a seismic shift in productivity, creativity, and business value driven by generative AI. She charts the rapid adoption of tools like ChatGPT and Midjourney, likening today’s moment to the dawn of the internet. The report emphasizes AI’s impact on knowledge work, software development, and personalized services—while also cautioning about data quality, ethical use, and the human-AI partnership. In short, Meeker sees AI as a transformative force accelerating innovation and redefining how we live and work.

National Fuels Treatments Initiative: Building a Seamless Map of Hazardous Fu...

National Fuels Treatments Initiative: Building a Seamless Map of Hazardous Fu...Safe Software The National Fuels Treatments Initiative (NFT) is transforming wildfire mitigation by creating a standardized map of nationwide fuels treatment locations across all land ownerships in the United States. While existing state and federal systems capture this data in diverse formats, NFT bridges these gaps, delivering the first truly integrated national view. This dataset will be used to measure the implementation of the National Cohesive Wildland Strategy and demonstrate the positive impact of collective investments in hazardous fuels reduction nationwide. In Phase 1, we developed an ETL pipeline template in FME Form, leveraging a schema-agnostic workflow with dynamic feature handling intended for fast roll-out and light maintenance. This was key as the initiative scaled from a few to over fifty contributors nationwide. By directly pulling from agency data stores, oftentimes ArcGIS Feature Services, NFT preserves existing structures, minimizing preparation needs. External mapping tables ensure consistent attribute and domain alignment, while robust change detection processes keep data current and actionable. Now in Phase 2, we’re migrating pipelines to FME Flow to take advantage of advanced scheduling, monitoring dashboards, and automated notifications to streamline operations. Join us to explore how this initiative exemplifies the power of technology, blending FME, ArcGIS Online, and AWS to solve a national business problem with a scalable, automated solution.

Domino IQ – Was Sie erwartet, erste Schritte und Anwendungsfälle

Domino IQ – Was Sie erwartet, erste Schritte und Anwendungsfällepanagenda Webinar Recording: https://www.panagenda.com/webinars/domino-iq-was-sie-erwartet-erste-schritte-und-anwendungsfalle/

HCL Domino iQ Server – Vom Ideenportal zur implementierten Funktion. Entdecken Sie, was es ist, was es nicht ist, und erkunden Sie die Chancen und Herausforderungen, die es bietet.

Wichtige Erkenntnisse

- Was sind Large Language Models (LLMs) und wie stehen sie im Zusammenhang mit Domino iQ

- Wesentliche Voraussetzungen für die Bereitstellung des Domino iQ Servers

- Schritt-für-Schritt-Anleitung zur Einrichtung Ihres Domino iQ Servers

- Teilen und diskutieren Sie Gedanken und Ideen, um das Potenzial von Domino iQ zu maximieren

Agentic AI: Beyond the Buzz- LangGraph Studio V2

Agentic AI: Beyond the Buzz- LangGraph Studio V2Shashikant Jagtap Presentation given at the LangChain community meetup London

https://lu.ma/9d5fntgj

Coveres

Agentic AI: Beyond the Buzz

Introduction to AI Agent and Agentic AI

Agent Use case and stats

Introduction to LangGraph

Build agent with LangGraph Studio V2

Improving Developer Productivity With DORA, SPACE, and DevEx

Improving Developer Productivity With DORA, SPACE, and DevExJustin Reock Ready to measure and improve developer productivity in your organization?

Join Justin Reock, Deputy CTO at DX, for an interactive session where you'll learn actionable strategies to measure and increase engineering performance.

Leave this session equipped with a comprehensive understanding of developer productivity and a roadmap to create a high-performing engineering team in your company.

Azure vs AWS Which Cloud Platform Is Best for Your Business in 2025

Azure vs AWS Which Cloud Platform Is Best for Your Business in 2025Infrassist Technologies Pvt. Ltd.

Big data processing with Apache Spark and Oracle Database

- 1. Big data processing with Apache Spark and Oracle database Martin Toshev

- 2. Who am I Software consultant (CoffeeCupConsulting) BG JUG board member (http://jug.bg) (BG JUG is a 2018 Oracle Duke’s choice award winner)

- 3. Agenda • Apache Spark from an eagle’s eye • Apache Spark capabilities • Using Oracle RDBMS as a Spark datasource

- 4. Apache Spark from an Eagle’s eye

- 5. Highlights • A framework for large-scale distributed data processing • Originally in Scala but extended with Java, Python and R • One of the most contributed open source/Apache/GitHub projects with over 1400 contributors

- 6. Spark vs MapReduce • Spark has been developed in order to address the shortcomings of the MapReduce programming model • In particular MapReduce is unsuitable for: – real-time processing (suitable for batch processing of present data) – operations not limited to the key-value format of data – large data on a network – online transaction processing – graph processing – sequential program execution

- 7. Spark vs Hadoop • Spark is faster as it depends more on RAM usage and tries to minimize disk IO (on the storage system) • Spark however can still use Hadoop: – as a storage engine (HDFS) – as a compute engine (MapReduce or Hadoop YARN) • Spark has pluggable storage and compute engine architecture

- 8. Spark components Spark Framework: Spark Core, Spark SQL, Spark Streaming, MLlib, GraphX

- 9. Spark architecture (diagram components: Spark application (JAR), SparkContext (driver), cluster manager, three worker nodes, input data sources, output data sources)

- 11. Spark datasets • The building block of Spark are RDDs (Resilient Distributed Datasets) • They are immutable collections of objects spread across a Spark cluster and stored in RAM or on disk • Created by means of distributed transformations • Rebuilt on failure of a Spark node

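- 12. Spark datasets • The DataFrame API is a higher-level abstraction built on top of RDDs; it was introduced in Spark 1.3 and unified with the Dataset API in Spark 2.0 • The Dataset API combines the typed, object-oriented style of RDDs with the optimized execution of DataFrames (in Java, a DataFrame is a Dataset<Row>) • The DataFrame API is preferred over RDDs due to improved performance and more advanced operations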

- 13. Spark datasets List<Item> items = …; SparkConf configuration = new SparkConf().setAppName("ItemsManager").setMaster("local"); JavaSparkContext context = new JavaSparkContext(configuration); JavaRDD<Item> itemsRDD = context.parallelize(items);

- 14. Spark transformations map: itemsRDD.map(i -> { i.setName("phone"); return i; }); filter: itemsRDD.filter(i -> i.getName().contains("phone")); flatMap: itemsRDD.flatMap(i -> Arrays.asList(i, i).iterator()); union: itemsRDD.union(newItemsRDD); intersection: itemsRDD.intersection(newItemsRDD); distinct: itemsRDD.distinct(); cartesian: itemsRDD.cartesian(otherDatasetRDD);

- 15. Spark transformations groupByKey: pairItemsRDD = itemsRDD.mapToPair(i -> new Tuple2<>(i.getType(), i)); modifiedPairItemsRDD = pairItemsRDD.groupByKey(); reduceByKey: pairItemsRDD = itemsRDD.mapToPair(o -> new Tuple2<>(o.getType(), o)); modifiedPairItemsRDD = pairItemsRDD.reduceByKey((o1, o2) -> new Item(o1.getType(), o1.getCount() + o2.getCount(), o1.getUnitPrice())); • Other transformations include aggregateByKey, sortByKey, join, cogroup …
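The difference between the two keyed transformations above can be sketched in plain Java. This is only a model of the semantics on a local list, not the Spark API: the `Item` class here is a hypothetical stand-in for the one on the slides, and `Collectors.groupingBy` / `Collectors.toMap` play the roles of `groupByKey` and `reduceByKey`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Plain-Java model of pair-RDD semantics; this is NOT the Spark API.
final class KeyedAggregationSketch {

    // Hypothetical stand-in for the Item class used on the slides.
    static final class Item {
        final String type;
        final int count;

        Item(String type, int count) {
            this.type = type;
            this.count = count;
        }
    }

    // Like groupByKey: every value for a key is kept and collected into a list.
    static Map<String, List<Integer>> groupCountsByType(List<Item> items) {
        return items.stream().collect(Collectors.groupingBy(
                i -> i.type, TreeMap::new,
                Collectors.mapping(i -> i.count, Collectors.toList())));
    }

    // Like reduceByKey: values for a key are merged pairwise with an associative function.
    static Map<String, Integer> sumCountsByType(List<Item> items) {
        return items.stream().collect(Collectors.toMap(
                i -> i.type, i -> i.count, Integer::sum, TreeMap::new));
    }

    public static void main(String[] args) {
        List<Item> items = Arrays.asList(
                new Item("phone", 2), new Item("laptop", 1), new Item("phone", 3));
        System.out.println(groupCountsByType(items)); // {laptop=[1], phone=[2, 3]}
        System.out.println(sumCountsByType(items));   // {laptop=1, phone=5}
    }
}
```

Because the reduce variant only ever holds one merged value per key, it is usually the better fit when the goal is an aggregate rather than the full list of values.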

- 16. Spark actions • Spark actions are the terminal operations that produce results from the transformations • Actions are a way to communicate back from the execution engine to the Spark driver instance

- 17. Spark actions collect itemsRDD.collect() reduce itemsRDD.map(i -> i.getUnitPrice() * i.getCount()). reduce((x, y) -> x + y); count itemsRDD.count() first itemsRDD.first() take itemsRDD.take(4) takeOrdered itemsRDD.takeOrdered(4, comparator) foreach itemsRDD.foreach(System.out::println) saveAsTextFile itemsRDD.saveAsTextFile(path) saveAsObjectFile itemsRDD.saveAsObjectFile(path)
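Spark transformations are lazy: nothing is computed until an action asks for a result. A rough analogy, assuming Java Streams as a stand-in (intermediate operations for transformations, terminal operations for actions), is sketched below; the class and method names are hypothetical and the code does not use Spark.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Stream;

// Analogy only: Java Streams standing in for an RDD pipeline; NOT the Spark API.
final class LazyPipelineSketch {

    // Returns { map calls before the action, map calls after the action, reduced total }.
    static int[] run(List<Integer> prices) {
        AtomicInteger mapCalls = new AtomicInteger();

        // "Transformation": only describes the computation, nothing runs yet.
        Stream<Integer> doubled = prices.stream().map(p -> {
            mapCalls.incrementAndGet();
            return p * 2;
        });
        int callsBeforeAction = mapCalls.get();

        // "Action": the terminal reduce triggers evaluation of the whole pipeline.
        int total = doubled.reduce(0, Integer::sum);

        return new int[] { callsBeforeAction, mapCalls.get(), total };
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run(Arrays.asList(10, 20, 30)))); // [0, 3, 120]
    }
}
```

The first element of the result stays at 0 because the mapping function is not invoked until the terminal operation runs, which mirrors how the driver only receives data once an action such as `collect` or `reduce` is called.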

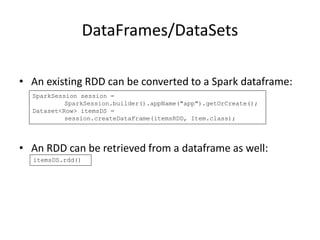

- 18. DataFrames/DataSets • A dataframe can be created using an instance of the org.apache.spark.sql.SparkSession class • The DataFrame/DataSet APIs provide more advanced operations and the capability to run SQL queries on the data: itemsDS.createOrReplaceTempView("items"); session.sql("SELECT * FROM items");

- 19. DataFrames/DataSets • An existing RDD can be converted to a Spark dataframe: SparkSession session = SparkSession.builder().appName("app").getOrCreate(); Dataset<Row> itemsDS = session.createDataFrame(itemsRDD, Item.class); • An RDD can be retrieved from a dataframe as well: itemsDS.rdd()

- 20. Spark data sources • Spark can receive data from a variety of data sources in a variety of ways (batching, real-time streaming) • These datasources might be: – files: Spark supports reading data from a variety of formats (JSON, CSV, Avro, etc.) – relational databases: using JDBC/ODBC driver Spark can extract data from an RDBMS – TCP sockets, messaging systems: using streaming capabilities of Spark data can be read from messaging systems and raw TCP sockets

- 21. Spark data sources • Spark provides support for operations on batch data or real time data • For real time data Spark provides two main APIs: – Spark streaming is an older API working on RDDs – Spark structured streaming is a newer API working on DataFrames/DataSets

- 22. Spark data sources • Spark provides capabilities to plug in additional data sources that are not supported out of the box • For streaming sources you can define your own custom receivers

- 23. Spark streaming • Data is divided into micro-batches exposed as DStreams (discretized streams) • Typical use case is the integration of Spark with messaging systems such as Kafka, RabbitMQ and ActiveMQ • Fault tolerance can be enabled in Spark Streaming, whereby data is stored in HDFS
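How a continuous stream is cut into batches can be sketched in plain Java. This is a simplified model, not the Spark API: it assumes each record carries an arrival time in milliseconds and assigns it to the batch whose interval contains that time. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java model of micro-batching by arrival time; this is NOT the Spark API.
final class MicroBatchSketch {

    // Groups arrival times (ms) into consecutive batches of batchIntervalMs.
    // The key is the batch index, the value holds the records that arrived in that interval.
    static Map<Long, List<Long>> toBatches(List<Long> arrivalTimesMs, long batchIntervalMs) {
        Map<Long, List<Long>> batches = new TreeMap<>();
        for (long t : arrivalTimesMs) {
            long batchIndex = t / batchIntervalMs;
            batches.computeIfAbsent(batchIndex, k -> new ArrayList<>()).add(t);
        }
        return batches;
    }

    public static void main(String[] args) {
        // A 1000 ms interval corresponds to a one-second batch duration.
        System.out.println(toBatches(Arrays.asList(100L, 950L, 1000L, 2500L), 1000L));
        // {0=[100, 950], 1=[1000], 2=[2500]}
    }
}
```

One simplification to keep in mind: the model skips intervals in which nothing arrived, whereas a streaming engine still advances through every interval.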

- 24. Spark streaming • To define a Spark stream you need to create a JavaStreamingContext instance SparkConf conf = new SparkConf().setMaster("local[4]").setAppName("CustomerItems"); JavaStreamingContext jssc = new JavaStreamingContext(conf, Durations.seconds(1));

- 25. Spark streaming • Then a receiver can be created for the data: – from sockets: jssc.socketTextStream("localhost", 7777); – from data directory: jssc.textFileStream("... some data directory ..."); – from RDD streams (for testing purposes): jssc.queueStream(... RDDs queue ... )

- 26. Spark streaming • Then the data pipeline can be built using transformations and actions on the streams • Finally retrieval of data must be triggered from the streaming context: jssc.start(); jssc.awaitTermination();

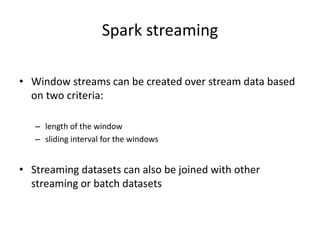

- 27. Spark streaming • Window streams can be created over stream data based on two criteria: – length of the window – sliding interval for the windows • Streaming datasets can also be joined with other streaming or batch datasets
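The interplay of window length and sliding interval can be sketched in plain Java. This is a model rather than the Spark API: both parameters are expressed as a number of batches (in Spark Streaming they must be multiples of the batch interval), and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of window length and sliding interval; this is NOT the Spark API.
final class SlidingWindowSketch {

    // batches: one entry per batch interval, each entry models one micro-batch.
    // windowLength and slideInterval are expressed in number of batches.
    static List<List<String>> windows(List<List<String>> batches, int windowLength, int slideInterval) {
        List<List<String>> result = new ArrayList<>();
        // A window is emitted every slideInterval batches and covers the last windowLength batches.
        for (int end = slideInterval; end <= batches.size(); end += slideInterval) {
            int start = Math.max(0, end - windowLength);
            List<String> window = new ArrayList<>();
            for (List<String> batch : batches.subList(start, end)) {
                window.addAll(batch);
            }
            result.add(window);
        }
        return result;
    }

    public static void main(String[] args) {
        List<List<String>> batches = Arrays.asList(
                Arrays.asList("a"), Arrays.asList("b", "c"),
                Arrays.asList("d"), Arrays.asList("e"));
        // Window of 3 batches, sliding every 2 batches.
        System.out.println(windows(batches, 3, 2)); // [[a, b, c], [b, c, d, e]]
    }
}
```

When the window is longer than the slide, consecutive windows overlap, so records such as `b` and `c` above are processed twice. That is the expected behavior of a sliding window and is worth accounting for in any aggregation built on top of it.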

- 28. Spark structured streaming • Newer streaming API working on DataSets/DataFrames: SparkSession spark = SparkSession.builder().appName("CustomerItems").getOrCreate(); Dataset<Row> lines = spark.readStream().format("socket").option("host", "localhost").option("port", 7777).load(); • A schema can be specified on the streaming data using the .schema(<schema>) method on the read stream