JavaOne 2016: Getting Started with Apache Spark: Use Scala, Java, Python, or All of Them?

- 1. David Taieb STSM - IBM Cloud Data Services Developer advocate [email protected] My boss wants me to work on Apache Spark: should I use Scala, Java or Python or a combination of the three? Java One 2016, San Francisco

- 2. ©2016 IBM Corporation Objectives By the end of this session, you should be able to: – Have Basic knowledge of Apache Spark (at least enough to get you started) – Understand key differences between Java, Scala and Python – Better informed about deciding which language to use – Understand the role of the Notebook in quickly building data solution.

- 3. ©2016 IBM Corporation Let’s start with a story… Disclaimer All characters and events depicted in this Story are entirely fictitious. Any similarity to actual use cases, events or persons is actually intentional

- 4. ©2016 IBM Corporation Meet Ben “the developer” • Hold a master degree in computer science • 10 year experience, 6 years with the company • Full stack Web developer • Languages of choice: Java, Node.js, HTML5/CSS3 • Data: No SQL (Cloudant, Mongo), relational • Protocols: REST, JSON, MQTT • No major experience with Big data Favorite Quote: “The Best Line of Code is the One I Didn't Have to Write!”

- 5. ©2016 IBM Corporation Meet Natasha “the data scientist” • Hold a PHD in data science • 5 year experience, 2 years with the company • Experienced in Python and R • Expert in Machine Learning and Data visualization • Software engineering is not her thing Favorite Quote: “In God we trust. All others bring data” W. Edwards Deming

- 6. ©2016 IBM Corporation Surprise meeting with VP of development We have an urgent need for our marketing department to build an application that can provide real-time sentiment analysis on Twitter data courtesy of http://linkq.com.vn/

- 7. ©2016 IBM Corporation Key Constraints • You only have 6 weeks to build the application • Target consumer is the LOB user: must be easy to use even for non technical people • Web interface: should be accessible from a standard browser (desktop or mobile) • It must scale out of the box: I want you to use Apache Spark

- 8. ©2016 IBM Corporation Some learning to do What exactly is Apache Spark?

- 9. ©2016 IBM Corporation What is Apache spark ? Spark is an open source in-memory computing framework for distributed data processing and iterative analysis on massive data volumes

- 10. ©2016 IBM Corporation Spark Core Libraries general compute engine, handles distributed task dispatching, scheduling and basic I/O functions Spark SQL executes SQL statements performs streaming analytics using micro-batches common machine learning and statistical algorithms distributed graph processing framework Spark Streaming Mllib Machine Learning GraphX Graph Spark Core

- 11. ©2016 IBM Corporation Spark is evolved quickly and has strong traction Spark is one of the most active open source projects Interest over time (Google Trends) Source: https://www.google.com/trends/explore#q=apache%20spark&cmpt=q&tz=http://www.indeed.com/jobanalytics/jobtrends?q=apache+spark&l= Job Trends (Indeed.com)

- 12. ©2016 IBM Corporation Resilient Distributed Datasets (RDDs) • A collection of elements that Spark works on in parallel • May be kept in memory or on disk • Applications can also explicitly tell Spark to cache an RDD, which is great for iterative algorithms • An RDD contains the “raw data”, plus the function to compute it • Fault-tolerance: if any partition of an RDD is lost, it will automatically be recomputed using the transformations that originally created it RDD built from a Java collection RDD built from an external dataset (local FS, HDFS, Hbase,…)

- 13. ©2016 IBM Corporation RDD’s fault tolerance scenario RD D1 Map Transformation Data Node A File Input Split 1 File Input Split 2 File Input Split 3 RD D1 RD D1 RD D2 RD D2 Reduce Transformation RD D3 RD D3 filter Transformation Data Node B

- 14. ©2016 IBM Corporation Spark SQL and Data Frames • Spark’s interface for working with structured and semi-structured data • Ability to load from a verity of data sources (parquet, JSON, Hive) • Supports SQL syntax for both JDBC/ODBC connectors • Special RDD tooling (Join between RDD and an SQL table, Expose UDF’s) • DataFrames • A Data Frame is a distributed collection of data organized into named columns. • It is conceptually equivalent to a table in a relational database • DataFrames can be constructed from a wide array of sources such as: structured data files, tables in Hive, external databases, or existing RDDs • Provide better performance than RDD thanks to Spark SQL’s catalyst query optimizer

- 15. ©2016 IBM Corporation Consuming Spark Spark Application (driver) Master (cluster Manager) Worker Node Worker Node Worker Node Worker Node … Spark Cluster Kernel Master (cluster Manager) Worker Node Worker Node … Spark Cluster Notebook Server Browser Http/WebSockets Kernel Protocol Batch Job (Spark-Submit) Interactive Notebook • RDD Partitioning • Task packaging and dispatching • Worker node scheduling

- 16. ©2016 IBM Corporation IBM’s commitment to Spark • Contribute to the Core • Spark Technology Cluster (STC) • Open source SystemML • Foster Community • Educate 1M+ data scientists and engineers • Sponsor AMPLab IBM Analytics for Apache Spark • IBM Analytics for Apache Spark Managed Service • www.ibm.com/analytics/us/en/technology/cloud-data-services/spark-as-a-service IBM Data Science Experience • DataScience Experience: • datascience.ibm.com IBM Packages for Apache Spark •IBM Packages for Apache Spark: • ibm.biz/spark-kit

- 17. ©2016 IBM Corporation Ben and Natasha start brainstorming • I’ll work on data acquisition from Twitter and enrichment with sentiment analysis scores using Spark Streaming • I know Java very well, but I don’t have time to learn Python. • However, I am willing to learn Scala if that helps improve my productivity • I’ll perform the data exploration and analysis • I know Python and R, but am not familiar enough with Java or Scala • I like pandas and numpy. I’m ok to learn Spark but expect the same level of apis • I need to work iteratively with the data

- 18. ©2016 IBM Corporation More learning What exactly is a Notebook?

- 21. ©2016 IBM Corporation What is a Notebook Text, Annotations Code, Data Visualizations, Widgets, Output • Web based UI for running apache spark console commands • Easy, no install spark accelerator • Best way to start working with spark

- 22. ©2016 IBM Corporation What is Jupyter? with a “y”, clever ah? Browser Kernel Code Output https://www.bluetrack.com/uploads/items_images/kernel-of-corn-stress-balls1_thumb.jpg?r=1 Look! Apache Toree for Spark "Open source, interactive data science and scientific computing" – Formerly IPython – Large, open, growing community and ecosystem Very popular – “~2 million users for IPython” [1] – $6m in funding in 2015 [3] – 200 contributors to notebook subproject alone [4] – 275,000 public notebooks on GitHub [2]

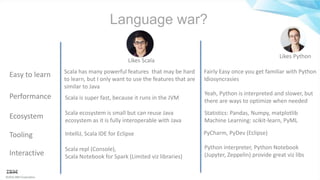

- 23. ©2016 IBM Corporation Language war? Likes Scala Likes Python Easy to learn Scala has many powerful features that may be hard to learn, but I only want to use the features that are similar to Java Fairly Easy once you get familiar with Python Idiosyncrasies Performance Scala is super fast, because it runs in the JVM Yeah, Python is interpreted and slower, but there are ways to optimize when needed Ecosystem Scala ecosystem is small but can reuse Java ecosystem as it is fully interoperable with Java Statistics: Pandas, Numpy, matplotlib Machine Learning: scikit-learn, PyML Tooling IntelliJ, Scala IDE for Eclipse PyCharm, PyDev (Eclipse) Interactive Scala repl (Console), Scala Notebook for Spark (Limited viz libraries) Python interpreter, Python Notebook (Jupyter, Zeppelin) provide great viz libs

- 24. ©2016 IBM Corporation Scala vs Java • Less Verbose public class Circle{ private double radius; private double xCenter; private double yCenter; public Circle(double radius,double xCenter, double yCenter){ this.radius = radius; this.xCenter = xCenter; this.yCenter = yCenter; } public void setRadius(double radius){ this.radius=radius;} public double getRadius(){return radius;} public void setXCenter(double xCenter){ this.xCenter=xCenter;} public double getXCenter(){return xCenter;} public void setYCenter(double yCenter){ this.yCenter=yCenter;} public double getYCenter(){return yCenter;} } Java class Circle( var radius:Double, var xCenter:Double, var yCenter:Double ) Scala Define a Class with a few fields

- 25. ©2016 IBM Corporation Scala vs Java • Less Verbose • Mixes OOP and FP Sort a list of circles by radius size and by center abscissa Collections.sort(circles, new Comparator<Circle>() { public int compare(Circle a, Circle b) { return a.getRadius()-b.getRadius() } }); Collections.sort(circles, new Comparator<Circle>() { public int compare(Circle a, Circle b) { return a.getXCenter()-b.getXCenter(); } }); circles.sortBy( c=>(c.radius,c.xCenter) ) Scala Java 7 and below circles .sort(Comparator.comparing(Circle::getRadius()) .thenComparing(Circle::getXCenter()) Java 8

- 26. ©2016 IBM Corporation Scala vs Java • Less Verbose • Mixes OOP and FP • Concurrency: Actors and Futures class JavaOneActor extends Actor { def receive = { case ”version" => println(”JavaOne 2016") case “location” => println(“San Francisco”) case _ => println(”Sorry, I didn’t get that") } } object JavaOneActorMain extends App { val system = ActorSystem(”JavaOne”) val actor= system.actorOf(Props[JavaOneActor],name=”JavaOne") actor ! ”version" actor ! ”location” actor ! “Is it raining?” }

- 27. ©2016 IBM Corporation Scala vs Java • Less Verbose • Mixes OOP and FP • Actors • Better type system • Parameterized Types • Abstract Types • Compound Types • Singleton Types • Tuples Types • Function Types • Lambdas Types • Self-Recursive Types • Functors • Monads

- 28. ©2016 IBM Corporation Scala vs Java • Less Verbose • Mixes OOP and FP • Actors • Better type system • Runs as fast • Scala compiles into Java Bytecode • Full interoperability with Java • Runs in the JVM • Of course, algorithm and optimizations matters

- 29. ©2016 IBM Corporation Scala vs Java • Less Verbose • Mixes OOP and FP • Actors • Better type system • Runs as fast • Some features can be confusing • SBT looks like black magic • Everything is a function: easy to write cryptic code • Implicits • Currying • Partial functions • Partially applied functions courtesy of http://www.codeodor.com/

- 30. ©2016 IBM Corporation Let’s look at an example Spark Application The world famous Word Count!

- 31. ©2016 IBM Corporation Java JavaRDD<String> textFile = sc.textFile("hdfs://..."); JavaRDD<String> words = textFile.flatMap(new FlatMapFunction<String, String>() { public Iterable<String> call(String s) { return Arrays.asList(s.split(" ")); } }); JavaPairRDD<String, Integer> pairs = words.mapToPair(new PairFunction<String, String, Integer>() { public Tuple2<String, Integer> call(String s) { return new Tuple2<String, Integer>(s, 1); } }); JavaPairRDD<String, Integer> counts = pairs.reduceByKey(new Function2<Integer, Integer, Integer>() { public Integer call(Integer a, Integer b) { return a + b; } }); counts.saveAsTextFile("hdfs://..."); Create RDD from a file on the hadoop cluster New RDD made of all words in the files For each word create a tuple (word,1) Aggregate all the results, using the word as the key Save the results back to the hadoop cluster

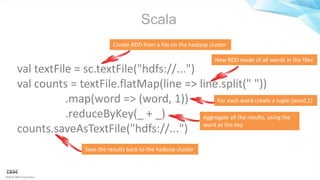

- 32. ©2016 IBM Corporation Scala val textFile = sc.textFile("hdfs://...") val counts = textFile.flatMap(line => line.split(" ")) .map(word => (word, 1)) .reduceByKey(_ + _) counts.saveAsTextFile("hdfs://...") Create RDD from a file on the hadoop cluster New RDD made of all words in the files For each word create a tuple (word,1) Aggregate all the results, using the word as the key Save the results back to the hadoop cluster

- 33. ©2016 IBM Corporation Python text_file = sc.textFile("hdfs://...") counts = text_file.flatMap(lambda line: line.split(" ")) .map(lambda word: (word, 1)) .reduceByKey(lambda a, b: a + b) counts.saveAsTextFile("hdfs://...") Create RDD from a file on the hadoop cluster New RDD made of all words in the files For each word create a tuple (word,1) Aggregate all the results, using the word as the key Save the results back to the hadoop cluster

- 34. ©2016 IBM Corporation OK, let’s agree on the architecture Watson Tone Analyzer Input Stream Enrich data with Emotion Tone Scores Processed data Notebook Agree to disagree on the Language

- 35. ©2016 IBM Corporation Dividing the tasks • Implement a Spark Streaming connector to Twitter • Call Watson Tone Analyzer for each tweets • Return a Spark DataFrame with the tweets enriched with Tone scores • Code written in Scala, delivered as a Jar • Will test in Scala Notebook • Works in a Python Notebook • Load the twitter data with Tone score from a persisted store • Perform the data exploration and analysis: trending hashtags and sentiments • Produce visualizations to LOB Users

- 36. ©2016 IBM Corporation A word on Watson Tone Analyzer • Uses linguistic analysis to detect 3 types of tones: Emotion, Social Tendencies and Language styles • Available as a cloud service on IBM Bluemix Input http://www.ibm.com/watson/developercloud/tone-analyzer.html Results

- 37. ©2016 IBM Corporation How is Ben doing? Spark Streaming to Twitter Get Watson Tone sentiment scores Enrich Tweets with new Scores Make spark Dataframe available ssc = new StreamingContext( sc, Seconds(5) ) ssc.addStreamingListener( new StreamingListener ) val keys = config.getConfig("tweets.key").split(","); val stream = org.apache.spark.streaming.twitter.TwitterUtils.createStream( ssc, None ); val tweets = stream.filter { status => Option(status.getUser).flatMap[String] { u => Option(u.getLang) }.getOrElse("").startsWith("en") && CharMatcher.ASCII.matchesAllOf(status.getText) && ( keys.isEmpty || keys.exists{status.getText.contains(_) }) ... Create a Spark StremingContext with a 5 sec batch window Create a Twitter Stream Filter any tweet that are not in English or that do not match the word filters

- 38. ©2016 IBM Corporation How is Ben doing? Spark Streaming to Twitter Get Watson Tone sentiment scores Enrich Tweets with new Scores Make spark Dataframe available case class DocumentTone( document_tone: Sentiment ) case class Sentiment(tone_categories: Seq[ToneCategory]); … val sentimentResults: String = EntityEncoder[String].toEntity("{"text": " + JSONObject.quote( status.text ) + "}" ).flatMap { entity => val s = broadcastVar.value.get("watson.tone.url").get + "/v3/tone?version=" + broadcastVar.value.get("watson.api.version").get val toneuri: Uri = Uri.fromString( s ).getOrElse( null ) client( Request( method = Method.POST, uri = toneuri, headers = …, body = entity.body)) .flatMap { response => if (response.status.code == 200 ) { response.as[String] } else { println("Error received from Watson Tone Analyzer.”) null } } }.run //Return Sentiment object pickled from response upickle.read[DocumentTone](sentimentResults).document_tone Call the Watson Tone Analyzer Service using a POST Request Pickle the JSON Response and return a DocumentTone Object Define the Sentiment Data Model

- 39. ©2016 IBM Corporation How is Ben doing? Spark Streaming to Twitter Get Watson Tone sentiment scores Enrich Tweets with new Scores Make spark Dataframe available lazy val client = PooledHttp1Client() val rowTweets = tweets.map(status=> { val sentiment = ToneAnalyzer.computeSentiment( client, status, broadcastVar ) var colValues = Array[Any]( status.getUser.getName, //author status.getCreatedAt.toString, //date status.getUser.getLang, //Lang status.getText, //text Option(status.getGeoLocation).map{ _.getLatitude}.getOrElse(0.0), //lat Option(status.getGeoLocation).map{_.getLongitude}.getOrElse(0.0) //long ) var scoreMap = getScoreMap(sentiment) colValues = colValues ++ ToneAnalyzer.sentimentFactors.map { f => round(f) * 100.0} //Return [Row, (sentiment, status)] (Row(colValues.toArray:_*),(sentiment, status)) }) Enrich the Tweets with Sentiment Scores, returns a Row Object

- 40. ©2016 IBM Corporation How is Ben doing? Spark Streaming to Twitter Get Watson Tone sentiment scores Enrich Tweets with new Scores Make spark Dataframe available def createTwitterDataFrames(sc: SparkContext) : (SQLContext, DataFrame) = { if ( workingRDD.count <= 0 ){ println("No data receive. Please start the Twitter stream again to collect data") return null } try{ val df = sqlContext.createDataFrame( workingRDD, schemaTweets ) df.registerTempTable("tweets") println("A new table named tweets with " + df.count() + " records has been correctly created and can be accessed through the SQLContext variable") println("Here's the schema for tweets") df.printSchema() (sqlContext, df) }catch{ case e: Exception => {logError(e.getMessage, e ); return null} } } Transform the RDD of Enriched Tweets to a DataFrame Display the DataFrame Schema

- 41. ©2016 IBM Corporation How’s Natasha doing? Load the Tweets from cluster Compute the distribution of Sentiments Compute top 5 hashtags Compute aggregate Tone scores for each 5 top hashtags Load the Tweets data from a parquet file in Object Storage Register a Temp SQL Table so it can be queried later on

- 42. ©2016 IBM Corporation How’s Natasha doing? Load the Tweets from cluster Compute the distribution of Sentiments Compute top 5 hashtags Compute aggregate Tone scores for each 5 top hashtags Build an array that contains the number of tweets where score is greater than 60%

- 43. ©2016 IBM Corporation How’s Natasha doing? Load the Tweets from cluster Compute the distribution of Sentiments Compute top 5 hashtags Compute aggregate Tone scores for each 5 top hashtags Creates a flat Map of all the words in the tweets and filter to keep only the hashtags Map then Reduce to count the occurrences of each hashtag

- 44. ©2016 IBM Corporation How’s Natasha doing? Load the Tweets from cluster Compute the distribution of Sentiments Compute top 5 hashtags Compute aggregate Tone scores for each 5 top hashtags Create RDD from tweets dataframe Keep only the entries in the top 10 Index by Tag-Tone

- 45. ©2016 IBM Corporation How’s Natasha doing? Load the Tweets from cluster Compute the distribution of Sentiments Compute top 5 hashtags Compute aggregate Tone scores for each 5 top hashtags Count the occurrences for each score Count the tone average for each tag and count Final Reduce to get the data the way we want it for display

- 46. ©2016 IBM Corporation How’s Natasha doing? Load the Tweets from cluster Compute the distribution of Sentiments Compute top 5 hashtags Compute aggregate Tone scores for each 5 top hashtags

- 47. ©2016 IBM Corporation How good is Python at handling data? Given the following quarterly sales series Year Quarter Revenue Return a list that contains the revenue for a specific quarter, 0 if not defined. e.g.: 1st Quarter: [8977551.03 4th Quarter: [9179464.4, 6717172.01, 2694937.3, 0] Let’s look at a simple task

- 48. ©2016 IBM Corporation Let’s take a look at the application

- 49. ©2016 IBM Corporation Meeting back with the VP This is great, but why do I need to run 2 notebooks – one in Scala and one in Python? Please Fix it!

- 50. ©2016 IBM Corporation Can’t we both get along? • It would be great if we could run Scala code from a Python Notebook • And be able to transfer variables between the 2 languages Open Source Pixiedust Python library let’s us do just that https://github.com/ibm-cds-labs/pixiedust

- 51. ©2016 IBM Corporation New Version with Scala mix-in

- 52. ©2016 IBM Corporation What about the LOB User? courtesy: http://www.flickr.com C-Suite executive need to be able to run the application from Notebook, select filters and see real-time charts without writing code!

- 53. ©2016 IBM Corporation Embedded application with Pixiedust plugin

- 54. ©2016 IBM Corporation Embedded application with Pixiedust plugin

- 55. ©2016 IBM Corporation Conclusion • Programming languages are just tools, you need to choose the right one for you (the programmer) and the task: • Scala is better suited for engineering work that involves large, reusable components • Python is the language of choice for data scientists • If you are starting with Spark and just want to play: • Start local: no need for a big cluster yet, get installed and started in minutes • Use the language you are most familiar with, or is easiest to learn • Use Notebooks to learn the APIs

- 56. ©2016 IBM Corporation Resources • http://programming-scala.org • http://python.org • http://spark.apache.org • www.ibm.com/analytics/us/en/technology/cloud-data-services/spark-as-a-service • http://datascience.ibm.com • http://ibm.biz/spark-kit • www.ibm.com/watson/developercloud/tone-analyzer.html • developer.ibm.com/clouddataservices/2016/01/15/real-time-sentiment-analysis-of- twitter-hashtags-with-spark/ • developer.ibm.com/clouddataservices/start-developing-with-spark-and-notebooks/ • www.ibm.com/analytics/us/en/technology/spark/ • github.com/ibm-cds-labs/spark.samples • github.com/ibm-cds-labs/pixiedust

- 57. ©2016 IBM Corporation Questions! David Taieb [email protected] @DTAIEB55 courtesy: http://omogemura.com/

Editor's Notes

- #12: One thing to note here is how recent all of this attention is – keep in mind that Spark was only founded in 2009 and open-sourced in 2010

- #14: RDD’s track lineage info to rebuild lost data through DAG’s (directed acyclic graph) If “Data node A” fails, the spark engine has all the information which contains what input splits or RDD’s were transformed to compute the RDD part of node A And if taking into account that catastrophic failures like that are a rare occasion , we can see why this approach can enhance performance The only shortcoming of this approach is that there will be a lot of re-computation done when such a failure eventually occurs

![©2016 IBM Corporation

What is Jupyter?

with a “y”, clever ah?

Browser

Kernel

Code Output

https://www.bluetrack.com/uploads/items_images/kernel-of-corn-stress-balls1_thumb.jpg?r=1

Look! Apache Toree for Spark

"Open source, interactive data science and

scientific computing"

– Formerly IPython

– Large, open, growing community and ecosystem

Very popular

– “~2 million users for IPython” [1]

– $6m in funding in 2015 [3]

– 200 contributors to notebook subproject alone [4]

– 275,000 public notebooks on GitHub [2]](https://image.slidesharecdn.com/javaonesparkscalavspython-160920170010/85/JavaOne-2016-Getting-Started-with-Apache-Spark-Use-Scala-Java-Python-or-All-of-Them-22-320.jpg)

![©2016 IBM Corporation

Scala vs Java

• Less Verbose

• Mixes OOP and FP

• Concurrency: Actors and Futures

class JavaOneActor extends Actor {

def receive = {

case ”version" => println(”JavaOne 2016")

case “location” => println(“San Francisco”)

case _ => println(”Sorry, I didn’t get that")

}

}

object JavaOneActorMain extends App {

val system = ActorSystem(”JavaOne”)

val actor= system.actorOf(Props[JavaOneActor],name=”JavaOne")

actor ! ”version"

actor ! ”location”

actor ! “Is it raining?”

}](https://image.slidesharecdn.com/javaonesparkscalavspython-160920170010/85/JavaOne-2016-Getting-Started-with-Apache-Spark-Use-Scala-Java-Python-or-All-of-Them-26-320.jpg)

![©2016 IBM Corporation

How is Ben doing?

Spark Streaming

to Twitter

Get Watson Tone

sentiment scores

Enrich Tweets

with new Scores

Make spark Dataframe

available

ssc = new StreamingContext( sc, Seconds(5) )

ssc.addStreamingListener( new StreamingListener )

val keys = config.getConfig("tweets.key").split(",");

val stream = org.apache.spark.streaming.twitter.TwitterUtils.createStream( ssc, None );

val tweets = stream.filter { status =>

Option(status.getUser).flatMap[String] {

u => Option(u.getLang)

}.getOrElse("").startsWith("en") &&

CharMatcher.ASCII.matchesAllOf(status.getText) &&

( keys.isEmpty || keys.exists{status.getText.contains(_)

})

...

Create a Spark StremingContext

with a 5 sec batch window

Create a Twitter Stream

Filter any tweet that are not in English

or that do not match the word filters](https://image.slidesharecdn.com/javaonesparkscalavspython-160920170010/85/JavaOne-2016-Getting-Started-with-Apache-Spark-Use-Scala-Java-Python-or-All-of-Them-37-320.jpg)

![©2016 IBM Corporation

How is Ben doing?

Spark Streaming

to Twitter

Get Watson Tone

sentiment scores

Enrich Tweets

with new Scores

Make spark Dataframe

available

case class DocumentTone( document_tone: Sentiment )

case class Sentiment(tone_categories: Seq[ToneCategory]);

…

val sentimentResults: String =

EntityEncoder[String].toEntity("{"text": " + JSONObject.quote( status.text ) + "}" ).flatMap {

entity =>

val s = broadcastVar.value.get("watson.tone.url").get + "/v3/tone?version=" + broadcastVar.value.get("watson.api.version").get

val toneuri: Uri = Uri.fromString( s ).getOrElse( null )

client( Request( method = Method.POST, uri = toneuri, headers = …, body = entity.body))

.flatMap { response =>

if (response.status.code == 200 ) {

response.as[String]

} else {

println("Error received from Watson Tone Analyzer.”)

null

}

}

}.run

//Return Sentiment object pickled from response

upickle.read[DocumentTone](sentimentResults).document_tone

Call the Watson Tone Analyzer

Service using a POST Request

Pickle the JSON Response and

return a DocumentTone Object

Define the Sentiment Data Model](https://image.slidesharecdn.com/javaonesparkscalavspython-160920170010/85/JavaOne-2016-Getting-Started-with-Apache-Spark-Use-Scala-Java-Python-or-All-of-Them-38-320.jpg)

.map{ _.getLatitude}.getOrElse(0.0), //lat

Option(status.getGeoLocation).map{_.getLongitude}.getOrElse(0.0) //long

)

var scoreMap = getScoreMap(sentiment)

colValues = colValues ++ ToneAnalyzer.sentimentFactors.map { f => round(f) * 100.0}

//Return [Row, (sentiment, status)]

(Row(colValues.toArray:_*),(sentiment, status))

})

Enrich the Tweets with Sentiment

Scores, returns a Row Object](https://image.slidesharecdn.com/javaonesparkscalavspython-160920170010/85/JavaOne-2016-Getting-Started-with-Apache-Spark-Use-Scala-Java-Python-or-All-of-Them-39-320.jpg)

![©2016 IBM Corporation

How good is Python at handling data?

Given the following quarterly sales series

Year Quarter Revenue

Return a list that contains the revenue for a specific quarter, 0 if not defined. e.g.: 1st Quarter: [8977551.03

4th Quarter: [9179464.4, 6717172.01, 2694937.3, 0]

Let’s look at a simple task](https://image.slidesharecdn.com/javaonesparkscalavspython-160920170010/85/JavaOne-2016-Getting-Started-with-Apache-Spark-Use-Scala-Java-Python-or-All-of-Them-47-320.jpg)