Making the big data ecosystem work together with Python & Apache Arrow, Apache Spark, Apache Beam, and Dask

- 1. Making the Big Data ecosystem work together with Python using Apache Arrow, Spark, Beam and Dask @holdenkarau

- 2. Holden: ● My name is Holden Karau ● Preferred pronouns are she/her ● Developer Advocate at Google ● Apache Spark PMC, Beam contributor ● Previously IBM, Alpine, Databricks, Google, Foursquare & Amazon ● Co-author of Learning Spark & High Performance Spark ● Twitter: @holdenkarau ● SlideShare: http://www.slideshare.net/hkarau ● Code review livestreams: https://www.twitch.tv/holdenkarau / https://www.youtube.com/user/holdenkarau ● Spark talk videos: http://bit.ly/holdenSparkVideos

- 4. Who I think you wonderful humans are? ● Nice enough people ● Possibly know some Python (or excited to learn!) ● Maybe interested in distributed systems, but maybe not Lori Erickson

- 5. What will be covered? ● How big data tools currently play together ● A look at the big data ecosystem outside the JVM ○ Dask ○ Apache Spark, Flink, Kafka, and Beam Outside the JVM ● A look at how tools inside of the big data ecosystem currently play together ● A more detailed look at the current state of PySpark ● Why it is getting better, but also how much work is left ● Less than subtle attempts to get you to buy our new book ● And subscribe to my youtube / twitch channels :p ● Pictures of cats & stuffed animals ● tl;dr - We’ve* made some bad** choices historically, and projects like Arrow & friends can save us from some of these (yay!)

- 6. How do big data tools play together? ● Sometimes sharing a cluster manager/resources (like YARN or K8s) ● Often distributed files. Lots of distributed files. ○ Sometimes local files (e.g. early versions of TensorFlowOnSpark) ○ If you’re lucky, partitioned files, often in Parquet. If you’re unlucky, bzip2 XML, JSON and CSVs ● Sometimes Kafka and then files. ● Special tools to copy data from format 1 to format 2 (Sqoop etc.) ○ This can feel like 80% of big data. ETL every day. ● Pipeline coordination tools*, like Luigi, Apache Airflow (incubating), and for K8s Argo. ○ Historically cron + shell scripts (meeps) *Often written in Python! Hormiguita Viajera mir

- 7. Why is this kind of sad? ● Hard to test ● Writing to disk is slow. Writing to distributed disk is double slow. ● Kafka is comparatively fast but still involves copying data around the network ● Strange race conditions with storage systems which don’t support atomic operations ○ e.g. Spark assumes it can move a file and have it show up in the new directory in one go, but sometimes we get partial results from certain storage engines. Ask your vendor for details. Lottie

- 8. Part of what led to the success of Spark ● Integrated different tools which traditionally required different systems ○ Mahout, Hive, etc. ● e.g. can use the same system to do ML and SQL [Diagram: the Apache Spark stack: SQL, DataFrames & Datasets; Structured Streaming; Spark ML; MLlib; Streaming; Bagel & GraphX; GraphFrames; APIs in Scala, Java, Python & R] Paul Hudson

- 9. What’s the state of non-JVM big data? Most of the tools are built in the JVM, so how do we play together from Python? ● Pickling, strings, JSON, XML, oh my! All sent over ● Unix pipes, sockets, files, and mmapped files (sometimes in the same program) What about if we don’t want to copy the data all the time? ● Or standalone “pure”* re-implementations of everything ○ Reasonable option for things like Kafka where you would have the I/O regardless. ○ Also cool projects like Dask (pure Python), but hard to talk to the existing ecosystem David Brown

- 10. PySpark: ● The Python interface to Spark ● Fairly mature, integrates well-ish into the ecosystem, less a Pythonrific API ● Has some serious performance hurdles from the design ● Same general technique used as the basis for the other non-JVM implementations in Spark ○ C# ○ R ○ Julia ○ JavaScript - surprisingly different

- 11. Yes, we have wordcount! :p lines = sc.textFile(src) words = lines.flatMap(lambda x: x.split(" ")) word_count = (words.map(lambda x: (x, 1)) .reduceByKey(lambda x, y: x+y)) word_count.saveAsTextFile(output) ● No data is read or processed until after the saveAsTextFile line ● saveAsTextFile is an “action” which forces Spark to evaluate the RDD ● The flatMap and map steps are still combined and executed in one Python executor Trish Hamme
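
The lazy-until-an-action behaviour can be mimicked without a cluster. The following is a plain-Python sketch (no Spark involved) that uses generators as a stand-in for RDD transformations; `read_lines` and `read_log` are hypothetical helpers added only to show when data is actually pulled.

```python
from collections import Counter
from itertools import chain

read_log = []  # records when a line is actually pulled from the source


def read_lines(lines):
    """Stand-in for sc.textFile: yields lines and logs each read."""
    for line in lines:
        read_log.append(line)
        yield line


# "Transformations": generators are lazy, so building them reads nothing.
lines = read_lines(["a b a", "b c"])
words = chain.from_iterable(line.split(" ") for line in lines)
pairs = ((word, 1) for word in words)
reads_before_action = len(read_log)

# "Action": consuming the pipeline finally pulls data through every step.
word_count = Counter()
for word, n in pairs:
    word_count[word] += n
```

As with the RDD version, the split and the pairing run fused in a single pass over the input; nothing is materialized between the steps.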

- 12. A quick detour into PySpark’s internals (Py4J + pickling + JSON)

- 13. Spark in Scala, how does PySpark work? ● Py4J + pickling + JSON and magic ○ Py4J in the driver ○ Pipes to start the Python process from Java exec ○ cloudPickle to serialize data between JVM and Python executors (transmitted via sockets) ○ JSON for DataFrame schema ● Data from Spark worker serialized and piped to Python worker --> then piped back to JVM ○ Multiple iterator-to-iterator transformations are still pipelined :) ○ So serialization happens only once per stage ● Spark SQL (and DataFrames) avoid some of this kristin klein
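
To make the "serialized and piped to the Python worker, then piped back" round trip concrete, here is a minimal sketch using only the standard library. The length-prefixed framing and the function names are assumptions for illustration, not PySpark's actual wire format, and in-memory buffers stand in for the socket or pipe.

```python
import io
import pickle
import struct


def write_batch(stream, rows):
    """Pickle a batch of rows and write it with a 4-byte length prefix."""
    payload = pickle.dumps(rows, protocol=2)
    stream.write(struct.pack(">i", len(payload)))
    stream.write(payload)


def read_batch(stream):
    """Read one length-prefixed batch and unpickle it."""
    (size,) = struct.unpack(">i", stream.read(4))
    return pickle.loads(stream.read(size))


def python_worker(inbound, outbound, func):
    """Deserialize a batch, apply func to every row, serialize the result."""
    rows = read_batch(inbound)
    write_batch(outbound, [func(row) for row in rows])


# In-memory buffers stand in for the socket/pipe between JVM and Python.
to_python = io.BytesIO()
write_batch(to_python, ["hi boo", "boo"])  # serialization #1 (JVM side)
to_python.seek(0)

to_jvm = io.BytesIO()
python_worker(to_python, to_jvm, len)      # deserialize + serialization #2
to_jvm.seek(0)
result = read_batch(to_jvm)                # final deserialize on the JVM side
```

Every row crosses the boundary twice, which is the cost the following slides call double serialization.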

- 14. So what does that look like? [Diagram: Driver connected via py4j; Worker 1 … Worker K each connected via a pipe]

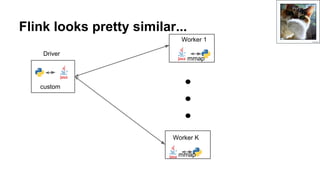

- 15. Flink looks pretty similar... [Diagram: Driver connected via a custom link; Worker 1 … Worker K each connected via mmap]

- 16. So how does that impact PySpark? ● Double serialization cost makes everything more expensive ● Python worker startup takes a bit of extra time ● Python memory isn’t controlled by the JVM - easy to go over container limits if deploying on YARN or similar ● Error messages make ~0 sense ● Spark Features aren’t automatically exposed, but exposing them is normally simple

- 17. Our saviour from serialization: DataFrames ● For the most part keeps data in the JVM ○ Notable exception is UDFs written in Python ● Takes our Python calls and turns them into a query plan ● If we need more than the native operations in Spark’s DataFrames we end up in a pickle ;) ● Be wary of Distributed Systems bringing claims of usability…. Andy Blackledge

- 18. So what are Spark DataFrames? ● More than SQL tables ● Not Pandas or R DataFrames ● Semi-structured (have schema information) ● Tabular ● Work on expressions as well as lambdas ○ e.g. df.filter(df["happy"] == True) instead of rdd.filter(lambda x: x.happy == True) ● Not a subset of Spark “Datasets” - since Dataset API isn’t exposed in Python yet :( Quinn Dombrowski
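
Why expressions matter more than lambdas: an expression is data the engine can inspect and translate into a query plan, while a lambda is an opaque function it can only call row by row in Python. The sketch below is a toy illustration of that difference; `Column` and `Expr` are hypothetical classes, not Spark's.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Expr:
    """A comparison recorded as data, so an engine could inspect or translate it."""
    op: str
    column: str
    value: object

    def describe(self):
        return f"{self.column} {self.op} {self.value!r}"


class Column:
    """Hypothetical stand-in for a DataFrame column reference."""

    def __init__(self, name):
        self.name = name

    def __eq__(self, other):
        # Record the comparison instead of performing it.
        return Expr("==", self.name, other)


def opaque_filter(row):
    # A lambda-style predicate: the engine can only call it, one row at a time.
    return row["happy"] == True


expr = Column("happy") == True
plan = expr.describe()
```

Here `plan` is a description that could be shipped to the JVM, whereas `opaque_filter` has to run where the Python interpreter is.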

- 19. Word count w/DataFrames df = sqlCtx.read.load(src) # Returns an RDD words = df.select("text").rdd.flatMap(lambda x: x.text.split(" ")) words_df = words.map( lambda x: Row(word=x, cnt=1)).toDF() word_count = words_df.groupBy("word").sum() word_count.write.format("parquet").save("wc.parquet") Still have the double serialization here :( jeffreyw

- 20. Difference computing average: [benchmark chart]* *Vendor benchmark. Trust but verify. Andrew Skudder

- 21. *For a small price of your fun libraries. Bad idea.

- 22. That was a bad idea, buuut….. ● Work going on in Scala land to translate simple Scala into SQL expressions - need the Dataset API ○ Maybe we can try similar approaches with Python? ● POC use Jython for simple UDFs (e.g. 2.7 compat & no native libraries) - SPARK-15369 ○ Early benchmarking w/word count 5% slower than native Scala UDF, close to 2x faster than regular Python ● Willing to share your Python UDFs for benchmarking? - http://bit.ly/pySparkUDF *The future may or may not have better performance than today. But bun-bun the bunny has some lettuce so it’s ok!

- 24. Faster interchange ● It’s in Spark 2.3 - but there are some rough edges (and bugs) ○ Especially with aggregates, dates, and complex data types ○ You can try it out and help us ● Unifying our cross-language experience ○ And not just “normal” languages, CUDA counts yo ○ Although inside of Spark we only use it for Python today - expanding to more places “soon” Tambako The Jaguar
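
The faster interchange here is Apache Arrow's columnar format (named on the next slides). The core idea can be sketched with the standard library alone: instead of pickling values one at a time, ship one contiguous typed buffer that the receiver reinterprets in place. This is only an illustration of the idea; real Arrow adds schemas, validity bitmaps, nested types and more.

```python
import pickle
from array import array

values = [1.0, 2.5, 4.0]

# Row-at-a-time: every value is pickled and unpickled individually.
row_payloads = [pickle.dumps(v) for v in values]
from_rows = [pickle.loads(p) for p in row_payloads]

# Columnar: the whole column is one contiguous buffer of 8-byte doubles.
column_buffer = array("d", values).tobytes()

# The receiver reinterprets the bytes in place; no per-value decoding step.
view = memoryview(column_buffer).cast("d")
from_column = view.tolist()
total = sum(view)
```

Both paths recover the same numbers, but the columnar one does it with a single buffer and no per-element serialization work.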

- 25. *Arrow: likely the future. I really hope so. Spark 2.3 and beyond! Andrew Skudder

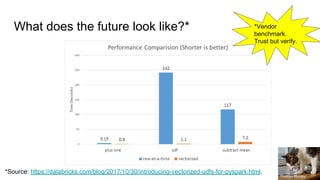

- 26. What does the future look like?* *Source: https://databricks.com/blog/2017/10/30/introducing-vectorized-udfs-for-pyspark.html. *Vendor benchmark. Trust but verify. Thomas Rumley

- 27. Arrow (a poorly drawn big data view) Logos trademarks of their respective projects Juha Kettunen *ish

- 28. What does the future look like - in code @pandas_udf("integer", PandasUDFType.SCALAR) def add_one(x): return x + 1 David McKelvey

- 29. What does the future look like - in code @pandas_udf("id long, v double", PandasUDFType.GROUPED_MAP) def normalize(pdf): v = pdf.v return pdf.assign(v=(v - v.mean()) / v.std()) Erik Huiberts
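
For readers without a Spark cluster handy, this is roughly what the grouped-map `normalize` computes, written as a plain-Python sketch with the `statistics` module instead of pandas. The helper name and the `(id, v)` tuple layout are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean, stdev


def normalize_groups(rows):
    """rows: (id, v) pairs. Returns (id, normalized v) pairs, one group at a time."""
    groups = defaultdict(list)
    for key, v in rows:
        groups[key].append(v)

    out = []
    for key, vs in groups.items():
        # stdev is the sample standard deviation, like pandas' default std().
        # It needs at least two values per group.
        mu, sigma = mean(vs), stdev(vs)
        out.extend((key, (v - mu) / sigma) for v in vs)
    return out


result = normalize_groups([(1, 1.0), (1, 3.0), (2, 10.0), (2, 20.0)])
```

Each group is normalized independently, which is why the pandas UDF version receives one pandas DataFrame per group.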

- 30. Hadoop “streaming” (Python/R) ● Unix pipes! ● Involves a data copy, formats get sad ● But the overhead of a Map/Reduce task is pretty high anyways... Lisa Larsson

- 31. Kafka: re-implement all the things ● Multiple options for connecting to Kafka from outside of the JVM (yay!) ● They implement the protocol to talk to Kafka (yay!) ● This involves duplicated client work, and sometimes the clients can be slow (solution, FFI bindings to C instead of Java) ● Buuuut -- we can’t access all of the cool Kafka business (like Kafka Streams) and features depend on client libraries implementing them (easy to slip below parity) Smokey Combs

- 32. Dask: a new beginning? ● Pure* Python implementation ● Provides real enough DataFrame interface for distributed data ○ Much more like a pandas DataFrame than Spark’s DataFrames ● Also your standard-ish distributed collections ● Multiple backends ● Primary challenge: interacting with the rest of the big data ecosystem ○ Arrow & friends make this better, but it’s still a bit rough ● There is a proof-of-concept to bootstrap a Dask cluster on Spark ● See https://dask.pydata.org/en/latest/ & http://dask.pydata.org/en/latest/spark.html Lisa Zins

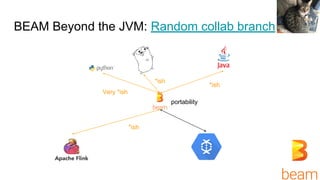

- 33. BEAM Beyond the JVM ● In the current release this only works inside of Google, but there is a branch where this is (starting to) work ● tl;dr : uses gRPC / protobuf & Docker ○ Docker is cool, we use Docker, therefore we are cool, right? ● But exciting new plans to unify the runners and ease the support of different languages (called SDKs) ○ See https://beam.apache.org/contribute/portability/ ● If this is exciting, you can come join me on making BEAM work in Python 3 ○ Yes we still don’t have that :( ○ But we're getting closer!

- 34. BEAM Beyond the JVM: Random collab branch *ish *ish Very *ish Nick portability *ish

- 35. Let's count some words! (1/2) func CountWords(s beam.Scope, lines beam.PCollection) beam.PCollection { s = s.Scope("CountWords") // Convert lines of text into individual words. col := beam.ParDo(s, extractFn, lines) // Count the number of times each word occurs. return stats.Count(s, col) } Look, it generalizes to other non-JVM languages. No escaping Word Count :P Ada Doglace

- 36. Let's count some words! (2/2) p := beam.NewPipeline() s := p.Root() lines := textio.Read(s, *input) counted := CountWords(s, lines) formatted := beam.ParDo(s, formatFn, counted) textio.Write(s, *output, formatted) Ada Doglace

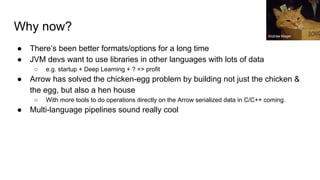

- 37. Why now? ● There have been better formats/options for a long time ● JVM devs want to use libraries in other languages with lots of data ○ e.g. startup + Deep Learning + ? => profit ● Arrow has solved the chicken-egg problem by building not just the chicken & the egg, but also a hen house ○ With more tools to do operations directly on the Arrow serialized data in C/C++ coming. ● Multi-language pipelines sound really cool Andrew Mager

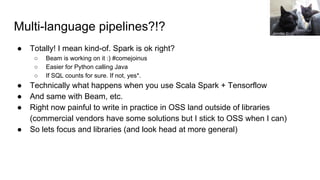

- 38. Multi-language pipelines?!? ● Totally! I mean kind-of. Spark is ok right? ○ Beam is working on it :) #comejoinus ○ Easier for Python calling Java ○ If SQL counts for sure. If not, yes*. ● Technically what happens when you use Scala Spark + TensorFlow ● And same with Beam, etc. ● Right now painful to write in practice in OSS land outside of libraries (commercial vendors have some solutions but I stick to OSS when I can) ● So let’s focus on libraries (and look ahead at the more general case) Jennifer C.

- 39. What is/why Sparkling ML ● A place for useful Spark ML pipeline stages to live ○ Including both feature transformers and estimators ● The why: Spark ML can’t keep up with every new algorithm ● Lots of cool ML on Spark tools exist, but many don’t play nice with Spark ML or together. ● We make it easier to expose Python transformers into Scala land and vice versa. ● Our repo is at: https://github.com/sparklingpandas/sparklingml

- 40. Using the JVM from non-JVM (e.g. Py) ● non-JVM using JVM is a common pattern already done inside of Spark ● If you have your own JVM code though you need to use some _ hacks ● If UDFs work it looks a bit nicer sc._jvm.com.your.awesome.class.then.func(magic) Sam Thompson

- 41. What do we need for the other way? ● A class for our Java code to call with parameters & request functions ● Code to take the Python UDFs and construct/return the underlying Java UDFs ● A main function to start up the Py4J gateway & Spark context to serialize our functions in the way that is expected ● Pretty much it’s just boilerplate but you can take a look if you want. Jennifer C.

- 42. So what goes in startup.py? class PythonRegistrationProvider(object): class Java: package = "com.sparklingpandas.sparklingml.util.python" className = "PythonRegisterationProvider" implements = [package + "." + className] zenera

- 43. So what goes in startup.py? def registerFunction(self, ssc, jsession, function_name, params): setup_spark_context_if_needed() if function_name in functions_info: function_info = functions_info[function_name] evaledParams = ast.literal_eval(params) func = function_info.func(*evaledParams) udf = UserDefinedFunction(func, ret_type, make_registration_name()) return udf._judf else: print("Could not find function") Jennifer C.
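
The lookup-then-`ast.literal_eval` pattern in `registerFunction` can be tried in isolation. The sketch below keeps only that part; the registry contents and the `build_function` helper are hypothetical, and it deliberately leaves out the Spark and Py4J pieces.

```python
import ast

# Hypothetical registry: name -> factory that builds the actual function.
functions_info = {
    "strlen_plus": lambda offset: (lambda s: len(s) + offset),
    "repeat": lambda times, sep: (lambda s: sep.join([s] * times)),
}


def build_function(function_name, params):
    """Look up a factory by name and call it with literal-evaluated params."""
    if function_name not in functions_info:
        raise KeyError("Could not find function: " + function_name)
    # literal_eval only accepts Python literals, so a string such as
    # "__import__('os')" is rejected instead of executed.
    evaled_params = ast.literal_eval(params)
    return functions_info[function_name](*evaled_params)


strlen_plus_one = build_function("strlen_plus", "(1,)")
repeat_twice = build_function("repeat", "(2, '-')")
```

Passing parameters as a literal string keeps the JVM side simple (it only has to send text) while avoiding a full `eval` on the Python side.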

- 44. What’s the boilerplate in Java? ● Call Python ● An interface (trait) representing the Python entry point ● Wrapping the UDFs in Spark ML stages (optional buuut nice?) ● Also kind of boring (especially for y’all I imagine), and it’s in a few files if you want to look. Kurt Bauschardt

- 45. Enough boilerplate: counting words! With Spacy, so you know more than English* def inner(inputString): nlp = SpacyMagic.get(lang) def spacyTokenToDict(token): """Convert the input token into a dictionary""" return dict(map(lookup_field_or_none, fields)) return list(map(spacyTokenToDict, list(nlp(inputString)))) test_t51

- 46. And from the JVM: val transformer = new SpacyTokenizePython() transformer.setLang("en") val input = spark.createDataset( List(InputData("hi boo"), InputData("boo"))) transformer.setInputCol("input") transformer.setOutputCol("output") val result = transformer.transform(input).collect() Alexy Khrabrov

- 47. Ok but now it’s kind of slow…. ● Well yeah ● Think back to that architecture diagram ● It’s not like a fast design ● We could try Jython but what about using Arrow? ○ Turns out Jython wouldn’t work anyways for #reasons zenera

- 48. Wordcount with PyArrow+PySpark: With Spacy now! Non-English language support! def inner(inputSeries): """Tokenize the inputString using spacy for the provided language.""" nlp = SpacyMagic.get(lang) def tokenizeElem(elem): return list(map(lambda token: token.text, list(nlp(unicode(elem))))) return inputSeries.apply(tokenizeElem) Jennifer C.

- 49. On its own this would look like: @pandas_udf(returnType=ArrayType(StringType())) def inner(inputSeries): """Tokenize the inputString using spacy for the provided language.""" nlp = SpacyMagic.get(lang) def tokenizeElem(elem): return list(map(lambda token: token.text, list(nlp(unicode(elem))))) return inputSeries.apply(tokenizeElem) koi ko
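
One reason the batch-at-a-time shape helps: the tokenizer lookup happens once per batch rather than once per element, and an expensive model only needs loading once per worker process. The sketch below shows that pattern with a whitespace splitter standing in for a spaCy model; `TokenizerCache` is a hypothetical stand-in and is not claimed to match how `SpacyMagic` is implemented.

```python
class TokenizerCache:
    """Hypothetical stand-in for SpacyMagic: one tokenizer per language per process."""

    _tokenizers = {}
    load_count = 0

    @classmethod
    def get(cls, lang):
        if lang not in cls._tokenizers:
            cls.load_count += 1                # the expensive load would happen here
            cls._tokenizers[lang] = str.split  # whitespace split stands in for a model
        return cls._tokenizers[lang]


def tokenize_batch(batch, lang="en"):
    """Tokenize a whole batch, looking the tokenizer up once."""
    tokenizer = TokenizerCache.get(lang)
    return [tokenizer(elem) for elem in batch]


first = tokenize_batch(["hi boo", "boo"])
second = tokenize_batch(["more boo"])
```

The second batch reuses the cached tokenizer, so `load_count` stays at one for the process.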

- 50. References ● Sparkling ML - https://github.com/sparklingpandas/sparklingml ● Apache Arrow - https://arrow.apache.org/ ● Brian (IBM) on initial Spark + Arrow - https://arrow.apache.org/blog/2017/07/26/spark-arrow/ ● Li Jin (Two Sigma) - https://databricks.com/blog/2017/10/30/introducing-vectorized-udfs-for-pyspark.html ● Bill Maimone - https://blogs.nvidia.com/blog/2017/06/27/gpu-computation-visualization/ ● I’ll have a blog post on this on my Medium & O’Reilly Ideas “soon” (maybe 2~3 weeks, want to make it work on Google’s Dataproc first) :) Javier Saldivar

- 51. ● Learning Spark ● Fast Data Processing with Spark (Out of Date) ● Fast Data Processing with Spark (2nd edition) ● Advanced Analytics with Spark ● Spark in Action ● High Performance Spark ● Learning PySpark

- 52. High Performance Spark! You can buy it today! On the internet! Only one chapter on non-JVM stuff, I’m sorry. Cats love it* *Or at least the box it comes in. If buying for a cat, get print rather than e-book.

- 53. And some upcoming talks: ● April ○ Voxxed Days Melbourne (where I’m flying after this) ● May ○ QCon São Paulo - Validating ML Pipelines ○ PyCon US (Cleveland) - Debugging PySpark ○ Strata London - Spark Auto Tuning ○ JOnTheBeach (Spain) - General Purpose Big Data Systems are eating the world ● June ○ Spark Summit SF (Accelerating Tensorflow & Accelerating Python + Dependencies) ○ Scala Days NYC

- 54. k thnx bye :) If you care about Spark testing and don’t hate surveys: http://bit.ly/holdenTestingSpark I need to give a testing talk in a few months, help a “friend” out. Will tweet results “eventually” @holdenkarau Do you want more realistic benchmarks? Share your UDFs! http://bit.ly/pySparkUDF It’s performance review season, so help a friend out and fill out this survey with your talk feedback http://bit.ly/holdenTalkFeedback

- 55. Beyond wordcount: dependencies? ● Your machines probably already have pandas ○ But maybe an old version ● But they might not have “special_business_logic” ○ Very special business logic, no one wants to change Fortran code*. ● Option 1: Talk to your vendor** ● Option 2: Try some sketchy open source software from a hack day ● We’re going to focus on option 2! *Because it’s perfect, it is Fortran after all. ** I don’t like this option because the vendor I work for doesn’t have an answer.

- 56. coffee_boat to the rescue* # You can tell it's alpha 'cause we're installing from GitHub !pip install --upgrade git+https://github.com/nteract/coffee_boat.git # Use the coffee boat from coffee_boat import Captain captain = Captain(accept_conda_license=True) captain.add_pip_packages("pyarrow", "edtf") captain.launch_ship() sc = SparkContext(master="yarn") # You can now use pyarrow & edtf captain.add_pip_packages("yourmagic") # You can now use your magic in transformations!

- 57. Bonus Slides Maybe you ask a question and we go here :)

- 58. We can do that w/Kafka streams.. ● Why bother learning from our mistakes? ● Or more seriously, the mistakes weren’t that bad...

- 59. Our “special” business logic def transform(input): """ Transforms the supplied input. """ return str(len(input)) Pargon

- 60. Let’s pretend all the world is a string: override def transform(value: String): String = { // WARNING: This may summon Cthulhu dataOut.writeInt(value.getBytes.size) dataOut.write(value.getBytes) dataOut.flush() val resultSize = dataIn.readInt() val result = new Array[Byte](resultSize) dataIn.readFully(result) // Assume UTF8, what could go wrong? :p new String(result) } From https://github.com/holdenk/kafka-streams-python-cthulhu

- 61. Then make an instance to use it... val testFuncFile = "kafka_streams_python_cthulhu/strlen.py" stream.transformValues( PythonStringValueTransformerSupplier(testFuncFile)) // Or we could wrap this in the bridge but that's effort. From https://github.com/holdenk/kafka-streams-python-cthulhu

- 62. Let’s pretend all the world is a string: def main(socket): while (True): input_length = _read_int(socket) data = socket.read(input_length) result = transform(data) resultBytes = result.encode() _write_int(len(resultBytes), socket) socket.write(resultBytes) socket.flush() From https://github.com/holdenk/kafka-streams-python-cthulhu
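
The Python half of this protocol can be exercised without Kafka or a JVM. The sketch below fills in `_read_int` and `_write_int` under the assumption that they mirror Java's `DataOutputStream.writeInt` (4 bytes, big-endian), decodes the payload as UTF-8 explicitly, and uses in-memory buffers in place of the socket; the `serve` name and the EOF handling are additions for illustration.

```python
import io
import struct


def _read_int(stream):
    """Read a 4-byte big-endian signed int, as written by Java's writeInt."""
    data = stream.read(4)
    if len(data) < 4:
        raise EOFError("stream closed")
    return struct.unpack(">i", data)[0]


def _write_int(value, stream):
    """Write a 4-byte big-endian signed int, as expected by Java's readInt."""
    stream.write(struct.pack(">i", value))


def transform(text):
    """The 'special' business logic from the earlier slide."""
    return str(len(text))


def serve(stream_in, stream_out):
    """Answer length-prefixed requests until the input stream is exhausted."""
    while True:
        try:
            input_length = _read_int(stream_in)
        except EOFError:
            return
        data = stream_in.read(input_length).decode("utf-8")
        result_bytes = transform(data).encode("utf-8")
        _write_int(len(result_bytes), stream_out)
        stream_out.write(result_bytes)
        stream_out.flush()


# Simulate the JVM side writing two requests.
request = io.BytesIO()
for word in ["hi boo", "boo"]:
    encoded = word.encode("utf-8")
    _write_int(len(encoded), request)
    request.write(encoded)
request.seek(0)

response = io.BytesIO()
serve(request, response)
```

Decoding before calling `transform` means lengths are counted in characters rather than bytes, which matters as soon as the input is not plain ASCII.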

- 63. What does that let us do? ● You can add a map stage with your data scientist’s Python code in the middle ● You’re limited to strings* ● Still missing the “driver side” integration (e.g. the interface requires someone to make a Scala class at some point)

- 64. What about things other than strings? Use another system ● Like Spark! (oh wait) or BEAM* or FLINK*? Write it in a format Python can understand: ● Pickling (from Java) ● JSON ● XML Purely Python solutions ● Currently roll-your-own (but not that bad) *These are also JVM based solutions calling into Python. I’m not saying they will also summon Cthulhu, I’m just saying hang onto your souls.
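
Of the formats listed, JSON is the easiest to layer on a strings-only channel: the JVM sends a JSON document as the string value and the Python side parses it, works on the record, and returns JSON. A minimal sketch, where the record fields and the `transform_json` name are made up for illustration:

```python
import json


def transform_json(payload):
    """Parse a JSON record, add a derived field, and return JSON text."""
    record = json.loads(payload)
    record["word_count"] = len(record["text"].split())
    # sort_keys keeps the output stable regardless of insertion order.
    return json.dumps(record, sort_keys=True)


reply = transform_json('{"id": 7, "text": "hi boo boo"}')
```

This keeps the transport unchanged at the price of parsing and re-encoding every record on both sides.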
