Message Passing, Remote Procedure Calls and Distributed Shared Memory as Communication Paradigms for Distributed Systems & Remote Procedure Call Implementation Using Distributed Algorithms

- 1. Advanced Operating System. Presented by: Zunera Altaf, Sehrish Asif, Wajeeha Malik

- 2. Reference:
  - Message Passing, Remote Procedure Calls and Distributed Shared Memory as Communication Paradigms for Distributed Systems. J. Silcock and A. Goscinski {jackie, [email protected]}, School of Computing and Mathematics, Deakin University, Geelong, Australia.
  - Remote Procedure Call Implementation Using Distributed Algorithms. G. Murali, K. Anusha, A. Shirisha, S. Sravya; Assistant Professor, Dept of Computer Science Engineering, JNTU-Pulivendula, AP, India.

- 4. Introduction
  - Distributed system
  - Distributed programming
  - Reasons for using a distributed system
    - The application itself may require a communication network that connects several computers.
    - Even where a single computer would be possible in principle, a distributed system may be preferable for practical reasons.
  - Examples of distributed systems
    - Telephone networks and cellular networks
    - Computer networks such as the Internet
    - Wireless sensor networks
  - Applications of distributed computing
    - World Wide Web and peer-to-peer networks
    - Massively multiplayer online games and virtual reality communities
    - Distributed databases and distributed database management systems

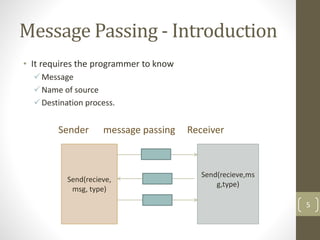

- 5. Message Passing: Introduction
  - It requires the programmer to know the message, the name of the source, and the destination process.
  - Figure: a sender passes a message to a receiver; both sides are labelled `Send(receive, msg, type)`.

- 6. Message Passing
  - Message passing is the basis of most interprocess communication in distributed systems. It is at the lowest level of abstraction and requires the application programmer to be able to identify:
    - the message,
    - the name of the source process,
    - the destination process,
    - the data types expected by the processes.

- 7. Syntax of MP
  - Communication in the message passing paradigm, in its simplest form, is performed using the `send()` and `receive()` primitives.
  - The syntax is generally of the form:
    - `send(receiver, message)`
    - `receive(sender, message)`
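The two primitives above can be sketched in a few lines of Python. This is only an illustration inside a single process: the `mailboxes` table, the process names, and the extra `sender` argument are assumptions made for the sketch, not part of the slides or of any particular operating system.

```python
import queue

# Hypothetical mailbox table: one FIFO queue per process name.
mailboxes = {"producer": queue.Queue(), "consumer": queue.Queue()}


def send(receiver, message, sender):
    """Deliver (sender, message) to the receiver's mailbox."""
    mailboxes[receiver].put((sender, message))


def receive(me):
    """Block until a message arrives for `me`; return (sender, message)."""
    return mailboxes[me].get()


send("consumer", "item-1", sender="producer")
sender, message = receive("consumer")
print(sender, message)
```

Unlike the slide's `receive(sender, message)`, this sketch returns the sender and message as a pair, which is the more natural form in Python.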

- 8. Semantics of MP
  - Decisions have to be made, at the operating system level, regarding the semantics of the `send()` and `receive()` primitives.
  - The most fundamental of these are the choices between blocking and non-blocking primitives and between reliable and unreliable primitives.

- 9. Semantics (cont.)
  - Blocking: a blocking operation waits until the communication has completed in its local process before continuing.
    - A blocking send does not return until the message has been copied into the message passing system's internal buffer.
    - A blocking receive waits until a message has been received and completely decoded before returning.
  - Non-blocking: a non-blocking operation initiates a communication without waiting for it to complete.
    - The call to a non-blocking send or receive returns as soon as the operation has begun, not when it completes.
  - Pros and cons of non-blocking: the CPU is not left idle while the send is being completed. However, the sender does not know, and will not be informed, when the message buffer has been cleared.
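The difference between the two receive behaviours can be shown with Python's standard `queue` module. This is a sketch under assumptions: the `channel` queue, the 0.1 second delay, and the helper names are invented for illustration, and a timeout is added to the blocking call only so the example cannot hang.

```python
import queue
import threading
import time

channel = queue.Queue()


def non_blocking_receive(q):
    """Return a waiting message, or None at once if there is none."""
    try:
        return q.get_nowait()
    except queue.Empty:
        return None


# Nothing has been sent yet, so the non-blocking call returns immediately.
first_try = non_blocking_receive(channel)


def delayed_sender():
    time.sleep(0.1)          # simulate a slow sender
    channel.put("hello")


threading.Thread(target=delayed_sender).start()

# The blocking receive waits here until the sender's message arrives.
received = channel.get(timeout=5)
print(first_try, received)
```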

- 10. Semantics (cont.)
  - Buffering
    - An unbuffered send and receive means that the sending process sends the message directly to the receiving process rather than to a message buffer. The address, `receiver`, in `send()` is the address of the process, and the same holds for the address in `receive()`.
    - In the unbuffered case there is a problem if `send()` is called before `receive()`, because the address in the send does not refer to any existing process on the server machine.
  - Figure: sender process and receiver process.

- 11. Semantics (cont.)
  - A buffered send and receive passes the address of a buffer, rather than of a process, as the destination parameter of `send()` and `receive()`.
  - Buffered messages are saved in the buffer until the server process is ready to process them.
  - Figure: the sender calls `Send(buff, type, msg)`; the message waits in the buffer for the receiver.
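A buffered send can be pictured as depositing messages in a mailbox that outlives the call. The sketch below assumes a hypothetical `MessageBuffer` class; it only shows that sends issued before the receiver is ready are not lost, which is the problem case for the unbuffered scheme.

```python
from collections import deque


class MessageBuffer:
    """Hypothetical buffer that holds messages until a receiver asks for them."""

    def __init__(self):
        self._pending = deque()

    def send(self, msg_type, msg):
        # The sender addresses the buffer, not the receiving process.
        self._pending.append((msg_type, msg))

    def receive(self):
        # Return the oldest pending message, or None if the buffer is empty.
        return self._pending.popleft() if self._pending else None


buff = MessageBuffer()
buff.send("int", 1)          # sent before any receiver is ready
buff.send("int", 2)

# The receiver becomes ready later and drains the buffer in FIFO order.
drained = []
while (m := buff.receive()) is not None:
    drained.append(m)
print(drained)
```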

- 12. Semantics (cont.): Reliability
  - Figure: an unreliable send. The sender process waits for a response without knowing that the message was not transmitted to the receiver.
  - Figure: a reliable send. The exchange between sender and receiver is labelled "Message received".

- 13. Semantics (cont.)
  - Direct/indirect communication: ports allow indirect communication. Messages are sent to the port by the sender and received from the port by the receiver. Direct communication involves the message being sent directly to the process itself, which is named explicitly in the send, rather than to an intermediate port.
  - Fixed/variable size messages: fixed size messages have their size restricted by the system. Variable size messages are more difficult to implement but make programming easier; the reverse is true for fixed size messages.

- 14. Message passing demo code (C#, MPI.NET)

```csharp
using System;
using MPI;

class Program
{
    static void Main(string[] args)
    {
        // Initialise MPI for the lifetime of the using block.
        using (new MPI.Environment(ref args))
        {
            Intracommunicator comm = Communicator.world;
            int number = 6;
            if (comm.Rank == 0)
            {
                // Rank 0 sends one number to each worker and prints the returned products.
                for (int i = 1; i < comm.Size && number > 0; i++, number--)
                {
                    comm.Send<int>(number, i, 0);              // tag 0: the number to multiply
                    int[] answer = comm.Receive<int[]>(i, 1);   // tag 1: the worker's results
                    for (int j = 0; j < answer.Length; j++)
                    {
                        Console.WriteLine(number + " * " + j + " = " + answer[j]);
                    }
                }
            }
            else
            {
                // Each worker receives a number from rank 0 and returns X * 0 through X * 9.
                int size = 10;
                int[] array = new int[size];
                int X = comm.Receive<int>(0, 0);
                for (int i = 0; i < size; i++)
                {
                    array[i] = X * i;
                }
                comm.Send<int[]>(array, 0, 1);
                Console.WriteLine("rank :" + comm.Rank);
            }
        }
    }
}
```

- 15. Output

| Rank 1 | Rank 2 | Rank 4 | Rank 5 |
|---|---|---|---|
| 1*1=1 | 4*1=4 | 2*1=2 | 3*1=3 |
| 1*2=2 | 4*2=8 | 2*2=4 | 3*2=6 |
| 1*3=3 | 4*3=12 | 2*3=6 | 3*3=9 |
| 1*4=4 | 4*4=16 | 2*4=8 | 3*4=12 |
| 1*5=5 | 4*5=20 | 2*5=10 | 3*5=15 |
| 1*6=6 | 4*6=24 | 2*6=12 | 3*6=18 |
| 1*7=7 | 4*7=28 | 2*7=14 | 3*7=21 |
| 1*8=8 | 4*8=32 | 2*8=16 | 3*8=24 |
| 1*9=9 | 4*9=36 | 2*9=18 | 3*9=27 |
| 1*10=10 | 4*10=40 | 2*10=20 | 3*10=30 |

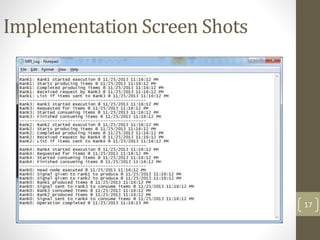

- 16. Producer-consumer example using message passing

- 18. From message passing to RPC
  - Message passing: explicit control of the movement of data.
  - RPC: the level of abstraction increases.

- 19. RPC: Remote Procedure Calling
  - RPC is a powerful technique for communication between distributed processes in client-server based applications.
  - The calling procedure and the called procedure need not exist in the same address space.
  - The two processes may be on the same system or on different systems.

- 20. Syntax
  - Call: `call procedure_name(value_arguments; result_arguments)`
  - Receive: `receive procedure_name(in value_parameters; out result_parameters)`
  - Reply: `reply(caller, result_parameters)`
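The call, receive, and reply steps can be traced in a small single-process sketch. Everything here is an assumption for illustration: the `PROCEDURES` registry, the JSON wire format, and the direct function call that stands in for the network are not taken from the slides or from any real RPC library.

```python
import json

# Server side: procedures that may be called remotely (hypothetical registry).
PROCEDURES = {"add": lambda a, b: a + b}


def server_handle(request_bytes):
    """Receive: unmarshal the call, run the procedure, reply with the result."""
    request = json.loads(request_bytes)
    result = PROCEDURES[request["procedure"]](*request["args"])
    return json.dumps({"result": result}).encode()


def call(procedure_name, *value_arguments):
    """Client stub: marshal the call, 'send' it, and unmarshal the reply."""
    request_bytes = json.dumps(
        {"procedure": procedure_name, "args": list(value_arguments)}
    ).encode()
    reply_bytes = server_handle(request_bytes)   # stands in for the network
    return json.loads(reply_bytes)["result"]


total = call("add", 2, 3)
print(total)
```

The caller writes `call("add", 2, 3)` much as it would a local call; the marshalling and the request/reply exchange are hidden in the stub.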

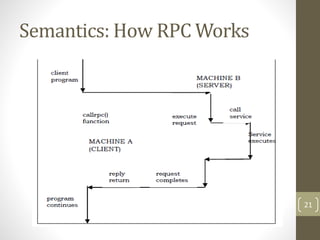

- 21. Semantics: How RPC Works

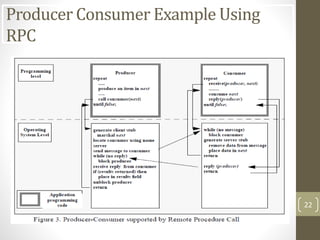

- 22. Producer-Consumer Example Using RPC

- 23. TSP: Travelling Salesman Problem
  - Given a set of cities and the distance between each possible pair, the Travelling Salesman Problem is to find the best possible way of "visiting all the cities exactly once and returning to the starting point".
  - The traditional lines of attack for NP-hard problems are the following:
    1. Finding exact solutions: fast only for small problem sizes.
    2. Devising suboptimal algorithms: these probably provide a good solution, but it is not proved to be optimal.
    3. Finding special cases of the problem: for these, either better or exact heuristics are possible.

- 24. TSP: Travelling Salesman Problem

- 25. Using Parallel Branch & Bound
  - B&B uses a tree for TSP.
  - Each node of the tree represents a partial tour.
  - Each leaf represents a solution of the problem.
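The search tree described above can be sketched as a recursive function in which each call is a node (a partial tour) and each complete tour is a leaf. This is a sequential sketch, not the parallel version the slide refers to; the function name, the 4-city distance matrix, and the simple cost bound are assumptions for illustration, and the pruning rule assumes non-negative distances.

```python
import math


def tsp_branch_and_bound(dist):
    """Return (cost, tour) of a shortest tour that starts and ends at city 0."""
    n = len(dist)
    best = {"cost": math.inf, "tour": None}

    def extend(tour, cost, visited):
        # Bound: a partial tour already as costly as the best full tour cannot win.
        if cost >= best["cost"]:
            return
        if len(tour) == n:
            # Leaf: close the tour by returning to the starting city.
            total = cost + dist[tour[-1]][tour[0]]
            if total < best["cost"]:
                best["cost"], best["tour"] = total, tour + [tour[0]]
            return
        # Branch: try every city that has not been visited yet.
        for city in range(n):
            if city not in visited:
                extend(tour + [city], cost + dist[tour[-1]][city], visited | {city})

    extend([0], 0, {0})
    return best["cost"], best["tour"]


dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
best_cost, best_tour = tsp_branch_and_bound(dist)
print(best_cost, best_tour)
```

In a parallel setting, the subtrees below the root could be handed to different workers, which would then need to exchange the best cost found so far; that coordination is left out here.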

- 26. Issues regarding the properties of remote procedure calls: transparency
  - Binding (name to location): implemented at the operating system level, using a static or dynamic linker extension. Another method is to use procedure variables which contain a value that is linked to the procedure location.
  - Communication and site failures: these can result in inconsistent data because of partially completed processes. The solution to this problem is often left to the application programmer.
  - Parameter passing: in most systems this is restricted to the use of value parameters.
  - Exception handling: a problem also associated with heterogeneity. The exceptions available in different languages vary and have to be limited to the lowest common denominator.
  - Communication transparency: the user should be unaware that the procedure they are calling is remote.

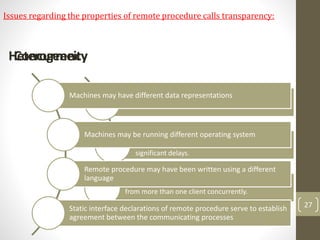

- 27. Issues regarding the properties of remote procedure calls: transparency (cont.)
  - Concurrency
    - Should not interfere with communication mechanisms.
    - Single-threaded clients and servers, when blocked while waiting for the results of an RPC, can cause significant delays.
    - Lightweight processes allow the server to execute calls from more than one client concurrently.
  - Heterogeneity
    - Machines may have different data representations.
    - Machines may be running different operating systems.
    - The remote procedure may have been written in a different language.
    - Static interface declarations of remote procedures serve to establish agreement between the communicating processes.
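The "different data representations" point can be made concrete with byte order. The sketch below uses Python's standard `struct` module; the value 1027 and the choice of big-endian as the agreed wire format are assumptions for illustration, standing in for whatever an interface declaration would fix between client and server.

```python
import struct

value = 1027

# The same 32-bit integer laid out by a big-endian and a little-endian machine.
big_endian = struct.pack(">i", value)
little_endian = struct.pack("<i", value)

# A little-endian receiver that reads big-endian bytes naively gets a wrong value.
misread = struct.unpack("<i", big_endian)[0]

# If both sides agree on one wire format, the value survives the trip.
agreed = struct.unpack(">i", big_endian)[0]

print(big_endian.hex(), little_endian.hex(), misread, agreed)
```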

- 28. Distributed Shared Memory
  - DSM is memory which, although distributed over a network of autonomous computers, is accessed through virtual addresses.
  - DSM allows programmers to use shared-memory style programming.
  - Programmers are able to access complex data structures.
  - The OS has to send messages between machines with requests for memory that is not available locally, and has to keep replicated memory consistent.

- 29. Syntax
  - The syntax used for DSM is the same as that of normal centralized-memory multiprocessor systems:
    - `read(shared_variable)`
    - `write(data, shared_variable)`
  - The `read()` primitive requires the name of the variable to be read as its argument.
  - The `write()` primitive requires the data and the name of the variable to which the data is to be written.
  - The variable is located through its virtual address.
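To show why the same `read()`/`write()` syntax hides different costs, here is a toy single-process model. The `ToyDSM` class, the node names, the extra `node` argument, and the way remote accesses are counted are all assumptions for illustration; a real DSM would resolve virtual addresses and exchange messages between machines.

```python
class ToyDSM:
    """Single-process stand-in for a DSM: each variable lives on one owner node."""

    def __init__(self):
        self.owner = {}            # variable name -> node that holds it
        self.memory = {}           # node -> {variable name: value}
        self.remote_accesses = 0   # accesses that would need network messages

    def write(self, node, data, shared_variable):
        # The first writer becomes the owner of the variable.
        holder = self.owner.setdefault(shared_variable, node)
        if holder != node:
            self.remote_accesses += 1
        self.memory.setdefault(holder, {})[shared_variable] = data

    def read(self, node, shared_variable):
        holder = self.owner[shared_variable]
        if holder != node:
            self.remote_accesses += 1
        return self.memory[holder][shared_variable]


dsm = ToyDSM()
dsm.write("node_a", 42, "x")      # local write: node_a becomes the owner
local = dsm.read("node_a", "x")   # local read, no network traffic
remote = dsm.read("node_b", "x")  # same syntax, but the data lives elsewhere
print(local, remote, dsm.remote_accesses)
```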

- 30. Semantics: there are several issues related to the semantics of DSM
  - 1. Structure and granularity
    - Structure: memory can take the form of an unstructured linear array of words or of structured objects.
    - Granularity: relates to the size of the chunks of shared data.
    - Coarse-grained solution: page-based distributed memory management. It is an attempt to implement a virtual memory model where paging takes place over a network instead of to disk.
    - Finer-grained solution: it can lead to higher network traffic.

- 31. Semantics (cont.)
  - 2. Consistency
    - In the simplest implementation of shared memory, requests for non-local pieces of data can produce behaviour very similar to thrashing in virtual memory, which lowers performance.
    - Consistency models determine the conditions under which memory updates will be propagated through the system.

- 32. Semantics (cont.)
  - 3. Synchronization: shared data must be protected by synchronization primitives such as locks, monitors or semaphores.
    - It can be managed by a synchronization manager.
    - It can be made the responsibility of the application developer.
    - Finally, it can be made the responsibility of the system developer.

- 33. Semantics (cont.)
  - 4. Heterogeneity: sharing data between heterogeneous machines is an important problem.
    - 4.1 Mermaid approach: allow only one type of data on an appropriately tagged page.
    - The overhead of converting that data might be too high to make DSM on heterogeneous systems worthwhile.
  - 5. Scalability: this is one of the benefits of DSM systems.
    - They scale better than many tightly-coupled multiprocessors.
    - Scalability is limited by physical bottlenecks.

- 34. Producer-Consumer supported by DSM at System Level
  - The syntax of the code is the same as that for the centralized version, but the implications for the OS are quite different.
  - A process requiring a lock on a semaphore executes `wait(sem)`.
  - When the process has completed the critical region it executes `signal(sem)`.
  - The best method of measuring the value of DSM is to measure its performance against the other communication paradigms.
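The `wait(sem)` and `signal(sem)` pattern looks the same as in a centralized program. The sketch below uses threads in one Python process as a stand-in for processes sharing DSM; `acquire()` plays the role of `wait` and `release()` the role of `signal`. The buffer size, item count, and variable names are assumptions for illustration.

```python
import threading
from collections import deque

BUFFER_SIZE = 3
buffer = deque()                                 # the shared data
empty_slots = threading.Semaphore(BUFFER_SIZE)   # counts free slots
full_slots = threading.Semaphore(0)              # counts filled slots
mutex = threading.Semaphore(1)                   # guards the critical region
consumed = []


def producer(count):
    for item in range(count):
        empty_slots.acquire()    # wait until there is a free slot
        mutex.acquire()          # wait(sem): enter the critical region
        buffer.append(item)
        mutex.release()          # signal(sem): leave the critical region
        full_slots.release()     # announce a filled slot


def consumer(count):
    for _ in range(count):
        full_slots.acquire()     # wait until there is an item
        mutex.acquire()          # wait(sem)
        item = buffer.popleft()
        mutex.release()          # signal(sem)
        empty_slots.release()    # announce a free slot
        consumed.append(item)


threads = [
    threading.Thread(target=producer, args=(10,)),
    threading.Thread(target=consumer, args=(10,)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(consumed)
```

With a DSM underneath, each semaphore operation and each buffer access could trigger messages between machines, which is where the OS-level cost comes from.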

- 35. Producer-Consumer supported by DSM at User Level
  - In this implementation, shared memory exists at user level in the memory space of the server.
  - The producer and consumer both use remote procedure calls to call the central memory manager (CMM).

- 36. Analysis
  - This analysis of the implementations of the producer-consumer problem using message passing, RPC and DSM at user and operating system level is based on the following three criteria:
    - Ease of implementation for the system designer
    - Ease of use at application programming level
    - Performance

- 37. Ease of implementation for the system designer
  - When the message passing paradigm is used for communication between processes, the system must provide a mechanism for passing messages between processes.
  - DSM code written at system level is complex compared with message passing and RPC.
  - Finally, for DSM implemented at user level, the system designer must provide message passing and RPC and then use these two mechanisms to implement a central memory manager.

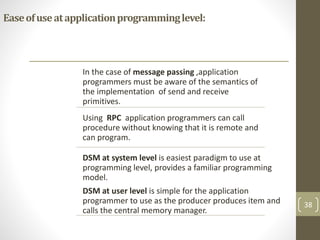

- 38. Ease of use at application programming level
  - With message passing, application programmers must be aware of the semantics of the implementation of the send and receive primitives.
  - With RPC, application programmers can call a procedure without knowing that it is remote.
  - DSM at system level is the easiest paradigm to use at programming level because it provides a familiar programming model.
  - DSM at user level is simple for the application programmer to use: the producer produces an item and calls the central memory manager.

- 39. Performance
  - Interprocess communication consumes a large amount of time, so the number of messages passed gives a relative measure of performance between the three paradigms.
  - The message passing implementation requires only 1 message to be passed, RPC requires 2 messages, DSM implemented at OS level requires 12 messages, and DSM at user level requires 4 messages.
  - DSM has a large overhead which must be minimized as much as possible.

- 40. Conclusion
  - We have discussed three high-level communication paradigms for distributed operating systems (DOS): message passing, RPC and DSM.
  - The discussion is based on their syntax and semantics, and on the implications of implementing and using these paradigms for the application programmer and the OS designer.


Editor's Notes

- #5: A distributed system consists of independent computers that communicate over a computer network to achieve a common goal. A distributed program is a computer program that runs in a distributed system, and the process of writing such programs is called distributed programming.

- #24: TSP is an NP-hard problem: given a list of cities, find the shortest possible tour that visits each city exactly once. The worst-case running time of any algorithm for TSP grows with the number of cities. For this type of problem, we use the branch and bound method.
