Metaheuristic Optimization: Algorithm Analysis and Open Problems

- 1. Intro Metaheuristic Algorithms Applications Markov Chains Analysis All NFL Open Problems Thanks Metaheuristic Optimization: Algorithm Analysis and Open Problems Xin-She Yang National Physical Laboratory, UK @ SEA 2011 Xin-She Yang 2011 Metaheuristics and Optimization

- 9. Intro
  - "Computational science is now the third paradigm of science, complementing theory and experiment." (Ken Wilson, Cornell University, Nobel Laureate)
  - "All models are inaccurate, but some are useful." (George Box, statistician)
  - "All algorithms perform equally well on average over all possible functions." (No-free-lunch theorems, Wolpert & Macready) Not quite! (more later)

- 10. Overview
  - Introduction
  - Metaheuristic Algorithms
  - Applications
  - Markov Chains and Convergence Analysis
  - Exploration and Exploitation
  - Free Lunch or No Free Lunch?
  - Open Problems

- 13. Metaheuristic Algorithms
  - Essence of an optimization algorithm: to move to a new, better point $x_{i+1}$ from an existing known location $x_i$.
  - [Figure: a search path $x_1, x_2, \dots, x_i$, with the next point $x_{i+1}$ still to be chosen.]
  - Population-based algorithms use multiple, interacting paths.
  - Different algorithms: different strategies/approaches in generating these moves!

- 14. Optimization Algorithms
  - Deterministic: Newton's method (1669, published in 1711), Newton-Raphson (1690), hill-climbing/steepest descent (Cauchy 1847), least-squares (Gauss 1795), linear programming (Dantzig 1947), conjugate gradient (Lanczos et al. 1952), interior-point method (Karmarkar 1984), etc.

- 15. Stochastic/Metaheuristic
  - Genetic algorithms (1960s/1970s), evolutionary strategy (Rechenberg & Schwefel 1960s), evolutionary programming (Fogel et al. 1960s).
  - Simulated annealing (Kirkpatrick et al. 1983), Tabu search (Glover 1980s), ant colony optimization (Dorigo 1992), genetic programming (Koza 1992), particle swarm optimization (Kennedy & Eberhart 1995), differential evolution (Storn & Price 1996/1997), harmony search (Geem et al. 2001), honeybee algorithm (Nakrani & Tovey 2004), ..., firefly algorithm (Yang 2008), cuckoo search (Yang & Deb 2009), ...

- 16. Steepest Descent/Hill Climbing
  - Gradient-based methods use gradient/derivative information and are very efficient for local search.

- 25. Newton's Method

  $$x_{n+1} = x_n - H^{-1}\nabla f, \qquad H = \begin{pmatrix} \dfrac{\partial^2 f}{\partial x_1^2} & \cdots & \dfrac{\partial^2 f}{\partial x_1 \partial x_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial^2 f}{\partial x_n \partial x_1} & \cdots & \dfrac{\partial^2 f}{\partial x_n^2} \end{pmatrix}$$

  - Quasi-Newton: if $H$ is replaced by $I$, we have $x_{n+1} = x_n - \alpha I \nabla f(x_n)$. Here $\alpha$ controls the step length.
  - Generation of new moves by gradient.
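To make the two update rules on this slide concrete, here is a minimal sketch that applies one Newton step and a run of fixed-step gradient moves to a small quadratic. The test function, its gradient and Hessian, the starting point and the step length `alpha=0.05` are all illustrative assumptions, not taken from the talk.

```python
import numpy as np

def newton_step(x, grad, hess):
    """One Newton update: x_new = x - H^{-1} grad f(x)."""
    return x - np.linalg.solve(hess(x), grad(x))

def gradient_step(x, grad, alpha):
    """One update with H replaced by I: x_new = x - alpha * grad f(x)."""
    return x - alpha * grad(x)

# Hypothetical test function f(x) = x0^2 + 10*x1^2, with its minimum at the origin.
def grad_f(x):
    return np.array([2.0 * x[0], 20.0 * x[1]])

def hess_f(x):
    return np.array([[2.0, 0.0], [0.0, 20.0]])

x_start = np.array([3.0, -2.0])

# For a quadratic, a single Newton step lands on the minimum.
x_newton = newton_step(x_start, grad_f, hess_f)

# The gradient-only rule needs many small steps to get close.
x_gd = x_start.copy()
for _ in range(100):
    x_gd = gradient_step(x_gd, grad_f, alpha=0.05)

print("Newton after 1 step:    ", x_newton)
print("Gradient after 100 steps:", x_gd)
```

The comparison illustrates why curvature information helps local search: the Hessian rescales each coordinate, while a single scalar $\alpha$ has to be small enough for the steepest direction.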

- 27. Simulated Annealing
  - Metal annealing to increase strength $\Longrightarrow$ simulated annealing.
  - Probabilistic move: $p \propto \exp[-E / k_B T]$, where $k_B$ = Boltzmann constant (e.g., $k_B = 1$), $T$ = temperature, $E$ = energy.
  - $E \propto f(x)$, $T = T_0 \alpha^t$ (cooling schedule), $(0 < \alpha < 1)$.
  - $T \to 0 \Longrightarrow p \to 0 \Longrightarrow$ hill climbing.
  - This is essentially a Markov chain.
  - Generation of new moves by Markov chain.
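The acceptance rule and geometric cooling schedule can be sketched in a few lines. This is a minimal illustration, assuming a one-dimensional objective, a uniform proposal of width `step_size`, and a Metropolis-style acceptance on the energy change (with $k_B = 1$); these choices and all parameter values are assumptions, not the speaker's implementation.

```python
import math
import random

def simulated_annealing(f, x0, t0=1.0, alpha=0.95, steps=2000, step_size=0.5, seed=0):
    """Minimise f starting from x0 with geometric cooling T = t0 * alpha**t."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    for t in range(steps):
        temp = t0 * alpha ** t                      # cooling schedule
        cand = x + rng.uniform(-step_size, step_size)
        fc = f(cand)
        delta = fc - fx                             # energy change, E taken as f
        # Improvements are always accepted; worse moves with p = exp(-delta / T).
        if delta <= 0 or rng.random() < math.exp(-delta / max(temp, 1e-300)):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
    return best_x, best_f

# Hypothetical objective with its minimum at x = 2.
best_x, best_f = simulated_annealing(lambda x: (x - 2.0) ** 2, x0=-5.0)
print(best_x, best_f)
```

As the temperature falls, the probability of accepting a worse move goes to zero and the loop behaves like plain hill climbing, which is the limit stated on the slide.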

- 28. An Example

- 29. Genetic Algorithms: crossover, mutation

- 34. Generation of new solutions by crossover, mutation and elitism.
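The three operators named on this slide can be put together in a very small genetic algorithm. The sketch below is illustrative only: the bit-string encoding, the "better half" parent selection, one-point crossover, and every parameter value are assumptions chosen for brevity, and the OneMax fitness (count of ones) is a hypothetical test problem.

```python
import random

def genetic_algorithm(fitness, n_bits=20, pop_size=30, generations=60,
                      mutation_rate=0.02, n_elite=2, seed=0):
    """Maximise `fitness` over bit strings using crossover, mutation and elitism."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        # Elitism: the best individuals are copied unchanged.
        new_pop = [ind[:] for ind in pop[:n_elite]]
        while len(new_pop) < pop_size:
            # Parents are drawn from the better half (an assumed selection rule).
            p1, p2 = rng.sample(pop[:pop_size // 2], 2)
            cut = rng.randint(1, n_bits - 1)        # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation, applied independently to each bit.
            child = [b ^ 1 if rng.random() < mutation_rate else b for b in child]
            new_pop.append(child)
        pop = new_pop
    return max(pop, key=fitness)

best = genetic_algorithm(sum)   # OneMax: fitness is the number of ones
print(sum(best), best)
```

Because the elite are carried over untouched, the best fitness in the population can never decrease from one generation to the next.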

- 35. Swarm Intelligence
  - Ants, bees, birds, fish ...
  - Simple rules lead to complex behaviour.
  - [Figure: swarming starlings.]

- 38. PSO
  - [Figure: particles $x_i$ and $x_j$ moving towards the global best $g^*$.]
  - Particle swarm optimization (Kennedy and Eberhart 1995):

    $$v_i^{t+1} = v_i^t + \alpha \epsilon_1 (g^* - x_i^t) + \beta \epsilon_2 (x_i^* - x_i^t), \qquad x_i^{t+1} = x_i^t + v_i^{t+1}.$$

  - $\alpha, \beta$ = learning parameters, $\epsilon_1, \epsilon_2$ = random numbers.
  - Without randomness, generation of new moves by weighted average or pattern search.
  - Adding randomization to increase the diversity of new solutions.
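A minimal sketch of the PSO update follows. It adds an inertia weight on the velocity, which is not on the slide (setting `inertia=1.0` recovers the slide's formula); that term, the sphere test function, the bounds and all parameter values are assumptions made so that the toy run settles down.

```python
import numpy as np

def pso(f, dim=2, n_particles=20, iters=200, alpha=1.5, beta=1.5,
        inertia=0.7, bounds=(-5.0, 5.0), seed=0):
    """Minimise f with a basic particle swarm; returns (best position, best value)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))
    v = np.zeros_like(x)
    pbest = x.copy()                                # x_i^*: best position of each particle
    pbest_val = np.apply_along_axis(f, 1, x)
    g = pbest[np.argmin(pbest_val)].copy()          # g^*: best position of the swarm
    for _ in range(iters):
        eps1 = rng.random(x.shape)
        eps2 = rng.random(x.shape)
        # Velocity update: pull towards g^* (weight alpha) and x_i^* (weight beta).
        v = inertia * v + alpha * eps1 * (g - x) + beta * eps2 * (pbest - x)
        x = x + v
        vals = np.apply_along_axis(f, 1, x)
        improved = vals < pbest_val
        pbest[improved] = x[improved]
        pbest_val[improved] = vals[improved]
        g = pbest[np.argmin(pbest_val)].copy()
    return g, float(pbest_val.min())

# Hypothetical test problem: the sphere function, minimum 0 at the origin.
best_x, best_val = pso(lambda z: float(np.sum(z ** 2)))
print(best_x, best_val)
```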

- 41. PSO Convergence
  - Consider a 1D system without randomness (Clerc & Kennedy 2002):

    $$v_i^{t+1} = v_i^t + \alpha (x_i^* - x_i^t) + \beta (g - x_i^t), \qquad x_i^{t+1} = x_i^t + v_i^{t+1}.$$

  - Considering only one particle, defining $p = \dfrac{\alpha x_i^* + \beta g}{\alpha + \beta}$ and $\phi = \alpha + \beta$, and setting $y^t = p - x_i^t$, we have

    $$v^{t+1} = v^t + \phi y^t, \qquad y^{t+1} = -v^t + (1 - \phi) y^t.$$

  - This can be written as

    $$U_t = \begin{pmatrix} v^t \\ y^t \end{pmatrix}, \quad A = \begin{pmatrix} 1 & \phi \\ -1 & 1 - \phi \end{pmatrix}, \quad \Longrightarrow \quad U_{t+1} = A U_t,$$

    a simple dynamical system whose eigenvalues are

    $$\lambda_{\pm} = 1 - \frac{\phi}{2} \pm \frac{\sqrt{\phi^2 - 4\phi}}{2}.$$

  - Periodic, quasi-periodic depending on $\phi$. Convergence for $\phi \approx 4$.
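The eigenvalue formula can be checked numerically against the matrix $A$. The sketch below compares `numpy.linalg.eigvals` with the closed form for a few values of $\phi$; the sample values of $\phi$ are arbitrary choices for illustration.

```python
import cmath
import numpy as np

def pso_matrix(phi):
    """The 2x2 matrix A of the deterministic one-particle PSO system."""
    return np.array([[1.0, phi], [-1.0, 1.0 - phi]])

def closed_form_eigs(phi):
    """lambda_pm = 1 - phi/2 +/- sqrt(phi^2 - 4*phi)/2 (complex when 0 < phi < 4)."""
    disc = cmath.sqrt(phi * phi - 4.0 * phi)
    return 1.0 - phi / 2.0 + disc / 2.0, 1.0 - phi / 2.0 - disc / 2.0

for phi in (0.5, 2.0, 3.5, 5.0):
    numeric = np.linalg.eigvals(pso_matrix(phi))
    lam_plus, lam_minus = closed_form_eigs(phi)
    print(f"phi={phi}: |numeric|={np.abs(numeric)}, closed form={lam_plus}, {lam_minus}")
```

Since $\det A = (1 - \phi) + \phi = 1$, the two eigenvalues multiply to one. For $0 < \phi < 4$ the discriminant is negative, so they form a complex-conjugate pair of modulus one (oscillatory motion); for $\phi > 4$ they are real and one of them has modulus larger than one.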

- 43. Ant and Bee Algorithms
  - Ant Colony Optimization (Dorigo 1992).
  - Bee algorithms & many variants (Nakrani & Tovey 2004, Karaboga 2005, Yang 2005, Afshar et al. 2007, ..., others).
  - Advantages: very promising for combinatorial optimization, but for continuous problems they may not be the best choice.

- 44. Ant & Bee Algorithms
  - Pheromone based.
  - Each agent follows paths with higher pheromone concentration (quasi-randomly).
  - Pheromone evaporates (exponentially) with time.

- 45. Firefly Algorithm
  - Firefly Algorithm by Xin-She Yang (2008) (Xin-She Yang, Nature-Inspired Metaheuristic Algorithms, Luniver Press, 2008).
  - Firefly behaviour and idealization:
    - Fireflies are unisex and brightness varies with distance.
    - Less bright ones will be attracted to bright ones.
    - If no brighter firefly can be seen, a firefly will move randomly.

    $$x_i^{t+1} = x_i^t + \beta_0 e^{-\gamma r_{ij}^2} (x_j - x_i) + \alpha \epsilon_i^t.$$

  - Generation of new solutions by random walk and attraction.

- 48. FA Convergence
  - For the firefly motion without the randomness term, we focus on a single agent and replace $x_j^t$ by $g$:

    $$x_i^{t+1} = x_i^t + \beta_0 e^{-\gamma r_i^2} (g - x_i^t),$$

    where the distance $r_i = \|g - x_i^t\|_2$.
  - In the 1-D case, we set $y_t = g - x_i^t$ and $u_t = \sqrt{\gamma}\, y_t$, so that

    $$u_{t+1} = u_t \left[ 1 - \beta_0 e^{-u_t^2} \right].$$

  - Analyzing this using the same methodology as for the logistic map $u_{t+1} = \lambda u_t (1 - u_t)$, we have a corresponding chaotic map, focusing on the transition from periodic multiple states to chaotic behaviour.

- 49. FA Convergence (continued)
  - Convergence can be achieved for $\beta_0 < 2$.
  - There is a transition from periodic to chaos at $\beta_0 \approx 4$.
  - Chaotic characteristics can often be used as an efficient mixing technique for generating diverse solutions.
  - Too much attraction may cause chaos :)
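The behaviour of the one-dimensional firefly map $u_{t+1} = u_t[1 - \beta_0 e^{-u_t^2}]$ from the FA Convergence slide can be explored by simply iterating it. The sketch below is a minimal illustration; the starting value `u0 = 0.5`, the number of steps and the sampled values of $\beta_0$ are arbitrary assumptions.

```python
import math

def firefly_map(u0, beta0, steps=200):
    """Iterate u_{t+1} = u_t * (1 - beta0 * exp(-u_t^2)) and return the orbit."""
    u = u0
    orbit = [u]
    for _ in range(steps):
        u = u * (1.0 - beta0 * math.exp(-u * u))
        orbit.append(u)
    return orbit

for beta0 in (1.0, 1.5, 3.0, 4.5):
    tail = firefly_map(0.5, beta0)[-4:]
    print(f"beta0={beta0}: last iterates {[round(u, 4) for u in tail]}")
```

Near $u = 0$ the map behaves like $u_{t+1} \approx (1 - \beta_0) u_t$, so the fixed point at zero attracts when $|1 - \beta_0| < 1$, which matches the $\beta_0 < 2$ condition on the slide. For $\beta_0$ slightly above 2 the iterates settle instead on a two-point oscillation.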

- 51. Cuckoo Breeding Behaviour
  - Evolutionary advantages: the cuckoo dumps its eggs in the nests of host birds and lets these host birds raise its chicks.
  - Cuckoo video (BBC).

- 52. Cuckoo Search
  - Cuckoo Search by Xin-She Yang and Suash Deb (2009) (Xin-She Yang and Suash Deb, Cuckoo search via Lévy flights, in: Proceedings of World Congress on Nature & Biologically Inspired Computing (NaBIC 2009, India), IEEE Publications, USA, pp. 210-214 (2009). Also, Xin-She Yang and Suash Deb, Engineering Optimization by Cuckoo Search, Int. J. Mathematical Modelling and Numerical Optimisation, Vol. 1, No. 4, 330-343 (2010).)
  - Cuckoo behaviour and idealization:
    - Each cuckoo lays one egg (solution) at a time, and dumps its egg in a randomly chosen nest.
    - The best nests with high-quality eggs (solutions) will carry over to the next generation.
    - The egg laid by a cuckoo can be discovered by the host bird with a probability $p_a$, and a new nest will then be built.

- 56. Cuckoo Search
  - Local random walk:

    $$x_i^{t+1} = x_i^t + s \otimes H(p_a - \epsilon) \otimes (x_j^t - x_k^t).$$

    Here $x_i$, $x_j$, $x_k$ are 3 different solutions, $H(u)$ is a Heaviside function, $\epsilon$ is a random number drawn from a uniform distribution, and $s$ is the step size.
  - Global random walk via Lévy flights:

    $$x_i^{t+1} = x_i^t + \alpha L(s, \lambda), \qquad L(s, \lambda) = \frac{\lambda \Gamma(\lambda) \sin(\pi \lambda / 2)}{\pi} \frac{1}{s^{1+\lambda}}, \quad (s \gg s_0).$$

  - Generation of new moves by Lévy flights, random walk and elitism.
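The two ingredients on this slide can be written down directly. The sketch below evaluates the power-law tail $L(s, \lambda)$ and performs one local random-walk step; it does not sample Lévy-distributed step lengths, and the function names, the convention $H(0) = 0$ and the sample values are assumptions for illustration.

```python
import math
import numpy as np

def levy_tail(s, lam):
    """L(s, lambda) = lambda * Gamma(lambda) * sin(pi*lambda/2) / (pi * s^(1+lambda))."""
    return lam * math.gamma(lam) * math.sin(math.pi * lam / 2.0) / (math.pi * s ** (1.0 + lam))

def local_walk(x_i, x_j, x_k, s, pa, rng):
    """x_i + s * H(pa - eps) * (x_j - x_k), applied component-wise."""
    eps = rng.random(x_i.shape)            # uniform random numbers in [0, 1)
    mask = (pa - eps) > 0                  # Heaviside step, taking H(0) = 0
    return x_i + s * mask * (x_j - x_k)

rng = np.random.default_rng(0)
x_i = np.array([0.0, 0.0, 0.0])
x_j = np.array([1.0, 2.0, 3.0])
x_k = np.array([0.5, 0.5, 0.5])

print(levy_tail(10.0, 1.5))                # heavy tail: decays like s^(-2.5)
print(local_walk(x_i, x_j, x_k, s=0.1, pa=0.25, rng=rng))
```

With `pa = 0.25`, roughly a quarter of the components are perturbed in each local step, while the rest of the solution is left unchanged.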

- 57. Applications
  - Design optimization: structural engineering, product design ...
  - Scheduling, routing and planning: often discrete, combinatorial problems ...
  - Applications in almost all areas (e.g., finance, economics, engineering, industry, ...)

- 58. Pressure Vessel Design Optimization
  - [Figure: pressure vessel with design variables $d_1$, $d_2$, $r$ and $L$.]

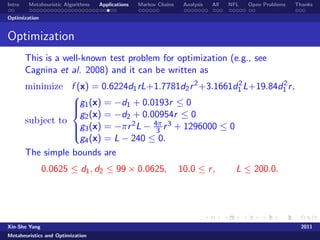

- 61. Optimization
  - This is a well-known test problem for optimization (e.g., see Cagnina et al. 2008) and it can be written as

    $$\text{minimize } f(x) = 0.6224\, d_1 r L + 1.7781\, d_2 r^2 + 3.1661\, d_1^2 L + 19.84\, d_1^2 r,$$

    $$\text{subject to } \begin{cases} g_1(x) = -d_1 + 0.0193\, r \le 0 \\ g_2(x) = -d_2 + 0.00954\, r \le 0 \\ g_3(x) = -\pi r^2 L - \dfrac{4\pi}{3} r^3 + 1296000 \le 0 \\ g_4(x) = L - 240 \le 0. \end{cases}$$

  - The simple bounds are $0.0625 \le d_1, d_2 \le 99 \times 0.0625$ and $10.0 \le r, L \le 200.0$.
  - The best solution found so far: $f_* = 6059.714$, $x_* = (0.8125, 0.4375, 42.0984, 176.6366)$.
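The objective and constraints are simple enough to evaluate directly, which gives a quick sanity check on the quoted best solution. The sketch below assumes the variable order $(d_1, d_2, r, L)$ for $x_*$; the function name is hypothetical.

```python
import math

def pressure_vessel(d1, d2, r, L):
    """Return the objective f and the constraint values (g1, g2, g3, g4)."""
    f = (0.6224 * d1 * r * L + 1.7781 * d2 * r ** 2
         + 3.1661 * d1 ** 2 * L + 19.84 * d1 ** 2 * r)
    g = (
        -d1 + 0.0193 * r,
        -d2 + 0.00954 * r,
        -math.pi * r ** 2 * L - 4.0 * math.pi / 3.0 * r ** 3 + 1296000.0,
        L - 240.0,
    )
    return f, g

# Best solution quoted on the slide, rounded to four decimals.
f_star, g_star = pressure_vessel(0.8125, 0.4375, 42.0984, 176.6366)
print("f =", f_star)
print("g =", g_star)
```

Because $x_*$ is quoted to four decimal places only, the constraints that are active at the optimum ($g_1$ and $g_3$) evaluate to values very close to zero rather than exactly satisfying the inequality, so any feasibility check on the rounded point should allow a small tolerance.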

- 63. Dome Design
  - 120-bar dome: divided into 7 groups, 120 design elements, about 200 constraints (Kaveh and Talatahari 2010; Gandomi and Yang 2011).

- 64. Tower Design
  - 26-storey tower: 942 design elements, 244 nodal links, 59 groups/types, > 4000 nonlinear constraints (Kaveh & Talatahari 2010; Gandomi & Yang 2011).

- 67. Monte Carlo Methods
  - Random walk (a drunkard's walk): $u_{t+1} = \mu + u_t + w_t$, where $w_t$ is a random variable and $\mu$ is the drift.
  - For example, $w_t \sim N(0, \sigma^2)$ (Gaussian).
  - [Figure: a 1-D random walk over 500 steps, and a 2-D random walk.]
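A random walk with drift takes only a cumulative sum to simulate. The sketch below is a minimal illustration using Gaussian steps; the function name, the seed and the parameter values are assumptions.

```python
import numpy as np

def random_walk(steps, mu=0.0, sigma=1.0, u0=0.0, seed=0):
    """Simulate u_{t+1} = mu + u_t + w_t with w_t ~ N(0, sigma^2); returns steps+1 points."""
    rng = np.random.default_rng(seed)
    w = rng.normal(0.0, sigma, size=steps)
    increments = mu + w
    return u0 + np.concatenate(([0.0], np.cumsum(increments)))

path = random_walk(500, mu=0.0, sigma=1.0)
drift_only = random_walk(500, mu=0.1, sigma=0.0)   # no noise: a straight line
print(path[:5], path[-1])
print(drift_only[-1])
```

With `sigma=0` the walk reduces to pure drift, so after 500 steps it sits at $500\mu$; with noise switched on, the spread of the endpoint grows like $\sigma\sqrt{t}$.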

- 68. Markov Chains
  - Markov chain: the next state only depends on the current state and the transition probability.

    $$P(i, j) \equiv P(V_{t+1} = S_j \mid V_0 = S_p, \dots, V_t = S_i) = P(V_{t+1} = S_j \mid V_t = S_i), \quad \Longrightarrow \quad P_{ij} \pi_i^* = P_{ji} \pi_j^*,$$

    where $\pi^*$ = stationary probability distribution.
  - Examples: Brownian motion $u_{i+1} = \mu + u_i + \epsilon_i$, $\epsilon_i \sim N(0, \sigma^2)$.

- 69. Markov Chains
  - Example: Monopoly (board games).
  - [Monopoly animation.]

- 70. Markov Chain Monte Carlo
  - Landmarks: Monte Carlo method (1930s, 1945, from 1950s), e.g., Metropolis algorithm (1953), Metropolis-Hastings (1970).
  - Markov Chain Monte Carlo (MCMC) methods: a class of methods.
  - Really took off in the 1990s, now applied to a wide range of areas: physics, Bayesian statistics, climate change, machine learning, finance, economy, medicine, biology, materials and engineering ...

- 71. Convergence Behaviour
  - As the MCMC runs, convergence may be reached.
  - When does a chain converge? When to stop the chain ...?
  - Are multiple chains better than a single chain?

- 72. Convergence Behaviour
  - [Figure: several chains traced over time $t$, marked "converged".]
  - Multiple, interacting chains: multiple agents trace multiple, interacting Markov chains during the Monte Carlo process.

- 75. Analysis
  - Classifications of algorithms:
    - Trajectory-based: hill-climbing, simulated annealing, pattern search ...
    - Population-based: genetic algorithms, ant & bee algorithms, artificial immune systems, differential evolution, PSO, HS, FA, CS, ...
  - Ways of generating new moves/solutions:
    - Markov chains with different transition probabilities.
    - Trajectory-based $\Longrightarrow$ a single Markov chain; population-based $\Longrightarrow$ multiple, interacting chains.
    - Tabu search (with memory) $\Longrightarrow$ self-avoiding Markov chains.

- 76. Ergodicity
  - Markov chains & Markov processes: most theoretical studies use Markov chains/processes as a framework for convergence analysis.
  - A Markov chain is said to be regular if some positive power $k$ of the transition matrix $P$ has only positive elements.
  - A chain is called time-homogeneous if the change of its transition matrix $P$ is the same after each step, thus the transition probability after $k$ steps becomes $P^k$.
  - A chain is ergodic or irreducible if it is aperiodic and positive recurrent: it is possible to reach every state from any state.

- 78. Convergence Behaviour
  - As $k \to \infty$, we have the stationary probability distribution $\pi$:

    $$\pi = \pi P, \quad \Longrightarrow \quad \text{thus the first eigenvalue is always 1.}$$

  - Asymptotic convergence to optimality:

    $$\lim_{k \to \infty} \theta_k \to \theta_*, \quad \text{(with probability one)}.$$

  - The rate of convergence is usually determined by the second eigenvalue $0 < \lambda_2 < 1$.
  - An algorithm can converge, but may not necessarily be efficient, as the rate of convergence is typically low.
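For a small chain these statements can be verified numerically. The sketch below computes the stationary distribution as the left eigenvector of $P$ for eigenvalue 1 and reads off the second-largest eigenvalue modulus; the two-state transition matrix is a made-up example, and the helper names are hypothetical.

```python
import numpy as np

def stationary_distribution(P):
    """Left eigenvector of P for eigenvalue 1, normalised to sum to one."""
    vals, vecs = np.linalg.eig(P.T)
    k = np.argmin(np.abs(vals - 1.0))
    pi = np.real(vecs[:, k])
    return pi / pi.sum()

def second_eigenvalue_modulus(P):
    """Second-largest eigenvalue modulus, which governs the convergence rate."""
    mods = np.sort(np.abs(np.linalg.eigvals(P)))[::-1]
    return mods[1]

# Hypothetical two-state chain; every entry is positive, so the chain is regular.
P = np.array([[0.9, 0.1],
              [0.5, 0.5]])

pi = stationary_distribution(P)
lam2 = second_eigenvalue_modulus(P)
print("pi =", pi)                                  # satisfies pi = pi P
print("lambda_2 =", lam2)
print("P^50 =", np.linalg.matrix_power(P, 50))     # rows approach pi
```

For this example the distance between the rows of $P^k$ and $\pi$ shrinks roughly like $\lambda_2^k$, so a second eigenvalue close to one means slow convergence even though convergence is guaranteed.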

- 79. Convergence of GA
  - Important studies by Aytug et al. (1996) [1], Aytug and Koehler (2000) [2], Greenhalgh and Marshall (2000) [3], Gutjahr (2010) [4], etc.
  - The number of iterations $t(\zeta)$ in GA with a convergence probability of $\zeta$ can be estimated by

    $$t(\zeta) \le \frac{\ln(1 - \zeta)}{\ln\left\{ 1 - \min\left[ (1 - \mu)^{Ln}, \mu^{Ln} \right] \right\}},$$

    where $\mu$ = mutation rate, $L$ = string length, and $n$ = population size.
  - [1] H. Aytug, S. Bhattacharyya and G. J. Koehler, A Markov chain analysis of genetic algorithms with power of 2 cardinality alphabets, Euro. J. Operational Research, 96, 195-201 (1996).
  - [2] H. Aytug and G. J. Koehler, New stopping criterion for genetic algorithms, Euro. J. Operational Research, 126, 662-674 (2000).
  - [3] D. Greenhalgh & S. Marshall, Convergence criteria for genetic algorithms, SIAM J. Computing, 30, 269-282 (2000).
  - [4] W. J. Gutjahr, Convergence Analysis of Metaheuristics, Annals of Information Systems, 10, 159-187 (2010).
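Plugging numbers into the bound shows how quickly it grows with string length and population size. The sketch below is a direct transcription of the formula, assuming $Ln$ acts as an exponent on $\mu$ and $1 - \mu$; the function name and the sample parameter values are illustrative assumptions.

```python
import math

def ga_iteration_bound(zeta, mu, L, n):
    """Upper bound ln(1 - zeta) / ln(1 - min[(1 - mu)^(L*n), mu^(L*n)])."""
    p = min((1.0 - mu) ** (L * n), mu ** (L * n))
    # log1p keeps precision when p is tiny (p must still be representable, i.e. > 0).
    return math.log(1.0 - zeta) / math.log1p(-p)

print(ga_iteration_bound(zeta=0.5, mu=0.5, L=2, n=1))    # toy case
print(ga_iteration_bound(zeta=0.99, mu=0.1, L=4, n=2))   # already of order 1e8
```

Even for an 8-bit total string length the bound reaches hundreds of millions of iterations, which illustrates why a guarantee of convergence says little about practical efficiency.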

- 82. Multiobjective Metaheuristics. Asymptotic convergence of metaheuristics for multiobjective optimization (Villalobos-Arias et al. 2005) [6]: the transition matrix $P$ of a metaheuristic algorithm has a stationary distribution $\pi$ such that $$|P_{ij}^k - \pi_j| \le (1 - \zeta)^{k-1}, \quad \forall i, j, \quad (k = 1, 2, ...),$$ where $\zeta$ is a function of the mutation probability $\mu$, string length $L$ and population size $n$. For example, $\zeta = 2^{nL} \mu^{nL}$, so $\mu < 0.5$. Note: an algorithm satisfying this condition may not converge (for multiobjective optimization). However, an algorithm with elitism that obeys the above condition does converge.
  - [6] M. Villalobos-Arias, C. A. Coello Coello and O. Hernández-Lerma, Asymptotic convergence of metaheuristics ...
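
As a rough numerical feel for this bound, the sketch below evaluates the example $\zeta = 2^{nL} \mu^{nL} = (2\mu)^{nL}$ and the right-hand side $(1 - \zeta)^{k-1}$. The helper names and the parameter values are hypothetical and chosen only for illustration.

```python
def zeta_example(mu, n, L):
    """Example zeta = 2^(nL) * mu^(nL) = (2 mu)^(nL), valid for mu < 0.5."""
    if not 0.0 < mu < 0.5:
        raise ValueError("the example requires 0 < mu < 0.5")
    return (2.0 * mu) ** (n * L)

def mixing_bound(k, zeta):
    """Right-hand side (1 - zeta)^(k - 1) of the convergence bound."""
    return (1.0 - zeta) ** (k - 1)

zeta = zeta_example(mu=0.25, n=2, L=2)
for k in (1, 10, 100):
    print(k, mixing_bound(k, zeta))
```

Because $\zeta$ shrinks exponentially in $nL$, the bound decays very slowly for realistic string lengths and population sizes.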

- 84. Other results. Limited results on convergence analysis exist, concerning finite states/domains: ant colony optimization; generalized hill-climbers and simulated annealing; best-so-far convergence of cross-entropy optimization; the nested partition method; Tabu search; and, of course, combinatorial optimization. However, infinite states/domains and continuous problems pose more challenging tasks. Many open problems still need satisfactory answers.

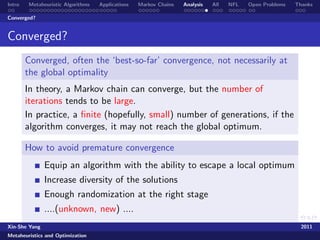

- 87. Converged? Convergence is often only 'best-so-far' convergence, not necessarily convergence to the global optimum. In theory, a Markov chain can converge, but the number of iterations tends to be large. In practice, an algorithm runs for a finite (hopefully small) number of generations; even if it converges, it may not reach the global optimum. How to avoid premature convergence:
  - Equip the algorithm with the ability to escape a local optimum.
  - Increase the diversity of the solutions.
  - Apply enough randomization at the right stage.
  - ... (unknown, new) ...

- 88. All. So many algorithms: what are the common characteristics? What are the key components? How should the different components be used and balanced? What controls the overall behaviour of an algorithm?

- 91. Exploration and Exploitation. Characteristics of metaheuristics: exploration and exploitation, or diversification and intensification.
  - Exploitation/intensification: intensive local search, exploiting local information. E.g., hill-climbing.
  - Exploration/diversification: exploratory global search, using randomization/stochastic components. E.g., hill-climbing with random restart.
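
The two examples named on this slide can be sketched in a few lines. This is an illustrative sketch under assumptions, not an implementation from the talk: the objective `bumpy`, the step size, the iteration counts and the function names are all hypothetical. `hill_climb` is the exploitation part (it accepts only local improvements), while `random_restart` adds exploration by drawing fresh starting points at random.

```python
import math
import random

def hill_climb(f, x, rng, step=0.1, iters=200):
    """Exploitation: accept a nearby candidate only if it lowers f."""
    fx = f(x)
    for _ in range(iters):
        cand = x + rng.uniform(-step, step)
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
    return x, fx

def random_restart(f, lo, hi, restarts=20, seed=0):
    """Exploration: restart hill-climbing from random points in [lo, hi]."""
    rng = random.Random(seed)
    best = None
    for _ in range(restarts):
        x0 = rng.uniform(lo, hi)           # randomized global move
        x, fx = hill_climb(f, x0, rng)     # local refinement
        if best is None or fx < best[1]:
            best = (x, fx)
    return best

def bumpy(x):
    """Hypothetical multimodal test objective with several local minima."""
    return x * x + 3.0 * math.sin(5.0 * x)

print(random_restart(bumpy, -5.0, 5.0, restarts=1))
print(random_restart(bumpy, -5.0, 5.0, restarts=20))
```

With the same seed, the first restart is identical in both calls, so the run with more restarts can only match or improve on the single climb.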

- 96. Summary. [Figure: algorithms placed on an exploration-exploitation diagram. Uniform search marks the exploration extreme and steepest descent the exploitation extreme. Labelled algorithms: CS, genetic algorithms, PSO/FA, SA, EP/ES, Ant/Bee, Tabu, Nelder-Mead, Newton-Raphson. Annotations: "Best?" and "Free lunch?"]

- 99. No-Free-Lunch (NFL) Theorems. Algorithm performance: any algorithm is as good/bad as random search when averaged over all possible problems/functions. Finite domains: no universally efficient algorithm! Any free taster or dessert? Yes and no (more later).

- 102. NFL Theorems (Wolpert and Macready 1997).
  - The search space is finite (though quite large), thus the space of possible "cost" values is also finite. Objective function $f: \mathcal{X} \to \mathcal{Y}$, with $\mathcal{F} = \mathcal{Y}^{\mathcal{X}}$ (space of all possible problems).
  - Assumptions: finite domain, closed under permutation (c.u.p.).
  - For $m$ iterations, the $m$ distinct visited points form a time-ordered set $d_m = \{(d_m^x(1), d_m^y(1)), ..., (d_m^x(m), d_m^y(m))\}$.
  - The performance of an algorithm $a$ iterated $m$ times on a cost function $f$ is denoted by $P(d_m^y | f, m, a)$.
  - For any pair of algorithms $a$ and $b$, the NFL theorem states $$\sum_f P(d_m^y | f, m, a) = \sum_f P(d_m^y | f, m, b).$$
  - Any algorithm is as good (bad) as a random search!
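
The NFL statement can be checked by brute force on a tiny finite domain. The sketch below is an illustration under assumptions: the domain `X`, the cost values `Y` and the two deterministic, non-revisiting algorithms `sweep` and `adaptive` are hypothetical choices. For deterministic algorithms, $P(d_m^y | f, m, a)$ is either 0 or 1, so the sum over $f$ reduces to counting how many functions produce each cost sequence.

```python
from collections import Counter
from itertools import product

X = [0, 1, 2]   # hypothetical finite search space
Y = [0, 1]      # hypothetical finite set of cost values

def run(algorithm, f, m):
    """Return the cost sequence d_m^y seen over m distinct evaluations."""
    visited, costs = [], []
    for _ in range(m):
        x = algorithm(visited, costs)
        assert x not in visited  # NFL assumes non-revisiting search
        visited.append(x)
        costs.append(f[x])
    return tuple(costs)

def sweep(visited, costs):
    """Algorithm a: visit the points in increasing order."""
    return min(x for x in X if x not in visited)

def adaptive(visited, costs):
    """Algorithm b: start at 2, then let the last cost decide the next point."""
    if not visited:
        return 2
    remaining = [x for x in X if x not in visited]
    return remaining[0] if costs[-1] == 0 else remaining[-1]

def histogram(algorithm, m):
    """Count, over all f in Y^X, how often each cost sequence occurs."""
    counts = Counter()
    for values in product(Y, repeat=len(X)):
        f = dict(zip(X, values))
        counts[run(algorithm, f, m)] += 1
    return counts

for m in (1, 2, 3):
    print(m, histogram(sweep, m) == histogram(adaptive, m))
```

Each cost sequence of length $m$ is produced by exactly $|\mathcal{Y}|^{|\mathcal{X}| - m}$ functions for both algorithms, so the two histograms coincide even though the algorithms visit points in different, cost-dependent orders.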

- 106. Proof Sketch. Wolpert and Macready's original proof by induction.

  For $m = 1$, $d_1 = \{d_1^x, d_1^y\}$, so the only possible value of $d_1^y$ is $f(d_1^x)$, and thus $P(d_1^y | f, m = 1, a) = \delta(d_1^y, f(d_1^x))$. This means

  $$\sum_f P(d_1^y | f, m = 1, a) = \sum_f \delta(d_1^y, f(d_1^x)) = |\mathcal{Y}|^{|\mathcal{X}| - 1},$$

  which is independent of algorithm $a$. [$|\mathcal{Y}|$ is the size of $\mathcal{Y}$.]

  If it is true for $m$, that is, $\sum_f P(d_m^y | f, m, a)$ is independent of $a$, then for $m + 1$ we have $d_{m+1} = d_m \cup \{x, f(x)\}$ with $d_{m+1}^x(m + 1) = x$ and $d_{m+1}^y(m + 1) = f(x)$. Thus, we get (Bayesian approach)

  $$P(d_{m+1}^y | f, m + 1, a) = P(d_{m+1}^y(m + 1) | d_m, f, m + 1, a) \, P(d_m^y | f, m + 1, a).$$

  So

  $$\sum_f P(d_{m+1}^y | f, m + 1, a) = \sum_{f, x} \delta(d_{m+1}^y(m + 1), f(x)) \, P(x | d_m^y, f, m + 1, a) \, P(d_m^y | f, m + 1, a).$$

  Using $P(x | d_m, a) = \delta(x, a(d_m))$ and $P(d_m | f, m + 1, a) = P(d_m | f, m, a)$, this leads to

  $$\sum_f P(d_{m+1}^y | f, m + 1, a) = \frac{1}{|\mathcal{Y}|} \sum_f P(d_m^y | f, m, a),$$

  which is also independent of $a$.

- 109. Free Lunches. NFL is not true for continuous domains (Auger and Teytaud 2009). Continuous free lunches $\Longrightarrow$ some algorithms are better than others! For example, for a 2D sphere function, an efficient algorithm needs only 4 iterations/steps to reach optimality (the global minimum) [7]. Revisiting algorithms: NFL assumes that the time-ordered set has $m$ distinct points (non-revisiting). Revisiting points breaks closure under permutation, so NFL does not hold (Marshall and Hinton 2010) [8].
  - [7] A. Auger and O. Teytaud, Continuous lunches are free plus the design of optimal optimization algorithms, Algorithmica, 57, 121-146 (2010).
  - [8] J. A. Marshall and T. G. Hinton, Beyond no free lunch: realistic algorithms for arbitrary problem classes, WCCI 2010 IEEE World Congress on Computational Intelligence, July 18-23, Barcelona, Spain, pp. 1319-1324.

- 112. More Free Lunches. Coevolutionary algorithms: a set of players (agents?) in self-play problems work together to produce a champion, like training a chess champion; free lunches exist (Wolpert and Macready 2005) [9]. [A single player tries to pursue the best next move, or, for two players, the fitness function depends on the moves of both players.] Multiobjective: "Some multiobjective optimizers are better than others" (Corne and Knowles 2003) [10] [results for finite domains only]. Free lunches are due to the archiver and generator.
  - [9] D. H. Wolpert and W. G. Macready, Coevolutionary free lunches, IEEE Trans. Evolutionary Computation, 9, 721-735 (2005).
  - [10] D. Corne and J. Knowles, Some multiobjective optimizers are better than others, Evolutionary Computation, CEC'03, 4, 2506-2512 (2003).

- 116. Open Problems.
  - Framework: need to develop a unified framework for algorithmic analysis (e.g., convergence).
  - Exploration and exploitation: what is the optimal balance between these two components? (50-50 or what?)
  - Performance measure: what are the best performance measures? Statistically? Why?
  - Convergence: does convergence analysis of algorithms for infinite, continuous domains require systematic approaches?

- 119. More Open Problems.
  - Free lunches: unproved for infinite or continuous domains for multiobjective optimization (possible free lunches!). What are the implications of NFL theorems in practice? If free lunches exist, how can the best algorithm(s) be found?
  - Knowledge: does problem-specific knowledge always help to find appropriate solutions? How can such knowledge be quantified?
  - Intelligent algorithms: is there any practical way to design truly intelligent, self-evolving algorithms?

- 120. Thanks.
  - Yang X. S., Engineering Optimization: An Introduction with Metaheuristic Applications, Wiley, (2010).
  - Yang X. S., Introduction to Computational Mathematics, World Scientific, (2008).
  - Yang X. S., Nature-Inspired Metaheuristic Algorithms, Luniver Press, (2008).
  - Yang X. S., Introduction to Mathematical Optimization: From Linear Programming to Metaheuristics, Cambridge Int. Science Publishing, (2008).
  - Yang X. S., Applied Engineering Optimization, Cambridge Int. Science Publishing, (2007).

- 121. IJMMNO. International Journal of Mathematical Modelling and Numerical Optimization (IJMMNO) http://www.inderscience.com/ijmmno Thank you!

- 122. Thank you! Questions?

![Simulated Annealing

Metal annealing to increase strength $\Longrightarrow$ simulated annealing.

Probabilistic move: $p \propto \exp[-E/(k_B T)]$.

$k_B$ = Boltzmann constant (e.g., $k_B = 1$), $T$ = temperature, $E$ = energy.

$E \propto f(x)$, $T = T_0 \alpha^t$ (cooling schedule), $0 < \alpha < 1$.

$T \to 0 \Longrightarrow p \to 0 \Longrightarrow$ hill climbing.

This is essentially a Markov chain.

Generation of new moves by Markov chain.](https://image.slidesharecdn.com/sea2011yang-130122094234-phpapp01-130122125440-phpapp02/85/Metaheuristic-Optimization-Algorithm-Analysis-and-Open-Problems-27-320.jpg)
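
The ingredients on this slide (probabilistic acceptance, geometric cooling, moves generated from the current state) fit into a short loop. The following is a minimal sketch under stated assumptions: $k_B = 1$, the energy is taken equal to $f$, the acceptance test uses the energy change of the proposed move, and the proposal is a uniform random step. The function name, default parameters and test objective are hypothetical.

```python
import math
import random

def simulated_annealing(f, x0, T0=1.0, alpha=0.95, steps=500,
                        step_size=0.5, seed=0):
    """Minimize f starting from x0; returns the best point and value seen."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    for t in range(steps):
        T = T0 * alpha ** t                  # cooling schedule T = T0 * alpha^t
        x_new = x + rng.uniform(-step_size, step_size)  # depends only on x
        f_new = f(x_new)
        delta = f_new - fx                   # energy change of the move
        # Improvements are always accepted; worse moves are accepted with
        # probability exp(-delta / T), which tends to 0 as T -> 0.
        if delta <= 0 or rng.random() < math.exp(-delta / T):
            x, fx = x_new, f_new
            if fx < best_f:
                best_x, best_f = x, fx
    return best_x, best_f

print(simulated_annealing(lambda x: x * x, x0=3.0))
```

Each new state depends only on the current state and the current temperature, which is why the process can be read as a Markov chain. With very long runs the temperature can underflow to zero, so a practical version would stop or clamp `T` before that point.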

![FA Convergence

For the firefly motion without the randomness term, we focus on a single agent and replace $x_j^t$ by $g$:

$x_i^{t+1} = x_i^t + \beta_0 e^{-\gamma r_i^2} (g - x_i^t)$,

where the distance $r_i = ||g - x_i^t||_2$.

In the 1-D case, we set $y_t = g - x_i^t$ and $u_t = \sqrt{\gamma} y_t$, so that

$u_{t+1} = u_t [1 - \beta_0 e^{-u_t^2}]$.

Analyzing this using the same methodology as for the logistic map $u_{t+1} = \lambda u_t (1 - u_t)$, we have a corresponding chaotic map, focusing on the transition from periodic multiple states to chaotic behaviour.](https://image.slidesharecdn.com/sea2011yang-130122094234-phpapp01-130122125440-phpapp02/85/Metaheuristic-Optimization-Algorithm-Analysis-and-Open-Problems-48-320.jpg)
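
The one-dimensional map above is easy to iterate numerically. The sketch below is illustrative only: the function name, starting value and the two $\beta_0$ values are assumptions. Linearizing the map at $u = 0$ gives the multiplier $1 - \beta_0$, so $u = 0$ is stable for $0 < \beta_0 < 2$ and unstable beyond that, where periodic states appear.

```python
import math

def iterate_fa_map(beta0, u0, steps):
    """Iterate u_{t+1} = u_t * (1 - beta0 * exp(-u_t^2)) and return u_steps."""
    u = u0
    for _ in range(steps):
        u = u * (1.0 - beta0 * math.exp(-u * u))
    return u

# beta0 = 1: the agent converges to the best solution (u -> 0).
print(iterate_fa_map(1.0, 1.0, 50))

# beta0 = 3: u = 0 is unstable and the iterates settle into a period-2
# oscillation between +u* and -u*, where u*^2 = ln(beta0 / 2).
print(iterate_fa_map(3.0, 1.0, 200), math.sqrt(math.log(1.5)))
```

Sweeping `beta0` over a range and plotting the long-run iterates would give the bifurcation picture that the comparison with the logistic map suggests.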

![Intro Metaheuristic Algorithms Applications Markov Chains Analysis All NFL Open Problems Thanks

Convergence of GA

Convergence of GA

Important studies by Aytug et al. (1996)1 , Aytug and Koehler

(2000)2 , Greenhalgh and Marschall (2000)3 , Gutjahr (2010),4 etc.5

The number of iterations t(ζ) in GA with a convergence

probability of ζ can be estimated by

ln(1 − ζ)

t(ζ) ≤ ,

ln 1 − min[(1 − µ)Ln , µLn ]

where µ=mutation rate, L=string length, and n=population size.

1

H. Aytug, S. Bhattacharrya and G. J. Koehler, A Markov chain analysis of genetic algorithms with power of

2 cardinality alphabets, Euro. J. Operational Research, 96, 195-201 (1996).

2

H. Aytug and G. J. Koehler, New stopping criterion for genetic algorithms, Euro. J. Operational research,

126, 662-674 (2000).

3

D. Greenhalgh & S. Marshal, Convergence criteria for genetic algorithms, SIAM J. Computing, 30, 269-282

(2000).

Xin-She Yang 2011

4

Metaheuristics and Gutjahr, Convergence Analysis of Metaheuristics Annals of Information Systems, 10, 159-187 (2010).

W. J. Optimization](https://image.slidesharecdn.com/sea2011yang-130122094234-phpapp01-130122125440-phpapp02/85/Metaheuristic-Optimization-Algorithm-Analysis-and-Open-Problems-79-320.jpg)

![Intro Metaheuristic Algorithms Applications Markov Chains Analysis All NFL Open Problems Thanks

Proof Sketch

Proof Sketch

Wolpert and Macready’s original proof by induction

x y y x

For m = 1, d1 = {d1 , d1 }, so the only possible value of d1 is f (d1 ), and thus

y x )). This means

δ(d1 , f (d1

y y

P(d1 |f , m = 1, a) = δ(d1 , f (d1 )) = |Y||X |−1 ,

x

f f

which is independent of algorithm a. [|Y| is the size of Y.]

y

If it is true for m, or f P(dm |f , m, a) is independent of a, then for m + 1, we

x y

have dm+1 = dm ∪ {x, f (x)} with dm+1 (m + 1) = x and dm+1 (m + 1) = f (x).

Thus, we get (Bayesian approach)

y y y

P(dm+1 |f , m + 1, a) = P(dm+1 (m + 1)|dm , f , m + 1, a)P(dm |f , m + 1, a).

y

So f P(dm+1 |f , m + 1, a) =

m (m + 1), f (x))P(x|d y , f , m + 1, a)P(d y |f , m + 1, a).

f ,x δ(dm+1 m m

Using P(x|dm , a) = δ(x, a(dm )) and P(dm |f , m + 1, a) = P(dm |f , m, a), this

leads to

y 1 y

P(dm+1 |f , m + 1, a) = P(dm |f , m, a),

f

|Y| f

which is also independent of a.

Xin-She Yang 2011

Metaheuristics and Optimization](https://image.slidesharecdn.com/sea2011yang-130122094234-phpapp01-130122125440-phpapp02/85/Metaheuristic-Optimization-Algorithm-Analysis-and-Open-Problems-103-320.jpg)



![More Free Lunches

Coevolutionary algorithms

A set of players (agents?) in self-play problems work together to produce a champion, like training a chess champion. Free lunches exist (Wolpert and Macready 2005).9

[A single player tries to pursue the best next move, or for two players, the fitness function depends on the moves of both players.]

Multiobjective

“Some multiobjective optimizers are better than others” (Corne and Knowles 2003).10 [results for finite domains only]

Free lunches due to archiver and generator.

9 D. H. Wolpert and W. G. Macready, Coevolutionary free lunches, IEEE Trans. Evolutionary Computation, 9, 721-735 (2005).

10 D. Corne and J. Knowles, Some multiobjective optimizers are better than others, Evolutionary Computation, CEC’03, 4, 2506-2512 (2003).](https://image.slidesharecdn.com/sea2011yang-130122094234-phpapp01-130122125440-phpapp02/85/Metaheuristic-Optimization-Algorithm-Analysis-and-Open-Problems-112-320.jpg)