MS SQL SERVER: Decision trees algorithm

2 likes4,698 views

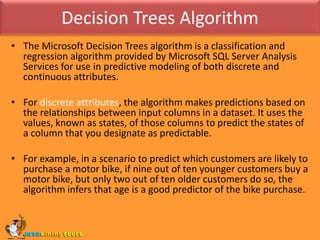

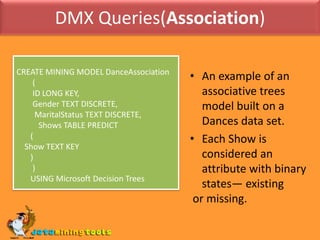

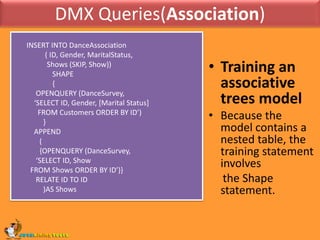

The document discusses Microsoft's decision trees algorithm and its use for classification, regression, and association mining. It provides examples of DMX queries for classification models predicting school plans based on student attributes, regression models predicting parent income, and association models for customer dance preferences. It also covers interpreting decision tree model content, parameters for controlling tree growth and shape, and stored procedures for viewing and manipulating decision tree models.

1 of 32

![DMX Queries(Classification)SELECT t.ID, SchoolPlans.SchoolPlans, PredictProbability(SchoolPlans) AS [Probability]FROM SchoolPlans PREDICTION JOIN OPENQUERY(SchoolPlans, ‘SELECT ID, Sex, IQ, ParentSupport, ParentIncome FROM NewStudents’) AS tON SchoolPlans.ParentIncome= t.ParentIncome ANDSchoolPlans.IQ = t.IQ ANDSchoolPlans.Sex= t.Sex ANDSchoolPlans.ParentSupport= t.ParentSupportPredicting the SchoolPlan for a new student.This query returns ID, SchoolPlans, andProbability.](https://image.slidesharecdn.com/decisiontreesalgorithm-100711090606-phpapp02/85/MS-SQL-SERVER-Decision-trees-algorithm-8-320.jpg)

![DMX Queries(Classification)SELECT t.ID, PredictHistogram(SchoolPlans) AS [SchoolPlans] FROM SchoolPlans PREDICTION JOIN OPENQUERY(SchoolPlans, ‘SELECT ID, Sex, IQ, ParentSupport, ParentIncome FROM NewStudents’) AS t ON SchoolPlans.ParentIncome= t.ParentIncome ANDSchoolPlans.IQ = t.IQ ANDSchoolPlans.Sex= t.Sex ANDSchoolPlans.ParentSupport= t.ParentSupportnQuery returns the histogram of the SchoolPlans predictions in the form of a nested table.Result of this query is shown in the next slide.](https://image.slidesharecdn.com/decisiontreesalgorithm-100711090606-phpapp02/85/MS-SQL-SERVER-Decision-trees-algorithm-9-320.jpg)

![DMX Queries(Association) INSERT INTO DanceAssociation ( ID, Gender, MaritalStatus, Shows (SKIP, Show)) SHAPE { OPENQUERY (DanceSurvey, ‘SELECT ID, Gender, [Marital Status] FROM Customers ORDER BY ID’) } APPEND ( {OPENQUERY (DanceSurvey, ‘SELECT ID, Show FROM Shows ORDER BY ID’)} RELATE ID TO ID )AS ShowsTraining an associative trees modelBecause the model contains a nested table, the training statement involves the Shape statement.](https://image.slidesharecdn.com/decisiontreesalgorithm-100711090606-phpapp02/85/MS-SQL-SERVER-Decision-trees-algorithm-15-320.jpg)

![Decision Tree ParametersCOMPLEXITY _PENALTYis a floating point number with the range [0,1] which controls how much penalty the algorithm applies to complex trees.When the value of this parameter is set close to 0, there is a lower penalty for the tree growth, and you may see large trees.When its value is set close to 1, the tree growth is penalized heavily, and the resulting trees are relatively small.If there are fewer than 10 input attributes, the value is set to 0.5.if there are more than 100 attributes, the value is set to 0.99. If you have between 10 and 100 input attributes, the value is set to 0.9.](https://image.slidesharecdn.com/decisiontreesalgorithm-100711090606-phpapp02/85/MS-SQL-SERVER-Decision-trees-algorithm-23-320.jpg)

Ad

Recommended

MS SQL SERVER:Microsoft neural network and logistic regression

MS SQL SERVER:Microsoft neural network and logistic regressionDataminingTools Inc This document provides an overview of Microsoft Neural Network and Logistic Regression algorithms. It describes how neural networks can detect nonlinear relationships in data and are composed of an input, hidden and output layer. The Microsoft Neural Network algorithm uses backpropagation to update weights and minimize errors. Parameters like maximum inputs/outputs, sample size, and hidden node ratio can be configured. Examples of DMX queries are provided to create models for predicting customer attributes from demographic and technology usage data.

MS SQL SERVER: Microsoft sequence clustering and association rules

MS SQL SERVER: Microsoft sequence clustering and association rulesDataminingTools Inc This document provides an overview of Microsoft Sequence Clustering and Association Rules algorithms in SQL Server Analysis Services. It describes how sequence clustering models group sequences into clusters based on identical transitions between states. It also explains how association rule mining analyzes frequent patterns in transactional data to generate rules and make recommendations. Key parameters for each algorithm like minimum support, cluster count, and maximum itemset size are also outlined.

WEKA: Data Mining Input Concepts Instances And Attributes

WEKA: Data Mining Input Concepts Instances And AttributesDataminingTools Inc This document discusses concepts related to data mining input, including concepts, instances, and attributes. It also covers different types of learning in data mining like classification, numeric prediction, clustering, and association rules. Key steps to prepare data for mining are discussed, such as data assembly, integration, cleaning, and preparation. Formats for data like ARFF files and handling sparse data are also covered.

Data mining techniques using weka

Data mining techniques using wekarathorenitin87 This document discusses classification and clustering techniques using the Weka data mining tool. It begins with an introduction to Weka and its capabilities for classification, clustering, and other data mining functions. It then provides an example of using Weka's J48 decision tree algorithm to classify iris flower samples based on sepal and petal attributes. Finally, it demonstrates k-means clustering on customer purchase data from a BMW dealership to group customers into five clusters based on their buying behaviors.

Weka_Manual_Sagar

Weka_Manual_SagarSagar Kumar This document provides an overview of using the WEKA data mining tool to perform two common techniques: clustering and linear regression. It first introduces WEKA and its interfaces. It then provides details on k-means clustering, including how to implement it in WEKA on a sample BMW customer dataset. This identifies five distinct customer clusters. The document also explains linear regression and uses a house pricing dataset in WEKA to build a regression model to predict house value based on features.

MS SQL SERVER: Microsoft naive bayes algorithm

MS SQL SERVER: Microsoft naive bayes algorithmDataminingTools Inc The Naive Bayes algorithm is a classification algorithm in SQL Server that uses Bayes' theorem with the assumption of independence between attributes. It is less computationally intensive than other algorithms. A Naive Bayes model can be explored using different views to understand attribute relationships and feature importance. DMX queries can retrieve model metadata, content, and predictions. Parameters like maximum input attributes control the algorithm behavior.

Explanations in Data Systems

Explanations in Data SystemsFotis Savva This document discusses explanations in data systems. It provides examples of explaining outliers in datasets and answers to database queries. It also covers representing explanations as attribute-value pairs or predicates, efficiently finding explanations using techniques like frequent itemsets or decision trees, and ranking explanations based on their influence. The document proposes research ideas around assisting data exploration by providing explanations for aggregate query results over ranges.

Preparing your data for Machine Learning with Feature Scaling

Preparing your data for Machine Learning with Feature ScalingRahul K Chauhan Feature scaling, including standardization and normalization, is crucial for ensuring that independent variables have a uniform scale, which is essential for machine learning algorithms that rely on distance calculations. Standardization rescales values to have a mean of 0 and a standard deviation of 1, while normalization adjusts attributes to a range of 0 to 1. Different algorithms have varying requirements for scaling techniques, with standardization preferred for linear regression and normalization for methods like SVM and k-nearest neighbors.

[M4A2] Data Analysis and Interpretation Specialization

[M4A2] Data Analysis and Interpretation Specialization Andrea Rubio This document describes a random forest analysis performed to evaluate factors influencing student alcohol consumption. The analysis involved: 1) cleaning and preprocessing a student dataset; 2) defining predictor and response variables; 3) running a random forest model and calculating accuracy; 4) identifying the most important predictors. The random forest accuracy was 67% and the top 3 predictors were absences, age, and time spent with friends. Adding more trees improved accuracy up to 72% suggesting the random forest approach was appropriate.

Processes and threads

Processes and threadsSatyamevjayte Haxor Processes are heavyweight flows of execution that run concurrently in separate address spaces, while threads are lightweight flows that run concurrently within the same process address space. Active classes represent concurrent flows of control and can be stereotyped as <<process>> or <<thread>>. There are four types of communication between active and passive objects: active to active, active to passive, passive to active, and passive to passive. Synchronization coordinates concurrent flows using sequential, guarded, or concurrent approaches.

Feed forward neural network for sine

Feed forward neural network for sineijcsa This document presents a method for designing a feed-forward neural network to approximate the sine function using a symmetric table addition method (STAM) integrated with LabVIEW and MATLAB. The proposed neural network achieved a training accuracy of 100% and testing accuracy of 97.22%, demonstrating effective performance in real-time applications through a designed graphical user interface. The work outlines the architecture, training process, and results, highlighting the significance of STAM in optimizing neural network synapses.

Collaborative Filtering 2: Item-based CF

Collaborative Filtering 2: Item-based CFYusuke Yamamoto This document discusses item-based collaborative filtering for recommender systems. It describes how item-based collaborative filtering works by predicting a target user's rating for an item based on the ratings of similar items. It highlights advantages over user-based filtering like lower computational cost and more stable similarity computations. Key aspects covered include using cosine similarity to calculate item similarities, adjusting for individual rating biases, selecting the top K similar items, and predicting ratings based on similar items' ratings.

WEKA: Algorithms The Basic Methods

WEKA: Algorithms The Basic MethodsDataminingTools Inc 1) The 1R algorithm generates a one-level decision tree by considering each attribute individually and assigning the majority class to each branch. It chooses the attribute with the minimum classification error.

2) Naive Bayes classification assumes attributes are independent and calculates the probability of each class using Bayes' rule. It handles missing and numeric attributes.

3) Decision tree algorithms like ID3 use a divide-and-conquer approach, recursively splitting the data on attributes that maximize information gain or gain ratio at each node.

4) Rule-based algorithms like PRISM generate rules to cover instances of each class sequentially, maximizing the ratio of correctly covered to total covered instances at each step.

Data Science - Part XVII - Deep Learning & Image Processing

Data Science - Part XVII - Deep Learning & Image ProcessingDerek Kane The document discusses image processing for machine learning, particularly focusing on deep learning architectures like convolutional neural networks and their applications in text recognition and image segmentation. It outlines practical examples, such as detecting handwritten text and automating test grading, while emphasizing the challenges of accurately recognizing objects in complex images. The presentation also touches on advancements in deep learning, stating its significant impact on various technologies and fields, including self-driving cars and facial recognition.

04 Classification in Data Mining

04 Classification in Data MiningValerii Klymchuk Chapter 4 discusses classification as a method of predicting attribute values into discrete classes, highlighting the importance of training data and various classification techniques such as statistical methods, distance-based algorithms, decision trees, and neural networks. It addresses the issue of overfitting and the importance of accuracy measurement through confusion matrices and operating characteristic curves. Additionally, the chapter covers specific algorithms and models, including ID3, C4.5, and logistic regression, along with their advantages and disadvantages.

Matrix Factorization

Matrix FactorizationYusuke Yamamoto Matrix factorization techniques can be used to address some of the limitations of traditional collaborative filtering approaches for recommender systems. Matrix factorization decomposes the user-item rating matrix into the product of two lower-dimensional matrices, one representing latent factors for users and the other for items. This reduced dimensionality addresses data sparsity and scalability issues. Specifically, singular value decomposition is often used to perform this matrix factorization, which can approximate the original rating matrix while ignoring less important singular values and factor vectors. The decomposed matrices can then be multiplied to predict unknown user ratings.

The solution of problem of parameterization of the proximity function in ace ...

The solution of problem of parameterization of the proximity function in ace ...eSAT Journals This document presents a new approach for defining the proximity function in algorithms for calculating estimates (ACE) in pattern recognition, utilizing genetic algorithms to optimize membership function parameters. It discusses the problems of comparing fuzzy attributes and outlines the methodology for overcoming these challenges using evolutionary algorithms. Key contributions include the formulation of the proximity function and the genetic algorithm process for optimizing parameters, enhancing the recognition capabilities of various object features.

Ijartes v1-i2-006

Ijartes v1-i2-006IJARTES This document compares four clustering algorithms (K-means, hierarchical, EM, and density-based) using the WEKA tool. It applies the algorithms to a dataset of software classes and evaluates them based on number of clusters, time to build models, squared errors, and log likelihood. The results show that K-means performs best in terms of time to build models, while density-based clustering performs best in terms of log likelihood. Overall, the document concludes that K-means is the best algorithm for this dataset because it balances low runtime and good clustering accuracy.

Data Science Interview Questions | Data Science Interview Questions And Answe...

Data Science Interview Questions | Data Science Interview Questions And Answe...Simplilearn The document contains various data science interview questions covering topics such as measures and dimensions, logistic regression, decision trees, random forests, model overfitting, features selection methods, handling missing data, and accuracy calculations using a confusion matrix. It also includes comparisons between supervised and unsupervised learning, details on recommender systems, dimensionality reduction, p-values, and outlier handling techniques. Key programming examples and methods for evaluating models are presented throughout.

Feature selection on boolean symbolic objects

Feature selection on boolean symbolic objectsijcsity The document discusses the importance of feature selection in data mining, particularly for complex datasets represented by Boolean symbolic objects (BSOs). It introduces a new algorithm called minset-plus, which improves upon existing feature selection methods by optimizing the discrimination process for symbolic objects, allowing for the selection of relevant features while reducing computational complexity. The paper also highlights the need for effective dissimilarity measures and proposes criteria for evaluating discrimination power among various features.

An integrated mechanism for feature selection

An integrated mechanism for feature selectionsai kumar This document discusses an integrated mechanism for feature selection and fuzzy rule extraction for classification problems. It aims to select a useful set of features that can solve the classification problem while designing an interpretable fuzzy rule-based system. The mechanism is an embedded feature selection method, meaning feature selection is integrated into the rule base formation process. This allows it to account for possible nonlinear interactions between features and between features and the modeling tool. The authors demonstrate the effectiveness of the proposed method on several datasets.

Clustering and Regression using WEKA

Clustering and Regression using WEKAVijaya Prabhu This document demonstrates clustering and regression techniques using the Weka data mining software. It shows how Weka can be used to cluster 600 bank customer records into 6 groups based on attributes like age, income, family status, etc. It also uses Weka to create a linear regression model to predict house prices based on attributes like size, number of bedrooms, lot size, and more. Overall, the document shows how Weka allows easy implementation of common data mining algorithms and visualization of results.

Data mining: Classification and prediction

Data mining: Classification and predictionDataminingTools Inc This document discusses various machine learning techniques for classification and prediction. It covers decision tree induction, tree pruning, Bayesian classification, Bayesian belief networks, backpropagation, association rule mining, and ensemble methods like bagging and boosting. Classification involves predicting categorical labels while prediction predicts continuous values. Key steps for preparing data include cleaning, transformation, and comparing different methods based on accuracy, speed, robustness, scalability, and interpretability.

Catching co occurrence information using word2vec-inspired matrix factorization

Catching co occurrence information using word2vec-inspired matrix factorizationhyunsung lee - Factorizing a PMI matrix involves using matrix factorization techniques to represent words in a latent space based on their co-occurrence information, similar to word2vec.

- Recommender systems use matrix factorization to represent users and items numerically in a latent space to predict target values like ratings. Known ratings are used to find latent vectors for users and items that best approximate the rating matrix.

- These latent vectors can then be used to predict unknown ratings by taking the dot product of the user and item vectors.

Branch And Bound and Beam Search Feature Selection Algorithms

Branch And Bound and Beam Search Feature Selection AlgorithmsChamin Nalinda Loku Gam Hewage This document discusses feature selection algorithms, specifically branch and bound and beam search algorithms. It provides an overview of feature selection, discusses the fundamentals and objectives of feature selection. It then goes into more detail about how branch and bound works, including pseudocode, a flowchart, and an example. It also discusses beam search and compares branch and bound to other algorithms. In summary, it thoroughly explains branch and bound and beam search algorithms for performing feature selection on datasets.

Bayesian classifiers programmed in sql

Bayesian classifiers programmed in sqlingenioustech This document describes implementing Bayesian classifiers in SQL. It introduces two classifiers: Naive Bayes (NB) and a Bayesian classifier based on class decomposition using K-means clustering (BKM). For both classifiers, it considers model computation and scoring a data set based on the model. It studies table layouts, indexing alternatives, and query optimizations to efficiently transform equations into SQL queries. Experiments evaluate classification accuracy, optimizations, and scalability. The BKM classifier achieves high accuracy and scales linearly, outperforming NB and decision trees. Distance computation is significantly accelerated through denormalized storage and pivoting.

Hyperparameter optimization with approximate gradient

Hyperparameter optimization with approximate gradientFabian Pedregosa This document discusses hyperparameter optimization using approximate gradients. It introduces the problem of optimizing hyperparameters along with model parameters. While model parameters can be estimated from data, hyperparameters require methods like cross-validation. The document proposes using approximate gradients to optimize hyperparameters more efficiently than costly methods like grid search. It derives the gradient of the objective with respect to hyperparameters and presents an algorithm called HOAG that approximates this gradient using inexact solutions. The document analyzes HOAG's convergence and provides experimental results comparing it to other hyperparameter optimization methods.

Portavocía en redes sociales

Portavocía en redes socialesMuévete en bici por Madrid El documento aborda el impacto de las redes sociales en la influencia y reputación personal, destacando la importancia de una estrategia digital integral que vaya más allá de las redes. Se presentan recomendaciones sobre cómo gestionar contenido, crear interacciones y manejar crises en línea, enfatizando la responsabilidad y el respeto en la publicación de información. Además, se sugiere el uso de herramientas tecnológicas para mejorar la conexión, el aprendizaje y la colaboración en el ámbito digital.

Public Transportation

Public Transportationdpapageorge We should protect our home by reducing global warming and air pollution through public transportation. An application will be designed to make public transportation easier by using an iPhone's GPS, compass and augmented reality to find the closest public transit option and view schedules and locations. Augmented reality superimposes graphics over the real world in real-time using mobile internet, video tracking and a camera.

Apresentação Red Advisers

Apresentação Red Advisersmezkita A Red Advisers é uma empresa de consultoria multidisciplinar especializada em imobiliário e turismo, com foco em mercados emergentes como Moçambique, Angola e Cabo Verde. A missão da empresa é oferecer serviços de excelência com uma equipe altamente qualificada, visando garantir o sucesso nos projetos dos clientes. A empresa opera em diversas áreas, incluindo consultoria estratégica, avaliação de imóveis, estudos de mercado e due diligence técnica.

More Related Content

What's hot (17)

[M4A2] Data Analysis and Interpretation Specialization

[M4A2] Data Analysis and Interpretation Specialization Andrea Rubio This document describes a random forest analysis performed to evaluate factors influencing student alcohol consumption. The analysis involved: 1) cleaning and preprocessing a student dataset; 2) defining predictor and response variables; 3) running a random forest model and calculating accuracy; 4) identifying the most important predictors. The random forest accuracy was 67% and the top 3 predictors were absences, age, and time spent with friends. Adding more trees improved accuracy up to 72% suggesting the random forest approach was appropriate.

Processes and threads

Processes and threadsSatyamevjayte Haxor Processes are heavyweight flows of execution that run concurrently in separate address spaces, while threads are lightweight flows that run concurrently within the same process address space. Active classes represent concurrent flows of control and can be stereotyped as <<process>> or <<thread>>. There are four types of communication between active and passive objects: active to active, active to passive, passive to active, and passive to passive. Synchronization coordinates concurrent flows using sequential, guarded, or concurrent approaches.

Feed forward neural network for sine

Feed forward neural network for sineijcsa This document presents a method for designing a feed-forward neural network to approximate the sine function using a symmetric table addition method (STAM) integrated with LabVIEW and MATLAB. The proposed neural network achieved a training accuracy of 100% and testing accuracy of 97.22%, demonstrating effective performance in real-time applications through a designed graphical user interface. The work outlines the architecture, training process, and results, highlighting the significance of STAM in optimizing neural network synapses.

Collaborative Filtering 2: Item-based CF

Collaborative Filtering 2: Item-based CFYusuke Yamamoto This document discusses item-based collaborative filtering for recommender systems. It describes how item-based collaborative filtering works by predicting a target user's rating for an item based on the ratings of similar items. It highlights advantages over user-based filtering like lower computational cost and more stable similarity computations. Key aspects covered include using cosine similarity to calculate item similarities, adjusting for individual rating biases, selecting the top K similar items, and predicting ratings based on similar items' ratings.

WEKA: Algorithms The Basic Methods

WEKA: Algorithms The Basic MethodsDataminingTools Inc 1) The 1R algorithm generates a one-level decision tree by considering each attribute individually and assigning the majority class to each branch. It chooses the attribute with the minimum classification error.

2) Naive Bayes classification assumes attributes are independent and calculates the probability of each class using Bayes' rule. It handles missing and numeric attributes.

3) Decision tree algorithms like ID3 use a divide-and-conquer approach, recursively splitting the data on attributes that maximize information gain or gain ratio at each node.

4) Rule-based algorithms like PRISM generate rules to cover instances of each class sequentially, maximizing the ratio of correctly covered to total covered instances at each step.

Data Science - Part XVII - Deep Learning & Image Processing

Data Science - Part XVII - Deep Learning & Image ProcessingDerek Kane The document discusses image processing for machine learning, particularly focusing on deep learning architectures like convolutional neural networks and their applications in text recognition and image segmentation. It outlines practical examples, such as detecting handwritten text and automating test grading, while emphasizing the challenges of accurately recognizing objects in complex images. The presentation also touches on advancements in deep learning, stating its significant impact on various technologies and fields, including self-driving cars and facial recognition.

04 Classification in Data Mining

04 Classification in Data MiningValerii Klymchuk Chapter 4 discusses classification as a method of predicting attribute values into discrete classes, highlighting the importance of training data and various classification techniques such as statistical methods, distance-based algorithms, decision trees, and neural networks. It addresses the issue of overfitting and the importance of accuracy measurement through confusion matrices and operating characteristic curves. Additionally, the chapter covers specific algorithms and models, including ID3, C4.5, and logistic regression, along with their advantages and disadvantages.

Matrix Factorization

Matrix FactorizationYusuke Yamamoto Matrix factorization techniques can be used to address some of the limitations of traditional collaborative filtering approaches for recommender systems. Matrix factorization decomposes the user-item rating matrix into the product of two lower-dimensional matrices, one representing latent factors for users and the other for items. This reduced dimensionality addresses data sparsity and scalability issues. Specifically, singular value decomposition is often used to perform this matrix factorization, which can approximate the original rating matrix while ignoring less important singular values and factor vectors. The decomposed matrices can then be multiplied to predict unknown user ratings.

The solution of problem of parameterization of the proximity function in ace ...

The solution of problem of parameterization of the proximity function in ace ...eSAT Journals This document presents a new approach for defining the proximity function in algorithms for calculating estimates (ACE) in pattern recognition, utilizing genetic algorithms to optimize membership function parameters. It discusses the problems of comparing fuzzy attributes and outlines the methodology for overcoming these challenges using evolutionary algorithms. Key contributions include the formulation of the proximity function and the genetic algorithm process for optimizing parameters, enhancing the recognition capabilities of various object features.

Ijartes v1-i2-006

Ijartes v1-i2-006IJARTES This document compares four clustering algorithms (K-means, hierarchical, EM, and density-based) using the WEKA tool. It applies the algorithms to a dataset of software classes and evaluates them based on number of clusters, time to build models, squared errors, and log likelihood. The results show that K-means performs best in terms of time to build models, while density-based clustering performs best in terms of log likelihood. Overall, the document concludes that K-means is the best algorithm for this dataset because it balances low runtime and good clustering accuracy.

Data Science Interview Questions | Data Science Interview Questions And Answe...

Data Science Interview Questions | Data Science Interview Questions And Answe...Simplilearn The document contains various data science interview questions covering topics such as measures and dimensions, logistic regression, decision trees, random forests, model overfitting, features selection methods, handling missing data, and accuracy calculations using a confusion matrix. It also includes comparisons between supervised and unsupervised learning, details on recommender systems, dimensionality reduction, p-values, and outlier handling techniques. Key programming examples and methods for evaluating models are presented throughout.

Feature selection on boolean symbolic objects

Feature selection on boolean symbolic objectsijcsity The document discusses the importance of feature selection in data mining, particularly for complex datasets represented by Boolean symbolic objects (BSOs). It introduces a new algorithm called minset-plus, which improves upon existing feature selection methods by optimizing the discrimination process for symbolic objects, allowing for the selection of relevant features while reducing computational complexity. The paper also highlights the need for effective dissimilarity measures and proposes criteria for evaluating discrimination power among various features.

An integrated mechanism for feature selection

An integrated mechanism for feature selectionsai kumar This document discusses an integrated mechanism for feature selection and fuzzy rule extraction for classification problems. It aims to select a useful set of features that can solve the classification problem while designing an interpretable fuzzy rule-based system. The mechanism is an embedded feature selection method, meaning feature selection is integrated into the rule base formation process. This allows it to account for possible nonlinear interactions between features and between features and the modeling tool. The authors demonstrate the effectiveness of the proposed method on several datasets.

Clustering and Regression using WEKA

Clustering and Regression using WEKAVijaya Prabhu This document demonstrates clustering and regression techniques using the Weka data mining software. It shows how Weka can be used to cluster 600 bank customer records into 6 groups based on attributes like age, income, family status, etc. It also uses Weka to create a linear regression model to predict house prices based on attributes like size, number of bedrooms, lot size, and more. Overall, the document shows how Weka allows easy implementation of common data mining algorithms and visualization of results.

Data mining: Classification and prediction

Data mining: Classification and predictionDataminingTools Inc This document discusses various machine learning techniques for classification and prediction. It covers decision tree induction, tree pruning, Bayesian classification, Bayesian belief networks, backpropagation, association rule mining, and ensemble methods like bagging and boosting. Classification involves predicting categorical labels while prediction predicts continuous values. Key steps for preparing data include cleaning, transformation, and comparing different methods based on accuracy, speed, robustness, scalability, and interpretability.

Catching co occurrence information using word2vec-inspired matrix factorization

Catching co occurrence information using word2vec-inspired matrix factorizationhyunsung lee - Factorizing a PMI matrix involves using matrix factorization techniques to represent words in a latent space based on their co-occurrence information, similar to word2vec.

- Recommender systems use matrix factorization to represent users and items numerically in a latent space to predict target values like ratings. Known ratings are used to find latent vectors for users and items that best approximate the rating matrix.

- These latent vectors can then be used to predict unknown ratings by taking the dot product of the user and item vectors.

Branch And Bound and Beam Search Feature Selection Algorithms

Branch And Bound and Beam Search Feature Selection AlgorithmsChamin Nalinda Loku Gam Hewage This document discusses feature selection algorithms, specifically branch and bound and beam search algorithms. It provides an overview of feature selection, discusses the fundamentals and objectives of feature selection. It then goes into more detail about how branch and bound works, including pseudocode, a flowchart, and an example. It also discusses beam search and compares branch and bound to other algorithms. In summary, it thoroughly explains branch and bound and beam search algorithms for performing feature selection on datasets.

Viewers also liked (20)

Bayesian classifiers programmed in sql

Bayesian classifiers programmed in sqlingenioustech This document describes implementing Bayesian classifiers in SQL. It introduces two classifiers: Naive Bayes (NB) and a Bayesian classifier based on class decomposition using K-means clustering (BKM). For both classifiers, it considers model computation and scoring a data set based on the model. It studies table layouts, indexing alternatives, and query optimizations to efficiently transform equations into SQL queries. Experiments evaluate classification accuracy, optimizations, and scalability. The BKM classifier achieves high accuracy and scales linearly, outperforming NB and decision trees. Distance computation is significantly accelerated through denormalized storage and pivoting.

Hyperparameter optimization with approximate gradient

Hyperparameter optimization with approximate gradientFabian Pedregosa This document discusses hyperparameter optimization using approximate gradients. It introduces the problem of optimizing hyperparameters along with model parameters. While model parameters can be estimated from data, hyperparameters require methods like cross-validation. The document proposes using approximate gradients to optimize hyperparameters more efficiently than costly methods like grid search. It derives the gradient of the objective with respect to hyperparameters and presents an algorithm called HOAG that approximates this gradient using inexact solutions. The document analyzes HOAG's convergence and provides experimental results comparing it to other hyperparameter optimization methods.

Portavocía en redes sociales

Portavocía en redes socialesMuévete en bici por Madrid El documento aborda el impacto de las redes sociales en la influencia y reputación personal, destacando la importancia de una estrategia digital integral que vaya más allá de las redes. Se presentan recomendaciones sobre cómo gestionar contenido, crear interacciones y manejar crises en línea, enfatizando la responsabilidad y el respeto en la publicación de información. Además, se sugiere el uso de herramientas tecnológicas para mejorar la conexión, el aprendizaje y la colaboración en el ámbito digital.

Public Transportation

Public Transportationdpapageorge We should protect our home by reducing global warming and air pollution through public transportation. An application will be designed to make public transportation easier by using an iPhone's GPS, compass and augmented reality to find the closest public transit option and view schedules and locations. Augmented reality superimposes graphics over the real world in real-time using mobile internet, video tracking and a camera.

Apresentação Red Advisers

Apresentação Red Advisersmezkita A Red Advisers é uma empresa de consultoria multidisciplinar especializada em imobiliário e turismo, com foco em mercados emergentes como Moçambique, Angola e Cabo Verde. A missão da empresa é oferecer serviços de excelência com uma equipe altamente qualificada, visando garantir o sucesso nos projetos dos clientes. A empresa opera em diversas áreas, incluindo consultoria estratégica, avaliação de imóveis, estudos de mercado e due diligence técnica.

Data Applied:Decision Trees

Data Applied:Decision TreesDataminingTools Inc Decision trees are models that use divide and conquer techniques to split data into branches based on attribute values at each node. To construct a decision tree, an attribute is selected to label the root node using the maximum information gain approach. Then the process is recursively repeated on the branches. However, highly branching attributes may be favored. To address this, gain ratio is used which compensates for number of branches by considering split information. The example demonstrates calculating information gain and gain ratio to select the best attribute to split on at each node.

Drc 2010 D.J.Pawlik

Drc 2010 D.J.Pawlikslrommel This work summarizes the fabrication and characterization of sub-micron InGaAs Esaki tunnel diodes with record high peak current densities. Two types of diodes were fabricated with different doping levels. Extensive SEM analysis was required to accurately measure the small junction areas down to 0.015 mm2. The diodes exhibited peak current densities as high as 9.75 mA/mm2 without degradation, even at small sizes. Series resistance effects were minimized and characterized for the sub-micron devices.

MS Sql Server: Reporting introduction

MS Sql Server: Reporting introductionDataminingTools Inc SQL Server 2008 Reporting Services allows users to create reports with any structure using a unique data format and integrated with Microsoft Office SharePoint Services for centralized delivery and management. It provides enhanced performance, scalability, and visualization capabilities. The reporting lifecycle involves authoring reports using Report Designer or Report Builder, managing reports on a central report server, and delivering reports via e-mail, web portal, or custom applications.

Bind How To

Bind How Tocntlinux The document provides instructions for configuring a DNS server on a Linux server. It describes installing the server without a desktop environment and ensuring the DNS package is selected. It explains that the /etc/named.conf file defines the zones and /var/named stores the zone files. It outlines steps to copy a sample zone file, edit it to reference the new domain, link it, and add the zone to named.conf. Finally, it describes starting the DNS service, checking configuration by resolving names, and getting approval from an instructor.

Huidige status van de testtaal TTCN-3

Huidige status van de testtaal TTCN-3Erik Altena Door de groeiende complexiteit en verwevenheid van softwaresystemen neemt het belang van geautomatiseerd testen toe. In een eerder artikel in Informatie is TTCN-3 geschetst als de testtaal van de toekomst, mede ingegeven door het succesvolle TT-Medal-project. Vijf jaar later, in het jaar waarin TTCN-3 tien jaar bestond, vonden Jos en ik het een goed moment om de balans op te maken. Blijkt het inderdaad de silver bullet te zijn of niet?

R Datatypes

R DatatypesDataminingTools Inc R supports various data types including numbers, strings, factors, data frames, and tables. Numbers and strings can be assigned to variables using <- or =. Factors represent discrete groupings. Lists are ordered collections of objects that can contain different data types. Data frames are lists that behave like tables, with each component being a vector of the same length. The table() function generates frequency tables from factors.

MySql:Basics

MySql:BasicsDataminingTools Inc Mysql is a popular open source database system. It can be downloaded from the mysql website for free. Mysql allows users to create, manipulate and store data in databases. A database contains tables which hold related information. Structured Query Language (SQL) is used to perform operations like querying and manipulating data within MySQL databases. Some common SQL queries include SELECT, INSERT, UPDATE and DELETE.

Data Applied:Tree Maps

Data Applied:Tree MapsDataminingTools Inc Treemaps are a visualization technique that displays hierarchical data as a set of nested rectangles. Each branch of the tree is represented by a rectangle, with smaller rectangles representing sub-branches. Leaf nodes are sized proportionally and colored to show additional data dimensions. Standard treemaps can result in thin, elongated rectangles. Squarified treemaps aim to reduce the aspect ratio and "squarify" the rectangles for a more efficient use of space and easier comparisons. The Data-Applied API supports executing treemaps using the RootTreeMapTaskInfo entity and a sequence of messages.

How To Make Pb J

How To Make Pb Jspencer shanks This document provides instructions for making a peanut butter and jelly sandwich in 3 steps: spread peanut butter on one slice of bread and jelly on the other slice, then put the two slices together to make a sandwich.

Festivals Refuerzo

Festivals Refuerzoguest9536ef5 The document provides instructions for a school project where students will work in groups to research the origins, customs, food, music, clothing, and other aspects of various festivals celebrated in Britain. It lists websites for the students to use to research festivals like Halloween, Christmas, New Year's Eve, Valentine's Day, Easter, Earth Day, and America's Independence Day. The students will fill out a board with their findings and present their research in a PowerPoint with pictures.

LISP: Declarations In Lisp

LISP: Declarations In LispDataminingTools Inc Declarations allow users to provide extra information to Lisp about variables, functions, and forms. Declarations are optional but can affect interpretation. The declare construct embeds declarations and they are valid at the start of certain special forms and lambda expressions. Common declaration specifiers include type, special, ftype, inline, and notinline to provide type or implementation information.

Association Rules

Association RulesDataminingTools Inc The document discusses association rule mining for market basket analysis. It describes the market basket model where items are products and baskets represent customer purchases. It defines key terms like support, frequent itemsets, and association rules. It also discusses the challenges of scale for large retail datasets and describes the A-Priori algorithm for efficiently finding frequent itemsets and generating association rules from transaction data.

XL-Miner: Timeseries

XL-Miner: TimeseriesDataminingTools Inc XLMiner is a data mining add-in for Microsoft Excel that provides tools for time series analysis. There are two exploratory techniques used in XLMiner for time series analysis: the autocorrelation function (ACF), which finds the correlation between observations separated by a time lag, and the partial autocorrelation function (PACF), which measures the strength of relationships between components while accounting for other components. It is important to partition the time series data into training and validation sets to validate that the time series pattern is consistent and the model is accurate enough for prediction purposes.

Asha & Beckis Nc Presentation

Asha & Beckis Nc PresentationAsha Stremcha North Carolina's state symbols include the red cardinal as the state bird, dogwood as the state flower, and pine tree as the state tree. The state flag features horizontal red and white bars and a vertical blue bar with the dates of the Mecklenburg Declaration of Independence and the Halifax Resolves. North Carolina has two nicknames - The Old North State and The Tar Heel State. Some key facts about North Carolina include that it has a population of over 9 million people and became the 12th US state in 1789. Famous North Carolinians include evangelist Billy Graham and actor Andy Griffith.

Introduction to Data-Applied

Introduction to Data-AppliedDataminingTools Inc Data Applied provides data analytics, mining, and visualization capabilities through a web interface and API. It allows users to pinpoint key influences in data, share analysis results, visualize large datasets, build pivot charts, identify hidden associations, detect anomalies, visualize correlations, forecast time series, discover similarities, categorize records, collaborate, and protect data. The platform offers amazing graphics and visualization from the start of the data analysis process and allows forecasting, comparing, categorizing, and visualizing data for traffic, sales, conversions, accounts, cash, claims, defects, products, crime levels, and more.

Ad

Similar to MS SQL SERVER: Decision trees algorithm (20)

chap4_basic_classification(2).ppt

chap4_basic_classification(2).pptssuserfdf196 This document provides an overview of classification tasks in data mining. Classification involves using a training dataset to build a model that can predict the class of new, unlabeled data. The document discusses different classification techniques like decision trees and neural networks. It also explains how decision trees are built using a greedy algorithm that recursively splits the data based on attributes. The goal is to find the split that optimizes some criterion at each node. Issues like determining the optimal split condition and knowing when to stop splitting are also covered.

chap4_basic_classification.ppt

chap4_basic_classification.pptBantiParsaniya The document discusses classification, which is a type of data mining task where a model is created to predict the class of new data based on attributes of training data. Specifically, it covers:

- The goal of classification is to assign classes to new, unseen data as accurately as possible based on a model built from a training dataset.

- Common classification techniques include decision trees, rules, neural networks, naive Bayes, and support vector machines.

- Decision tree induction works by splitting the training data into purer subsets based on attribute tests that optimize an impurity measure, with the splits forming the branches of the tree.

- Key considerations in decision tree induction include how to specify the attribute test conditions for splits

data mining Module 4.ppt

data mining Module 4.pptPremaJain2 The document discusses classification, which is the task of assigning objects to predefined categories. Classification involves learning a target function that maps attribute sets to class labels. Decision tree induction is a common classification technique that builds a tree-like model by recursively splitting the data into purer subsets based on attribute values. The document covers concepts like decision tree structure, algorithms like Hunt's algorithm for building trees, evaluating performance, and handling different attribute types when constructing decision tree models.

Data Mining Lecture_10(a).pptx

Data Mining Lecture_10(a).pptxSubrata Kumer Paul The document covers classification concepts in data mining, specifically focusing on decision trees used for predicting tax evasion based on attributes like marital status and taxable income. It discusses the process of building classification models, evaluating their effectiveness using confusion matrices, and outlines various classification techniques. Additionally, it delves into decision tree construction, including attribute selection and impurity measurements, emphasizing methods such as ID3, C4.5, and CART.

Decision Tree based Classification - ML.ppt

Decision Tree based Classification - ML.pptamrita chaturvedi The document discusses the basics of classification tasks, specifically focusing on decision trees and their associated algorithms. It explains how to create a model using training sets and test sets, detailing various classification methods such as k-nearest neighbors and decision tree induction. Additionally, it covers techniques for splitting attributes and measuring impurity, such as Gini index and entropy.

Classification

ClassificationAuliyaRahman9 Classification is a supervised machine learning task where a model is trained on labeled data to predict the class labels of new unlabeled data. The given document discusses classification and decision tree induction for classification. It defines classification, provides examples of classification tasks, and describes how decision trees are constructed through a greedy top-down induction approach that aims to split the training data into homogeneous subsets based on measures of impurity like Gini index or information gain. The goal is to build an accurate model that can classify new unlabeled data.

Classification Slides and decision tree .ppt

Classification Slides and decision tree .pptKiran119578 This document covers basic concepts and techniques in data mining classification, detailing the process of building classification models and the types of classification tasks. It highlights various classification techniques including decision trees, rule-based methods, and ensemble classifiers, along with their applications to real-world examples. The document further discusses decision tree induction, Hunt's algorithm, splitting methods, and measures of node impurity such as Gini index and entropy.

20120140506004

20120140506004IAEME Publication This document discusses using Data Mining Extensions (DMX) queries to make predictions on data mining models built using birth registration data from an e-governance system. Two DMX queries are presented that use the Predict and PredictProbability functions to predict the most likely delivery method and delivery attendant, and the probabilities of different options. The results from running the queries on the models find that a natural delivery and delivery by a private institutional attendant are the most likely predictions.

Basic Classification.pptx

Basic Classification.pptxPerumalPitchandi The document discusses classification techniques in data mining. Classification involves using a training dataset containing records with attributes and class labels to build a model that predicts the class labels of new records. Several classification techniques are described, including decision trees which use a tree structure to split the data into purer subsets based on attribute values. The Hunt's algorithm for generating decision trees is explained in detail, including how it recursively splits the training data based on evaluating attribute tests until reaching single class leaf nodes. Design considerations for decision tree induction like attribute test methods and stopping criteria are also covered.

Cluster analysis

Cluster analysisMahesh Kaluti The document discusses classification and prediction in data mining. It describes classification as predicting categorical class labels based on a training set, while prediction models continuous values. The two-step classification process involves model construction from a training set and then using the model to classify new data. Decision tree induction is covered as a classification method, including how trees are constructed in a top-down recursive manner by selecting attributes that optimize a splitting criterion. Factors in evaluating classification methods such as accuracy, speed, and interpretability are also summarized.

Decision-Tree-.pdf techniques and many more

Decision-Tree-.pdf techniques and many moreshalinipriya1692 Decision-Tree-.pdf techniques and many more

Classification

ClassificationAnurag jain The document discusses classification, which involves using a training dataset containing records with attributes and classes to build a model that can predict the class of previously unseen records. It describes dividing the dataset into training and test sets, with the training set used to build the model and test set to validate it. Decision trees are presented as a classification method that splits records recursively into partitions based on attribute values to optimize a metric like GINI impurity, allowing predictions to be made by following the tree branches. The key steps of building a decision tree classifier are outlined.

Lecture_21_22_Classification_Instance-based Learning

Lecture_21_22_Classification_Instance-based Learningmomtajhossainmowmoni Lecture_21_22_Classification_Instance-based Learning

Classification: Basic Concepts and Decision Trees

Classification: Basic Concepts and Decision Treessathish sak The document discusses the basic concepts of classification and decision trees, detailing the process of classifying records based on their attributes to assign a class label accurately. It covers various classification techniques such as k-nearest neighbors, decision trees, and algorithms like Hunt's, CART, and ID3, providing examples and illustrating the structure and induction of decision trees. Additionally, it explains methods for evaluating splits using criteria like Gini index, entropy, and classification error.

Lecture 5 Decision tree.pdf

Lecture 5 Decision tree.pdfssuser4c50a9 The document discusses decision trees, which are a popular classification algorithm. It covers:

- Why decision trees are used for classification and prediction, and that they represent rules that can be understood by humans.

- The key components of a decision tree, including root nodes, internal decision nodes, leaf nodes, and branches. It describes how a decision tree classifies examples by moving from the root to a leaf node.

- The greedy algorithm for learning decision trees, which starts with an empty tree and recursively splits the data into purer subsets based on a splitting criterion until some stopping condition is met.

MS SQL SERVER: Microsoft naive bayes algorithm

MS SQL SERVER: Microsoft naive bayes algorithmsqlserver content The Naive Bayes algorithm is a classification algorithm in SQL Server that uses Bayes' theorem with the assumption of independence between attributes. It is less computationally intensive than other algorithms. A Naive Bayes model can be explored using different views to understand attribute relationships and feature importance. DMX queries can retrieve model metadata, content, and predictions. Parameters like maximum input attributes control the algorithm behavior.

Data mining by example - building predictive model using microsoft decision t...

Data mining by example - building predictive model using microsoft decision t...Shaoli Lu This document discusses building a predictive model using Microsoft Decision Trees to predict a prospect's likelihood of purchasing a bike. It outlines setting up the necessary software including SQL Server and Visual Studio, creating a data mining project with a data source and mining structure using Microsoft Decision Trees, deploying and processing the model, and analyzing the model's accuracy using holdout data and visualizations. The summary emphasizes that Microsoft Decision Trees is a powerful yet easy to use model that can perform both single and mass predictions, and that holdout data and visualizations help test and explore the trained model.

Classification Using Decision tree

Classification Using Decision treeMohd. Noor Abdul Hamid The document provides an introduction to classification techniques in machine learning. It defines classification as assigning objects to predefined categories based on their attributes. The goal is to build a model from a training set that can accurately classify previously unseen records. Decision trees are discussed as a popular classification technique that recursively splits data into more homogeneous subgroups based on attribute tests. The document outlines the process of building decision trees, including selecting splitting attributes, stopping criteria, and evaluating performance on a test set. Examples are provided to illustrate classification tasks and building a decision tree model.

NN Classififcation Neural Network NN.pptx

NN Classififcation Neural Network NN.pptxcmpt cmpt The document provides an overview of classification methods. It begins with defining classification tasks and discussing evaluation metrics like accuracy, recall, and precision. It then describes several common classification techniques including nearest neighbor classification, decision tree induction, Naive Bayes, logistic regression, and support vector machines. For decision trees specifically, it explains the basic structure of decision trees, the induction process using Hunt's algorithm, and issues in tree induction.

Ad

More from DataminingTools Inc (20)

Terminology Machine Learning

Terminology Machine LearningDataminingTools Inc The document defines several key machine learning and neural network terminology including:

- Activation level - The output value of a neuron in an artificial neural network.

- Activation function - The function that determines the output value of a neuron based on its net input.

- Attributes - Properties of an instance that can be used to determine its classification in machine learning tasks.

- Axon - The output part of a biological neuron that transmits signals to other neurons.

Techniques Machine Learning

Techniques Machine LearningDataminingTools Inc Machine learning techniques can be used to enable computers to learn from data and perform tasks. Some key techniques discussed in the document include decision tree learning, artificial neural networks, Bayesian learning, support vector machines, genetic algorithms, graph-based learning, reinforcement learning, and pattern recognition. Each technique has its own strengths and applications.

Machine learning Introduction

Machine learning IntroductionDataminingTools Inc Machine learning is the ability of machines to learn from experience and improve their performance on tasks over time without being explicitly programmed. It involves the development of algorithms that allow computers to learn from large amounts of data. There are different types of machine learning including supervised learning, unsupervised learning, and semi-supervised learning. The history of machine learning began in the 1950s with research into neural networks, pattern recognition, and knowledge systems. Significant developments occurred in each subsequent decade, including decision trees, connectionism, reinforcement learning, and support vector machines. Machine learning continues to progress and find new applications in areas like data mining, language processing, and robotics.

Areas of machine leanring

Areas of machine leanringDataminingTools Inc This document provides an overview of machine learning applications across several domains:

- Financial applications including trading strategies, forecasting, and portfolio management utilize techniques like reinforcement learning and neural networks.

- Weather forecasting uses neural networks, support vector machines, and time series analysis to predict temperature and rainfall.

- Speech recognition and natural language processing apply machine learning to tasks like document classification, tagging, and parsing using probabilistic and neural network models.

- Other applications include smart environments using predictive models, computer games using reinforcement learning, robotics combining mechanics and software, and medical decision support analyzing clinical and biological data.

AI: Planning and AI

AI: Planning and AIDataminingTools Inc A situated planning agent treats planning and acting as a single process rather than separate processes. It uses conditional planning to construct plans that account for possible contingencies by including sensing actions. The agent resolves any flaws in the conditional plan before executing actions when their conditions are met. When facing uncertainty, the agent must have preferences between outcomes to make decisions using utility theory and represent probabilities using a joint probability distribution over variables in the domain.

AI: Logic in AI 2

AI: Logic in AI 2DataminingTools Inc A simple planning agent uses percepts from the environment to build a model of the current state and calls a planning algorithm to generate a plan to achieve its goal. Practical planning involves restricting the language used to define problems, using specialized planners rather than general theorem provers, and adopting hierarchical decomposition to store and retrieve abstract plans from a library. A solution is a fully instantiated, totally ordered plan that guarantees achieving the goal.

AI: Logic in AI

AI: Logic in AIDataminingTools Inc The document discusses different types of logical reasoning systems used in artificial intelligence, including knowledge-based agents, first-order logic, higher-order logic, goal-based agents, knowledge engineering, and description logics. It provides examples of objects, properties, relations, and functions that can be represented and reasoned about logically. It also compares different approaches to logical indexing and outlines the key components and inference tasks involved in description logics.

AI: Learning in AI 2

AI: Learning in AI 2DataminingTools Inc Bayesian learning views hypotheses as intermediaries between data and predictions. Belief networks can represent learning problems with known or unknown structures and fully or partially observable variables. Belief networks use localized representations, whereas neural networks use distributed representations. Reinforcement learning uses rewards to learn successful agent functions, such as Q-learning which learns action-value functions. Active learning agents consider actions, outcomes, and how actions affect rewards received. Genetic algorithms evolve individuals to successful solutions measured by fitness functions. Explanation-based learning speeds up programs by reusing results of prior computations.

AI: Learning in AI

AI: Learning in AI DataminingTools Inc Neural networks can be used for machine learning tasks like classification. They consist of interconnected nodes that update their weight values during a training process using examples. Neural networks have been applied successfully to tasks like handwritten character recognition, autonomous vehicle control by observing human drivers, and text-to-speech pronunciation generation. Their architecture is inspired by the human brain but neural networks are trained using computational methods while the brain uses biological processes.

AI: Introduction to artificial intelligence

AI: Introduction to artificial intelligenceDataminingTools Inc This document provides an introduction to artificial intelligence including definitions of AI, categories of AI systems, requirements for an artificially intelligent system, a brief history of AI, examples of AI in the real world, definitions of intelligent agents and different types of agent programs. It defines AI as the study of intelligent behavior in computational processes and how to make computers capable of tasks that humans currently perform better. It outlines categories of systems that think like humans rationally, or act like humans rationally. It also describes the requirements for a system to exhibit intelligent behavior through natural language processing, knowledge representation, reasoning, and machine learning.

AI: Belief Networks

AI: Belief NetworksDataminingTools Inc Conditional planning deals with incomplete information by constructing conditional plans that account for possible contingencies. The agent includes sensing actions to determine which part of the plan to execute based on conditions. Belief networks are constructed by choosing relevant variables, ordering them, and adding nodes while satisfying conditional independence properties. Inference in multi-connected belief networks can use clustering, conditioning, or stochastic simulation methods. Knowledge engineering for probabilistic reasoning first decides on topics and variables, then encodes general and problem-specific dependencies and relationships to answer queries.

AI: AI & Searching

AI: AI & SearchingDataminingTools Inc 1. There are three main ways to avoid repeated states during search: do not return to the previous state, avoid paths with cycles, and do not re-generate any previously generated state.

2. Constraint satisfaction problems have additional structural properties beyond basic problem requirements, including satisfying constraints.

3. Best first search orders nodes so the best evaluation is expanded first, making it an informed search method.

AI: AI & Problem Solving

AI: AI & Problem SolvingDataminingTools Inc The document discusses various problem solving techniques in artificial intelligence, including different types of problems, components of well-defined problems, measuring problem solving performance, and different search strategies. It describes single-state and multiple-state problems, and defines the key components of a problem including the data type, operators, goal test, and path cost. It also explains different search strategies such as breadth-first search, uniform cost search, depth-first search, depth-limited search, iterative deepening search, and bidirectional search.

Data Mining: Text and web mining

Data Mining: Text and web miningDataminingTools Inc This document discusses text and web mining. It defines text mining as analyzing huge amounts of text data to extract information. It discusses measures for text retrieval like precision and recall. It also covers text retrieval and indexing methods like inverted indices and signature files. Query processing techniques and ways to reduce dimensionality like latent semantic indexing are explained. The document also discusses challenges in mining the world wide web due to its size and dynamic nature. It defines web usage mining as collecting web access information to analyze paths to accessed web pages.

Data Mining: Outlier analysis

Data Mining: Outlier analysisDataminingTools Inc Outlier analysis is used to identify outliers, which are data objects that are inconsistent with the general behavior or model of the data. There are two main types of outlier detection - statistical distribution-based detection, which identifies outliers based on how far they are from the average statistical distribution, and distance-based detection, which finds outliers based on how far they are from other data objects. Outlier analysis is useful for tasks like fraud detection, where outliers may indicate fraudulent activity that is different from normal patterns in the data.

Data Mining: Mining stream time series and sequence data

Data Mining: Mining stream time series and sequence dataDataminingTools Inc This document discusses various methodologies for processing and analyzing stream data, time series data, and sequence data. It covers topics such as random sampling and sketches/synopses for stream data, data stream management systems, the Hoeffding tree and VFDT algorithms for stream data classification, concept-adapting algorithms, ensemble approaches, clustering of evolving data streams, time series databases, Markov chains for sequence analysis, and algorithms like the forward algorithm, Viterbi algorithm, and Baum-Welch algorithm for hidden Markov models.

Data Mining: Mining ,associations, and correlations

Data Mining: Mining ,associations, and correlationsDataminingTools Inc Market basket analysis examines customer purchasing patterns to determine which items are commonly bought together. This can help retailers with marketing strategies like product bundling and complementary product placement. Association rule mining is a two-step process that first finds frequent item sets that occur together above a minimum support threshold, and then generates strong association rules from these frequent item sets that satisfy minimum support and confidence. Various techniques can improve the efficiency of the Apriori algorithm for mining association rules, such as hashing, transaction reduction, partitioning, sampling, and dynamic item-set counting. Pruning strategies like item merging, sub-item-set pruning, and item skipping can also enhance efficiency. Constraint-based mining allows users to specify constraints on the type of

Data Mining: Graph mining and social network analysis

Data Mining: Graph mining and social network analysisDataminingTools Inc Graph mining analyzes structured data like social networks and the web through graph search algorithms. It aims to find frequent subgraphs using Apriori-based or pattern growth approaches. Social networks exhibit characteristics like densification and heavy-tailed degree distributions. Link mining analyzes heterogeneous, multi-relational social network data through tasks like link prediction and group detection, facing challenges of logical vs statistical dependencies and collective classification. Multi-relational data mining searches for patterns across multiple database tables, including multi-relational clustering that utilizes information across relations.

Data warehouse and olap technology

Data warehouse and olap technologyDataminingTools Inc This document discusses data warehousing and online analytical processing (OLAP) technology. It defines a data warehouse, compares it to operational databases, and explains how OLAP systems organize and present data for analysis. The document also describes multidimensional data models, common OLAP operations, and the steps to design and construct a data warehouse. Finally, it discusses applications of data warehouses and efficient processing of OLAP queries.

Data Mining: Data processing

Data Mining: Data processingDataminingTools Inc Data processing involves cleaning, integrating, transforming, reducing, and summarizing data from various sources into a coherent and useful format. It aims to handle issues like missing values, noise, inconsistencies, and volume to produce an accurate and compact representation of the original data without losing information. Some key techniques involved are data cleaning through binning, regression, and clustering to smooth or detect outliers; data integration to combine multiple sources; data transformation through smoothing, aggregation, generalization and normalization; and data reduction using cube aggregation, attribute selection, dimensionality reduction, and discretization.

Recently uploaded (20)

The State of Web3 Industry- Industry Report

The State of Web3 Industry- Industry ReportLiveplex Web3 is poised for mainstream integration by 2030, with decentralized applications potentially reaching billions of users through improved scalability, user-friendly wallets, and regulatory clarity. Many forecasts project trillions of dollars in tokenized assets by 2030 , integration of AI, IoT, and Web3 (e.g. autonomous agents and decentralized physical infrastructure), and the possible emergence of global interoperability standards. Key challenges going forward include ensuring security at scale, preserving decentralization principles under regulatory oversight, and demonstrating tangible consumer value to sustain adoption beyond speculative cycles.

AudGram Review: Build Visually Appealing, AI-Enhanced Audiograms to Engage Yo...

AudGram Review: Build Visually Appealing, AI-Enhanced Audiograms to Engage Yo...SOFTTECHHUB AudGram changes everything by bridging the gap between your audio content and the visual engagement your audience craves. This cloud-based platform transforms your existing audio into scroll-stopping visual content that performs across all social media platforms.

cnc-drilling-dowel-inserting-machine-drillteq-d-510-english.pdf

cnc-drilling-dowel-inserting-machine-drillteq-d-510-english.pdfAmirStern2 CNC מכונות קידוח drillteq d-510

Floods in Valencia: Two FME-Powered Stories of Data Resilience

Floods in Valencia: Two FME-Powered Stories of Data ResilienceSafe Software In October 2024, the Spanish region of Valencia faced severe flooding that underscored the critical need for accessible and actionable data. This presentation will explore two innovative use cases where FME facilitated data integration and availability during the crisis. The first case demonstrates how FME was used to process and convert satellite imagery and other geospatial data into formats tailored for rapid analysis by emergency teams. The second case delves into making human mobility data—collected from mobile phone signals—accessible as source-destination matrices, offering key insights into population movements during and after the flooding. These stories highlight how FME's powerful capabilities can bridge the gap between raw data and decision-making, fostering resilience and preparedness in the face of natural disasters. Attendees will gain practical insights into how FME can support crisis management and urban planning in a changing climate.

“From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge,...

“From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge,...Edge AI and Vision Alliance For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/06/from-enterprise-to-makers-driving-vision-ai-innovation-at-the-extreme-edge-a-presentation-from-sony-semiconductor-solutions/

Amir Servi, Edge Deep Learning Product Manager at Sony Semiconductor Solutions, presents the “From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge” tutorial at the May 2025 Embedded Vision Summit.

Sony’s unique integrated sensor-processor technology is enabling ultra-efficient intelligence directly at the image source, transforming vision AI for enterprises and developers alike. In this presentation, Servi showcases how the AITRIOS platform simplifies vision AI for enterprises with tools for large-scale deployments and model management.

Servi also highlights his company’s collaboration with Ultralytics and Raspberry Pi, which brings YOLO models to the developer community, empowering grassroots innovation. Whether you’re scaling vision AI for industry or experimenting with cutting-edge tools, this presentation will demonstrate how Sony is accelerating high-performance, energy-efficient vision AI for all.

Enabling BIM / GIS integrations with Other Systems with FME

Enabling BIM / GIS integrations with Other Systems with FMESafe Software Jacobs has successfully utilized FME to tackle the complexities of integrating diverse data sources in a confidential $1 billion campus improvement project. The project aimed to create a comprehensive digital twin by merging Building Information Modeling (BIM) data, Construction Operations Building Information Exchange (COBie) data, and various other data sources into a unified Geographic Information System (GIS) platform. The challenge lay in the disparate nature of these data sources, which were siloed and incompatible with each other, hindering efficient data management and decision-making processes.

To address this, Jacobs leveraged FME to automate the extraction, transformation, and loading (ETL) of data between ArcGIS Indoors and IBM Maximo. This process ensured accurate transfer of maintainable asset and work order data, creating a comprehensive 2D and 3D representation of the campus for Facility Management. FME's server capabilities enabled real-time updates and synchronization between ArcGIS Indoors and Maximo, facilitating automatic updates of asset information and work orders. Additionally, Survey123 forms allowed field personnel to capture and submit data directly from their mobile devices, triggering FME workflows via webhooks for real-time data updates. This seamless integration has significantly enhanced data management, improved decision-making processes, and ensured data consistency across the project lifecycle.

ENERGY CONSUMPTION CALCULATION IN ENERGY-EFFICIENT AIR CONDITIONER.pdf

ENERGY CONSUMPTION CALCULATION IN ENERGY-EFFICIENT AIR CONDITIONER.pdfMuhammad Rizwan Akram DC Inverter Air Conditioners are revolutionizing the cooling industry by delivering affordable,

energy-efficient, and environmentally sustainable climate control solutions. Unlike conventional

fixed-speed air conditioners, DC inverter systems operate with variable-speed compressors that

modulate cooling output based on demand, significantly reducing energy consumption and

extending the lifespan of the appliance.

These systems are critical in reducing electricity usage, lowering greenhouse gas emissions, and

promoting eco-friendly technologies in residential and commercial sectors. With advancements in

compressor control, refrigerant efficiency, and smart energy management, DC inverter air conditioners