Parallel programming in the modern world: .NET techniques

- 1. Parallel programming in the modern world: .NET techniques

- 2. Parallelism vs. concurrency. Concurrency: the existence of multiple threads of execution. The goal of concurrency is to prevent thread starvation; concurrency is required operationally. Parallelism: concurrent threads execute at the same time on multiple cores. Parallelism is only about throughput; it is an optimization, not a functional requirement.

- 3. Limitations to linear speedup of parallel code: serial code, overhead from parallelization, synchronization, and sequential input/output.

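- 4. Parallel speedup calculation. Amdahl’s Law: $\text{Speedup} = \dfrac{1}{(1 - P) + P/N}$. Gustafson’s Law: $\text{Speedup} = \dfrac{S + N(1 - S)}{S + (1 - S)} - O_N$. Here $P$ is the parallel fraction of the program, $S$ is the serial fraction, $N$ is the number of processor cores, and $O_N$ is the overhead of parallelization.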
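To make the two formulas concrete, here is a small calculator. It is a sketch: the parameter names (p, s, n, overhead) are chosen here, and the overhead O_N is assumed to be given directly in speedup units, since the slide does not say how it is measured.

```csharp
using System;

// Sketch of the two speedup laws from slide 4.
// p = parallel fraction, s = serial fraction (both between 0 and 1),
// n = number of processor cores, overhead = O_N (assumed to be in speedup units).
public static class SpeedupLaws
{
    // Amdahl: Speedup = 1 / ((1 - P) + P / N), for a fixed problem size.
    public static double Amdahl(double p, int n)
    {
        Validate(p, n);
        return 1.0 / ((1.0 - p) + p / n);
    }

    // Gustafson: Speedup = (S + N(1 - S)) / (S + (1 - S)) - O_N.
    // The denominator is always 1; it is kept only to mirror the slide.
    public static double Gustafson(double s, int n, double overhead = 0.0)
    {
        Validate(s, n);
        return (s + n * (1.0 - s)) / (s + (1.0 - s)) - overhead;
    }

    private static void Validate(double fraction, int n)
    {
        if (fraction < 0.0 || fraction > 1.0)
            throw new ArgumentOutOfRangeException(nameof(fraction));
        if (n < 1)
            throw new ArgumentOutOfRangeException(nameof(n));
    }
}
```

With half of the program parallel (P = 0.5) on 4 cores, Amdahl gives 1 / (0.5 + 0.125) = 1.6, and no number of cores can push it past 1 / (1 − P) = 2. Gustafson with S = 0.5 and no overhead gives 0.5 + 4 × 0.5 = 2.5, because it assumes the parallel workload grows with the core count.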

- 5. Phases of parallel development: Finding Concurrency (the Task Decomposition, Data Decomposition, Group Tasks, Order Tasks, and Data Sharing patterns), Algorithm Structure, Supporting Structures, and Implementation Mechanisms.

- 6. The Algorithm Structure patterns: Task Parallelism, Divide and Conquer, Geometric Decomposition, Recursive Data, and Pipeline.

- 7. The Supporting Structures patterns: SPMD (Single Program, Multiple Data), Master/Worker, Loop Parallelism, and Fork/Join.

- 8. Data parallelism: search for loops, unroll sequential loops into parallel ones, and evaluate performance. Considerations: conduct performance benchmarks to confirm potential performance improvements; when there is minimal or no performance gain, one solution is to change the chunk size. Tools: Parallel.For and Parallel.ForEach, with ParallelLoopState for breaking out of a loop.
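A minimal sketch of the slide's tools, assuming simple int arrays as input. SquareAll changes the chunk size by feeding Parallel.ForEach a range partitioner (Partitioner.Create(from, to, rangeSize)), and FirstNegativeIndex uses ParallelLoopState.Break to stop a Parallel.For early. The class, method names, and chunkSize parameter are hypothetical.

```csharp
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class DataParallelismDemo
{
    // Squares every element; chunkSize (a hypothetical tuning knob) sets how many
    // consecutive indexes each partition handles, which lowers per-item overhead.
    public static long[] SquareAll(int[] input, int chunkSize)
    {
        var output = new long[input.Length];
        if (input.Length == 0) return output; // Partitioner.Create rejects empty ranges

        Parallel.ForEach(Partitioner.Create(0, input.Length, chunkSize), range =>
        {
            // range is a Tuple<int, int> covering the indexes [Item1, Item2)
            for (int i = range.Item1; i < range.Item2; i++)
                output[i] = (long)input[i] * input[i];
        });
        return output;
    }

    // Returns the lowest index holding a negative value, or -1 if there is none.
    public static long FirstNegativeIndex(int[] input)
    {
        ParallelLoopResult result = Parallel.For(0, input.Length, (i, state) =>
        {
            // Break: iterations below i are still guaranteed to run,
            // and iterations above i that have not started yet will not start.
            if (input[i] < 0) state.Break();
        });
        return result.LowestBreakIteration ?? -1;
    }
}
```

Because Break still runs every lower iteration, LowestBreakIteration ends up at the first negative element. Benchmark SquareAll with a few chunk sizes before settling on one, as the slide suggests.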

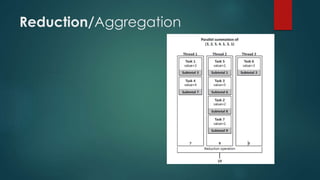

- 10. Variations of Reduce. Scan pattern: each iteration of a loop depends on data computed in the previous iteration. Pack pattern: uses a parallel loop to select elements to retain or discard, so the result is a subset of the original input. MapReduce.
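As a rough illustration, the sketch below shows a plain parallel reduce and the Pack variation. ParallelSum keeps a thread-local subtotal per worker (the localInit/localFinally overload of Parallel.ForEach) so the body never writes shared state, and PackEvens uses PLINQ to keep a subset of the input in its original order. The "keep even numbers" predicate and all names are hypothetical; Scan is not shown.

```csharp
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class ReduceVariations
{
    // Reduce: each worker accumulates a private subtotal, merged once at the end.
    public static long ParallelSum(int[] values)
    {
        long total = 0;
        Parallel.ForEach(
            values,
            () => 0L,                                          // localInit: per-worker subtotal
            (value, state, subtotal) => subtotal + value,      // body: no shared writes
            subtotal => Interlocked.Add(ref total, subtotal)); // localFinally: merge safely
        return total;
    }

    // Pack: retain only the elements that match a predicate, preserving input order.
    public static int[] PackEvens(int[] values) =>
        values.AsParallel().AsOrdered().Where(v => v % 2 == 0).ToArray();
}
```

Only the final merge touches the shared total, so the synchronization cost is paid once per worker rather than once per element.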

- 11. MapReduce pattern elements: (1) the input, a collection of key/value pairs; (2) an intermediate collection, a non-unique collection of key/value pairs; (3) a third collection, a reduction of the non-unique keys from the intermediate collection.

- 12. MapReduce Example Counting Words across multiple documents
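The word-count example can be sketched on a single machine with PLINQ standing in for a cluster. The map step turns each document into non-unique (word, 1) pairs, which form the intermediate collection; the reduce step groups the pairs by key and sums them into the third collection. The separator characters and lower-casing are assumptions made here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class WordCount
{
    private static readonly char[] Separators =
        { ' ', '\t', '\n', '\r', '.', ',', ';', ':', '!', '?' }; // assumed word boundaries

    public static Dictionary<string, int> Count(IEnumerable<string> documents)
    {
        return documents
            .AsParallel()
            // Map: every document yields (word, 1) pairs, the intermediate collection.
            .SelectMany(doc => doc
                .Split(Separators, StringSplitOptions.RemoveEmptyEntries)
                .Select(word => new KeyValuePair<string, int>(word.ToLowerInvariant(), 1)))
            // Reduce: group the non-unique keys and sum their values.
            .GroupBy(pair => pair.Key)
            .ToDictionary(group => group.Key, group => group.Sum(pair => pair.Value));
    }
}
```

For example, counting "the cat sat" and "The dog sat." gives the: 2, sat: 2, cat: 1, dog: 1.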

- 14. Futures. A future is a stand-in for a computational result that is initially unknown but becomes available at a later time. In .NET, a future is a Task<TResult> that returns a value. A .NET continuation task is a task that automatically starts when other tasks, known as its antecedents, complete.
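A minimal sketch of both ideas from the slide: a Task<int> acting as a future, and a continuation registered with ContinueWith that starts automatically when its antecedent completes. The squaring work and the names are hypothetical.

```csharp
using System.Threading.Tasks;

public static class ContinuationDemo
{
    public static Task<string> DescribeSquare(int x)
    {
        // The future: a Task<int> whose value is computed on the thread pool.
        Task<int> square = Task.Run(() => x * x);

        // The continuation: runs once the antecedent task has completed.
        return square.ContinueWith(antecedent => $"{x}^2 = {antecedent.Result}");
    }
}
```

DescribeSquare(7) eventually produces "7^2 = 49". Reading antecedent.Result inside the continuation does not block, because the antecedent has already finished by the time the continuation runs.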

- 15. Futures example
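The slide's example code is not in this transcript. Following speaker note #16, a minimal sketch might look like this: F1(a) runs as a future while F2 and then F3 run on the current thread, and F4 combines both results. The bodies of F1 to F4 are hypothetical placeholders, and int values are assumed, as in the notes.

```csharp
using System.Threading.Tasks;

public static class FuturesDemo
{
    // Hypothetical stand-ins for processor-intensive functions.
    private static int F1(int a) => a + 1;
    private static int F2(int a) => a * 2;
    private static int F3(int c) => c + 10;
    private static int F4(int b, int d) => b * d;

    public static int Compute(int a)
    {
        // Future: F1(a) starts on the thread pool and can overlap with F2 and F3.
        Task<int> futureB = Task.Factory.StartNew(() => F1(a));

        int c = F2(a); // runs on the current thread, without waiting for F1
        int d = F3(c); // depends on F2, so it follows F2 sequentially

        // Result blocks until F1 has produced its value; F4 needs both inputs.
        return F4(futureB.Result, d);
    }
}
```

With these placeholder bodies, Compute(5) returns F4(6, 20) = 120. Only F1 is wrapped in a task, because one extra asynchronous operation is enough to exploit the parallelism in this dependency graph.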

- 16. Dynamic task parallelism. Dynamic tasks (decomposition, or “divide and conquer”) are tasks that are added to the work queue dynamically as the computation proceeds. The best-known instance is recursion.
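A recursive sum is one small instance of this pattern. Each call splits its range in half and hands both halves to Parallel.Invoke, so new tasks appear while the computation is running. Below an assumed cutoff of 1000 elements it falls back to a sequential loop, so task overhead does not dominate. The cutoff value and names are hypothetical.

```csharp
using System.Threading.Tasks;

public static class DivideAndConquer
{
    // Below this many elements the work is done sequentially (an assumed cutoff).
    private const int SequentialThreshold = 1000;

    public static long Sum(int[] data) => Sum(data, 0, data.Length);

    private static long Sum(int[] data, int from, int to)
    {
        if (to - from <= SequentialThreshold)
        {
            long local = 0;
            for (int i = from; i < to; i++) local += data[i];
            return local;
        }

        int mid = from + (to - from) / 2;
        long left = 0, right = 0;

        // Each recursive call may create further tasks as the recursion unfolds.
        Parallel.Invoke(
            () => left = Sum(data, from, mid),
            () => right = Sum(data, mid, to));

        return left + right;
    }
}
```

Each branch writes only its own local (left or right), and Parallel.Invoke returns only after both branches finish, so no extra synchronization is needed.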

- 18. Pipelines. Each task implements a stage of the pipeline, and the queues act as buffers that allow the stages of the pipeline to execute concurrently, even though the values are processed in order. The buffers are BlockingCollection<T> instances.

- 19. Pipeline example
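The slide's pipeline code is not in this transcript. A minimal three-stage sketch in the spirit of speaker note #20 follows: read strings into buffer 1, upper-case them into buffer 2, then collect them. Each buffer is a bounded BlockingCollection<string>, and each stage calls CompleteAdding in a finally block to signal “end of file” downstream. The stage names, the upper-case transform, and the buffer size of 4 are assumptions.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class PipelineDemo
{
    public static List<string> Run(IEnumerable<string> source, int bufferSize = 4)
    {
        var buffer1 = new BlockingCollection<string>(bufferSize); // bounded: producers wait when full
        var buffer2 = new BlockingCollection<string>(bufferSize);
        var results = new List<string>(); // touched only by the last stage

        // Stage 1: read strings into buffer 1.
        var read = Task.Factory.StartNew(() =>
        {
            try { foreach (var s in source) buffer1.Add(s); }
            finally { buffer1.CompleteAdding(); } // "end of file" for stage 2
        }, TaskCreationOptions.LongRunning);

        // Stage 2: transform each value and pass it on to buffer 2.
        var transform = Task.Factory.StartNew(() =>
        {
            try
            {
                // Blocks while buffer 1 is empty; ends after CompleteAdding.
                foreach (var s in buffer1.GetConsumingEnumerable())
                    buffer2.Add(s.ToUpperInvariant());
            }
            finally { buffer2.CompleteAdding(); }
        }, TaskCreationOptions.LongRunning);

        // Stage 3: consume buffer 2.
        var write = Task.Factory.StartNew(() =>
        {
            foreach (var s in buffer2.GetConsumingEnumerable()) results.Add(s);
        }, TaskCreationOptions.LongRunning);

        Task.WaitAll(read, transform, write);
        return results;
    }
}
```

Every stage has a single consumer, so values leave the pipeline in input order. This sketch omits cancellation: if a downstream stage fails, an upstream stage can block forever on a full buffer. A production version would pass a CancellationToken to Add and GetConsumingEnumerable and cancel it on the first error.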

- 20. C# 5: async and await. Asynchronous programming models: the asynchronous pattern, the event-based asynchronous pattern, and the task-based asynchronous pattern (TAP), which is what async and await build on.
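A minimal TAP sketch: a method that returns Task<string> and follows the Async naming convention, and a caller that awaits it. Task.Delay stands in for real I/O, and the method names are hypothetical.

```csharp
using System;
using System.Threading.Tasks;

public static class TapDemo
{
    // A TAP method: returns a Task<T> and, by convention, ends in "Async".
    public static async Task<string> GreetingAsync(string name)
    {
        // Simulated slow I/O; the calling thread is not blocked while waiting.
        await Task.Delay(TimeSpan.FromMilliseconds(50));
        return $"Hello, {name}";
    }

    public static async Task<string> CallerAsync()
    {
        // await unwraps the Task<string> once it completes.
        string greeting = await GreetingAsync("world");
        return greeting + "!";
    }
}
```

CallerAsync() completes with "Hello, world!". The compiler rewrites both methods into state machines, so no thread sits idle during the delay.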

- 24. Using multiple asynchronous methods: awaiting them one after another vs. using combinators.
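A sketch contrasting the two approaches. SlowValueAsync is a hypothetical operation that returns its argument after a delay. SequentialAsync awaits two calls in turn, and CombinedAsync starts both and uses the Task.WhenAll combinator to wait for them together.

```csharp
using System.Threading.Tasks;

public static class CombinatorDemo
{
    // Hypothetical async operation: returns its value after a delay.
    private static async Task<int> SlowValueAsync(int value, int delayMs)
    {
        await Task.Delay(delayMs);
        return value;
    }

    // Sequential: the second call starts only after the first completes (about 2 x delay).
    public static async Task<int> SequentialAsync()
    {
        int a = await SlowValueAsync(1, 100);
        int b = await SlowValueAsync(2, 100);
        return a + b;
    }

    // Combinator: both calls start at once and WhenAll waits for both (about 1 x delay).
    public static async Task<int> CombinedAsync()
    {
        int[] values = await Task.WhenAll(SlowValueAsync(1, 100), SlowValueAsync(2, 100));
        return values[0] + values[1];
    }
}
```

Both methods return 3. Sequential awaits suit calls that depend on each other, while combinators suit independent calls. Task.WhenAny is the companion combinator for waiting on the first task to finish.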

- 25. Converting the asynchronous pattern. The TaskFactory class defines the FromAsync method, which converts methods that use the asynchronous pattern (BeginXXX/EndXXX method pairs) to the TAP.
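A minimal sketch that wraps a real Begin/End pair, Stream.BeginRead and Stream.EndRead, into a Task<int> with TaskFactory<TResult>.FromAsync. The wrapper class and method names are hypothetical; newer code would normally call Stream.ReadAsync directly, so treat this only as an illustration of the conversion.

```csharp
using System.IO;
using System.Threading.Tasks;

public static class FromAsyncDemo
{
    // Converts the asynchronous-pattern pair BeginRead/EndRead into a TAP Task<int>.
    // The three arguments after the method pair are forwarded to BeginRead;
    // the final null is the (unused) state object.
    public static Task<int> ReadAsTask(Stream stream, byte[] buffer)
    {
        return Task<int>.Factory.FromAsync(
            stream.BeginRead, stream.EndRead, buffer, 0, buffer.Length, null);
    }
}
```

The returned task completes with the number of bytes read. Because it is an ordinary Task<int>, it can be awaited or combined with other tasks like any TAP method.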

- 26. ERROR HANDLING

- 27. Multiple tasks error handling
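A sketch of error handling when several tasks fail. Awaiting the Task.WhenAll result rethrows only the first exception, but the combined task's Exception property, an AggregateException, still holds every failure in InnerExceptions. FailAfterAsync and the messages are hypothetical.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class ErrorHandlingDemo
{
    // Hypothetical operation that fails after a delay.
    private static async Task FailAfterAsync(int delayMs, string message)
    {
        await Task.Delay(delayMs);
        throw new InvalidOperationException(message);
    }

    // Returns the messages of all exceptions raised by two concurrent tasks.
    public static async Task<string[]> CollectErrorsAsync()
    {
        Task all = Task.WhenAll(FailAfterAsync(50, "first"), FailAfterAsync(100, "second"));
        try
        {
            await all; // rethrows only the first inner exception
        }
        catch (InvalidOperationException)
        {
            // The combined task keeps every failure in an AggregateException.
            return all.Exception.InnerExceptions.Select(e => e.Message).ToArray();
        }
        return Array.Empty<string>();
    }
}
```

CollectErrorsAsync() yields both "first" and "second". Catching only the awaited exception would silently drop the second failure.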

- 28. CANCELLATION: cancellation with framework features
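A minimal sketch of cancellation with a framework method: Task.Delay accepts a CancellationToken, and a CancellationTokenSource with CancelAfter requests cancellation after a timeout. The delay values and names are hypothetical.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class FrameworkCancellationDemo
{
    // Returns true if the framework call observed the cancellation request.
    public static async Task<bool> WasCanceledAsync(int cancelAfterMs, int workMs)
    {
        using (var cts = new CancellationTokenSource())
        {
            cts.CancelAfter(cancelAfterMs); // request cancellation after a timeout

            try
            {
                // Framework methods that accept a token stop early when it is signaled.
                await Task.Delay(workMs, cts.Token);
                return false;
            }
            catch (OperationCanceledException) // Task.Delay throws TaskCanceledException
            {
                return true;
            }
        }
    }
}
```

WasCanceledAsync(50, 10000) returns true, because the token fires long before the delay ends. WasCanceledAsync(10000, 10) returns false.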

- 29. CANCELLATION: cancellation with custom tasks
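For custom tasks, cancellation is cooperative: the task's own loop has to check the token. In this sketch, SumUpTo (a hypothetical name) checks every 1000 iterations with ThrowIfCancellationRequested. The same token is also passed to Task.Run, so the resulting OperationCanceledException moves the task to the Canceled state instead of Faulted.

```csharp
using System.Threading;
using System.Threading.Tasks;

public static class CustomCancellationDemo
{
    public static Task<long> SumUpTo(long limit, CancellationToken token)
    {
        return Task.Run(() =>
        {
            long sum = 0;
            for (long i = 1; i <= limit; i++)
            {
                // Cooperative check: throws OperationCanceledException once cancellation is requested.
                if (i % 1000 == 0) token.ThrowIfCancellationRequested();
                sum += i;
            }
            return sum;
        }, token); // same token, so the task ends up Canceled rather than Faulted
    }
}
```

SumUpTo(100, CancellationToken.None) completes with 5050. Giving a huge limit and a token that fires after 50 ms leaves the task with IsCanceled set to true. Waiting on it then throws an AggregateException that wraps a TaskCanceledException.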

- 30. Literature
  - Concurrent Programming on Windows
  - “MapReduce: Simplified Data Processing on Large Clusters”, Jeffrey Dean and Sanjay Ghemawat, 2004
  - Parallel Programming with Microsoft Visual Studio 2010 Step by Step
  - Parallel Programming with Microsoft® .NET: Design Patterns for Decomposition and Coordination on Multicore Architectures
  - Pro .NET 4 Parallel Programming in C#, Adam Freeman
  - Professional Parallel Programming with C#: Master Parallel Extensions with .NET 4, Gaston Hillar
  - .NET 4.5 Parallel Extensions Cookbook, Packt Publishing

- 31. Questions?

- 32. Thanks!

Editor's Notes

- #5: Amdahl’s Law calculates the speedup of parallel code based on three variables: the duration of running the application on a single-core machine, the percentage of the application that is parallel, and the number of processor cores. The formula uses the duration of the application on a single-core machine as the benchmark. The numerator represents that base duration, which is always 1. The dynamic portion of the calculation is in the denominator: P is the fraction of the application that runs in parallel, and N is the number of processor cores. Amdahl’s Law assumes a fixed workload: if the serial and parallel portions are each half of the program, they remain half no matter how many cores are added. In the real world, however, as computing power increases, more work gets completed, so the relative duration of the sequential portion is reduced. In addition, Amdahl’s Law does not account for the overhead required to schedule, manage, and execute parallel tasks. Gustafson’s Law takes both of these additional factors into account.

- #16: Suppose that F1, F2, F3, and F4 are processor-intensive functions that communicate with one another using arguments and return values instead of reading and updating shared state variables. Suppose, also, that you want to distribute the work of these functions across available cores, and you want your code to run correctly no matter how many cores are available. When you look at the inputs and outputs, you can see that F1 can run in parallel with F2 and F3 but that F3 can’t start until after F2 finishes. How do you know this? The possible orderings become apparent when you visualize the function calls as a graph. Figure 1 illustrates this. The nodes of the graph are the functions F1, F2, F3, and F4. The incoming arrows for each node are the inputs required by the function, and the outgoing arrows are the values calculated by each function. It’s easy to see that F1 and F2 can run at the same time but that F3 must follow F2. The example on the slide shows how to create futures for this graph. For simplicity, the code assumes that the values being calculated are integers and that the value of variable a has already been supplied, perhaps as an argument to the current method. The code creates a future that begins to asynchronously calculate the value of F1(a). On a multicore system, F1 can run in parallel with the current thread, so F2 can begin executing without waiting for F1. The function F4 executes as soon as the data it needs becomes available. It doesn’t matter whether F1 or F3 finishes first, because the results of both functions are required before F4 can be invoked. (Recall that the Result property does not return until the future’s value is available.) Note that the calls to F2, F3, and F4 do not need to be wrapped in a future, because a single additional asynchronous operation is all that is needed to take advantage of the parallelism in this example.

- #20: Each stage of the pipeline reads from a dedicated input and writes to a particular output. For example, the “Read Strings” task reads from a source and writes to buffer 1. All the stages of the pipeline can execute at the same time because concurrent queues buffer any shared inputs and outputs. If there are four available cores, the stages can run in parallel. As long as there is room in its output buffer, a stage of the pipeline can add the value it produces to its output queue. If the output buffer is full, the producer of the new value waits until space becomes available. Stages can also wait (that is, block) on inputs. An input wait is familiar from other programming contexts: if an enumeration or a stream does not have a value, the consumer of that enumeration or stream waits until a value is available or an “end of file” condition occurs. A blocking collection works the same way. Using buffers that hold more than one value at a time compensates for variability in the time it takes to process each value. The BlockingCollection<T> class lets you signal the “end of file” condition with the CompleteAdding method. This method tells the consumer that it can end its processing loop after all the data previously added to the collection is removed or processed. The code on the slide demonstrates how to implement a pipeline that uses the BlockingCollection<T> class for the buffers and tasks for the stages of the pipeline.
