Parallel Programming Models: Shared variable model, Message passing model, Data Parallel Model, Object Oriented Model

- 1. Parallel Computing. Presented by Justin Reschke, 9-14-04

- 2. Overview
  - Concepts and Terminology
  - Parallel Computer Memory Architectures
  - Parallel Programming Models
  - Designing Parallel Programs
  - Parallel Algorithm Examples
  - Conclusion

- 3. Concepts and Terminology: What is Parallel Computing?
  - Traditionally, software has been written for serial computation.
  - Parallel computing is the simultaneous use of multiple compute resources to solve a computational problem.

- 4. Concepts and Terminology: Why Use Parallel Computing?
  - Saves time – wall clock time
  - Cost savings
  - Overcoming memory constraints
  - It’s the future of computing

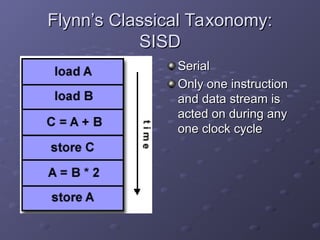

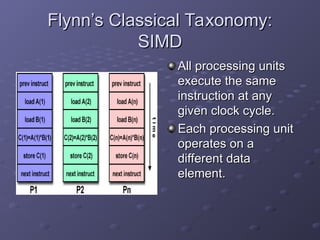

- 5. Concepts and Terminology: Flynn’s Classical Taxonomy
  - Distinguishes multi-processor architectures by instruction and data
  - SISD – Single Instruction, Single Data
  - SIMD – Single Instruction, Multiple Data
  - MISD – Multiple Instruction, Single Data
  - MIMD – Multiple Instruction, Multiple Data

- 6. Flynn’s Classical Taxonomy: SISD
  - Serial
  - Only one instruction and data stream is acted on during any one clock cycle.

- 7. Flynn’s Classical Taxonomy: SIMD
  - All processing units execute the same instruction at any given clock cycle.
  - Each processing unit operates on a different data element.

- 8. Flynn’s Classical Taxonomy: MISD
  - Different instructions operate on a single data element.
  - Very few practical uses for this type of classification.
  - Example: multiple cryptography algorithms attempting to crack a single coded message.

- 9. Flynn’s Classical Taxonomy: MIMD
  - Can execute different instructions on different data elements.
  - Most common type of parallel computer.

- 10. Concepts and Terminology: General Terminology
  - Task – A logically discrete section of computational work
  - Parallel Task – A task that can be executed by multiple processors safely
  - Communications – Data exchange between parallel tasks
  - Synchronization – The coordination of parallel tasks in real time

- 11. Concepts and Terminology: More Terminology
  - Granularity – The ratio of computation to communication
    - Coarse – High computation, low communication
    - Fine – Low computation, high communication
  - Parallel Overhead
    - Synchronizations
    - Data communications
    - Overhead imposed by compilers, libraries, tools, operating systems, etc.

- 12. Parallel Computer Memory Architectures: Shared Memory Architecture
  - All processors access all memory as a single global address space.
  - Data sharing is fast.
  - Lack of scalability between memory and CPUs

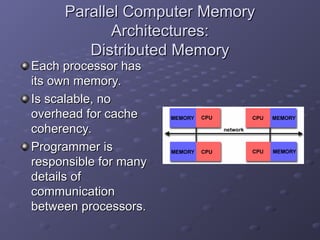

- 13. Parallel Computer Memory Architectures: Distributed Memory
  - Each processor has its own memory.
  - Is scalable, with no overhead for cache coherency.
  - The programmer is responsible for many details of communication between processors.

- 14. Parallel Programming Models
  - Exist as an abstraction above hardware and memory architectures
  - Examples:
    - Shared Memory
    - Threads
    - Message Passing
    - Data Parallel

- 15. Parallel Programming Models: Shared Memory Model
  - Appears to the user as a single shared memory, despite hardware implementations.
  - Locks and semaphores may be used to control shared memory access.
  - Program development can be simplified since there is no need to explicitly specify communication between tasks.
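To make the role of locks concrete, here is a minimal sketch in Python (not part of the original slides). The function name `count_with_lock` and its parameters are hypothetical; the point is only that several threads update one shared variable and a lock serializes each update.

```python
import threading


def count_with_lock(num_threads: int, increments: int) -> int:
    """Let several threads increment one shared counter under a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            # Only one thread at a time may run this read-modify-write step.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(count_with_lock(4, 10_000))  # 40000
```

No task ever sends data to another; they communicate implicitly through `counter`, which is the simplification the slide refers to.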

- 16. Parallel Programming Models: Threads Model
  - A single process may have multiple, concurrent execution paths.
  - Typically used with a shared memory architecture.
  - The programmer is responsible for determining all parallelism.

- 17. Parallel Programming Models: Message Passing Model
  - Tasks exchange data by sending and receiving messages.
  - Typically used with distributed memory architectures.
  - Data transfer requires cooperative operations to be performed by each process. For example, a send operation must have a matching receive operation.
  - MPI (Message Passing Interface) is the interface standard for message passing.
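The send/receive pairing can be sketched as follows. This is an illustration only, not MPI: it assumes Python threads and `queue.Queue` objects as stand-ins for separate processes and their message channels, and the names `worker` and `sum_by_messages` are hypothetical. The two tasks share no variables and interact only through messages.

```python
import queue
import threading


def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Receive numbers until a None sentinel arrives, then send back their sum."""
    total = 0
    while True:
        message = inbox.get()  # receive: blocks until the other side sends
        if message is None:    # sentinel meaning "no more data"
            break
        total += message
    outbox.put(total)          # send the result back


def sum_by_messages(values):
    """Send each value to a worker task and receive the sum as a reply."""
    to_worker = queue.Queue()
    from_worker = queue.Queue()
    task = threading.Thread(target=worker, args=(to_worker, from_worker))
    task.start()
    for v in values:
        to_worker.put(v)       # every send here is matched by a get() above
    to_worker.put(None)
    result = from_worker.get() # matching receive for the worker's final send
    task.join()
    return result


if __name__ == "__main__":
    print(sum_by_messages([1, 2, 3, 4]))  # 10
```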

- 18. Parallel Programming Models: Data Parallel Model
  - Tasks perform the same operations on a set of data, each task working on a separate piece of the set.
  - Works well with either shared memory or distributed memory architectures.
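A minimal sketch of the data parallel idea, assuming Python's `concurrent.futures` thread pool as the task mechanism; `square_chunk` and `parallel_square` are hypothetical names. Every task runs the same function on its own chunk. In CPython, threads will not speed up CPU-bound work like this, so read it as an illustration of the structure rather than a performance recipe.

```python
from concurrent.futures import ThreadPoolExecutor


def square_chunk(chunk):
    """The single operation that every task applies to its own piece."""
    return [x * x for x in chunk]


def parallel_square(data, num_tasks):
    """Split data into chunks and square each chunk in a separate task."""
    size = max(1, -(-len(data) // num_tasks))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=num_tasks) as pool:
        # map() returns results in the same order as the input chunks
        results = list(pool.map(square_chunk, chunks))
    return [y for part in results for y in part]


if __name__ == "__main__":
    print(parallel_square([1, 2, 3, 4, 5], 2))  # [1, 4, 9, 16, 25]
```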

- 19. Designing Parallel Programs: Automatic Parallelization
  - The compiler analyzes code and identifies opportunities for parallelism.
  - Analysis includes attempting to compute whether or not the parallelism actually improves performance.
  - Loops are the most frequent target for automatic parallelism.

- 20. Designing Parallel Programs: Manual Parallelization
  - Understand the problem
  - A Parallelizable Problem:
    - Calculate the potential energy for each of several thousand independent conformations of a molecule. When done, find the minimum energy conformation.
  - A Non-Parallelizable Problem:
    - The Fibonacci Series
    - All calculations are dependent

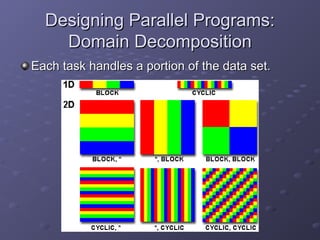

- 21. Designing Parallel Programs: Domain Decomposition
  - Each task handles a portion of the data set.
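One common way to assign portions is a contiguous block partition. The helper below is a sketch under that assumption (the slide does not prescribe a scheme), and `block_bounds` is a hypothetical name. It spreads any remainder over the first few tasks so block sizes differ by at most one.

```python
def block_bounds(n_items, n_tasks):
    """Return one (start, stop) index pair per task, covering range(n_items)."""
    base, extra = divmod(n_items, n_tasks)
    bounds = []
    start = 0
    for task in range(n_tasks):
        # The first `extra` tasks take one additional item each.
        stop = start + base + (1 if task < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


if __name__ == "__main__":
    print(block_bounds(10, 3))  # [(0, 4), (4, 7), (7, 10)]
```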

- 22. Designing Parallel Programs: Functional Decomposition
  - Each task performs a function of the overall work.
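In contrast to splitting the data, functional decomposition splits the work by function. The sketch below assumes three unrelated summary functions over the same input, each submitted as its own task; `summarize` and the dictionary keys are hypothetical names chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def summarize(data):
    """Run a different function in each task, all over the same data."""
    functions = {"minimum": min, "maximum": max, "total": sum}
    with ThreadPoolExecutor(max_workers=len(functions)) as pool:
        # One task per function, not one task per slice of data.
        futures = {name: pool.submit(fn, data) for name, fn in functions.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(summarize([3, 1, 4, 1, 5]))
    # {'minimum': 1, 'maximum': 5, 'total': 14}
```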

- 23. Parallel Algorithm Examples: Array Processing
  - Serial Solution
    - Perform a function on a 2D array.
    - A single processor iterates through each element in the array.
  - Possible Parallel Solution
    - Assign each processor a partition of the array.
    - Each process iterates through its own partition.
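The parallel solution might look like the sketch below, which assumes a row-wise partition and uses Python threads in place of processors; `apply_in_parallel` and its parameters are hypothetical. Each worker updates only its own rows, so no locking is needed.

```python
import threading


def apply_in_parallel(grid, func, num_workers):
    """Apply func to every element of a 2D list, one block of rows per worker."""

    def process_rows(first, last):
        # Each worker touches only rows first..last-1, its own partition.
        for r in range(first, last):
            for c in range(len(grid[r])):
                grid[r][c] = func(grid[r][c])

    base, extra = divmod(len(grid), num_workers)
    threads = []
    start = 0
    for w in range(num_workers):
        stop = start + base + (1 if w < extra else 0)
        threads.append(threading.Thread(target=process_rows, args=(start, stop)))
        start = stop
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return grid


if __name__ == "__main__":
    print(apply_in_parallel([[1, 2], [3, 4], [5, 6]], lambda x: x * 10, 2))
    # [[10, 20], [30, 40], [50, 60]]
```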

- 24. Parallel Algorithm Examples: Odd-Even Transposition Sort
  - The basic idea is bubble sort, but concurrently comparing odd-indexed elements with an adjacent element, then even-indexed elements.
  - With n elements in the array and n/2 processors, the algorithm is effectively O(n).

- 25. Parallel Algorithm Examples: Odd-Even Transposition Sort (worst-case scenario)
  - Initial array: 6, 5, 4, 3, 2, 1, 0
  - Phase 1: 6, 4, 5, 2, 3, 0, 1
  - Phase 2: 4, 6, 2, 5, 0, 3, 1
  - Phase 1: 4, 2, 6, 0, 5, 1, 3
  - Phase 2: 2, 4, 0, 6, 1, 5, 3
  - Phase 1: 2, 0, 4, 1, 6, 3, 5
  - Phase 2: 0, 2, 1, 4, 3, 6, 5
  - Phase 1: 0, 1, 2, 3, 4, 5, 6
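The phases can be simulated sequentially as in the sketch below. It assumes 0-based indexing with the first phase starting at index 1, which is the convention that reproduces the trace on the slide; the function name and the returned trace are illustrative additions. The inner loop visits disjoint pairs, which is why n/2 processors could perform one phase in a single step.

```python
def odd_even_transposition_sort(values):
    """Sort a copy of values; return (sorted_list, snapshot_after_each_phase)."""
    a = list(values)
    n = len(a)
    trace = []
    for phase in range(n):
        # Alternate between pairs starting at index 1 and pairs starting at 0.
        start = 1 if phase % 2 == 0 else 0
        # Pairs (i, i+1) within a phase never overlap, so they are independent.
        for i in range(start, n - 1, 2):
            if a[i] > a[i + 1]:
                a[i], a[i + 1] = a[i + 1], a[i]
        trace.append(list(a))
    return a, trace


if __name__ == "__main__":
    result, trace = odd_even_transposition_sort([6, 5, 4, 3, 2, 1, 0])
    for step in trace:
        print(step)
```

For the reversed seven-element array, the seven snapshots match the arrays listed on the slide, ending with the sorted array after the last phase.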

- 26. Other Parallelizable Problems
  - The n-body problem
  - Floyd’s Algorithm
    - Serial: O(n^3), Parallel: O(n log p)
  - Game Trees
  - Divide and Conquer Algorithms

- 27. Conclusion
  - Parallel computing is fast.
  - There are many different approaches and models of parallel computing.
  - Parallel computing is the future of computing.
