使用 PostgreSQL 及 MongoDB 從零開始建置社群必備的按讚追蹤功能

- 1. 使用 PostgreSQL 及 MongoDB 從零開始建 置社群必備的按讚追蹤功能 Kewang, Funliday

- 2. Kewang ● 王慕羣 Kewang ● Java / JavaScript ● HBase / PostgreSQL / MongoDB / Elasticsearch ● Git / DevOps ● 熱愛開源 Linkedin Linkedin kewangtw kewangtw SlideShare SlideShare kewang kewang Gmail Gmail cpckewang cpckewang Facebook Facebook Kewang 的資訊進化論 Kewang 的資訊進化論 devopsday taipei devopsday taipei '17 '17 hadoopcon hadoopcon '14 '15 '14 '15 jcconf jcconf '16 '17 '18 '16 '17 '18 modernweb modernweb '18 '19 '20 '18 '19 '20 GitHub GitHub kewang kewang Funliday Funliday kewang kewang coscup coscup '20 '20 mopcon mopcon '14 '20 '14 '20

- 4. 4 分享的內容

- 5. 5 這次會提到

- 7. 7 這次會提到 1. PostgreSQL table 設計 2. Redis lock 應用

- 8. 8 這次會提到 1. PostgreSQL table 設計 2. Redis lock 應用 3. Message Queue 發送通知

- 9. 9 這次會提到 1. PostgreSQL table 設計 2. Redis lock 應用 3. Message Queue 發送通知 4. MongoDB collection 設計

- 10. 10 這次不會提到

- 12. 12 這次不會提到 1. Facebook API 2. PostgreSQL 及 MongoDB 的維護及參數調校

- 13. 13 開始

- 14. 14 按讚前

- 15. 15 按讚後

- 16. 16 問題:如何設計資料庫?

- 20. 20 最直覺的作法:按讚

- 21. 21 最直覺的作法:按讚

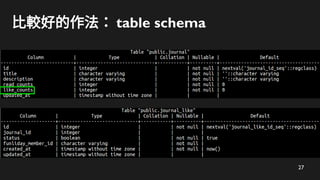

- 28. 28 比較好的作法:按讚

- 29. 29 比較好的作法:按讚

- 30. 30 比較好的作法:按讚

- 37. 37 問題:如何避免重複按讚?

- 38. 38 使用 lock

- 39. 39 Simple sequential diagram client A Redis client A'

- 40. 40 Simple sequential diagram client A Redis client A' acquire lock

- 41. 41 Simple sequential diagram client A Redis client A' acquire lock acquire success

- 42. 42 Simple sequential diagram client A Redis client A' acquire lock acquire success acquire lock

- 43. 43 Simple sequential diagram client A Redis client A' acquire lock acquire success acquire lock acquire fail

- 44. 44 Simple sequential diagram client A Redis client A' acquire lock acquire success acquire lock acquire fail write to DB

- 45. 45 Simple sequential diagram client A Redis client A' acquire lock acquire success acquire lock acquire fail write to DB release lock

- 46. 46 Simple sequential diagram client A Redis client A' acquire lock acquire success acquire lock acquire fail write to DB release lock release success

- 47. 47 Acquire lock

- 48. 48 Acquire lock

- 49. 49 Acquire lock 1. journalid_like : lock 時,同一篇 journal 僅允許按讚一次 2. like_memberid : lock 時,同一人僅允許按讚一次 3. journalid_like_memberid : lock 時,同一篇 journal 僅允許同一人按讚一次

- 50. 50 Acquire lock Set if key doesn’t exist 1. journalid_like : lock 時,同一篇 journal 僅允許按讚一次 2. like_memberid : lock 時,同一人僅允許按讚一次 3. journalid_like_memberid : lock 時,同一篇 journal 僅允許同一人按讚一次

- 51. 51 Acquire lock Set expire time Set if key doesn’t exist 1. journalid_like : lock 時,同一篇 journal 僅允許按讚一次 2. like_memberid : lock 時,同一人僅允許按讚一次 3. journalid_like_memberid : lock 時,同一篇 journal 僅允許同一人按讚一次

- 52. 52 Release lock

- 54. 54 Architecture diagram - wrong way client A server FCM client B

- 55. 55 Architecture diagram - wrong way client A server FCM like req. client B

- 56. 56 Architecture diagram - wrong way client A server FCM like req. client B FCM req.

- 57. 57 Architecture diagram - wrong way client A server FCM like req. like res. client B FCM req.

- 58. 58 Architecture diagram - wrong way client A server FCM like req. like res. client B send push FCM req.

- 59. 59 Architecture diagram - right way

- 60. 60 Architecture diagram - right way client A server MQ FCM client B worker

- 61. 61 Architecture diagram - right way client A server MQ FCM like req. client B worker

- 62. 62 Architecture diagram - right way client A server MQ FCM like req. produce job client B worker

- 63. 63 Architecture diagram - right way client A server MQ FCM like req. produce job like res. client B worker

- 64. 64 Architecture diagram - right way client A server MQ FCM like req. produce job like res. consume job client B worker

- 65. 65 Architecture diagram - right way client A server MQ FCM like req. produce job like res. consume job client B worker FCM req.

- 66. 66 Architecture diagram - right way client A server MQ FCM like req. produce job like res. consume job client B send push worker FCM req.

- 67. 67 Architecture diagram - MQ

- 68. 68 Architecture diagram - MQ producer broker consumer

- 69. 69 Architecture diagram - MQ producer broker consumer job

- 70. 70 Architecture diagram - MQ producer broker consumer job job

- 75. 75 改變資料庫設計

- 76. 76 改變資料庫設計

- 77. 77 改變資料庫設計

- 78. 78 改變資料庫設計

- 79. 79 共用 API

- 80. 80 共用 API

- 81. 81 共用 API

- 82. 82 共用 API

- 83. 83 MQ 有多種 producer 及 consumer

- 84. 84 MQ 有多種 producer 及 consumer trip producer product producer journal producer

- 85. 85 MQ 有多種 producer 及 consumer broker trip producer product producer journal producer

- 86. 86 MQ 有多種 producer 及 consumer broker trip consumer trip producer product producer journal producer product consumer journal consumer

- 87. 87 問題:如何追蹤使用者?

- 88. 88 需求

- 92. 92 追蹤使用者就是對使用者按讚

- 93. 93 追蹤使用者就是對使用者按讚

- 94. 94 追蹤使用者就是對使用者按讚

- 96. 96 首頁 layout 設計方式 feature list popular journal following

- 97. 97 首頁 layout 設計方式 feature list popular journal following

- 98. 98 首頁 layout 設計方式 feature list popular journal following 存在 MongoDB

- 99. 99 首頁 layout 設計方式 feature list popular journal following 個人化

- 100. 100 following layout 設計方式 feature list popular journal following

- 101. 101 following layout 設計方式 feature list popular journal following

- 102. 102 following layout 設計方式 feature list popular journal following 每日定期清理

- 103. 103 被追蹤者發文後要即時通知追蹤者

- 104. 104 被追蹤者發文後要即時通知追蹤者

- 105. 105 被追蹤者發文後要即時通知追蹤者

- 106. 106 番外篇: MQ 的應用

- 107. 107 MQ 的應用

- 108. 108 MQ 的應用 1.較耗時的工作

- 111. 111 MQ 不是想用就能用

- 115. 115 舉例:建立索引

- 116. 116 舉例:建立索引

- 117. 117 舉例:建立索引

- 119. 119 舉例:建立索引 client A server MQ Elasticsearch worker

- 120. 120 舉例:建立索引 client A server MQ Elasticsearch public req. worker

- 121. 121 舉例:建立索引 client A server MQ Elasticsearch public req. produce job worker

- 122. 122 舉例:建立索引 client A server MQ Elasticsearch public req. produce job public res. worker

- 123. 123 舉例:建立索引 client A server MQ Elasticsearch public req. produce job public res. consume job worker

- 124. 124 舉例:建立索引 client A server MQ Elasticsearch public req. produce job public res. consume job worker indexing content

- 125. 125 舉例:計算行程經過的城市

- 126. 126 舉例:計算行程經過的城市

- 128. 128 舉例:計算行程經過的城市 client A server MQ PostgreSQL worker

- 129. 129 舉例:計算行程經過的城市 client A server MQ PostgreSQL add POI req. worker

- 130. 130 舉例:計算行程經過的城市 client A server MQ PostgreSQL add POI req. produce job worker

- 131. 131 舉例:計算行程經過的城市 client A server MQ PostgreSQL add POI req. produce job add POI res. worker

- 132. 132 舉例:計算行程經過的城市 client A server MQ PostgreSQL add POI req. produce job add POI res. consume job worker

- 133. 133 舉例:計算行程經過的城市 client A server MQ PostgreSQL add POI req. produce job add POI res. consume job worker query cities from trip

- 134. 134 Conclusion

- 135. 135 Conclusion

- 138. 138 Conclusion 1.為了讓系統變快,反正規化是必要之惡 2.慎選 lock key 3.善用 MQ 可以加快系統效能

- 139. 139 工商時間

- 141. 141 References 1. Distributed locks with Redis 2. The Architecture Twitter Uses To Deal With 150M Active Users, 300K QPS, A 22 MB/S Firehose, And Send Tweets In Under 5 Se conds 3. BullMQ - Premium Message Queue for NodeJS based on Redis

- 142. 142