Programming using MPI and OpenMP

- 1. Programming using MPI & OpenMP HIGH PERFORMANCE COMPUTING MODULE 4 DIVYA TIWARI MEIT TERNA ENGINEERING COLLEGE

- 2. INTRODUCTION • Parallel computing has made a tremendous impact on a variety of areas from computational simulations for scientific and engineering applications to commercial applications in data mining and transaction processing. • Numerous programming languages and libraries have been developed for explicit parallel programming. • They differ - in their view of the address space that they make available to the programmer - the degree of synchronization imposed on concurrent activities - the multiplicity of programs. • The message-passing programming (MPI) is one of the oldest and most widely used approaches for programming parallel computers.

- 3. Principles Key Atrributes of MPI Assumes partitioned address space Supports only explicit parallelization • Implications of Partitioned address space: 1. Each data must belong to one of the partition of the space. 2. Interaction requires co-operation of two processes (the process that has the data and process that wants to access the data.) • Explicit Parallelization: Programmer is responsible for analysing underlying serial algorithm/application and identifying ways by which he or she can decompose the computations and extract concurrency.

- 4. Structure of Message Passing Program • Message-passing programs are often written using: 1. Asynchronous 2. Loosely Synchronous • In its most general form, the message-passing paradigm supports execution of a different program on each of the p processes. • Most message-passing programs are written using the single program multiple data (SPMD) approach.

- 5. Building Blocks: Send and Receive Operations • Communication between the processes are accomplished by sending and receiving messages. • Basic operations in message passing program are send and receive. • Example: send(void *sendbuf, int nelems, int dest) receive(void *recvbuf, int nelems, int source) sendbuf - points to a buffer that stores the data to be sent. recvbuf - points to a buffer that stores the data to be received. nelems - is the number of data units to be sent and received. dest - is the identifier of the process that receives the data. source - is the identifier of the process that sends the data.

- 6. • Example: A process sending a piece of data to another process send(void *sendbuf, int nelems, int dest) receive(void *recvbuf, int nelems, int source) 1 P0 P1 2 3 a = 100; receive(&a, 1, 0) 4 send(&a, 1, 1); printf("%dn", a); 5 a=0;

- 7. • In message passing operation there are two types: 1. Blocking Message Passing Operations. i. Blocking Non-Buffered Send/Receive ii. Blocking Buffered Send/Receive 2. Non-Blocking Message Passing Operations.

- 8. Blocking Message Passing Operations i. Blocking Non-Buffered Send/Receive

- 9. ii. Blocking Buffered Send/Receive a. In the presence of communication hardware with buffers at send and receive ends b. In the absence of communication hardware sender interrupts receiver and deposits data in buffer at receiver end

- 10. Non-Blocking Message Passing Operations Space of possible protocols for send and receive operations.

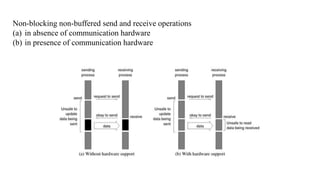

- 11. Non-blocking non-buffered send and receive operations (a) in absence of communication hardware (b) in presence of communication hardware

- 12. MPI: the Message Passing Interface • Message-passing architecture is used in parallel computer due to its lower cost relative to shared-address-space architectures. • Message-passing is the natural programming paradigm for these machines leads to development of different message-passing libraries. • These message-passing libraries works well in vendors own hardware but was incompatible with parallel computers offered by other vendors. • Difference between libraries are mostly syntactic but have some serious semantic differences which require significant re-engineering to port a message-passing program from one library to another. • The message-passing interface, or MPI as it is commonly known, was created to essentially solve this problem.

- 13. • The MPI library contains over 125 routines, but the number of key concepts is much smaller. • It is possible to write fully-functional message-passing programs by using only the six routines shown below: 1. MPI_Init : Initializes MPI. 2. MPI_Finalize : Terminates MPI. 3. MPI_Comm_size : Determines the number of processes. 4. MPI_Comm_rank : Determines the label of the calling process. 5. MPI_Send : Sends a message. 6. MPI_Recv : Receives a message.

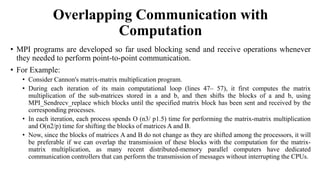

- 14. Overlapping Communication with Computation • MPI programs are developed so far used blocking send and receive operations whenever they needed to perform point-to-point communication. • For Example: • Consider Cannon's matrix-matrix multiplication program. • During each iteration of its main computational loop (lines 47– 57), it first computes the matrix multiplication of the sub-matrices stored in a and b, and then shifts the blocks of a and b, using MPI_Sendrecv_replace which blocks until the specified matrix block has been sent and received by the corresponding processes. • In each iteration, each process spends O (n3/ p1.5) time for performing the matrix-matrix multiplication and O(n2/p) time for shifting the blocks of matrices A and B. • Now, since the blocks of matrices A and B do not change as they are shifted among the processors, it will be preferable if we can overlap the transmission of these blocks with the computation for the matrix- matrix multiplication, as many recent distributed-memory parallel computers have dedicated communication controllers that can perform the transmission of messages without interrupting the CPUs.

- 15. Non-Blocking Communication Operations • MPI provide pairs of functions for performing non-blocking send and receive operations to overlap communication with computation. • These functions are: • MPI_Isend: MPI_Isend starts a send operation but does not complete, that is, it returns before the data is copied out of the buffer. • MPI_Irecv: MPI_Irecv starts a receive operation but returns before the data has been received and copied into the buffer. • MPI_Test: MPI_TEST tests whether or not a non-blocking operation has finished. • MPI_Wait: waits (i.e., gets blocked) until a non-blocking operation actually finishes. • With the support of appropriate hardware, the transmission and reception of messages can proceed concurrently with the computations performed by the program upon the return of the above functions.

- 16. Collective Communication and Computation Operation • MPI provides an extensive set of functions for performing many commonly used collective communication operations. • All of the collective communication functions provided by MPI take as an argument a communicator that defines the group of processes that participate in the collective operation. • All the processes that belong to this communicator participate in the operation, and all of them must call the collective communication function. • Even though collective communication operations do not act like barriers (i.e., it is possible for a processor to go past its call for the collective communication operation even before other processes have reached it), it acts like a virtual synchronization step in the following sense: the parallel program should be written such that it behaves correctly even if a global synchronization is performed before and after the collective call.

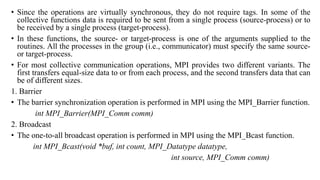

- 17. • Since the operations are virtually synchronous, they do not require tags. In some of the collective functions data is required to be sent from a single process (source-process) or to be received by a single process (target-process). • In these functions, the source- or target-process is one of the arguments supplied to the routines. All the processes in the group (i.e., communicator) must specify the same source- or target-process. • For most collective communication operations, MPI provides two different variants. The first transfers equal-size data to or from each process, and the second transfers data that can be of different sizes. 1. Barrier • The barrier synchronization operation is performed in MPI using the MPI_Barrier function. int MPI_Barrier(MPI_Comm comm) 2. Broadcast • The one-to-all broadcast operation is performed in MPI using the MPI_Bcast function. int MPI_Bcast(void *buf, int count, MPI_Datatype datatype, int source, MPI_Comm comm)

- 18. 3. Reduction • The all-to-one reduction operation is performed in MPI using the MPI_Reduce function. int MPI_Reduce(void *sendbuf, void *recvbuf, int count, MPI_Datatype datatype, MPI_Op op, int target, MPI_Comm comm) 4. Prefix • The prefix-sum operation is performed in MPI using the MPI_Scan function. int MPI_Scan(void *sendbuf, void *recvbuf, int count, MPI_Datatype datatype, MPI_Op op, MPI_Comm comm) 5. Gather • The gather operation is performed in MPI using the MPI_Gather function. int MPI_Gather(void *sendbuf, int sendcount, MPI_Datatype senddatatype, void *recvbuf, int recvcount, MPI_Datatype recvdatatype, int target, MPI_Comm comm)

- 19. 6. Scatter • The scatter operation described is performed in MPI using the MPI_Scatter function. int MPI_Scatter(void *sendbuf, int sendcount, MPI_Datatype senddatatype, void *recvbuf, int recvcount, MPI_Datatype recvdatatype, int source, MPI_Comm comm) 7. All-to-All • The all-to-all personalized communication operation described in Section 4.5 is performed in MPI by using the MPI_Alltoall function. int MPI_Alltoall(void *sendbuf, int sendcount, MPI_Datatype senddatatype, void *recvbuf, int recvcount, MPI_Datatype recvdatatype, MPI_Comm comm)

- 20. OpenMP Parallel Programming Model • OpenMP is an API that can be used with FORTRAN, C, and C++ for programming shared address space machines. • OpenMP directives provide support for concurrency, synchronization and data handling while obviating the need for explicitly setting up mutexes, condition variables, data scope, and initialization. • OpenMP directives in C and C++ are based on the #pragma compiler directives. The directive itself consists of a directive name followed by clauses. 1 #pragma omp directive [clause list] • OpenMP programs execute serially until they encounter the parallel directive.

- 21. • parallel directive is responsible for creating a group of threads. • The main thread that encounters the parallel directive becomes the master of this group of threads and is assigned the thread id 0 within the group. • The parallel directive has the following prototype: 1 #pragma omp parallel [clause list] 2 /* structured block */ 3 • Each thread created by this directive executes the structured block specified by the parallel directive. • The clause list is used to specify conditional parallelization, number of threads, and data handling. • Conditional Parallelization: The clause if (scalar expression) determines whether the parallel construct results in creation of threads. Only one if clause can be used with a parallel directive. • Degree of Concurrency: The clause num_threads (integer expression) specifies the number of threads that are created by the parallel directive.

- 22. • Data Handling: The clause private (variable list) indicates that the set of variables specified is local to each thread – i.e., each thread has its own copy of each variable in the list. • The clause firstprivate (variable list) is similar to the private clause, except the values of variables on entering the threads are initialized to corresponding values before the parallel directive. • The clause shared (variable list) indicates that all variables in the list are shared across all the threads, i.e., there is only one copy. Special care must be taken while handling these variables by threads to ensure serializability.

- 23. A sample OpenMP program along with its Pthreads translation that might be performed by an OpenMP compiler.

- 24. • Given below are powerful set of OpenMP compiler directives: 1. parallel: which precedes a block of code to be executed in parallel by multiple threads. 2. for: which precedes a for loop with independent iterations that may be divided among threads executing in parallel. 3. parallel for: a combination of the parallel and for directives. 4. sections: which precedes a series of blocks that may be executed in parallel. 5. parallel sections: a combination of the parallel and sections directives. 6. critical: which precedes a critical section. 7. single: which precedes a code block to be executed by a single thread.

- 25. Shared Memory Model • Processors interact and synchronize with each other through shared variables.

- 26. Fork/Join Parallelism • Initially only master thread is active. • Master thread executes sequential code. • Fork: Master thread creates or awakens additional threads to execute parallel code. • Join: At end of parallel code created threads die or are suspended.

- 27. Parallel for Loops • C programs often express data-parallel operations as for loops for (i = first; i < size; i += prime) marked[i] = 1; • OpenMP makes it easy to indicate when the iterations of a loop may execute in parallel. • Compiler takes care of generating code that forks/joins threads and allocates the iterations to threads

- 28. Shared and Private Variables • Shared variable: has same address in execution context of every thread. • Private variable: has different address in execution context of every thread. • A thread cannot access the private variables of another thread.

- 29. Function omp_get_num_procs • Returns number of physical processors available for use by the parallel program int omp_get_num_procs (void) Function omp_set_num_threads • Uses the parameter value to set the number of threads to be active in parallel sections of code. • May be called at multiple points in a program. void omp_set_num_threads (int t)

- 30. MU Exam Questions May 2018 • Explain the concept of shared memory programming. 5 marks • Explain in brief about Performance bottleneck, Data Race and Determinism, Data Race Avoidance and Deadlock Avoidance. 10 marks Dec 2018 • Discuss the term collective communication in MPI. 5 marks • Differentiate between buffered blocking and non-buffered blocking message passing operation in MPI. 10 marks May 2019 • Discuss the term collective communication in MPI. 5 marks • Differentiate between buffered blocking and non-buffered blocking message passing operation in MPI. 10 marks • Write a small program demonstrating functional and compiler directives in OpenMP paradigm and MP paradigm.

- 31. Research Paper

![OpenMP Parallel Programming Model

• OpenMP is an API that can be used with FORTRAN, C, and C++ for programming shared

address space machines.

• OpenMP directives provide support for concurrency, synchronization and data handling

while obviating the need for explicitly setting up mutexes, condition variables, data scope,

and initialization.

• OpenMP directives in C and C++ are based on the #pragma compiler directives. The

directive itself consists of a directive name followed by clauses.

1 #pragma omp directive [clause list]

• OpenMP programs execute serially until they encounter the parallel directive.](https://image.slidesharecdn.com/programmingusingmpiandopenmp-210924144434/85/Programming-using-MPI-and-OpenMP-20-320.jpg)

![• parallel directive is responsible for creating a group of threads.

• The main thread that encounters the parallel directive becomes the master of this group of

threads and is assigned the thread id 0 within the group.

• The parallel directive has the following prototype:

1 #pragma omp parallel [clause list]

2 /* structured block */

3

• Each thread created by this directive executes the structured block specified by the parallel

directive.

• The clause list is used to specify conditional parallelization, number of threads, and data

handling.

• Conditional Parallelization: The clause if (scalar expression) determines whether the

parallel construct results in creation of threads. Only one if clause can be used with a

parallel directive.

• Degree of Concurrency: The clause num_threads (integer expression) specifies the

number of threads that are created by the parallel directive.](https://image.slidesharecdn.com/programmingusingmpiandopenmp-210924144434/85/Programming-using-MPI-and-OpenMP-21-320.jpg)

![Parallel for Loops

• C programs often express data-parallel operations as for loops

for (i = first; i < size; i += prime)

marked[i] = 1;

• OpenMP makes it easy to indicate when the iterations of a loop may execute in

parallel.

• Compiler takes care of generating code that forks/joins threads and allocates the

iterations to threads](https://image.slidesharecdn.com/programmingusingmpiandopenmp-210924144434/85/Programming-using-MPI-and-OpenMP-27-320.jpg)