Sequence to sequence (encoder-decoder) learning


The document discusses various neural network models, including RNNs, LSTMs, and GRUs, focusing on their capabilities to handle sequence-to-sequence tasks. It highlights challenges in long-term dependencies and variable input sizes while outlining the use of attention mechanisms in machine translation and encoder-decoder architectures. Additionally, it explores applications like chatbots and image captioning, and suggests future directions for sequence-to-sequence learning.



![padding](https://image.slidesharecdn.com/seq2seqfinalroberto-170201172152/85/Sequence-to-sequence-encoder-decoder-learning-27-320.jpg)

padding

Source: http://suriyadeepan.github.io/

EOS : End of sentence

PAD : Filler

GO : Start decoding

UNK : Unknown; word not in vocabulary

Q : "What time is it?"

A : "It is seven thirty."

Q : [ PAD, PAD, PAD, PAD, PAD, "?", "it", "is", "time", "What" ]

A : [ GO, "It", "is", "seven", "thirty", ".", EOS, PAD, PAD, PAD ]
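The padded Q and A sequences above can be reproduced with a few lines of Python. This is only a minimal sketch: the function names `encode_question` and `encode_answer`, the optional `vocab` argument, and the fixed length of 10 are assumptions made for illustration and do not come from the slides. It assumes, as the example suggests, that the question is reversed and padded on the left, while the answer is wrapped in GO and EOS and padded on the right.

```python
# Special tokens from the slide.
PAD, GO, EOS, UNK = "PAD", "GO", "EOS", "UNK"


def replace_unknown(tokens, vocab):
    """Map tokens missing from the vocabulary to UNK (no-op if vocab is None)."""
    if vocab is None:
        return list(tokens)
    return [t if t in vocab else UNK for t in tokens]


def encode_question(tokens, length, vocab=None):
    """Reverse the input tokens and left-pad with PAD up to `length`."""
    tokens = replace_unknown(tokens, vocab)
    if len(tokens) > length:
        raise ValueError("question is longer than the target length")
    return [PAD] * (length - len(tokens)) + tokens[::-1]


def encode_answer(tokens, length, vocab=None):
    """Wrap the tokens in GO ... EOS and right-pad with PAD up to `length`."""
    seq = [GO] + replace_unknown(tokens, vocab) + [EOS]
    if len(seq) > length:
        raise ValueError("answer is longer than the target length")
    return seq + [PAD] * (length - len(seq))


if __name__ == "__main__":
    print(encode_question(["What", "time", "is", "it", "?"], 10))
    print(encode_answer(["It", "is", "seven", "thirty", "."], 10))
```

Passing a `vocab` set that lacks a word replaces that word with UNK before padding, which is one plausible way to apply the UNK token listed above.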

![bucketing](https://image.slidesharecdn.com/seq2seqfinalroberto-170201172152/85/Sequence-to-sequence-encoder-decoder-learning-28-320.jpg)

bucketing

Source: https://www.tensorflow.org/

Efficiently handle sentences of different lengths

Ex: the longest sentence in the corpus has 100 tokens

How about short sentences like: "How are you?" → lots of PAD

Bucket list: [(5, 10), (10, 15), (20, 25), (40, 50)]

(default in TensorFlow's translate.py)

Q : [ PAD, PAD, ".", "go", "I" ]

A : [ GO, "Je", "vais", ".", EOS, PAD, PAD, PAD, PAD, PAD ]
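One way to sketch the bucketing idea in Python is shown below. The bucket list is the one quoted on the slide; the helper names `pick_bucket` and `bucket_and_pad`, and the exact fitting rule (source length at most the bucket's input size, target length plus GO and EOS at most its output size), are assumptions for illustration and are not claimed to match translate.py line for line.

```python
# Special tokens and the bucket list from the slide: (input size, output size).
PAD, GO, EOS = "PAD", "GO", "EOS"
BUCKETS = [(5, 10), (10, 15), (20, 25), (40, 50)]


def pick_bucket(source, target, buckets=BUCKETS):
    """Return the index of the first (smallest) bucket that fits the pair, or None."""
    for i, (src_size, tgt_size) in enumerate(buckets):
        # The decoder side needs two extra slots for GO and EOS.
        if len(source) <= src_size and len(target) + 2 <= tgt_size:
            return i
    return None


def bucket_and_pad(source, target, buckets=BUCKETS):
    """Pad a (source, target) pair to the sizes of the smallest fitting bucket."""
    idx = pick_bucket(source, target, buckets)
    if idx is None:
        raise ValueError("pair does not fit in any bucket")
    src_size, tgt_size = buckets[idx]
    # Encoder input: reversed and left-padded, as in the slide's Q example.
    enc = [PAD] * (src_size - len(source)) + list(source)[::-1]
    # Decoder input: GO ... EOS, right-padded, as in the slide's A example.
    dec = [GO] + list(target) + [EOS]
    dec = dec + [PAD] * (tgt_size - len(dec))
    return enc, dec


if __name__ == "__main__":
    enc, dec = bucket_and_pad(["I", "go", "."], ["Je", "vais", "."])
    print(enc)
    print(dec)
```

With a single fixed length of 100, a four-token input such as "How are you ?" would carry 96 PAD tokens; in the (5, 10) bucket it carries only one.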

Sequence to sequence (encoder-decoder) learning

- 2. Hello! I am Roberto Silveira, EE engineer, ML enthusiast. @rsilveira79

- 3. Sequence Is a matter of time

- 4. RNN Is what you need!

- 5. Basic recurrent cells (RNN) Source: http://colah.github.io/ Issues × Difficulty dealing with long-term dependencies × Difficult to train (vanishing-gradient issues)

- 6. Long term issues Source: http://colah.github.io/, CS224d notes Sentence 1 "Jane walked into the room. John walked in too. Jane said hi to ___" Sentence 2 "Jane walked into the room. John walked in too. It was late in the day, and everyone was walking home after a long day at work. Jane said hi to ___"

- 7. LSTM in 2 min... Review × Addresses long-term dependencies × More complex to train × Very powerful, lots of data Source: http://colah.github.io/

- 8. LSTM in 2 min... Review × Addresses long-term dependencies × More complex to train × Very powerful, lots of data. Components: cell state, forget gate, input gate, output gate. Source: http://colah.github.io/

- 9. Gated recurrent unit (GRU) in 2 min... Review × Fewer hyperparameters × Trains faster × Better solution w/ less data Source: http://www.wildml.com/, arXiv:1412.3555

- 10. Gated recurrent unit (GRU) in 2 min... Review × Fewer hyperparameters × Trains faster × Better solution w/ less data. Gates: reset gate, update gate. Source: http://www.wildml.com/, arXiv:1412.3555

- 12. Variable size input - output Source: http://karpathy.github.io/


- 14. Basic idea: "variable" size input (encoder) -> fixed size vector representation -> "variable" size output (decoder). Input, one word at a time: "Machine", "Learning", "is", "fun" -> first RNN (encoder), a stateful model in which the memory of the previous word influences the next result -> encoded sequence (0.636 0.122 0.981) -> second RNN (decoder), a stateful model in which the memory of the previous word influences the next result -> output, one word at a time: "Aprendizado", "de", "Máquina", "é", "divertido"
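As a rough illustration of the basic idea on slide 14, the sketch below runs a toy tanh RNN encoder over a variable number of input vectors, keeps only its final fixed-size state, and unrolls a second toy RNN from that state for any requested number of steps. Everything in it is an assumption made for illustration and not taken from the slides: the function names, the random untrained weights, the hidden size of 3, the embedding size of 2, and the shortcut of feeding the decoder's previous output vector back in (a trained decoder would instead predict a word and feed back that word's embedding, stopping at EOS).

```python
import math
import random


def rand_matrix(rows, cols, rng):
    """Random weights in [-1, 1]; a trained model would learn these."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Plain matrix-vector product on nested lists."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_step(state, x, w_state, w_in):
    """One tanh RNN step: new_state = tanh(W_state @ state + W_in @ x)."""
    pre = [a + b for a, b in zip(matvec(w_state, state), matvec(w_in, x))]
    return [math.tanh(p) for p in pre]


def encode(inputs, w_state, w_in):
    """Fold a sequence of any length into one fixed-size state vector."""
    state = [0.0] * len(w_state)
    for x in inputs:
        state = rnn_step(state, x, w_state, w_in)
    return state


def decode(state, steps, w_state, w_in, w_out):
    """Unroll the decoder from the encoded state for `steps` outputs."""
    prev = [0.0] * len(w_out)  # all-zero vector standing in for the GO token
    outputs = []
    for _ in range(steps):
        state = rnn_step(state, prev, w_state, w_in)
        prev = matvec(w_out, state)  # simplified: output vector is fed back
        outputs.append(prev)
    return outputs


rng = random.Random(0)
HIDDEN, EMB = 3, 2  # hypothetical sizes chosen for the example
enc_w_state = rand_matrix(HIDDEN, HIDDEN, rng)
enc_w_in = rand_matrix(HIDDEN, EMB, rng)
dec_w_state = rand_matrix(HIDDEN, HIDDEN, rng)
dec_w_in = rand_matrix(HIDDEN, EMB, rng)
dec_w_out = rand_matrix(EMB, HIDDEN, rng)

# Four random vectors standing in for "Machine", "Learning", "is", "fun".
source = [[rng.uniform(-1.0, 1.0) for _ in range(EMB)] for _ in range(4)]
encoded = encode(source, enc_w_state, enc_w_in)
outputs = decode(encoded, 5, dec_w_state, dec_w_in, dec_w_out)
print(len(source), len(encoded), len(outputs))  # prints "4 3 5"
```

The point of the sketch is only the shapes: four inputs and five outputs are connected through a single three-number vector, which is the bottleneck that the attention slides later address.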

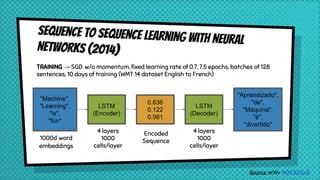

- 15. Sequence to Sequence Learning with Neural Networks (2014) Source: arXiv 1409.3215v3 "Machine", "Learning", "is", "fun" -> LSTM (encoder), 4 layers, 1000 cells/layer -> encoded sequence (0.636 0.122 0.981) -> LSTM (decoder), 4 layers, 1000 cells/layer -> "Aprendizado", "de", "Máquina", "é", "divertido". 1000d word embeddings. TRAINING → SGD w/o momentum, fixed learning rate of 0.7, 7.5 epochs, batches of 128 sentences, 10 days of training (WMT 14 dataset, English to French)

- 16. Recurrent encoder-decoders Les chiens aiment les os <EOS> Dogs love bones Dogs love bones <EOS> Source Sequence Target Sequence Source: arXiv 1409.3215v3

- 17. Recurrent encoder-decoders Les chiens aiment les os <EOS> Dogs love bones Dogs love bones <EOS> Source: arXiv 1409.3215v3

- 18. Recurrent encoder-decoders Les chiens aiment les os <EOS> Dogs love bones Dogs love bones <EOS> Source: arXiv 1409.3215v3

- 19. Recurrent encoder-decoders - issues ● Difficult to cope with long sentences (longer than those in the training corpus) ● Decoder w/ attention mechanism → relieves the encoder from having to squash the whole source sentence into a fixed-length vector Source: arXiv 1409.3215v3

- 20. NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE (2015) Source: arXiv 1409.0473v7 Decoder Context vector for each target word Weights of each annotation h_j

- 21. NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE (2015) Source: arXiv 1409.0473v7 Decoder Context vector for each target word Weights of each annotation h_j Non-monotonic alignment
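
A minimal sketch of how a context vector can be built for one target word: score every encoder annotation against the current decoder state, turn the scores into weights with a softmax, and take the weighted sum. One assumption to note: the paper scores annotations with a small feedforward alignment network, while this sketch swaps in a plain dot product to stay short. The function names are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_context(decoder_state, annotations):
    """Return (weights, context) for one decoding step.

    annotations: one vector per source word (the encoder outputs).
    Scoring uses a dot product, a simplification of the paper's alignment model.
    """
    scores = [sum(s * a for s, a in zip(decoder_state, h_j)) for h_j in annotations]
    weights = softmax(scores)
    dim = len(annotations[0])
    context = [sum(w * h_j[d] for w, h_j in zip(weights, annotations))
               for d in range(dim)]
    return weights, context

# Two toy annotations; the decoder state points strongly at the first one.
weights, context = attention_context([10.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```

Because the weights are recomputed at every decoding step, the decoder can look at source words in any order, which is what allows non-monotonic alignment.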

- 22. Attention models for NLP Source: arXiv 1409.0473v7 Les chiens aiment les os <EOS> + <EOS>

- 23. Attention models for NLP Source: arXiv 1409.0473v7 Les chiens aiment les os <EOS> + <EOS> Dogs

- 24. Attention models for NLP Source: arXiv 1409.0473v7 Les chiens aiment les os <EOS> + <EOS> Dogs Dogs love +

- 25. Attention models for NLP Source: arXiv 1409.0473v7 Les chiens aiment les os <EOS> + <EOS> Dogs Dogs love + love bones+

- 26. Challenges in using the model ● Cannot handle true variable size input → PADDING ● Hard to deal with both short and long sentences → BUCKETING ● Capture context and semantic meaning → WORD EMBEDDINGS Source: http://suriyadeepan.github.io/

- 27. padding Source: http://suriyadeepan.github.io/ EOS : End of sentence PAD : Filler GO : Start decoding UNK : Unknown; word not in vocabulary Q : "What time is it? " A : "It is seven thirty." Q : [ PAD, PAD, PAD, PAD, PAD, “?”, “it”,“is”, “time”, “What” ] A : [ GO, “It”, “is”, “seven”, “thirty”, “.”, EOS, PAD, PAD, PAD ]
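
The padding scheme on this slide can be sketched as follows. The `tokenize` and `pad_pair` helpers are hypothetical, as are the regular-expression tokenizer and the optional `vocab` argument; the question is reversed and left-padded, and the answer is wrapped in GO and EOS and right-padded, as in the example above.

```python
import re

PAD, GO, EOS, UNK = "PAD", "GO", "EOS", "UNK"

def tokenize(text):
    """Split into words and single punctuation marks (a simplistic assumption)."""
    return re.findall(r"\w+|[^\w\s]", text)

def pad_pair(question, answer, enc_len, dec_len, vocab=None):
    """Return (encoder_input, decoder_input) as fixed-length token lists."""
    q, a = tokenize(question), tokenize(answer)
    if vocab is not None:
        # Words outside the vocabulary are replaced by UNK.
        q = [t if t in vocab else UNK for t in q]
        a = [t if t in vocab else UNK for t in a]
    if len(q) > enc_len or len(a) + 2 > dec_len:
        raise ValueError("sentence does not fit the requested lengths")
    enc = [PAD] * (enc_len - len(q)) + q[::-1]   # reversed, padded on the left
    dec = [GO] + a + [EOS]
    dec += [PAD] * (dec_len - len(dec))          # padded on the right
    return enc, dec

enc, dec = pad_pair("What time is it? ", "It is seven thirty.", 10, 10)
```

With both lengths set to 10, `enc` and `dec` reproduce the Q and A lists shown on the slide.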

- 28. Source: https://www.tensorflow.org/ bucketing Efficiently handle sentences of different lengths Ex: the longest sentence in the corpus has 100 tokens How about short sentences like: "How are you?" → lots of PAD Bucket list: [(5, 10), (10, 15), (20, 25), (40, 50)] (default in TensorFlow translate.py) Q : [ PAD, PAD, “.”, “go”,“I”] A : [GO "Je" "vais" "." EOS PAD PAD PAD PAD PAD]
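
A minimal sketch of bucketing with the bucket list from this slide. The `bucket_and_pad` helper is hypothetical, and so is its fitting rule (the source must fit the encoder size, and the target plus GO and EOS must fit the decoder size); it is not claimed to match the exact check in translate.py.

```python
PAD, GO, EOS = "PAD", "GO", "EOS"
BUCKETS = [(5, 10), (10, 15), (20, 25), (40, 50)]  # (encoder size, decoder size)

def bucket_and_pad(src, tgt, buckets=BUCKETS):
    """Pick the smallest bucket that fits the pair, then pad to its sizes.

    Returns (bucket, encoder_input, decoder_input), or None if no bucket fits.
    """
    for enc_size, dec_size in buckets:
        if len(src) <= enc_size and len(tgt) + 2 <= dec_size:
            enc = [PAD] * (enc_size - len(src)) + src[::-1]  # reversed, left-padded
            dec = [GO] + tgt + [EOS]
            dec += [PAD] * (dec_size - len(dec))             # right-padded
            return (enc_size, dec_size), enc, dec
    return None  # too long for every bucket; the caller decides what to do

bucket, enc, dec = bucket_and_pad(["I", "go", "."], ["Je", "vais", "."])
```

For "I go ." the first bucket is selected, so only 2 PAD symbols are needed on the encoder side instead of the 97 that padding to a 100-token maximum would require.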

- 29. Word embeddings (remember previous presentation ;-) Distributed representations → syntactic and semantic information is captured "Take" = [0.286, 0.792, -0.177, -0.107, 0.109, -0.542, 0.349, 0.271]

- 30. Word embeddings (remember previous presentation ;-) Linguistic regularities (recap)
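
As a recap of linguistic regularities, the sketch below solves a word analogy by vector arithmetic and cosine similarity. The 2-d vectors are made up for illustration (axes loosely meaning royalty and gender); real embeddings are learned and have hundreds of dimensions. The `analogy` helper is hypothetical.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

# Made-up toy vectors, NOT taken from any trained model.
TOY = {
    "king": [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man": [0.0, 1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.1],
}

def analogy(a, b, c, vectors):
    """Return the word closest to vec(b) - vec(a) + vec(c), excluding a, b and c."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

# "man is to king as woman is to ...?"
result = analogy("man", "king", "woman", TOY)
```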

- 31. Phrase representations (Paper - Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation) Source: arXiv 1406.1078v3

- 32. Phrase representations (Paper - Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation) Source: arXiv 1406.1078v3 1000d vector representation

- 33. applications

- 34. Neural conversational model - chatbots Source: arXiv 1506.05869v3

- 36. Google Smart Reply Source: arXiv 1606.04870v1 Interesting facts ● Currently responsible for 10% of Inbox replies ● Training set of 238 million messages

- 37. Google Smart Reply Source: arXiv 1606.04870v1 Seq2Seq model Interesting facts ● Currently responsible for 10% of Inbox replies ● Training set of 238 million messages Feedforward triggering model Semi-supervised semantic clustering

- 38. Image captioning (Paper - Show and Tell: A Neural Image Caption Generator) Source: arXiv 1411.4555v2

- 39. Image captioning (Paper - Show and Tell: A Neural Image Caption Generator) Encoder Decoder Source: arXiv 1411.4555v2

- 41. Multi-task sequence to sequence (Paper - MULTI-TASK SEQUENCE TO SEQUENCE LEARNING) Source: arXiv 1511.06114v4 One-to-Many (common encoder) Many-to-One (common decoder) Many-to-Many

- 42. Neural programmer (Paper - NEURAL PROGRAMMER: INDUCING LATENT PROGRAMS WITH GRADIENT DESCENT) Source: arXiv 1511.04834v3

- 43. Unsupervised pre-training for seq2seq - 2017 (Paper - UNSUPERVISED PRETRAINING FOR SEQUENCE TO SEQUENCE LEARNING) Source: arXiv 1611.02683v1

- 44. Unsupervised pre-training for seq2seq - 2017 (Paper - UNSUPERVISED PRETRAINING FOR SEQUENCE TO SEQUENCE LEARNING) Source: arXiv 1611.02683v1 Pre-trained Pre-trained

- 46. A quick example on TensorFlow