Software coding & testing, software engineering

- 2. Coding Phase • Coding is undertaken once the design phase is complete. • During the coding phase: – every module identified in the design document is coded and unit tested. • Unit testing: – testing of different modules of a system in isolation.

- 3. Unit Testing – Why test each module in isolation first, – then integrate the modules and test the set of modules again? – Why not just test the integrated set of modules once, thoroughly?

- 4. Unit Testing It is a good idea to test modules in isolation before they are integrated: it makes debugging easier.

- 5. Unit Testing • If an error is detected when several modules are being tested together, it is difficult to determine which module contains the error. • Another reason: the modules with which this module needs to interface may not be ready yet.
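A minimal C sketch of unit testing in isolation, assuming a hypothetical invoice module whose tax-rate dependency is not ready yet. A stub (`get_tax_rate_percent`) replaces the missing module with a fixed answer, and a driver (`test_compute_invoice_total`) calls the unit and checks its results. All names are illustrative, not taken from the source.

```c
#include <assert.h>

/* Interface of a tax module that is assumed not to be ready yet
   (hypothetical name). Rates are whole percentages. */
int get_tax_rate_percent(int region_code);

/* Module under test: adds tax to a net amount given in cents. */
int compute_invoice_total(int net_cents, int region_code)
{
    return net_cents + net_cents * get_tax_rate_percent(region_code) / 100;
}

/* Stub: stands in for the unfinished tax module and returns a fixed,
   known rate so the expected results are easy to work out. */
int get_tax_rate_percent(int region_code)
{
    (void)region_code;  /* the stub ignores the region */
    return 25;
}

/* Driver: feeds chosen inputs to the module under test and checks the
   outputs. Because the stub is trivial, a failure here points at
   compute_invoice_total itself. */
void test_compute_invoice_total(void)
{
    assert(compute_invoice_total(10000, 1) == 12500);
    assert(compute_invoice_total(0, 7) == 0);
}
```

A driver like this would typically be called from a small test `main`; once the real tax module exists, the stub is removed and the same checks can be rerun during integration.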

- 6. Integration Testing • After all modules of a system have been coded and unit tested: –integration of modules is done • according to an integration plan.

- 7. Integration Testing • The full product takes shape: – only after all the modules have been integrated. • Modules are integrated together according to an integration plan: – involves integration of the modules through a number of steps.

- 8. Integration Testing • During each integration step, –a number of modules are added to the partially integrated system • and the system is tested. • Once all modules have been integrated and tested, –system testing can start.

- 9. System Testing • During system testing: –the fully integrated system is tested against the requirements recorded in the SRS document.

- 10. Coding • The input to the coding phase is the design document. • During the coding phase: – modules identified in the design document are coded according to the module specifications.

- 11. Coding • At the end of the design phase we have: – module structure (e.g. structure chart) of the system – module specifications: • data structures and algorithms for each module. • Objective of coding phase: – transform design into code – unit test the code.

- 12. Coding Standards • Good software development organizations require their programmers to: –adhere to some standard style of coding –called coding standards.

- 13. Coding Standards • Many software development organizations: – formulate their own coding standards that suit them best, – require their engineers to follow these standards rigorously.

- 14. Coding Standards • Advantages of adhering to a standard style of coding: – it gives a uniform appearance to the code written by different engineers, – it enhances code understanding, – it encourages good programming practices.

- 15. Coding Standards • A coding standard –sets out standard ways of doing several things: • the way variables are named, • code is laid out, • maximum number of source lines allowed per function, etc.

- 16. Coding guidelines • Provide general suggestions regarding coding style to be followed: –leave actual implementation of the guidelines: • to the discretion of the individual engineers.

- 17. Code inspection and code walk throughs • After a module has been coded, –code inspection and code walk through are carried out –ensures that coding standards are followed –helps detect as many errors as possible before testing.

- 18. Code inspection and code walk throughs • Detect as many errors as possible during inspection and walkthrough: –detected errors require less effort for correction • much higher effort needed if errors were to be detected during integration or system testing.

- 19. Representative Coding Standards • Rules for limiting the use of globals: – what types of data can be declared global and what can not. • Naming conventions for – global variables, – local variables, and – constant identifiers.

- 20. Representative Coding Standards • Header data: – Name of the module, – date on which the module was created, – author's name, – modification history, – synopsis of the module, – different functions supported, along with their input/output parameters, – global variables accessed/modified by the module.
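A sketch of how the header data listed above might look at the top of a C source file. The module, its function `clamp_int`, and the placeholder fields in angle brackets are hypothetical; each organization's standard fixes the exact layout.

```c
/*
 * Module:      clamp.c  (hypothetical example module)
 * Created:     <creation date>
 * Author:      <author name>
 * Modification history:
 *   <date>  <author>  <description of change>
 * Synopsis:    Utility for restricting an integer to a range.
 * Functions:
 *   int clamp_int(int value, int lo, int hi)
 *     in:  value to restrict, lower bound lo, upper bound hi (lo <= hi)
 *     out: value limited to the closed range [lo, hi]
 * Globals accessed/modified: none
 */
int clamp_int(int value, int lo, int hi)
{
    if (value < lo)
        return lo;
    if (value > hi)
        return hi;
    return value;
}
```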

- 21. Representative Coding Standards • Error return conventions and exception handling mechanisms: – the way error and exception conditions are handled should be standard within an organization. – For example, when different functions encounter error conditions, • they should all signal the error in the same way, e.g., by consistently returning 0, or by consistently returning 1.
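A minimal C sketch of one possible convention, assuming (hypothetically) that the organization has standardized on returning 0 for success and 1 for error from every function, with results passed back through pointer parameters. The function names are illustrative.

```c
#include <limits.h>
#include <stddef.h>

/* Hypothetical organization-wide convention: every function returns
   STATUS_OK (0) on success and STATUS_ERROR (1) on any error, and
   delivers its result through an output parameter. */
enum { STATUS_OK = 0, STATUS_ERROR = 1 };

/* Integer division; division by zero and overflow are errors. */
int safe_divide(int dividend, int divisor, int *quotient)
{
    if (quotient == NULL || divisor == 0)
        return STATUS_ERROR;
    if (dividend == INT_MIN && divisor == -1)   /* result would overflow */
        return STATUS_ERROR;
    *quotient = dividend / divisor;
    return STATUS_OK;
}

/* Converts one decimal digit character to its numeric value. */
int parse_digit(char c, int *value)
{
    if (value == NULL || c < '0' || c > '9')
        return STATUS_ERROR;
    *value = c - '0';
    return STATUS_OK;
}
```

Because both functions follow the same rule, a caller can check every call against `STATUS_OK` in the same way, regardless of which function it is calling.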

- 22. Representative Coding Guidelines • Do not use an overly clever, hard-to-understand coding style. – Code should be easy to understand. • Many inexperienced engineers actually take pride: – in writing cryptic and incomprehensible code.

- 23. Representative Coding Guidelines • Clever coding can obscure the meaning of the code: – it hampers understanding, – it makes later maintenance difficult. • Avoid obscure side effects.

- 24. Representative Coding Guidelines • The side effects of a function call include: – modification of parameters passed by reference, – modification of global variables, – I/O operations. • An obscure side effect: – one that is not obvious from a casual examination of the code.

- 25. Representative Coding Guidelines • Obscure side effects make it difficult to understand a piece of code. • For example, – if a global variable is changed obscurely in a called module, – it becomes difficult for anybody trying to understand the code.
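The following C sketch contrasts a function with an obscure side effect on a global variable against a clearer alternative. The counter `items_processed` and the functions are hypothetical examples, not code from the source.

```c
/* A global counter (hypothetical) that other parts of the program read. */
int items_processed = 0;

/* Obscure side effect: the name promises only a calculation, but the
   function also changes a global variable. Nothing at the call site
   reveals this. */
int square_obscure(int x)
{
    items_processed++;
    return x * x;
}

/* Clearer alternative: the calculation has no side effects, */
int square(int x)
{
    return x * x;
}

/* and the update is a separate, visible call on an explicitly
   passed counter. */
void record_item(int *counter)
{
    (*counter)++;
}
```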

- 26. Representative Coding Guidelines • Do not use an identifier (variable name) for multiple purposes. – Programmers often use the same identifier for multiple purposes. – For example, some programmers use a temporary loop variable • also for storing the final result.

- 27. Example use of a variable for multiple purposes • `for(i=1;i<100;i++) {…..} i=2*p*q; return(i);`
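The fragment above can be turned into compilable C as follows; the loop body is elided in the original, so it is left empty here. The second function shows the recommended alternative. Function and variable names other than `i`, `p`, and `q` are hypothetical.

```c
/* As in the original example: 'i' is first a loop counter and is then
   reused to hold the function's result. */
int compute_reused(int p, int q)
{
    int i;
    for (i = 1; i < 100; i++) {
        /* loop body elided in the original example */
    }
    i = 2 * p * q;      /* same identifier, unrelated meaning */
    return i;
}

/* Preferred: one purpose per variable, each with a descriptive name. */
int compute_clear(int p, int q)
{
    for (int counter = 1; counter < 100; counter++) {
        /* loop body elided in the original example */
    }
    int result = 2 * p * q;
    return result;
}
```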

- 28. Use of a variable for multiple purposes • The justification given by programmers for such use: – memory efficiency: – e.g. three variables use up three memory locations, – whereas the same variable used in three different ways uses just one memory location.

- 29. Use of a variable for multiple purposes • There are several things wrong with this approach: – hence should be avoided. • Each variable should be given a name indicating its purpose: – This is not possible if an identifier is used for multiple purposes.

- 30. Use of a variable for multiple purposes • Leads to confusion and annoyance –for anybody trying to understand the code. –Also makes future maintenance difficult.

- 31. Representative Coding Guidelines • Code should be well-documented. • Rules of thumb: – on the average there must be at least one comment line • for every three source lines. – The length of any function should not exceed 10 source lines.

- 32. Representative Coding Guidelines • Lengthy functions: –usually very difficult to understand –probably do too many different things.

- 33. Representative Coding Guidelines • Do not use goto statements. • Use of goto statements: – makes a program unstructured, – makes it very difficult to understand.

- 34. Code Walk Through • An informal code analysis technique. – undertaken after the coding of a module is complete. • A few members of the development team select some test cases: – simulate execution of the code by hand using these test cases.

- 35. Code Inspection • For instance, consider: – the classical error of writing a procedure that modifies a formal parameter – while the calling routine calls the procedure with a constant actual parameter. • Such an error is more likely to be discovered: – by looking for this kind of mistake in the code, – rather than by simply hand-simulating execution of the procedure.

- 36. Code Inspection • Good software development companies: – collect statistics of errors committed by their engineers – identify the types of errors most frequently committed. • A list of common errors: – can be used during code inspection to look out for possible errors.

- 37. Commonly made errors • Use of uninitialized variables. • Nonterminating loops. • Array indices out of bounds. • Incompatible assignments. • Improper storage allocation and deallocation. • Actual and formal parameter mismatch in procedure calls. • Jumps into loops.

- 38. Code Inspection • Use of incorrect logical operators – or incorrect precedence among operators. • Improper modification of loop variables. • Comparison of equality of floating point values, etc. • Also during code inspection, – adherence to coding standards is checked.
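To illustrate the last point, here is a C sketch of why exact floating-point equality is risky and one common alternative, a relative tolerance. The helper names and the tolerance parameter are assumptions for illustration; on typical IEEE 754 double-precision hardware, `0.1 + 0.2 == 0.3` evaluates to false.

```c
#include <stdbool.h>

/* Exact comparison: fragile, because most decimal fractions
   (such as 0.1) have no exact binary representation. */
bool equal_exact(double a, double b)
{
    return a == b;
}

/* Returns |x| without needing the math library. */
static double abs_double(double x)
{
    return x < 0.0 ? -x : x;
}

/* Relative-tolerance comparison: a and b count as equal when their
   difference is small compared with their magnitude. The tolerance is
   chosen by the caller; a suitable value depends on the computation. */
bool equal_approx(double a, double b, double rel_tol)
{
    double diff = abs_double(a - b);
    double scale = abs_double(a) > abs_double(b) ? abs_double(a) : abs_double(b);
    return diff <= rel_tol * scale;
}
```

A purely relative tolerance treats every nonzero value as different from 0.0, so comparisons near zero usually need an absolute tolerance as well.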

- 39. Testing Tactics

- 40. Psychology of Testing • Test cases are designed to detect errors, but they do not guarantee that all possible errors are detected. • There is no standard method for selecting test cases. • Selection of test cases is an art. • One reason organizations do not assign developers to test their own code lies in human psychology.

- 41. Project Testing Flow • Unit Testing • Integration Testing • System Testing • User Acceptance Testing

- 43. Testing Process • Testing continues until the late stages of software development. • Testing is also necessary even after the product is released. • Therefore, testing is considered the COSTLIEST activity in software development and should be done efficiently.

- 44. Testing Principles • All tests should be traceable to customer requirements. • Tests should be planned long before testing begins. • The Pareto principle applies to software testing: roughly 80 percent of the errors uncovered are likely to be traceable to 20 percent of the program components. • Testing should begin “in the small” and progress toward testing “in the large.” • Complete testing is not possible. • To be most effective, testing should be conducted by an independent third party.

- 45. Software Testability • Software testability is simply how easily a system, program, or product can be tested. • The tests themselves must exhibit a set of characteristics that achieve the goal of finding errors with a minimum of effort. Characteristics of software testability: • Operability - “The better it works, the more efficiently it can be tested.” – Relatively few bugs will block the execution of tests. – Testing can progress without fits and starts.

- 46. • Observability - “What you see is what you test.” – Distinct output is generated for each input. – System states and variables are visible or queryable during execution. – Incorrect output is easily identified. – Internal errors are automatically detected and reported. – Source code is accessible. • Controllability - “The better we can control the software, the more the testing can be automated and optimized.” – Software and hardware states and variables can be controlled directly by the test engineer. – Tests can be conveniently specified, automated, and reproduced. • Decomposability - “By controlling the scope of testing, we can more quickly isolate problems and perform smarter retesting.” – Independent modules can be tested independently.

- 47. • Simplicity - “The less there is to test, the more quickly we can test it.” – Functional simplicity (e.g., the feature set is the minimum necessary to meet requirements). – Structural simplicity (e.g., architecture is modularized to limit the propagation of faults). – Code simplicity (e.g., a coding standard is adopted for ease of inspection and maintenance). • Stability - “The fewer the changes, the fewer the disruptions to testing.” – Changes to the software are infrequent. – Changes to the software are controlled. – Changes to the software do not invalidate existing tests. • Understandability - “The more information we have, the smarter we will test.” – Dependencies between internal, external, and shared components are well understood. – Changes to the design are communicated to testers. – Technical documentation is instantly accessible, well organized, specific and detailed, and accurate.

- 48. Test Case Design Specifies • How is the testing process carried out? • Which units need to be tested? • Which tools can be used for testing?

- 49. Test Case Specification • Test Case ID • Test Case Name • Test Case Description • Test Steps • Status (pass/fail) • Test Priority • Defect Severity
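One way to represent such a specification in code is a record per test case plus a loop that executes the cases and fills in their status. This is a sketch under simplifying assumptions: the unit under test (`absolute_value`), the field types, and the priority values are hypothetical, and each test's steps are reduced to a single input.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical unit under test. */
int absolute_value(int x)
{
    return x < 0 ? -x : x;
}

enum test_priority { PRIORITY_HIGH, PRIORITY_MEDIUM, PRIORITY_LOW };

/* One record per test case, mirroring the fields listed above. In this
   sketch the test steps collapse to "call absolute_value(input)". */
struct test_case {
    const char        *id;
    const char        *name;
    const char        *description;
    int                input;
    int                expected;
    enum test_priority priority;
    bool               passed;    /* status: set by run_test_cases */
    const char        *severity;  /* defect severity, set when a failure is logged */
};

/* Executes each case, records pass/fail, and returns the failure count. */
size_t run_test_cases(struct test_case *cases, size_t count)
{
    size_t failures = 0;
    for (size_t i = 0; i < count; i++) {
        cases[i].passed = absolute_value(cases[i].input) == cases[i].expected;
        if (!cases[i].passed)
            failures++;
    }
    return failures;
}
```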

- 50. Taxonomy of Testing • There are two general approaches to testing: 1. Black Box Testing 2. White Box Testing

- 51. Black-box testing • A functional testing approach that focuses on application externals. • It can also be called requirements-based or specifications-based testing. • Characteristics: – Functionality: requirements, use, standards – Correctness: does the system meet business requirements?

- 53. Black-box testing • Type of Test during Black Box Approach: 1. System Testing 2. Acceptance Testing

- 54. Black box testing • Also called behavioral testing, it focuses on the functional requirements of the software. • It enables the software engineer to derive sets of input conditions that will fully exercise all functional requirements for a program. • Black-box testing is not an alternative to white-box techniques; rather, it is a complementary approach. • Black-box testing attempts to find errors in the following categories: – Incorrect or missing functions, – Interface errors, – Errors in data structures or external database access, – Behavior or performance errors, – Initialization and termination errors.

- 55. • Black-box testing purposely ignores control structure; attention is focused on the information domain. Tests are designed to answer the following questions: – How is functional validity tested? – How are system behavior and performance tested? – What classes of input will make good test cases? • By applying black-box techniques, we derive a set of test cases that satisfy the following criteria: – Test cases that reduce the number of additional test cases that must be designed to achieve reasonable testing (i.e., minimize effort and time) – Test cases that tell us something about the presence or absence of classes of errors • Black-box testing methods: – Graph-Based Testing Methods – Equivalence partitioning – Boundary value analysis (BVA) – Orthogonal Array Testing
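A minimal C sketch of two of the listed methods, equivalence partitioning and boundary value analysis, assuming a hypothetical requirement that an age field accepts integers from 18 to 60 inclusive. Only the specification is used to choose the inputs; the function body is never consulted.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical specification: an applicant's age is valid when it
   lies in the closed range 18..60. */
#define AGE_MIN 18
#define AGE_MAX 60

bool is_valid_age(int age)
{
    return age >= AGE_MIN && age <= AGE_MAX;
}

/* Equivalence partitioning: one representative value per class. */
void test_equivalence_classes(void)
{
    assert(!is_valid_age(10));   /* class 1: below the range (invalid) */
    assert(is_valid_age(35));    /* class 2: inside the range (valid) */
    assert(!is_valid_age(75));   /* class 3: above the range (invalid) */
}

/* Boundary value analysis: values at and just beside each boundary. */
void test_boundaries(void)
{
    assert(!is_valid_age(AGE_MIN - 1));
    assert(is_valid_age(AGE_MIN));
    assert(is_valid_age(AGE_MIN + 1));
    assert(is_valid_age(AGE_MAX - 1));
    assert(is_valid_age(AGE_MAX));
    assert(!is_valid_age(AGE_MAX + 1));
}
```

Equivalence partitioning picks one representative per class (below, inside, and above the range), while boundary value analysis concentrates on the edges, where off-by-one mistakes such as writing `<` instead of `<=` tend to hide.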

- 56. White Box Testing • A structural testing approach that focuses on application internals. It can also be called program-based testing. • Characteristics: 1. Implementation 2. Do modules meet functional and design specifications? 3. Do program structures meet functional and design specifications? 4. How does the program work?

- 57. White box testing • White-box testing of software is predicated on close examination of procedural detail. • Logical paths through the software are tested by providing test cases that exercise specific sets of conditions and/or loops. • The "status of the program" may be examined at various points. • White-box testing, sometimes called glass-box testing, is a test case design method that uses the control structure of the procedural design to derive test cases.

- 58. White box testing Using this method, the software engineer can derive test cases that: 1. Guarantee that all independent paths within a module have been exercised at least once, 2. Exercise all logical decisions on their true and false sides, 3. Execute all loops at their boundaries and within their operational bounds, 4. Exercise internal data structures to ensure their validity.
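A C sketch of choosing white-box test cases from the code's structure, using a hypothetical function `count_positive` that contains one loop and one decision. The cases exercise the decision on both its true and false sides and run the loop zero times, once, and several times.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical unit under test: counts strictly positive elements. */
size_t count_positive(const int *values, size_t n)
{
    size_t count = 0;
    for (size_t i = 0; i < n; i++) {   /* loop */
        if (values[i] > 0)             /* decision */
            count++;
    }
    return count;
}

/* Test cases chosen from the code's structure rather than its spec. */
void test_count_positive_structure(void)
{
    int mixed[] = {3, -1, 0, 7};

    /* Loop executed zero times (lower loop boundary). */
    assert(count_positive(mixed, 0) == 0);
    /* Loop executed once; the decision takes its true side. */
    assert(count_positive(mixed, 1) == 1);
    /* Several iterations; the decision takes both true and false sides. */
    assert(count_positive(mixed, 4) == 2);
}
```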

- 59. White Box Testing • Type of Test during White Box Approach: 1. Unit Testing 2. Integration Testing

- 61. Validation and Verification • V & V • Validation – Are we building the right product? • Verification – Are we building the product right? – Testing – Inspection – Static analysis

- 62. Verification and Validation • Testing is one element of a broader topic that is often referred to as verification and validation (V&V). • Verification refers to the set of activities that ensure that software correctly implements a specific function. • Validation refers to a different set of activities that ensure that the software that has been built is traceable to customer requirements. Stated another way: – Verification: “Are we building the product right?” – Validation: “Are we building the right product?” • The definition of V&V encompasses many of the activities that are similar to software quality assurance (SQA).

- 63. Test Cases • Key elements of a test plan • May include scripts, data, checklists • May map to a Requirements Coverage Matrix • A traceability tool

- 65. Software Testing Strategy for conventional software architecture

- 66. • Viewed from a procedural point of view, testing within the software process is a series of four steps that are implemented sequentially.

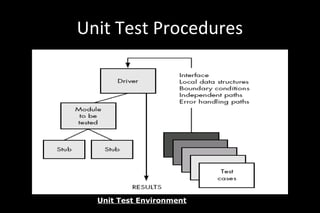

- 68. Unit Test Procedures Unit Test Environment

- 69. Regression Testing • Each time a new module is added as part of integration testing: – new data flow paths are established, – new I/O may occur, – new control logic is invoked. • These changes may cause problems with functions that previously worked flawlessly. • Regression testing is the re-execution of some subset of tests that have already been conducted to ensure that changes have not propagated unintended side effects. • Whenever software is corrected, some aspect of the software configuration (the program, its documentation, or the data that support it) is changed.
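A minimal C sketch of a regression suite: expected outputs recorded when the tests last passed are stored alongside the code and re-executed after every change. The fee function, its pricing rules, and the recorded values are hypothetical.

```c
#include <stddef.h>

/* Hypothetical unit, revised during integration: computes a shipping
   fee in cents from a parcel weight in grams. */
int shipping_fee_cents(int weight_grams)
{
    if (weight_grams <= 0)
        return 0;                      /* nothing to ship */
    if (weight_grams <= 1000)
        return 500;                    /* flat fee up to 1 kg */
    /* 200 cents for each started kilogram above the first. */
    return 500 + ((weight_grams - 1000 + 999) / 1000) * 200;
}

/* Expected outputs recorded when the tests last passed. */
struct regression_case { int input; int expected; };

static const struct regression_case regression_suite[] = {
    {0, 0}, {1, 500}, {1000, 500}, {1001, 700}, {2000, 700}, {2001, 900},
};

/* Re-executes the stored suite; returns the number of cases whose
   output no longer matches, i.e. possible unintended side effects. */
size_t run_regression_suite(void)
{
    size_t failures = 0;
    size_t n = sizeof regression_suite / sizeof regression_suite[0];
    for (size_t i = 0; i < n; i++)
        if (shipping_fee_cents(regression_suite[i].input) != regression_suite[i].expected)
            failures++;
    return failures;
}
```

A nonzero result from `run_regression_suite` signals that a change has altered behavior that previously worked; in practice such a suite is rerun after each integration step or correction.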

- 70. Smoke Testing • Smoke testing is an integration testing approach that is commonly used when “shrink-wrapped” software products are being developed. • It is designed as a pacing mechanism for time-critical projects, allowing the software team to assess its project on a frequent basis. Smoke testing approach activities: • Software components that have been translated into code are integrated into a “build.” – A build includes all data files, libraries, reusable modules, and engineered components that are required to implement one or more product functions. • A series of tests is designed to expose errors that will keep the build from properly performing its function. – The intent should be to uncover “show-stopper” errors that have the highest likelihood of throwing the software project behind schedule. • The build is integrated with other builds, and the entire product is smoke tested daily.

- 71. Smoke Testing benefits • Integration risk is minimized. – Because smoke tests are conducted daily, incompatibilities and other show-stopper errors are uncovered early. • The quality of the end product is improved. – Smoke testing is likely to uncover both functional errors and architectural and component-level design defects, so better product quality results. • Error diagnosis and correction are simplified. – Software that has just been added to the build(s) is a probable cause of a newly discovered error. • Progress is easier to assess. – Frequent tests give both managers and practitioners a realistic assessment of integration testing progress.

- 72. Validation Testing • Validation testing succeeds when software functions in a manner that can be reasonably expected by the customer. • Like all other testing steps, validation tries to uncover errors, but the focus is at the requirements level, on things that will be immediately apparent to the end user. • Reasonable expectations are defined in the Software Requirements Specification, a document that describes all user-visible attributes of the software. • Validation testing comprises: – Validation test criteria – Configuration review – Alpha and beta testing

- 73. Alpha and Beta Testing • When custom software is built for one customer, a series of acceptance tests are conducted to enable the customer to validate all requirements. • Conducted by the end-user rather than software engineers, an acceptance test can range from an informal "test drive" to a planned and systematically executed series of tests. • Most software product builders use a process called alpha and beta testing to uncover errors that only the end-user seems able to find.

- 74. Alpha testing • The alpha test is conducted at the developer's site by a customer. • The software is used in a natural setting with the developer "looking over the shoulder" of the user and recording errors and usage problems. • Alpha tests are conducted in a controlled environment.

- 75. Beta testing • The beta test is conducted at one or more customer sites by the end user of the software. • The beta test is a "live" application of the software in an environment that cannot be controlled by the developer. • The customer records all problems (real or imagined) that are encountered during beta testing and reports these to the developer at regular intervals. • As a result of problems reported during beta tests, software engineers make modifications and then prepare for release of the software product to the entire customer base.

- 76. System Testing • System testing is actually a series of different tests whose primary purpose is to fully exercise the computer-based system. • Although each test has a different purpose, all work to verify that system elements have been properly integrated and perform allocated functions. • Types of system tests are: – Recovery Testing – Security Testing – Stress Testing – Performance Testing

- 77. Recovery Testing • Recovery testing is a system test that forces the software to fail in a variety of ways and verifies that recovery is properly performed. • If recovery is automatic (performed by the system itself), reinitialization, checkpointing mechanisms, data recovery, and restart are evaluated for correctness. • If recovery requires human intervention, the mean time to repair (MTTR) is evaluated to determine whether it is within acceptable limits.

- 78. Security Testing • Security testing attempts to verify that protection mechanisms built into a system will, in fact, protect it from improper penetration. • During security testing, the tester plays the role(s) of the individual who desires to break into the system. • Given enough time and resources, good security testing will ultimately penetrate a system. • The role of the system designer is to make penetration cost more than the value of the information that will be obtained. • The tester may attempt to acquire passwords through external means; may attack the system with custom software designed to break down any defenses that have been constructed; may browse through insecure data; may purposely cause system errors.

- 79. Stress Testing • Stress testing executes a system in a manner that demands resources in abnormal quantity, frequency, or volume. For example, 1. special tests may be designed that generate ten interrupts per second 2. Input data rates may be increased by an order of magnitude to determine how input functions will respond 3. test cases that require maximum memory or other resources are executed 4. test cases that may cause excessive hunting for disk-resident data are created • A variation of stress testing is a technique called sensitivity testing

- 80. Performance Testing • Performance testing occurs throughout all steps in the testing process. • Even at the unit level, the performance of an individual module may be assessed as white-box tests are conducted. • Performance tests are often coupled with stress testing and usually require both hardware and software instrumentation. • It is often necessary to measure resource utilization (e.g., processor cycles).
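As a sketch of software instrumentation for measuring resource utilization, the C standard library's `clock()` reports the processor time consumed by the program. It measures CPU time rather than raw processor cycles, so treat it as a coarse proxy; the workload `sum_of_squares` is a hypothetical stand-in for the module under test.

```c
#include <stddef.h>
#include <time.h>

/* Hypothetical workload standing in for the module being measured. */
unsigned long sum_of_squares(unsigned long n)
{
    unsigned long total = 0;
    for (unsigned long i = 1; i <= n; i++)
        total += i * i;
    return total;
}

/* Returns the processor time, in seconds, consumed by one call, or
   -1.0 if clock() reports that the value is unavailable. clock()
   measures CPU time used by the program, not wall-clock time. */
double cpu_seconds_for(unsigned long n, unsigned long *result)
{
    clock_t start = clock();
    *result = sum_of_squares(n);
    clock_t end = clock();
    if (start == (clock_t)-1 || end == (clock_t)-1)
        return -1.0;
    return (double)(end - start) / CLOCKS_PER_SEC;
}
```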

- 81. THE ART OF DEBUGGING • Debugging is the process that results in the removal of the error. • Although debugging can and should be an orderly process, it is still very much an art. • Debugging is not testing but always occurs as a consequence of testing.
