Spidal Java: High Performance Data Analytics with Java on Large Multicore HPC Clusters

- 1. SPIDAL Java: High Performance Data Analytics with Java on Large Multicore HPC Clusters. Saliya Ekanayake | Supun Kamburugamuve | Geoffrey Fox. https://github.com/DSC-SPIDAL | http://saliya.org. 24th High Performance Computing Symposium (HPC 2016), April 3-6, 2016, Pasadena, CA, USA, as part of the SCS Spring Simulation Multi-Conference (SpringSim'16).

- 2. High Performance? (4/4/2016, HPC 2016)
  - [Figure: speedup on an Intel Haswell HPC cluster with 40Gbps Infiniband; x-axis from 48 nodes to 128 nodes]
  - SPIDAL Java: 40x speedup (what we'll discuss today)
  - Typical Java with all MPI
  - Typical Java with threads and MPI
  - Ideal: 64x (if life was so fair!)

- 3. Introduction
  - Big Data and HPC
    - Big data + cloud is the norm, but not always
    - Some applications demand significant computation and communication
    - HPC clusters are ideal
    - However, it's not easy
  - Java
    - Unprecedented big data ecosystem
    - Apache has over 300 big data systems, mostly written in Java
    - Performance and APIs have improved greatly with Java 1.7.x
    - Not much used in HPC, but can give comparable performance (e.g. SPIDAL Java)
    - Comparable performance to C (more on this later)
    - Google query https://www.google.com/webhp?sourceid=chrome-instant&ion=1&espv=2&ie=UTF-8#q=java%20faster%20than%20c will point to interesting discussions on why.
    - Interoperable and productive

- 4. SPIDAL Java
  - Scalable Parallel Interoperable Data Analytics Library (SPIDAL)
    - Available at https://github.com/DSC-SPIDAL
  - Includes Multidimensional Scaling (MDS) and Clustering Applications
    - DA-MDS: Y. Ruan and G. Fox, "A Robust and Scalable Solution for Interpolative Multidimensional Scaling with Weighting," eScience (eScience), 2013 IEEE 9th International Conference on, Beijing, 2013, pp. 61-69. doi: 10.1109/eScience.2013.30
    - DA-PWC: Fox, G. C. Deterministic annealing and robust scalable data mining for the data deluge. In Proceedings of the 2nd International Workshop on Petascale Data Analytics: Challenges and Opportunities, PDAC '11, ACM (New York, NY, USA, 2011), 39-40.
    - DA-VS: Fox, G., Mani, D., and Pyne, S. Parallel deterministic annealing clustering and its application to LC-MS data analysis. In Big Data, 2013 IEEE International Conference on (Oct 2013), 665-673.
    - MDSasChisq: General MDS implementation using the Levenberg-Marquardt algorithm. Levenberg, K. A method for the solution of certain non-linear problems in least squares. Quarterly Journal of Applied Mathematics II, 2 (1944), 164-168.

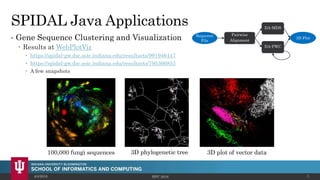

- 5. SPIDAL Java Applications
  - Gene Sequence Clustering and Visualization
    - Results at WebPlotViz: https://spidal-gw.dsc.soic.indiana.edu/resultsets/991946447 and https://spidal-gw.dsc.soic.indiana.edu/resultsets/795366853
    - [Figure: pipeline stages Sequence File, Pairwise Alignment, DA-MDS, DA-PWC, 3D Plot]
    - A few snapshots: 100,000 fungi sequences; 3D phylogenetic tree; 3D plot of vector data

- 6. SPIDAL Java Applications
  - Stocks Data Analysis
    - Time series view of stocks
    - E.g. with 1 year moving window: https://spidal-gw.dsc.soic.indiana.edu/public/timeseriesview/825496517

- 7. Performance Challenges
  - Intra-node Communication
  - Exploiting Fat Nodes
  - Overhead of Garbage Collection
  - Cost of Heap Allocated Objects
  - Cache and Memory Access

- 8. Performance Challenges
  - Intra-node Communication [1/3]
    - Large core counts per node: 24 to 36
    - Data analytics use global collective communication: Allreduce, Allgather, Broadcast, etc.
    - HPC simulations, in contrast, typically use local communications for tasks like halo exchanges.
    - [Figure: 3 million double values distributed uniformly over 48 nodes]
    - Identical message size per node, yet 24 MPI ranks per node is ~10 times slower than 1 MPI rank per node
    - Suggests #ranks per node should be 1 for the best performance
    - But how to exploit all available cores?

- 9. Performance Challenges
  - Intra-node Communication [2/3]
    - Solution: Shared memory
    - Use threads? Didn't work well (explained shortly)
    - Processes with shared memory communication
    - Custom implementation in SPIDAL Java outside of MPI framework
    - Only 1 rank per node participates in the MPI collective call
    - Others exchange data using shared memory maps
    - [Figures: 100K DA-MDS Run Communication; 200K DA-MDS Run Communication]

- 10. Performance Challenges
  - Intra-node Communication [3/3]
    - Heterogeneity support
      - Nodes with 24 and 36 cores
      - Automatically detects configuration and allocates memory maps
    - Implementation
      - Custom shared memory implementation using OpenHFT's Bytes API
      - Supports collective calls necessary within SPIDAL Java
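The shared memory map idea from the three slides above can be sketched as follows. This is a minimal illustration under stated assumptions, not the SPIDAL Java implementation: it uses the JDK's `java.nio` memory-mapped files instead of OpenHFT's Bytes API, the class and method names (`SharedMapReduceSketch`, `mapSlot`, `nodeLocalSum`) are hypothetical, and the "ranks" are simulated inside one JVM. In a real run each rank would be a separate process mapping the same file, and a barrier would be needed before the leader reads.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class SharedMapReduceSketch {
    /** Maps the region of the shared file that belongs to one rank (hypothetical layout). */
    static MappedByteBuffer mapSlot(Path file, int rank, int valuesPerRank) throws IOException {
        long slotBytes = (long) valuesPerRank * Double.BYTES;
        try (FileChannel ch = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            // The mapping remains valid after the channel is closed.
            return ch.map(FileChannel.MapMode.READ_WRITE, rank * slotBytes, slotBytes);
        }
    }

    /** Each rank writes its partial values; the leader then sums all slots element-wise. */
    static double[] nodeLocalSum(Path file, double[][] partials) throws IOException {
        int ranks = partials.length;
        int n = partials[0].length;
        // Simulated ranks: each one writes into its own slot of the shared map.
        for (int r = 0; r < ranks; r++) {
            MappedByteBuffer slot = mapSlot(file, r, n);
            for (int i = 0; i < n; i++) {
                slot.putDouble(i * Double.BYTES, partials[r][i]);
            }
        }
        // Leader: reads every slot and reduces. Only this result would be
        // handed to the inter-node MPI collective (one rank per node).
        double[] sum = new double[n];
        for (int r = 0; r < ranks; r++) {
            MappedByteBuffer slot = mapSlot(file, r, n);
            for (int i = 0; i < n; i++) {
                sum[i] += slot.getDouble(i * Double.BYTES);
            }
        }
        return sum;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("node-shared", ".map");
        file.toFile().deleteOnExit();
        double[] sum = nodeLocalSum(file, new double[][] {{1, 2}, {10, 20}, {100, 200}});
        System.out.println(sum[0] + " " + sum[1]);
    }
}
```

The design point is that the 24 (or 36) ranks on a node never call MPI against each other; they only touch the mapped region, and a single leader per node takes part in the collective.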

- 11. Performance Challenges
  - Exploiting Fat Nodes [1/2]
    - Large #Cores per Node
    - E.g. 1 node in Juliet HPC cluster
      - 2 sockets
      - 12 cores each
      - 2 hardware threads per core
      - L1 and L2 per core
      - L3 shared per socket
    - Two approaches
      - All processes: 1 proc per core
      - 1 process, multiple threads
    - Which is better?
    - [Figure: node layout with Socket 0 and Socket 1; 1 core has 2 HTs]

- 12. Performance Challenges
  - Exploiting Fat Nodes [2/2]
    - Suggested thread model in literature: fork-join regions within a process
    - [Figure: fork-join regions within a process across iterations; labels: Iterations, process, Prev. Optimization]
    1. Thread creation and scheduling overhead accumulates over iterations and is significant (~5%)
       - True for Java threads as well as OpenMP in C/C++ (see https://github.com/esaliya/JavaThreads and https://github.com/esaliya/CppStack/tree/master/omp2/letomppar)
    2. Long running threads do better than this model, but still have non-negligible overhead
    3. Solution is to use processes with shared memory communications as in SPIDAL Java
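The two thread models compared above can be sketched as follows. This is a hedged illustration only: the class `ThreadModelsSketch` and its methods are hypothetical, the "work" is a single counter increment, and the timings printed by `main` depend on the machine and are not meant to reproduce the ~5% figure from the slide.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicLong;

final class ThreadModelsSketch {
    /** Fork-join style: a fresh set of threads is created and joined in every iteration. */
    static long forkJoinPerIteration(int threads, int iterations) throws InterruptedException {
        AtomicLong work = new AtomicLong();
        for (int it = 0; it < iterations; it++) {
            Thread[] pool = new Thread[threads];
            for (int t = 0; t < threads; t++) {
                pool[t] = new Thread(work::incrementAndGet);
                pool[t].start();
            }
            for (Thread th : pool) {
                th.join(); // end of the parallel region
            }
        }
        return work.get();
    }

    /** Long-running style: threads are created once and meet at a barrier each iteration. */
    static long longRunning(int threads, int iterations) throws InterruptedException {
        AtomicLong work = new AtomicLong();
        CyclicBarrier barrier = new CyclicBarrier(threads);
        Thread[] pool = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            pool[t] = new Thread(() -> {
                try {
                    for (int it = 0; it < iterations; it++) {
                        work.incrementAndGet();
                        barrier.await(); // replaces the per-iteration join
                    }
                } catch (InterruptedException | BrokenBarrierException e) {
                    throw new IllegalStateException(e);
                }
            });
            pool[t].start();
        }
        for (Thread th : pool) {
            th.join();
        }
        return work.get();
    }

    public static void main(String[] args) throws InterruptedException {
        long t0 = System.nanoTime();
        forkJoinPerIteration(4, 1000);
        long t1 = System.nanoTime();
        longRunning(4, 1000);
        long t2 = System.nanoTime();
        System.out.printf("fork-join: %d us, long-running: %d us%n",
                (t1 - t0) / 1000, (t2 - t1) / 1000);
    }
}
```

Both variants do the same amount of work; they differ only in where the scheduling cost is paid, which is the overhead the slide argues is better avoided by using processes with shared memory.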

- 13. Performance Challenges
  - Garbage Collection
    - "Stop the world" events are expensive
      - Especially for parallel processes with collective communications
    - Typical OOP: allocate, use, forget
      - Original SPIDAL code produced frequent garbage of small arrays
    - Solution: Zero-GC using
      - Static allocation and reuse
      - Off-heap static buffers (more on next slide)
    - Advantage
      - No GC (obvious)
      - Scale to larger problem sizes
      - E.g. original SPIDAL code required 5GB heap per process (x 24 = 120GB per node) to handle 200K MDS. Optimized code uses < 1GB heap to finish within the same timing.
      - Note: physical memory is 128GB, so optimized SPIDAL can now do 1 million point MDS within hardware limits.
    - [Figure, before optimizing: heap size per process reaches -Xmx (2.5GB) early in the computation; frequent GC]
    - [Figure, after optimizing: heap size per process (~1.1GB) is well below -Xmx (2.5GB); virtually no GC activity]
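A minimal sketch of the "static allocation and reuse" plus "off-heap static buffers" pattern described above. Everything here is an assumption made for illustration: the class `ZeroGcSketch`, the constants `DIM` and `MAX_PAIRS`, and the distance computation are hypothetical stand-ins, not code from SPIDAL Java. The static scratch array is shared, so the sketch assumes one compute thread per process.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.DoubleBuffer;

final class ZeroGcSketch {
    static final int DIM = 3;              // hypothetical point dimension
    static final int MAX_PAIRS = 1 << 16;  // hypothetical capacity, fixed up front

    // On-heap scratch array, allocated once and reused by every call.
    private static final double[] DIFF = new double[DIM];

    // Off-heap result buffer: its contents live outside the Java heap.
    private static final DoubleBuffer DISTANCES = ByteBuffer
            .allocateDirect(MAX_PAIRS * Double.BYTES)
            .order(ByteOrder.nativeOrder())
            .asDoubleBuffer();

    /** Euclidean distance that reuses the static scratch array instead of a new temporary. */
    static double distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int d = 0; d < DIM; d++) {
            DIFF[d] = a[d] - b[d];
            sum += DIFF[d] * DIFF[d];
        }
        return Math.sqrt(sum);
    }

    /** Fills the off-heap buffer with all pairwise distances without allocating per call. */
    static DoubleBuffer pairwise(double[][] points) {
        DISTANCES.clear();
        for (int i = 0; i < points.length; i++) {
            for (int j = i + 1; j < points.length; j++) {
                DISTANCES.put(distance(points[i], points[j]));
            }
        }
        DISTANCES.flip(); // make the written values readable from position 0
        return DISTANCES;
    }

    public static void main(String[] args) {
        DoubleBuffer d = pairwise(new double[][] {{0, 0, 0}, {3, 4, 0}, {0, 0, 0}});
        System.out.println(d.remaining() + " distances, first = " + d.get(0));
    }
}
```

Calling `pairwise` repeatedly inside an iteration loop creates no new arrays, which is the property the slide credits for the drop in heap use and GC activity.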

- 14. Performance Challenges
  - I/O with Heap Allocated Objects
    - Java-to-native I/O creates copies of objects in heap
      - Otherwise can't guarantee object's memory location due to GC
      - Too expensive
    - Solution: Off-heap buffers (memory maps)
      - Initial data loading: significantly faster than Java stream API calls
      - Intra-node messaging: gives the best performance
      - MPI inter-node communications
  - Cache and Memory Access
    - Nested data structures are neat, but expensive
    - Solution: Contiguous memory with 1D arrays over 2D structures
      - Indirect memory references are costly
    - Also adopted from HPC
      - Blocked loops and loop ordering
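The cache-related points above (1D arrays over 2D structures, blocked loops, loop ordering) can be illustrated with a small matrix multiplication. This is a generic sketch rather than code from SPIDAL Java; the class `FlatBlockedSketch`, the method `multiplyBlocked`, and the block size are hypothetical choices.

```java
import java.util.Arrays;

final class FlatBlockedSketch {
    /**
     * Computes C = A * B for n x n matrices stored row-major in 1D arrays,
     * visiting the data in block x block tiles.
     */
    static double[] multiplyBlocked(double[] a, double[] b, int n, int block) {
        double[] c = new double[n * n];
        for (int ii = 0; ii < n; ii += block) {
            int iMax = Math.min(ii + block, n);
            for (int kk = 0; kk < n; kk += block) {
                int kMax = Math.min(kk + block, n);
                for (int jj = 0; jj < n; jj += block) {
                    int jMax = Math.min(jj + block, n);
                    // i-k-j ordering: the innermost loop walks rows of B and C contiguously.
                    for (int i = ii; i < iMax; i++) {
                        for (int k = kk; k < kMax; k++) {
                            double aik = a[i * n + k]; // a[i][k] in a nested layout
                            for (int j = jj; j < jMax; j++) {
                                c[i * n + j] += aik * b[k * n + j];
                            }
                        }
                    }
                }
            }
        }
        return c;
    }

    public static void main(String[] args) {
        double[] a = {1, 2, 3, 4}; // [[1, 2], [3, 4]]
        double[] b = {5, 6, 7, 8}; // [[5, 6], [7, 8]]
        System.out.println(Arrays.toString(multiplyBlocked(a, b, 2, 1)));
    }
}
```

With a `double[][]` layout each row is a separate heap object reached through a reference; the flat layout replaces that indirection with the index computation `i * n + j`.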

- 15. Evaluation
  - HPC Cluster
    - 128 Intel Haswell nodes with 2.3GHz nominal frequency
      - 96 nodes with 24 cores on 2 sockets (12 cores each)
      - 32 nodes with 36 cores on 2 sockets (18 cores each)
    - 128GB memory per node
    - 40Gbps Infiniband
  - Software
    - Java 1.8
    - OpenHFT JavaLang 6.7.2
    - Habanero Java 0.1.4
    - OpenMPI 1.10.1
  - Application: DA-MDS
    - Computations grow as $O(N^2)$
    - Communication is global and is $O(N)$

- 16. [Figure: three panels, 100K DA-MDS, 200K DA-MDS, 400K DA-MDS]
  - 1152 total parallelism across 48 nodes
  - All combinations of 24-way parallelism per node
  - LHS is all processes
  - RHS is all internal threads and MPI across nodes
  1. With SM communications in SPIDAL, processes outperform threads (blue line)
  2. Other optimizations further improve performance (green line)

- 17. [Figures: speedup for varying data sizes; speedup on 36 core nodes]
  - Speedup for varying data sizes
    - All processes
    - LHS is 1 proc per node across 48 nodes
    - RHS is 24 procs per node across 128 nodes (ideal 64x speedup)
    - Larger data sizes show better speedup (400K: 45x, 200K: 40x, 100K: 38x)
  - Speedup on 36 core nodes
    - All processes
    - LHS is 1 proc per node across 32 nodes
    - RHS is 36 procs per node across 32 nodes (ideal 36x speedup)
    - Speedup plateaus around 23x after 24-way parallelism per node
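The ideal figures quoted above follow from the ratio of total process counts at the two ends of each plot. As a worked check, with parallel efficiency $E$ defined here (an added convenience, not a quantity reported on the slides) as measured speedup over ideal speedup:

$$
S_{\text{ideal}} = \frac{128 \times 24}{48 \times 1} = \frac{3072}{48} = 64
$$

$$
E = \frac{S}{S_{\text{ideal}}}: \qquad
E_{400K} = \frac{45}{64} \approx 0.70, \quad
E_{200K} = \frac{40}{64} \approx 0.63, \quad
E_{100K} = \frac{38}{64} \approx 0.59
$$

For the 36 core nodes the same calculation gives $S_{\text{ideal}} = (32 \times 36)/(32 \times 1) = 36$, and the plateau at about 23x corresponds to $E \approx 23/36 \approx 0.64$.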

- 18. [Figure: the effect of different optimizations on speedup]
  - Java, is it worth it? YES!
  - Also, with JIT some cases in MDS are better than C

![Slide 8: Performance Challenges, Intra-node Communication (1/3)](https://image.slidesharecdn.com/spidaljava-160405134848/85/Spidal-Java-High-Performance-Data-Analytics-with-Java-on-Large-Multicore-HPC-Clusters-8-320.jpg)

![Slide 9: Performance Challenges, Intra-node Communication (2/3)](https://image.slidesharecdn.com/spidaljava-160405134848/85/Spidal-Java-High-Performance-Data-Analytics-with-Java-on-Large-Multicore-HPC-Clusters-9-320.jpg)

![Slide 10: Performance Challenges, Intra-node Communication (3/3)](https://image.slidesharecdn.com/spidaljava-160405134848/85/Spidal-Java-High-Performance-Data-Analytics-with-Java-on-Large-Multicore-HPC-Clusters-10-320.jpg)

![Slide 11: Performance Challenges, Exploiting Fat Nodes (1/2)](https://image.slidesharecdn.com/spidaljava-160405134848/85/Spidal-Java-High-Performance-Data-Analytics-with-Java-on-Large-Multicore-HPC-Clusters-11-320.jpg)

![Slide 12: Performance Challenges, Exploiting Fat Nodes (2/2)](https://image.slidesharecdn.com/spidaljava-160405134848/85/Spidal-Java-High-Performance-Data-Analytics-with-Java-on-Large-Multicore-HPC-Clusters-12-320.jpg)