Using Apache Spark to Solve Sessionization Problem in Batch and Streaming

0 likes1,767 views

This document discusses sessionization techniques using Apache Spark batch and streaming processing. It describes using Spark to join previous session data with new log data to generate user sessions in batch mode. For streaming, it covers using watermarks and stateful processing to continuously generate sessions from streaming data. Key aspects covered include checkpointing to provide fault tolerance, configuring the state store, and techniques for reprocessing data in batch and streaming contexts.

1 of 32

Downloaded 21 times

![The code

val writeQuery = query.writeStream.outputMode(OutputMode.Update())

.option("checkpointLocation", s"s3://my-checkpoint-bucket")

.foreachBatch((dataset: Dataset[SessionIntermediaryState], batchId: Long) => {

BatchWriter.writeDataset(dataset, s"${outputDir}/${batchId}")

})

val dataFrame = sparkSession.readStream

.format("kafka")

.option("kafka.bootstrap.servers", kafkaConfiguration.broker).option(...) .load()

val query = dataFrame.selectExpr("CAST(value AS STRING)")

.select(functions.from_json($"value", Visit.Schema).as("data"))

.select($"data.*").withWatermark("event_time", "3 minutes")

.groupByKey(row => row.getAs[Long]("user_id"))

.mapGroupsWithState(GroupStateTimeout.EventTimeTimeout())

(mapStreamingLogsToSessions(sessionTimeout))

watermark - late events & state

expiration

stateful processing - sessions

generation

checkpoint - fault-tolerance

14](https://image.slidesharecdn.com/bartoszkonieczny-191030005055/85/Using-Apache-Spark-to-Solve-Sessionization-Problem-in-Batch-and-Streaming-14-320.jpg)

![Checkpoint - fault-tolerance

load state

for t0

query

load offsets

to process &

write them

for t1

query

process data

write

processed

offsets

write state

checkpoint location

state store offset log commit log

val writeQuery = query.writeStream.outputMode(OutputMode.Update())

.option("checkpointLocation", s"s3://sessionization-demo/checkpoint")

.foreachBatch((dataset: Dataset[SessionIntermediaryState], batchId: Long) => {

BatchWriter.writeDataset(dataset, s"${outputDir}/${batchId}")

})

.start()

15](https://image.slidesharecdn.com/bartoszkonieczny-191030005055/85/Using-Apache-Spark-to-Solve-Sessionization-Problem-in-Batch-and-Streaming-15-320.jpg)

![Stateful processing

update

remove

get

getput,remove

write update

finalize file

make snapshot

recover state

def mapStreamingLogsToSessions(timeoutDurationMs: Long)(key: Long, logs: Iterator[Row],

currentState: GroupState[SessionIntermediaryState]): SessionIntermediaryState = {

if (currentState.hasTimedOut) {

val expiredState = currentState.get.expire

currentState.remove()

expiredState

} else {

val newState = currentState.getOption.map(state => state.updateWithNewLogs(logs, timeoutDurationMs))

.getOrElse(SessionIntermediaryState.createNew(logs, timeoutDurationMs))

currentState.update(newState)

currentState.setTimeoutTimestamp(currentState.getCurrentWatermarkMs() + timeoutDurationMs)

currentState.get

}

}

17](https://image.slidesharecdn.com/bartoszkonieczny-191030005055/85/Using-Apache-Spark-to-Solve-Sessionization-Problem-in-Batch-and-Streaming-17-320.jpg)

![Stateful processing

update

remove

get

getput,remove

- write update

- finalize file

- make snapshot

recover state

18

.mapGroupsWithState(...)

state store

TreeMap[Long,

ConcurrentHashMap[UnsafeRow,

UnsafeRow]

]

in-memory storage for the most

recent versions

1.delta

2.delta

3.snapshot

checkpoint

location](https://image.slidesharecdn.com/bartoszkonieczny-191030005055/85/Using-Apache-Spark-to-Solve-Sessionization-Problem-in-Batch-and-Streaming-18-320.jpg)

)

.mapGroupsWithState(GroupStateTimeout.EventTimeTimeout())

(Mapping.mapStreamingLogsToSessions(sessionTimeout))

19](https://image.slidesharecdn.com/bartoszkonieczny-191030005055/85/Using-Apache-Spark-to-Solve-Sessionization-Problem-in-Batch-and-Streaming-19-320.jpg)

![Watermark - expired state

State representation [simplified]

{value, TTL configuration}

Algorithm:

1. Update all states with new data → eventually extend TTL

2. Retrieve TTL configuration for the query → here: watermark

3. Retrieve all states that expired → no new data in this query & TTL expired

4. Call mapGroupsWithState on it with hasTimedOut param = true & no new data

(Iterator.empty)

// full implementation: org.apache.spark.sql.execution.streaming.FlatMapGroupsWithStateExec.InputProcessor

21](https://image.slidesharecdn.com/bartoszkonieczny-191030005055/85/Using-Apache-Spark-to-Solve-Sessionization-Problem-in-Batch-and-Streaming-21-320.jpg)

Ad

Recommended

Enabling Vectorized Engine in Apache Spark

Enabling Vectorized Engine in Apache SparkKazuaki Ishizaki The document discusses enabling a vectorized engine in Apache Spark to improve performance through vectorization and SIMD (Single Instruction, Multiple Data) techniques. It covers limitations of the current Spark architecture regarding SIMD compatibility and introduces the Vector API as a solution for ensuring efficient SIMD code generation. The document also outlines various applications and comparisons of SIMD implementations, highlighting performance benchmarks between different approaches.

KSQL Performance Tuning for Fun and Profit ( Nick Dearden, Confluent) Kafka S...

KSQL Performance Tuning for Fun and Profit ( Nick Dearden, Confluent) Kafka S...confluent The document outlines performance tuning for ksql, discussing query anatomy, topologies, tasks, and the factors that influence performance such as latency, throughput, and message formats. It presents testing highlights and measurements, emphasizing the efficiency of projection queries while noting that joins and aggregates generally incur more overhead. Key takeaways include recommendations for optimizing query performance and resource allocation in a ksql environment.

Multi cluster, multitenant and hierarchical kafka messaging service slideshare

Multi cluster, multitenant and hierarchical kafka messaging service slideshareAllen (Xiaozhong) Wang This document discusses Netflix's approach to scaling Apache Kafka through a hierarchical, multi-cluster architecture. It addresses the challenges of scaling a single Kafka cluster, such as increased latency and vulnerability to failures. Netflix uses multiple "fronting" Kafka clusters for data collection and buffering optimized for producers. Consumer clusters scale by adding brokers or moving partitions to new clusters. Producers and consumers can operate across multiple clusters simultaneously using routing services. This multi-cluster approach allows independent scaling of producers and consumers while balancing data distribution.

Enhance your multi-cloud application performance using Redis Enterprise P2

Enhance your multi-cloud application performance using Redis Enterprise P2Ashnikbiz This document provides an overview of Redis Enterprise. It discusses how Redis Enterprise is an in-memory multi-model database built on open source Redis that supports high-performance operational, analytics, and hybrid use cases. It offers deployment options including cloud, on-premises, and Kubernetes and supports a wide range of modern use cases including caching, transactions, streaming, messaging, and analytics workloads. Redis Enterprise provides features like high availability, security, and support for Redis modules to extend its capabilities.

Geospatial Indexing at Scale: The 15 Million QPS Redis Architecture Powering ...

Geospatial Indexing at Scale: The 15 Million QPS Redis Architecture Powering ...Daniel Hochman The document discusses the evolution of Lyft's geospatial indexing architecture, focusing on the transition to Redis for enhanced scalability and performance. It covers design challenges faced in their initial monolithic setup, the use of geohashing for location data, and the implementation of Nutcracker for request handling. Additionally, it highlights Lyft's future roadmap involving Envoy for load balancing and improved service communication.

Exploring KSQL Patterns

Exploring KSQL Patternsconfluent Tim Berglund, a senior director at Confluent, discusses ksql, the streaming SQL engine for Apache Kafka, focusing on its features like stream and table creation, usage patterns for streaming ETL, anomaly detection, and real-time monitoring. The session covers practical examples, including how to create and inspect streams and tables, as well as deployment patterns for ksql. Resources for further exploration are provided, including links to GitHub and the Confluent community.

Webinar: 99 Ways to Enrich Streaming Data with Apache Flink - Konstantin Knauf

Webinar: 99 Ways to Enrich Streaming Data with Apache Flink - Konstantin KnaufVerverica The document outlines various methods for enriching streaming data with Apache Flink, focusing on different approaches for handling reference data, such as synchronous and asynchronous lookups, cache usage, and pre-loading techniques. It details the trade-offs involved in terms of database load, latency, and data staleness, ultimately highlighting the advantages of streaming architectures that utilize local stream joins and temporal table joins for high throughput and low latency. The summary emphasizes the importance of selecting the appropriate enrichment method based on specific use cases and reference data characteristics.

NetApp XCP データ移行ツールインストールと設定

NetApp XCP データ移行ツールインストールと設定Kan Itani NASからデータを移行するためのツールの紹介とインストール方法を記載しています。移行元のNASのストレージの種類は選ばず、NFS to NFS, CIFS to CIFSで移行できます。オンプレからクラウド上のNASサービスに移行する際にも利用できます。

One sink to rule them all: Introducing the new Async Sink

One sink to rule them all: Introducing the new Async SinkFlink Forward The document introduces the new async sink for Apache Flink, detailing its internal architecture and functionality including buffering, batching, retry mechanisms, and managing throughput. It outlines configuration options and current limitations like ordering guarantees and thread pool management. Future enhancements mentioned include rate limiting features and integration with Amazon DynamoDB.

NVMe over Fabric

NVMe over Fabricsingh.gurjeet NVMe over Fabrics (NVMe-oF) allows NVMe-based storage to be shared across multiple servers over a network. It provides better utilization of resources and scalability compared to directly attached storage. NVMe-oF maintains NVMe performance by transferring commands and data end-to-end over the fabric using technologies like RDMA that bypass legacy storage stacks. It enables applications like composable infrastructure with remote direct memory access (RDMA) providing near-local performance. While NVMe-oF can use different transports, RDMA has been most common due to low latency it provides.

Ceph Performance and Sizing Guide

Ceph Performance and Sizing GuideJose De La Rosa The Red Hat Ceph Performance & Sizing Guide provides an overview of Ceph, its architecture, and data storage methods while detailing test methodologies and results. Key findings emphasize the performance outcomes of various configurations, including the advantages of replication and erasure coding for different workloads. Recommendations for sizing and configuration are included to optimize performance and cost efficiency in storage solutions.

KafkaConsumer - Decoupling Consumption and Processing for Better Resource Uti...

KafkaConsumer - Decoupling Consumption and Processing for Better Resource Uti...confluent The document discusses a multi-threaded implementation for processing Kafka records, highlighting the challenges and considerations such as offset committing and group rebalancing. It presents two threading models: a 'fork join' model and a 'fully decoupled' model, emphasizing the need for synchronization and managing partition processing order. The conclusion underscores the complexity of multi-threading in Kafka while providing links to demo application source code for further exploration.

Building a SIMD Supported Vectorized Native Engine for Spark SQL

Building a SIMD Supported Vectorized Native Engine for Spark SQLDatabricks The document discusses the development of a vectorized native SQL engine for Spark, highlighting issues with the current row-based Spark SQL engine, such as challenges with SIMD optimizations and high GC overhead. It proposes a columnar-based design utilizing Arrow format, optimizing performance through native code execution and LLVM code generation. Key features include native memory management, efficient columnar data processing, and integration with various data sources, emphasizing ongoing development and open-source availability.

A Deep Dive into Structured Streaming: Apache Spark Meetup at Bloomberg 2016

A Deep Dive into Structured Streaming: Apache Spark Meetup at Bloomberg 2016 Databricks The document discusses advancements in structured streaming in Apache Spark, highlighting its features such as fault-tolerant state management and unified APIs for batch and streaming data. It outlines the importance of real-time analytics, and various use cases, particularly in IoT device monitoring. The presentation emphasizes the advantages of structured streaming over traditional DStreams, focusing on data processing efficiency and integrating batch, interactive, and streaming workloads seamlessly.

Flink powered stream processing platform at Pinterest

Flink powered stream processing platform at PinterestFlink Forward Pinterest's Xenon platform is a stateful stream processing solution that enables real-time data handling for various applications, including ads, user signals, and safety measures, while supporting around 100 engineers in managing over 100 applications. The focus is on stability, developer velocity, and cloud efficiency, aiming to enhance real-time processing capabilities and operational resilience. Ongoing initiatives include automated scaling, resource optimization, and improving the developer experience with better support and tools.

Dynamically Scaling Data Streams across Multiple Kafka Clusters with Zero Fli...

Dynamically Scaling Data Streams across Multiple Kafka Clusters with Zero Fli...Flink Forward The document outlines the design and manual migration steps for multi-cluster Kafka source in Apache Flink, detailing the necessary tasks for swapping and upgrading clusters while minimizing downtime. It highlights the challenges of scaling multiple Kafka clusters across hybrid cloud environments, emphasizing complex operability and failover issues. The document also discusses benefits such as automated migrations and future work opportunities regarding watermark alignment and optimizations.

A Deep Dive into Stateful Stream Processing in Structured Streaming with Tath...

A Deep Dive into Stateful Stream Processing in Structured Streaming with Tath...Databricks The document details stateful stream processing in Apache Spark's Structured Streaming, highlighting its fast, scalable, and fault-tolerant capabilities with high-level APIs. It covers the anatomy of streaming queries, including sources, transformations, and sinks, while emphasizing the importance of state management, watermarking, and fault recovery mechanisms. Additionally, it discusses various operations such as streaming aggregation, deduplication, and user-defined stateful processing using APIs like mapGroupsWithState.

A Deep Dive Into Understanding Apache Cassandra

A Deep Dive Into Understanding Apache CassandraDataStax Academy The document provides an overview of Cassandra's architecture and its internals, emphasizing the differences between RDBMS and Cassandra's distributed hashing model. It discusses the functioning of consistent hashing, write and read mechanisms, data replication, and the role of memtables and SSTables in data storage. Additionally, it covers performance optimizations such as skip lists, bloom filters, and different compaction strategies used by Cassandra.

Optimizing Performance in Rust for Low-Latency Database Drivers

Optimizing Performance in Rust for Low-Latency Database DriversScyllaDB The document discusses the optimization techniques for low-latency database drivers, specifically focusing on the ScyllaDB Rust driver, which supports advanced features such as shard awareness and asynchronous interfaces. Developed over three years, it utilizes the Tokio framework and is designed for high performance, with significant improvements in serialization, connection management, and load balancing. It also emphasizes the importance of profiling tools and community contributions for continuous enhancement of the driver.

The Parquet Format and Performance Optimization Opportunities

The Parquet Format and Performance Optimization OpportunitiesDatabricks The document discusses optimization opportunities for data storage and processing using the Parquet format, emphasizing its hybrid storage model and efficient data organization strategies. Key points include the significance of handling row-wise and columnar data, minimizing I/O operations through techniques like predicate pushdown and partitioning, and the advantages of using Delta Lake for managing data at scale. It serves as a guide for software engineers in understanding performance improvements in data analytics workflows.

Introduction to Apache Spark

Introduction to Apache Sparkdatamantra Apache Spark is a fast, general engine for large-scale data processing. It provides unified analytics engine for batch, interactive, and stream processing using an in-memory abstraction called resilient distributed datasets (RDDs). Spark's speed comes from its ability to run computations directly on data stored in cluster memory and optimize performance through caching. It also integrates well with other big data technologies like HDFS, Hive, and HBase. Many large companies are using Spark for its speed, ease of use, and support for multiple workloads and languages.

Kafka to the Maxka - (Kafka Performance Tuning)

Kafka to the Maxka - (Kafka Performance Tuning)DataWorks Summit The document provides a comprehensive guide on performance tuning for Kafka, highlighting the importance of load testing and empirical data to inform configuration changes. Key aspects include JVM settings, file system choices, batch management, and monitoring tools, alongside caution about modifying default settings without thorough consideration. It emphasizes that there is no one-size-fits-all solution, urging experimentation and careful testing to optimize Kafka performance.

Tech Talk: RocksDB Slides by Dhruba Borthakur & Haobo Xu of Facebook

Tech Talk: RocksDB Slides by Dhruba Borthakur & Haobo Xu of FacebookThe Hive The document discusses RocksDB, an embedded key-value store optimized for fast storage, emphasizing its architecture, performance improvements over previous databases like LevelDB, and features such as pluggable components and efficient compaction strategies. It highlights RocksDB's ability to substantially reduce write amplification, improve read efficiency with bloom filters, and support various storage backends. Additionally, it outlines practical use cases and optimizations that cater to specific application requirements, underscoring its suitability for server workloads.

OFI Overview 2019 Webinar

OFI Overview 2019 Webinarseanhefty The document provides an overview and agenda for the libfabric software interface. It discusses the design guidelines, user requirements, software development strategies, architecture, providers, and getting started with libfabric. The key points are that libfabric provides portable low-level networking interfaces, has an object-oriented design, supports multiple fabric hardware through providers, and gives applications high-level and low-level interfaces to optimize performance.

Introduction àJava

Introduction àJavaChristophe Vaudry Ce document présente les bases de la programmation en Java, incluant l'historique et l'évolution du langage depuis sa création, ainsi que ses caractéristiques majeures. Il souligne l'importance de Java dans l'éducation et la recherche, tout en mettant en avant ses avantages tels que la portabilité et un vaste écosystème de bibliothèque. Enfin, le document aborde les défis rencontrés avec les anciennes versions et les améliorations apportées au fil du temps.

An Introduction to Apache Kafka

An Introduction to Apache KafkaAmir Sedighi The document discusses Apache Kafka, highlighting its architecture as a fast, scalable, and durable publish-subscribe messaging system designed for handling large data streams. Key components include topics, producers, consumers, and brokers, which enable efficient data management and processing within a distributed environment. Kafka serves various use cases, including messaging, log aggregation, stream processing, and event sourcing, while offering strong durability and fault-tolerance features.

Deep dive into stateful stream processing in structured streaming by Tathaga...

Deep dive into stateful stream processing in structured streaming by Tathaga...Databricks The document provides an in-depth exploration of stateful stream processing within Spark's Structured Streaming framework, presented at the Spark Summit Europe 2017. It covers the architecture, including sources, sinks, transformations, and checkpointing, while explaining concepts such as watermarking for managing late data, streaming joins, and custom stateful operations using functions like mapGroupsWithState. The talk also discusses performance monitoring and debugging strategies for stateful queries in real-time processing applications.

Flink 0.10 @ Bay Area Meetup (October 2015)

Flink 0.10 @ Bay Area Meetup (October 2015)Stephan Ewen Flink 0.10 focuses on operational readiness with improvements to high availability, monitoring, and integration with other systems. It provides first-class support for event time processing and refines the DataStream API to be both easy to use and powerful for stream processing tasks.

[JEEConf-2017] RxJava as a key component in mature Big Data product

[JEEConf-2017] RxJava as a key component in mature Big Data productIgor Lozynskyi The document discusses the integration of RxJava in the architecture of Zoomdata, a big data visualization software, highlighting both positive experiences and challenges encountered. Key benefits include efficient stream processing, query cancellation, and the ability to manage complex workflows; however, issues such as the need for unit tests, handling backpressure, and maintaining stream lifetimes are also emphasized. The speaker predicts a shift towards reactive programming in future software developments, particularly with the advent of Java 9 and Spring 5.

More Related Content

What's hot (20)

One sink to rule them all: Introducing the new Async Sink

One sink to rule them all: Introducing the new Async SinkFlink Forward The document introduces the new async sink for Apache Flink, detailing its internal architecture and functionality including buffering, batching, retry mechanisms, and managing throughput. It outlines configuration options and current limitations like ordering guarantees and thread pool management. Future enhancements mentioned include rate limiting features and integration with Amazon DynamoDB.

NVMe over Fabric

NVMe over Fabricsingh.gurjeet NVMe over Fabrics (NVMe-oF) allows NVMe-based storage to be shared across multiple servers over a network. It provides better utilization of resources and scalability compared to directly attached storage. NVMe-oF maintains NVMe performance by transferring commands and data end-to-end over the fabric using technologies like RDMA that bypass legacy storage stacks. It enables applications like composable infrastructure with remote direct memory access (RDMA) providing near-local performance. While NVMe-oF can use different transports, RDMA has been most common due to low latency it provides.

Ceph Performance and Sizing Guide

Ceph Performance and Sizing GuideJose De La Rosa The Red Hat Ceph Performance & Sizing Guide provides an overview of Ceph, its architecture, and data storage methods while detailing test methodologies and results. Key findings emphasize the performance outcomes of various configurations, including the advantages of replication and erasure coding for different workloads. Recommendations for sizing and configuration are included to optimize performance and cost efficiency in storage solutions.

KafkaConsumer - Decoupling Consumption and Processing for Better Resource Uti...

KafkaConsumer - Decoupling Consumption and Processing for Better Resource Uti...confluent The document discusses a multi-threaded implementation for processing Kafka records, highlighting the challenges and considerations such as offset committing and group rebalancing. It presents two threading models: a 'fork join' model and a 'fully decoupled' model, emphasizing the need for synchronization and managing partition processing order. The conclusion underscores the complexity of multi-threading in Kafka while providing links to demo application source code for further exploration.

Building a SIMD Supported Vectorized Native Engine for Spark SQL

Building a SIMD Supported Vectorized Native Engine for Spark SQLDatabricks The document discusses the development of a vectorized native SQL engine for Spark, highlighting issues with the current row-based Spark SQL engine, such as challenges with SIMD optimizations and high GC overhead. It proposes a columnar-based design utilizing Arrow format, optimizing performance through native code execution and LLVM code generation. Key features include native memory management, efficient columnar data processing, and integration with various data sources, emphasizing ongoing development and open-source availability.

A Deep Dive into Structured Streaming: Apache Spark Meetup at Bloomberg 2016

A Deep Dive into Structured Streaming: Apache Spark Meetup at Bloomberg 2016 Databricks The document discusses advancements in structured streaming in Apache Spark, highlighting its features such as fault-tolerant state management and unified APIs for batch and streaming data. It outlines the importance of real-time analytics, and various use cases, particularly in IoT device monitoring. The presentation emphasizes the advantages of structured streaming over traditional DStreams, focusing on data processing efficiency and integrating batch, interactive, and streaming workloads seamlessly.

Flink powered stream processing platform at Pinterest

Flink powered stream processing platform at PinterestFlink Forward Pinterest's Xenon platform is a stateful stream processing solution that enables real-time data handling for various applications, including ads, user signals, and safety measures, while supporting around 100 engineers in managing over 100 applications. The focus is on stability, developer velocity, and cloud efficiency, aiming to enhance real-time processing capabilities and operational resilience. Ongoing initiatives include automated scaling, resource optimization, and improving the developer experience with better support and tools.

Dynamically Scaling Data Streams across Multiple Kafka Clusters with Zero Fli...

Dynamically Scaling Data Streams across Multiple Kafka Clusters with Zero Fli...Flink Forward The document outlines the design and manual migration steps for multi-cluster Kafka source in Apache Flink, detailing the necessary tasks for swapping and upgrading clusters while minimizing downtime. It highlights the challenges of scaling multiple Kafka clusters across hybrid cloud environments, emphasizing complex operability and failover issues. The document also discusses benefits such as automated migrations and future work opportunities regarding watermark alignment and optimizations.

A Deep Dive into Stateful Stream Processing in Structured Streaming with Tath...

A Deep Dive into Stateful Stream Processing in Structured Streaming with Tath...Databricks The document details stateful stream processing in Apache Spark's Structured Streaming, highlighting its fast, scalable, and fault-tolerant capabilities with high-level APIs. It covers the anatomy of streaming queries, including sources, transformations, and sinks, while emphasizing the importance of state management, watermarking, and fault recovery mechanisms. Additionally, it discusses various operations such as streaming aggregation, deduplication, and user-defined stateful processing using APIs like mapGroupsWithState.

A Deep Dive Into Understanding Apache Cassandra

A Deep Dive Into Understanding Apache CassandraDataStax Academy The document provides an overview of Cassandra's architecture and its internals, emphasizing the differences between RDBMS and Cassandra's distributed hashing model. It discusses the functioning of consistent hashing, write and read mechanisms, data replication, and the role of memtables and SSTables in data storage. Additionally, it covers performance optimizations such as skip lists, bloom filters, and different compaction strategies used by Cassandra.

Optimizing Performance in Rust for Low-Latency Database Drivers

Optimizing Performance in Rust for Low-Latency Database DriversScyllaDB The document discusses the optimization techniques for low-latency database drivers, specifically focusing on the ScyllaDB Rust driver, which supports advanced features such as shard awareness and asynchronous interfaces. Developed over three years, it utilizes the Tokio framework and is designed for high performance, with significant improvements in serialization, connection management, and load balancing. It also emphasizes the importance of profiling tools and community contributions for continuous enhancement of the driver.

The Parquet Format and Performance Optimization Opportunities

The Parquet Format and Performance Optimization OpportunitiesDatabricks The document discusses optimization opportunities for data storage and processing using the Parquet format, emphasizing its hybrid storage model and efficient data organization strategies. Key points include the significance of handling row-wise and columnar data, minimizing I/O operations through techniques like predicate pushdown and partitioning, and the advantages of using Delta Lake for managing data at scale. It serves as a guide for software engineers in understanding performance improvements in data analytics workflows.

Introduction to Apache Spark

Introduction to Apache Sparkdatamantra Apache Spark is a fast, general engine for large-scale data processing. It provides unified analytics engine for batch, interactive, and stream processing using an in-memory abstraction called resilient distributed datasets (RDDs). Spark's speed comes from its ability to run computations directly on data stored in cluster memory and optimize performance through caching. It also integrates well with other big data technologies like HDFS, Hive, and HBase. Many large companies are using Spark for its speed, ease of use, and support for multiple workloads and languages.

Kafka to the Maxka - (Kafka Performance Tuning)

Kafka to the Maxka - (Kafka Performance Tuning)DataWorks Summit The document provides a comprehensive guide on performance tuning for Kafka, highlighting the importance of load testing and empirical data to inform configuration changes. Key aspects include JVM settings, file system choices, batch management, and monitoring tools, alongside caution about modifying default settings without thorough consideration. It emphasizes that there is no one-size-fits-all solution, urging experimentation and careful testing to optimize Kafka performance.

Tech Talk: RocksDB Slides by Dhruba Borthakur & Haobo Xu of Facebook

Tech Talk: RocksDB Slides by Dhruba Borthakur & Haobo Xu of FacebookThe Hive The document discusses RocksDB, an embedded key-value store optimized for fast storage, emphasizing its architecture, performance improvements over previous databases like LevelDB, and features such as pluggable components and efficient compaction strategies. It highlights RocksDB's ability to substantially reduce write amplification, improve read efficiency with bloom filters, and support various storage backends. Additionally, it outlines practical use cases and optimizations that cater to specific application requirements, underscoring its suitability for server workloads.

OFI Overview 2019 Webinar

OFI Overview 2019 Webinarseanhefty The document provides an overview and agenda for the libfabric software interface. It discusses the design guidelines, user requirements, software development strategies, architecture, providers, and getting started with libfabric. The key points are that libfabric provides portable low-level networking interfaces, has an object-oriented design, supports multiple fabric hardware through providers, and gives applications high-level and low-level interfaces to optimize performance.

Introduction àJava

Introduction àJavaChristophe Vaudry Ce document présente les bases de la programmation en Java, incluant l'historique et l'évolution du langage depuis sa création, ainsi que ses caractéristiques majeures. Il souligne l'importance de Java dans l'éducation et la recherche, tout en mettant en avant ses avantages tels que la portabilité et un vaste écosystème de bibliothèque. Enfin, le document aborde les défis rencontrés avec les anciennes versions et les améliorations apportées au fil du temps.

An Introduction to Apache Kafka

An Introduction to Apache KafkaAmir Sedighi The document discusses Apache Kafka, highlighting its architecture as a fast, scalable, and durable publish-subscribe messaging system designed for handling large data streams. Key components include topics, producers, consumers, and brokers, which enable efficient data management and processing within a distributed environment. Kafka serves various use cases, including messaging, log aggregation, stream processing, and event sourcing, while offering strong durability and fault-tolerance features.

Deep dive into stateful stream processing in structured streaming by Tathaga...

Deep dive into stateful stream processing in structured streaming by Tathaga...Databricks The document provides an in-depth exploration of stateful stream processing within Spark's Structured Streaming framework, presented at the Spark Summit Europe 2017. It covers the architecture, including sources, sinks, transformations, and checkpointing, while explaining concepts such as watermarking for managing late data, streaming joins, and custom stateful operations using functions like mapGroupsWithState. The talk also discusses performance monitoring and debugging strategies for stateful queries in real-time processing applications.

Similar to Using Apache Spark to Solve Sessionization Problem in Batch and Streaming (20)

Flink 0.10 @ Bay Area Meetup (October 2015)

Flink 0.10 @ Bay Area Meetup (October 2015)Stephan Ewen Flink 0.10 focuses on operational readiness with improvements to high availability, monitoring, and integration with other systems. It provides first-class support for event time processing and refines the DataStream API to be both easy to use and powerful for stream processing tasks.

[JEEConf-2017] RxJava as a key component in mature Big Data product

[JEEConf-2017] RxJava as a key component in mature Big Data productIgor Lozynskyi The document discusses the integration of RxJava in the architecture of Zoomdata, a big data visualization software, highlighting both positive experiences and challenges encountered. Key benefits include efficient stream processing, query cancellation, and the ability to manage complex workflows; however, issues such as the need for unit tests, handling backpressure, and maintaining stream lifetimes are also emphasized. The speaker predicts a shift towards reactive programming in future software developments, particularly with the advent of Java 9 and Spring 5.

Kick your database_to_the_curb_reston_08_27_19

Kick your database_to_the_curb_reston_08_27_19confluent This document discusses using Kafka Streams interactive queries to enable powerful microservices by making stream processing results queryable in real-time. It provides an overview of Kafka Streams, describes how to embed an interactive query server to expose stateful stream processing results via HTTP endpoints, and demonstrates how to securely query processing state from client applications.

Online Meetup: Why should container system / platform builders care about con...

Online Meetup: Why should container system / platform builders care about con...Docker, Inc. The document outlines a presentation on containerd, a container runtime technology, covering its features, architecture, and how to get started with it. Key points include a clean gRPC-based API, runtime agility, and a focus on stability and performance. The document also highlights practical usage examples, metrics support, and potential contribution avenues for developers.

Meetup spark structured streaming

Meetup spark structured streamingJosé Carlos García Serrano Our product uses third generation Big Data technologies and Spark Structured Streaming to enable comprehensive Digital Transformation. It provides a unified streaming API that allows for continuous processing, interactive queries, joins with static data, continuous aggregations, stateful operations, and low latency. The presentation introduces Spark Structured Streaming's basic concepts including loading from stream sources like Kafka, writing to sinks, triggers, SQL integration, and mixing streaming with batch processing. It also covers continuous aggregations with windows, stateful operations with checkpointing, reading from and writing to Kafka, and benchmarks compared to other streaming frameworks.

GDG Jakarta Meetup - Streaming Analytics With Apache Beam

GDG Jakarta Meetup - Streaming Analytics With Apache BeamImre Nagi The document presents an overview of Google Cloud Dataflow, detailing its unified model for stream and batch processing using Apache Beam SDKs. It discusses various components like ETL, data input/output transformations, and windowing concepts essential for stream analytics. Additionally, it covers advanced topics like trigger mechanisms, accumulation modes, and the importance of batch processing for accurate analytics results.

Spark streaming with kafka

Spark streaming with kafkaDori Waldman Spark Streaming with Kafka allows processing streaming data from Kafka in real-time. There are two main approaches - receiver-based and direct. The receiver-based approach uses Spark receivers to read data from Kafka and write to write-ahead logs for fault tolerance. The direct approach reads Kafka offsets directly without a receiver for better performance but less fault tolerance. The document discusses using Spark Streaming to aggregate streaming data from Kafka in real-time, persisting aggregates to Cassandra and raw data to S3 for analysis. It also covers using stateful transformations to update Cassandra in real-time.

Spark stream - Kafka

Spark stream - Kafka Dori Waldman Spark Streaming can be used to process streaming data from Kafka in real-time. There are two main approaches - the receiver-based approach where Spark receives data from Kafka receivers, and the direct approach where Spark directly reads data from Kafka. The document discusses using Spark Streaming to process tens of millions of transactions per minute from Kafka for an ad exchange system. It describes architectures where Spark Streaming is used to perform real-time aggregations and update databases, as well as save raw data to object storage for analytics and recovery. Stateful processing with mapWithState transformations is also demonstrated to update Cassandra in real-time.

A new kind of BPM with Activiti

A new kind of BPM with ActivitiAlfresco Software The document discusses the capabilities and features of the Activiti BPM platform, highlighting its process execution model, activity types, and integration with Java. It covers deployment processes, versioning of process definitions, and testing of business processes, as well as additional features like timers, script tasks, and easy integration with Spring. The emphasis is on providing developers with tools and examples to effectively implement and manage workflows using Activiti.

Reactive programming every day

Reactive programming every dayVadym Khondar Vadym Khondar is a senior software engineer with 8 years of experience, including 2.5 years at EPAM. He leads a development team that works on web and JavaScript projects. The document discusses reactive programming, including its benefits of responsiveness, resilience, and other qualities. Examples demonstrate using streams, behaviors, and other reactive concepts to write more declarative and asynchronous code.

Continuous Application with Structured Streaming 2.0

Continuous Application with Structured Streaming 2.0Anyscale The document discusses the advancements in Apache Spark 2.0, particularly focusing on structured streaming which unifies batch, interactive, and streaming queries. It highlights the importance of handling complex streaming requirements such as event-time processing, state management, and real-time analytics through a high-level API. Additionally, it presents use cases, advantages, and practical examples, emphasizing the continuous and incremental execution capabilities of Spark structured streaming.

JavaZone 2014 - goto java;

JavaZone 2014 - goto java;Martin (高馬丁) Skarsaune This document discusses the implementation of the 'goto' statement in Java within the OpenJDK compiler. It covers syntax, compiler structure, and how the 'goto' statement interacts with existing language features and constructs. Additionally, it addresses potential issues such as circular gotos and the handling of semantic checks during compilation.

こわくないよ❤️ Playframeworkソースコードリーディング入門

こわくないよ❤️ Playframeworkソースコードリーディング入門tanacasino This document discusses the Play framework and how it starts a Play application from the command line using the ProdServerStart object.

1. The ProdServerStart object's main method creates a RealServerProcess and calls the start method, passing the process.

2. The start method reads server configuration settings, creates a PID file, starts the Play application, and starts the HTTP server provider.

3. It loads the Play application using an ApplicationLoader, which can use Guice for dependency injection. The loaded application is then started by Play.

Introducing the WSO2 Complex Event Processor

Introducing the WSO2 Complex Event ProcessorWSO2 This document provides an overview of complex event processing (CEP) and the WSO2 CEP server. It discusses CEP concepts like event streams, queries, and patterns. It also describes the Siddhi query language and runtime that is used by WSO2 CEP to process events. Finally, it demonstrates how WSO2 CEP can be used to monitor stock exchange and social media data to detect market moving events.

Scalable Angular 2 Application Architecture

Scalable Angular 2 Application ArchitectureFDConf This document discusses scalable application architecture. It covers topics like dynamic requirements, using a scalable communication layer with various package formats, handling multiple state mutation sources, building scalable teams, and lazy loading. It provides examples of component architecture using Angular, services, state management with ngrx/redux, immutability with ImmutableJS, and asynchronous logic with RxJS. The goal is to build modular, extensible applications that can handle complex requirements through separation of concerns and well-designed architecture.

VPN Access Runbook

VPN Access RunbookTaha Shakeel 1. The runbook grants a user VPN access by making changes to their Active Directory profile after their request is approved.

2. It runs .NET scripts to extract the user's SAM account name and grant VPN access by setting the msnpallowdialin property to true.

3. It then gets information on the user and their manager from Active Directory to notify them by email that VPN access was granted.

spring aop.pptx aspt oreinted programmin

spring aop.pptx aspt oreinted programminzmulani8 sadsadsadsadsaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

Event Sourcing - what could go wrong - Devoxx BE

Event Sourcing - what could go wrong - Devoxx BEAndrzej Ludwikowski The document discusses event sourcing and command query responsibility segregation (CQRS) patterns at different levels of implementation. It covers the benefits of event sourcing including having a complete log of state changes, improved debugging, performance, scalability, and microservices integration. It also discusses challenges with increasing complexity, eventual consistency, and different event store and serialization options.

Data in Motion: Streaming Static Data Efficiently

Data in Motion: Streaming Static Data EfficientlyMartin Zapletal The document discusses efficiently streaming static data in Akka Persistence, focusing on concepts like change data capture and event querying. It outlines the architecture for real-time asynchronous streams, leveraging databases for batch processing and providing mechanisms for querying data by persistence ID and tags. Key components include actors for managing stream states, event extraction, and methods for pulling data from databases.

The Stream Processor as a Database Apache Flink

The Stream Processor as a Database Apache FlinkDataWorks Summit/Hadoop Summit Apache Flink enables stream processing on continuously produced data through its DataStream and DataSet APIs. It allows for streaming and batch processing as first class citizens. Flink programs are composed of sources that ingest data, transformations on those data streams, and sinks that output the results. Queryable state in Flink allows for querying the system state without writing to an external database, improving performance over traditional architectures that rely on writing intermediate results to external key-value stores. Flink's use of lightweight snapshots for fault tolerance and its log-based approach to persistence allows queryable state to have high throughput and low latency.

Ad

More from Databricks (20)

DW Migration Webinar-March 2022.pptx

DW Migration Webinar-March 2022.pptxDatabricks The document discusses migrating a data warehouse to the Databricks Lakehouse Platform. It outlines why legacy data warehouses are struggling, how the Databricks Platform addresses these issues, and key considerations for modern analytics and data warehousing. The document then provides an overview of the migration methodology, approach, strategies, and key takeaways for moving to a lakehouse on Databricks.

Data Lakehouse Symposium | Day 1 | Part 1

Data Lakehouse Symposium | Day 1 | Part 1Databricks The document discusses the concept of a data lakehouse, highlighting the integration of structured, textual, and analog/IOT data. It emphasizes the importance of common identifiers and universal connectors for meaningful analytics across different data types, ultimately aiming to improve healthcare and manufacturing outcomes through effective data analysis. The presentation outlines the challenges of managing diverse data formats and the potential for data-driven insights to enhance quality of life.

Data Lakehouse Symposium | Day 1 | Part 2

Data Lakehouse Symposium | Day 1 | Part 2Databricks The document compares data lakehouses and data warehouses, outlining their similarities and differences. Both serve analytical processing and contain vetted, historical data, but the data lakehouse handles a much larger volume of machine-generated data and features fundamentally different structures from transaction-based data warehouses. Ultimately, they are presented as related yet distinct entities in the realm of data management.

Data Lakehouse Symposium | Day 2

Data Lakehouse Symposium | Day 2Databricks The Data Lakehouse Symposium held in February 2022 discussed the evolution of data management from data warehouses to lakehouses, emphasizing the integration of governance and metadata. It highlighted the challenges companies face in utilizing various types of data, particularly unstructured textual data, and the importance of adding context for effective analysis. The presentation also examined strategies for transforming unstructured data into structured formats to enable better decision-making and analytical processes.

Data Lakehouse Symposium | Day 4

Data Lakehouse Symposium | Day 4Databricks The document discusses the challenges of modern data, analytics, and AI workloads. Most enterprises struggle with siloed data systems that make integration and productivity difficult. The future of data lies with a data lakehouse platform that can unify data engineering, analytics, data warehousing, and machine learning workloads on a single open platform. The Databricks Lakehouse platform aims to address these challenges with its open data lake approach and capabilities for data engineering, SQL analytics, governance, and machine learning.

5 Critical Steps to Clean Your Data Swamp When Migrating Off of Hadoop

5 Critical Steps to Clean Your Data Swamp When Migrating Off of HadoopDatabricks The document outlines the challenges and considerations for migrating from Hadoop to Databricks, emphasizing the complexities of the Hadoop ecosystem and the advantages of a modern cloud-based data architecture. It provides a comprehensive migration plan that includes internal assessments, technical planning, and execution while addressing key topics such as data migration, security, and SQL integration. Specific tools and methodologies for effective transition and enhanced performance in data analytics are also discussed.

Democratizing Data Quality Through a Centralized Platform

Democratizing Data Quality Through a Centralized PlatformDatabricks Zillow's Data Governance Platform team addresses data quality challenges by creating a centralized platform that enhances visibility and standardizes data quality rules. The platform includes self-service capabilities and integrates with data lineage, allowing for built-in alerting and scalable onboarding. Key takeaways emphasize the importance of early alerting, collaboration, and the shared responsibility for maintaining high-quality data to improve decision-making.

Learn to Use Databricks for Data Science

Learn to Use Databricks for Data ScienceDatabricks The document outlines the challenges and workflows involved in data science, emphasizing the need for proper setup and resource management. It highlights the importance of sharing results with stakeholders and describes how Databricks' lakehouse platform simplifies these processes by integrating data sources and providing essential tools for data analysis. Overall, the goal is to help data scientists focus on their core analytical work rather than dealing with setup complexities.

Why APM Is Not the Same As ML Monitoring

Why APM Is Not the Same As ML MonitoringDatabricks The document discusses the distinctions between application performance monitoring (APM) and machine learning (ML) monitoring, emphasizing the unique challenges of ML monitoring, such as the need for intelligent detection and alerting. It outlines the essential components of ML monitoring, including statistical summarization, distribution comparison, and actionable alerts based on model performance. Additionally, it introduces Verta's end-to-end MLOps platform designed to meet the specialized needs of ML monitoring throughout the entire model lifecycle.

The Function, the Context, and the Data—Enabling ML Ops at Stitch Fix

The Function, the Context, and the Data—Enabling ML Ops at Stitch FixDatabricks Elijah Ben Izzy, a Data Platform Engineer at Stitch Fix, discusses building abstractions for machine learning operations to optimize workflows and enhance the separation of concerns between data science and platform engineering. The presentation highlights the importance of a custom-built model envelope for seamless integration and management of ML models, as well as advancements in deployment and inference processes. Future directions include enhanced production monitoring and sophisticated feature integration to further streamline data science workflows.

Stage Level Scheduling Improving Big Data and AI Integration

Stage Level Scheduling Improving Big Data and AI IntegrationDatabricks The document discusses stage-level scheduling and resource allocation in Apache Spark to enhance big data and AI integration. It outlines various resource requirements such as executors, memory, and accelerators, while presenting benefits like improved hardware utilization and simplified application pipelines. Additionally, it introduces the RAPIDS Accelerator for Spark and distributed deep learning with Horovod, emphasizing performance optimizations and future enhancements.

Simplify Data Conversion from Spark to TensorFlow and PyTorch

Simplify Data Conversion from Spark to TensorFlow and PyTorchDatabricks The document discusses the importance of data conversion between Spark and deep learning frameworks like TensorFlow and PyTorch. It highlights key pain points, such as challenges in migrating from single-node to distributed training and the complexity of saving and loading data. Additionally, it introduces the Spark Dataset Converter, which simplifies data handling while training deep learning models and offers best practices for efficient usage.

Scaling your Data Pipelines with Apache Spark on Kubernetes

Scaling your Data Pipelines with Apache Spark on KubernetesDatabricks This document discusses the integration of Apache Spark with Kubernetes on Google Cloud, highlighting its advantages for running data engineering and machine learning workloads within existing infrastructure. It outlines benefits such as improved cost optimization, faster scaling, and enhanced resource management through Google Kubernetes Engine (GKE) and Dataproc, while detailing implementation steps and monitoring options. Additionally, it covers the compatibility with big data ecosystem tools, job execution, and enterprise security features.

Scaling and Unifying SciKit Learn and Apache Spark Pipelines

Scaling and Unifying SciKit Learn and Apache Spark PipelinesDatabricks The document discusses the integration and scaling of AI/ML pipelines using Ray, aiming to unify Scikit-learn and Spark pipelines. Key features include Python functions as computation units, data exchange capabilities, and support for advanced execution strategies. It concludes with contact information for collaboration and emphasizes the importance of feedback from the community.

Sawtooth Windows for Feature Aggregations

Sawtooth Windows for Feature AggregationsDatabricks The document discusses the Sawtooth Windows Zipline, a feature engineering framework focusing on machine learning with structured data. It emphasizes the importance of real-time, stable, and consistent features for model training and serving, while highlighting the challenges of data sources and the intricacies of aggregations. Key topics include model complexity, data quality, and various types of windowed aggregations for efficient data processing.

Redis + Apache Spark = Swiss Army Knife Meets Kitchen Sink

Redis + Apache Spark = Swiss Army Knife Meets Kitchen SinkDatabricks The document discusses the integration of Redis with Apache Spark for managing long-running batch jobs and distributed counters. It outlines the challenges faced in submitting queries and the inefficiencies of existing solutions, proposing a system that utilizes Redis for queuing and job status communication. Key workflows and code views are provided to demonstrate the proposed solutions for efficient query handling and data processing.

Re-imagine Data Monitoring with whylogs and Spark

Re-imagine Data Monitoring with whylogs and SparkDatabricks The document discusses the challenges of monitoring machine learning data, emphasizing how traditional data analysis techniques fall short in addressing issues in ML data pipelines. It introduces the open-source library Whylogs for data logging, highlighting its lightweight profiling methods suitable for large datasets and integration with Apache Spark. Key topics include data quality problems, the need for scalable monitoring, and approaches for logging and analyzing ML data effectively.

Raven: End-to-end Optimization of ML Prediction Queries

Raven: End-to-end Optimization of ML Prediction QueriesDatabricks The document discusses Raven, an optimizer for machine learning prediction queries at Microsoft, focusing on its ability to improve the performance of SQL-based ML operations. It details how Raven integrates with Azure data engines, utilizing techniques like model projection pushdown and model-to-SQL translation to enhance query efficiency. Performance evaluations indicate that Raven significantly outperforms existing ML runtimes in various scenarios, achieving speed increases of up to 44 times compared to traditional approaches.

Processing Large Datasets for ADAS Applications using Apache Spark

Processing Large Datasets for ADAS Applications using Apache SparkDatabricks The document outlines the use of Spark for processing large datasets in automated driving applications, focusing on semantic segmentation and the challenges of moving from prototype to production. It presents the architecture of the system, covering ETL processes, model training, and inference, while addressing design considerations like scaling, security, and governance. Key takeaways emphasize the importance of leveraging cloud-based solutions and effective workflow management to enhance the development of perception software for autonomous vehicles.

Massive Data Processing in Adobe Using Delta Lake

Massive Data Processing in Adobe Using Delta LakeDatabricks The document discusses massive data processing at Adobe using Delta Lake, highlighting various aspects such as data representation, schema evolution, and challenges in data ingestion. It emphasizes the performance benefits of utilizing Delta Lake for handling large-scale data efficiently, while considering issues like schema management and replication lag. Key features like ACID transactions and lazy schema on-read approaches are also outlined to address the complexities of multi-tenant data architecture.

Ad

Recently uploaded (20)

All the DataOps, all the paradigms .

All the DataOps, all the paradigms .Lars Albertsson Data warehouses, lakes, lakehouses, streams, fabrics, hubs, vaults, and meshes. We sometimes choose deliberately, sometimes influenced by trends, yet often get an organic blend. But the choices have orders of magnitude in impact on operations cost and iteration speed. Let's dissect the paradigms and their operational aspects once and for all.

Presentation by Tariq & Mohammed (1).pptx

Presentation by Tariq & Mohammed (1).pptxAbooddSandoqaa this presenration is talking about data and analaysis and caucusus analysis of the rotten egg tommetos and viral infections

624753984-Annex-A3-RPMS-Tool-for-Proficient-Teachers-SY-2024-2025.pdf

624753984-Annex-A3-RPMS-Tool-for-Proficient-Teachers-SY-2024-2025.pdfCristineGraceAcuyan The little toys

最新版美国芝加哥大学毕业证(UChicago毕业证书)原版定制

最新版美国芝加哥大学毕业证(UChicago毕业证书)原版定制taqyea 2025原版芝加哥大学毕业证书pdf电子版【q薇1954292140】美国毕业证办理UChicago芝加哥大学毕业证书多少钱?【q薇1954292140】海外各大学Diploma版本,因为疫情学校推迟发放证书、证书原件丢失补办、没有正常毕业未能认证学历面临就业提供解决办法。当遭遇挂科、旷课导致无法修满学分,或者直接被学校退学,最后无法毕业拿不到毕业证。此时的你一定手足无措,因为留学一场,没有获得毕业证以及学历证明肯定是无法给自己和父母一个交代的。

【复刻芝加哥大学成绩单信封,Buy The University of Chicago Transcripts】

购买日韩成绩单、英国大学成绩单、美国大学成绩单、澳洲大学成绩单、加拿大大学成绩单(q微1954292140)新加坡大学成绩单、新西兰大学成绩单、爱尔兰成绩单、西班牙成绩单、德国成绩单。成绩单的意义主要体现在证明学习能力、评估学术背景、展示综合素质、提高录取率,以及是作为留信认证申请材料的一部分。

芝加哥大学成绩单能够体现您的的学习能力,包括芝加哥大学课程成绩、专业能力、研究能力。(q微1954292140)具体来说,成绩报告单通常包含学生的学习技能与习惯、各科成绩以及老师评语等部分,因此,成绩单不仅是学生学术能力的证明,也是评估学生是否适合某个教育项目的重要依据!

我们承诺采用的是学校原版纸张(原版纸质、底色、纹路)我们工厂拥有全套进口原装设备,特殊工艺都是采用不同机器制作,仿真度基本可以达到100%,所有成品以及工艺效果都可提前给客户展示,不满意可以根据客户要求进行调整,直到满意为止!

【主营项目】

一、工作未确定,回国需先给父母、亲戚朋友看下文凭的情况,办理毕业证|办理文凭: 买大学毕业证|买大学文凭【q薇1954292140】芝加哥大学学位证明书如何办理申请?

二、回国进私企、外企、自己做生意的情况,这些单位是不查询毕业证真伪的,而且国内没有渠道去查询国外文凭的真假,也不需要提供真实教育部认证。鉴于此,办理美国成绩单芝加哥大学毕业证【q薇1954292140】国外大学毕业证, 文凭办理, 国外文凭办理, 留信网认证

The Influence off Flexible Work Policies

The Influence off Flexible Work Policiessales480687 This topic explores how flexible work policies—such as remote work, flexible hours, and hybrid models—are transforming modern workplaces. It examines the impact on employee productivity, job satisfaction, work-life balance, and organizational performance. The topic also addresses challenges such as communication gaps, maintaining company culture, and ensuring accountability. Additionally, it highlights how flexible work arrangements can attract top talent, promote inclusivity, and adapt businesses to an evolving global workforce. Ultimately, it reflects the shift in how and where work gets done in the 21st century.

NVIDIA Triton Inference Server, a game-changing platform for deploying AI mod...

NVIDIA Triton Inference Server, a game-changing platform for deploying AI mod...Tamanna36 NVIDIA Triton Inference Server! 🌟

Learn how Triton streamlines AI model deployment with dynamic batching, support for TensorFlow, PyTorch, ONNX, and more, plus GPU-optimized performance. From YOLO11 object detection to NVIDIA Dynamo’s future, it’s your guide to scalable AI inference.

Check out the slides and share your thoughts! 👇

#AI #NVIDIA #TritonInferenceServer #MachineLearning

Artigo - Playing to Win.planejamento docx

Artigo - Playing to Win.planejamento docxKellyXavier15 Excelente artifo para quem está iniciando processo de aquisiçãode planejamento estratégico

Data Visualisation in data science for students

Data Visualisation in data science for studentsconfidenceascend Data visualisation is explained in a simple manner.

一比一原版(TUC毕业证书)开姆尼茨工业大学毕业证如何办理

一比一原版(TUC毕业证书)开姆尼茨工业大学毕业证如何办理taqyed 鉴于此,办理TUC大学毕业证开姆尼茨工业大学毕业证书【q薇1954292140】留学一站式办理学历文凭直通车(开姆尼茨工业大学毕业证TUC成绩单原版开姆尼茨工业大学学位证假文凭)未能正常毕业?【q薇1954292140】办理开姆尼茨工业大学毕业证成绩单/留信学历认证/学历文凭/使馆认证/留学回国人员证明/录取通知书/Offer/在读证明/成绩单/网上存档永久可查!

如果您处于以下几种情况:

◇在校期间,因各种原因未能顺利毕业……拿不到官方毕业证

◇面对父母的压力,希望尽快拿到;

◇不清楚认证流程以及材料该如何准备;

◇回国时间很长,忘记办理;

◇回国马上就要找工作,办给用人单位看;

◇企事业单位必须要求办理的

◇需要报考公务员、购买免税车、落转户口

◇申请留学生创业基金

【办理开姆尼茨工业大学成绩单Buy Technische Universität Chemnitz Transcripts】

购买日韩成绩单、英国大学成绩单、美国大学成绩单、澳洲大学成绩单、加拿大大学成绩单(q微1954292140)新加坡大学成绩单、新西兰大学成绩单、爱尔兰成绩单、西班牙成绩单、德国成绩单。成绩单的意义主要体现在证明学习能力、评估学术背景、展示综合素质、提高录取率,以及是作为留信认证申请材料的一部分。

开姆尼茨工业大学成绩单能够体现您的的学习能力,包括开姆尼茨工业大学课程成绩、专业能力、研究能力。(q微1954292140)具体来说,成绩报告单通常包含学生的学习技能与习惯、各科成绩以及老师评语等部分,因此,成绩单不仅是学生学术能力的证明,也是评估学生是否适合某个教育项目的重要依据!

Residential Zone 4 for industrial village

Residential Zone 4 for industrial villageMdYasinArafat13 based on assumption that failure of such a weld is by shear on the

effective area whether the shear transfer is parallel to or

perpendicular to the axis of the line of fillet weld. In fact, the

strength is greater for shear transfer perpendicular to the weld axis;

however, for simplicity the situations are treated the same.

最新版美国威斯康星大学河城分校毕业证(UWRF毕业证书)原版定制

最新版美国威斯康星大学河城分校毕业证(UWRF毕业证书)原版定制taqyea 2025原版威斯康星大学河城分校毕业证书pdf电子版【q薇1954292140】美国毕业证办理UWRF威斯康星大学河城分校毕业证书多少钱?【q薇1954292140】海外各大学Diploma版本,因为疫情学校推迟发放证书、证书原件丢失补办、没有正常毕业未能认证学历面临就业提供解决办法。当遭遇挂科、旷课导致无法修满学分,或者直接被学校退学,最后无法毕业拿不到毕业证。此时的你一定手足无措,因为留学一场,没有获得毕业证以及学历证明肯定是无法给自己和父母一个交代的。

【复刻威斯康星大学河城分校成绩单信封,Buy University of Wisconsin-River Falls Transcripts】

购买日韩成绩单、英国大学成绩单、美国大学成绩单、澳洲大学成绩单、加拿大大学成绩单(q微1954292140)新加坡大学成绩单、新西兰大学成绩单、爱尔兰成绩单、西班牙成绩单、德国成绩单。成绩单的意义主要体现在证明学习能力、评估学术背景、展示综合素质、提高录取率,以及是作为留信认证申请材料的一部分。

威斯康星大学河城分校成绩单能够体现您的的学习能力,包括威斯康星大学河城分校课程成绩、专业能力、研究能力。(q微1954292140)具体来说,成绩报告单通常包含学生的学习技能与习惯、各科成绩以及老师评语等部分,因此,成绩单不仅是学生学术能力的证明,也是评估学生是否适合某个教育项目的重要依据!

我们承诺采用的是学校原版纸张(原版纸质、底色、纹路)我们工厂拥有全套进口原装设备,特殊工艺都是采用不同机器制作,仿真度基本可以达到100%,所有成品以及工艺效果都可提前给客户展示,不满意可以根据客户要求进行调整,直到满意为止!

【主营项目】

一、工作未确定,回国需先给父母、亲戚朋友看下文凭的情况,办理毕业证|办理文凭: 买大学毕业证|买大学文凭【q薇1954292140】威斯康星大学河城分校学位证明书如何办理申请?

二、回国进私企、外企、自己做生意的情况,这些单位是不查询毕业证真伪的,而且国内没有渠道去查询国外文凭的真假,也不需要提供真实教育部认证。鉴于此,办理美国成绩单威斯康星大学河城分校毕业证【q薇1954292140】国外大学毕业证, 文凭办理, 国外文凭办理, 留信网认证

Using Apache Spark to Solve Sessionization Problem in Batch and Streaming

- 1. Solving sessionization problem with Apache Spark batch and streaming processing Bartosz Konieczny @waitingforcode1

- 2. About me Bartosz Konieczny #dataEngineer #ApacheSparkEnthusiast #AWSuser #waitingforcode.com #becomedataengineer.com #@waitingforcode #github.com/bartosz25 #canalplus #Paris 2

- 3. 3

- 4. Sessions "user activity followed by a closing action or a period of inactivity" 4

- 5. 5 © https://pixabay.com/users/maxmann-665103/ from https://pixabay.com

- 6. Batch architecture 6 data producer sync consumer input logs (DFS) input logs (streaming broker) orchestrator sessions generator <triggers> previous window raw sessions (DFS) output sessions (DFS)

- 7. Streaming architecture 7 data producer sessions generator output sessions (DFS) metadata state <uses> checkpoint location input logs (streaming broker)

- 9. The code val previousSessions = loadPreviousWindowSessions(sparkSession, previousSessionsDir) val sessionsInWindow = sparkSession.read.schema(Visit.Schema) .json(inputDir) val joinedData = previousSessions.join(sessionsInWindow, sessionsInWindow("user_id") === previousSessions("userId"), "fullouter") .groupByKey(log => SessionGeneration.resolveGroupByKey(log)) .flatMapGroups(SessionGeneration.generate(TimeUnit.MINUTES.toMillis(5), windowUpperBound)).cache() joinedData.filter("isActive = true").write.mode(SaveMode.Overwrite).json(outputDir) joinedData.filter(state => !state.isActive) .flatMap(state => state.toSessionOutputState) .coalesce(50).write.mode(SaveMode.Overwrite) .option("compression", "gzip") .json(outputDir) 9

- 10. Full outer join val previousSessions = loadPreviousWindowSessions(sparkSession, previousSessionsDir) val sessionsInWindow = sparkSession.read.schema(Visit.Schema) .json(inputDir) val joinedData = previousSessions.join(sessionsInWindow, sessionsInWindow("user_id") === previousSessions("userId"), "fullouter") .groupByKey(log => SessionGeneration.resolveGroupByKey(log)) .flatMapGroups(SessionGeneration.generate(TimeUnit.MINUTES.toMillis(5), windowUpperBound)) joinedData.filter("isActive = true").write.mode(SaveMode.Overwrite).json(outputDir) joinedData.filter(state => !state.isActive) .flatMap(state => state.toSessionOutputState) .coalesce(50).write.mode(SaveMode.Overwrite) .option("compression", "gzip") .json(outputDir) 10 processing logic previous window active sessions new input logs full outer join

- 11. Watermark simulation val previousSessions = loadPreviousWindowSessions(sparkSession, previousSessionsDir) val sessionsInWindow = sparkSession.read.schema(Visit.Schema) .json(inputDir) val joinedData = previousSessions.join(sessionsInWindow, sessionsInWindow("user_id") === previousSessions("userId"), "fullouter") .groupByKey(log => SessionGeneration.resolveGroupByKey(log)) .flatMapGroups(SessionGeneration.generate(TimeUnit.MINUTES.toMillis(5), windowUpperBound)) joinedData.filter("isActive = true").write.mode(SaveMode.Overwrite).json(outputDir) joinedData.filter(state => !state.isActive) .flatMap(state => state.toSessionOutputState) .coalesce(50).write.mode(SaveMode.Overwrite) .option("compression", "gzip") .json(outputDir) case class SessionIntermediaryState(userId: Long, … expirationTimeMillisUtc: Long, isActive: Boolean) 11

- 12. Save modes val previousSessions = loadPreviousWindowSessions(sparkSession, previousSessionsDir) val sessionsInWindow = sparkSession.read.schema(Visit.Schema) .json(inputDir) val joinedData = previousSessions.join(sessionsInWindow, sessionsInWindow("user_id") === previousSessions("userId"), "fullouter") .groupByKey(log => SessionGeneration.resolveGroupByKey(log)) .flatMapGroups(SessionGeneration.generate(TimeUnit.MINUTES.toMillis(5), windowUpperBound)) joinedData.filter("isActive = true").write.mode(SaveMode.Overwrite).json(outputDir) joinedData.filter(state => !state.isActive) .flatMap(state => state.toSessionOutputState) .coalesce(50).write.mode(SaveMode.Overwrite) .option("compression", "gzip") .json(outputDir) SaveMode.Append ⇒ duplicates & invalid results (e.g. multiplied revenue!) SaveMode.ErrorIfExists ⇒ failures & maintenance burden SaveMode.Ignore ⇒ no data & old data present in case of reprocessing SaveMode.Overwrite ⇒ always fresh data & easy maintenance 12

- 14. The code val writeQuery = query.writeStream.outputMode(OutputMode.Update()) .option("checkpointLocation", s"s3://my-checkpoint-bucket") .foreachBatch((dataset: Dataset[SessionIntermediaryState], batchId: Long) => { BatchWriter.writeDataset(dataset, s"${outputDir}/${batchId}") }) val dataFrame = sparkSession.readStream .format("kafka") .option("kafka.bootstrap.servers", kafkaConfiguration.broker).option(...) .load() val query = dataFrame.selectExpr("CAST(value AS STRING)") .select(functions.from_json($"value", Visit.Schema).as("data")) .select($"data.*").withWatermark("event_time", "3 minutes") .groupByKey(row => row.getAs[Long]("user_id")) .mapGroupsWithState(GroupStateTimeout.EventTimeTimeout()) (mapStreamingLogsToSessions(sessionTimeout)) watermark - late events & state expiration stateful processing - sessions generation checkpoint - fault-tolerance 14

- 15. Checkpoint - fault-tolerance load state for t0 query load offsets to process & write them for t1 query process data write processed offsets write state checkpoint location state store offset log commit log val writeQuery = query.writeStream.outputMode(OutputMode.Update()) .option("checkpointLocation", s"s3://sessionization-demo/checkpoint") .foreachBatch((dataset: Dataset[SessionIntermediaryState], batchId: Long) => { BatchWriter.writeDataset(dataset, s"${outputDir}/${batchId}") }) .start() 15

- 16. Checkpoint - fault-tolerance load state for t1 query load offsets to process & write them for t1 query process data confirm processed offsets & next watermark commit state t2 partition-based checkpoint location state store offset log commit log 16

- 17. Stateful processing update remove get getput,remove write update finalize file make snapshot recover state def mapStreamingLogsToSessions(timeoutDurationMs: Long)(key: Long, logs: Iterator[Row], currentState: GroupState[SessionIntermediaryState]): SessionIntermediaryState = { if (currentState.hasTimedOut) { val expiredState = currentState.get.expire currentState.remove() expiredState } else { val newState = currentState.getOption.map(state => state.updateWithNewLogs(logs, timeoutDurationMs)) .getOrElse(SessionIntermediaryState.createNew(logs, timeoutDurationMs)) currentState.update(newState) currentState.setTimeoutTimestamp(currentState.getCurrentWatermarkMs() + timeoutDurationMs) currentState.get } } 17

- 18. Stateful processing update remove get getput,remove - write update - finalize file - make snapshot recover state 18 .mapGroupsWithState(...) state store TreeMap[Long, ConcurrentHashMap[UnsafeRow, UnsafeRow] ] in-memory storage for the most recent versions 1.delta 2.delta 3.snapshot checkpoint location

- 19. Watermark val sessionTimeout = TimeUnit.MINUTES.toMillis(5) val query = dataFrame.selectExpr("CAST(value AS STRING)") .select(functions.from_json($"value", Visit.Schema).as("data")) .select($"data.*") .withWatermark("event_time", "3 minutes") .groupByKey(row => row.getAs[Long]("user_id")) .mapGroupsWithState(GroupStateTimeout.EventTimeTimeout()) (Mapping.mapStreamingLogsToSessions(sessionTimeout)) 19

- 20. Watermark - late events on-time event late event 20 .mapGroupsWithState(...)

- 21. Watermark - expired state State representation [simplified] {value, TTL configuration} Algorithm: 1. Update all states with new data → eventually extend TTL 2. Retrieve TTL configuration for the query → here: watermark 3. Retrieve all states that expired → no new data in this query & TTL expired 4. Call mapGroupsWithState on it with hasTimedOut param = true & no new data (Iterator.empty) // full implementation: org.apache.spark.sql.execution.streaming.FlatMapGroupsWithStateExec.InputProcessor 21

- 23. Batch

- 24. reschedule your job © https://pics.me.me/just-one-click-and-the-zoo-is-mine-8769663.png

- 25. Streaming

- 27. State store 1. Restored state is the most recent snapshot 2. Restored state is not the most recent snapshot but a snapshot exists 3. Restored state is not the most recent snapshot and a snapshot doesn't exist 27 1.delta 3.snapshot2.delta 1.delta 3.snapshot2.delta 4.delta 1.delta 3.delta2.delta 4.delta

- 28. State store configuration spark.sql.streaming.stateStore: → .minDeltasForSnapshot → .maintenanceInterval 28 spark.sql.streaming: → .maxBatchesToRetainInMemory

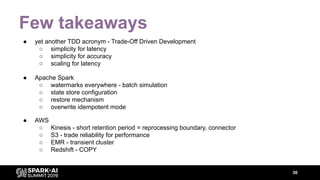

- 30. Few takeaways ● yet another TDD acronym - Trade-Off Driven Development ○ simplicity for latency ○ simplicity for accuracy ○ scaling for latency ● AWS ○ Kinesis - short retention period = reprocessing boundary, connector ○ S3 - trade reliability for performance ○ EMR - transient cluster ○ Redshift - COPY ● Apache Spark ○ watermarks everywhere - batch simulation ○ state store configuration ○ restore mechanism ○ overwrite idempotent mode 30

- 32. Thank you!Bartosz Konieczny @waitingforcode / github.com/bartosz25 / waitingforcode.com Canal+ @canaltechteam