Text classification using Text kernels

- 1. Text Classification Using String Kernels. Presented by Dibyendu Nath & Divya Sambasivan, CS 290D, Spring 2014. Paper by Huma Lodhi, Craig Saunders, et al., Department of Computer Science, Royal Holloway, University of London.

- 2. Intro: Text Classification • The task of assigning a document to one or more categories. • Done manually (library science) or algorithmically (information science, data mining, machine learning). • Learning systems (e.g., neural networks or decision trees) work on feature vectors transformed from the input space. • Text documents cannot readily be described by explicit feature vectors. (Source: lingua-systems.eu)

- 3. Problem Definition • Input: a corpus of documents. • Output: a kernel representing the documents. • This kernel can then be used for classification, clustering, etc. with existing algorithms that work on kernels, e.g., SVMs and the perceptron. • Methodology: find a mapping and a kernel function so that any of the standard kernel methods for classification, clustering, etc. can be applied to the corpus of documents.

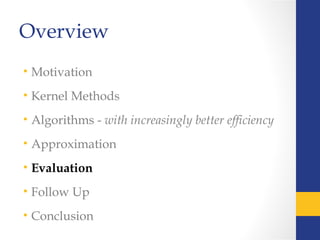
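The slide's point is that once a kernel is available, existing kernel algorithms can be reused unchanged. As a minimal sketch of that idea (not code from the paper), the dual perceptron below touches the data only through a Gram matrix. The `overlap_kernel`, the four toy documents, and their labels are hypothetical stand-ins; any of the string kernels discussed later could supply the Gram matrix instead.

```python
def overlap_kernel(a, b):
    """Hypothetical kernel: number of distinct shared words (inner product of 0/1 word vectors)."""
    return len(set(a.split()) & set(b.split()))

def train_kernel_perceptron(K, y, epochs=10):
    """Dual perceptron on a precomputed Gram matrix K; labels are +1/-1.
    alpha[j] counts the mistakes made on training example j."""
    n = len(y)
    alpha = [0] * n
    for _ in range(epochs):
        mistakes = 0
        for i in range(n):
            score = sum(alpha[j] * y[j] * K[j][i] for j in range(n))
            if y[i] * score <= 0:
                alpha[i] += 1
                mistakes += 1
        if mistakes == 0:  # every training example is classified correctly
            break
    return alpha

def predict(doc, train_docs, alpha, y, kernel):
    """Classify a new document using only kernel evaluations against the training set."""
    score = sum(a * yj * kernel(doc, d) for a, yj, d in zip(alpha, y, train_docs))
    return 1 if score > 0 else -1

docs = ["fish sea", "sea boat", "tank fish", "tank pump"]  # hypothetical toy corpus
y = [1, 1, -1, -1]                                         # hypothetical labels: +1 sea, -1 aquarium
K = [[overlap_kernel(a, b) for b in docs] for a in docs]    # Gram matrix
alpha = train_kernel_perceptron(K, y)
print(alpha)                                                 # [1, 0, 1, 0]
print(predict("sea", docs, alpha, y, overlap_kernel))        # 1
print(predict("pump tank", docs, alpha, y, overlap_kernel))  # -1
```

The training loop never looks inside a document; swapping in a different kernel only changes how `K` and the prediction scores are computed.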

- 4. Overview • Motivation • Kernel Methods • Algorithms - with increasingly better efficiency • Approximation • Evaluation • Follow Up • Conclusion

- 6. Motivation • Text documents cannot readily be described by explicit feature vectors. • Feature extraction requires extensive domain knowledge, and important information may be lost. • Kernel methods are an alternative to explicit feature extraction.

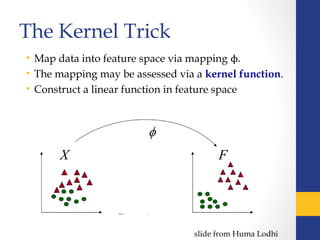

- 8. The Kernel Trick • Map the data into a feature space via a mapping ϕ. • The mapping is accessed implicitly through a kernel function. • Construct a linear function in the feature space. (Slide from Huma Lodhi.)

- 9. Kernel Function (slide from Huma Lodhi) • A kernel function is a measure of similarity: it returns the inner product between mapped data points, $K(x_i, x_j) = \langle \Phi(x_i), \Phi(x_j) \rangle$. • Example:
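To see that a kernel can stand in for an explicit mapping, the sketch below uses the quadratic kernel $K(x, z) = \langle x, z \rangle^2$ on $\mathbb{R}^2$, an illustrative choice not taken from the slides, and checks it against the inner product of its explicit feature map $\Phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$.

```python
import math

def phi(x):
    """Explicit feature map for the quadratic kernel on R^2 (illustrative)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def quad_kernel(x, z):
    """K(x, z) = <x, z>^2, computed in the input space without building phi."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, -1.0)
print(quad_kernel(x, z))    # 1.0
print(dot(phi(x), phi(z)))  # 1.0 up to floating-point rounding
```

The kernel needs two coordinates per point, while the feature map needs three; for string kernels the gap is far larger, which is what makes the trick worthwhile.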

- 10. Kernels for Sequences • Word Kernels [WK]: bag of words, where a word is a sequence of characters followed by punctuation or a space. • N-Grams Kernel [NGK]: features are sequences of n consecutive characters. Example: the 3-grams of “quick brown” are qui, uic, ick, ck_, k_b, _br, bro, row, own. • String Subsequence Kernel [SSK]: features are all (possibly non-contiguous) subsequences of n symbols.
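A minimal sketch of the n-gram kernel, assuming it is the inner product of character n-gram count vectors and that spaces are written as `_` as on the slide; `char_ngrams` and `ngram_kernel` are illustrative names. It also lists the 3-grams of “quick brown”.

```python
from collections import Counter

def char_ngrams(text, n=3):
    """Contiguous character n-grams, with spaces shown as '_' (slide convention)."""
    s = text.replace(" ", "_")
    return [s[i:i + n] for i in range(len(s) - n + 1)]

def ngram_kernel(s, t, n=3):
    """Inner product of the n-gram count vectors of s and t."""
    cs, ct = Counter(char_ngrams(s, n)), Counter(char_ngrams(t, n))
    return sum(cs[g] * ct[g] for g in cs)

print(char_ngrams("quick brown"))
# ['qui', 'uic', 'ick', 'ck_', 'k_b', '_br', 'bro', 'row', 'own']
print(ngram_kernel("quick brown", "brown fox"))  # 3 (bro, row, own)
```

Because `Counter` returns 0 for missing keys, the sum only needs to iterate over one string's n-grams.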

- 11. Word Kernels • Documents are mapped to a very high-dimensional space whose dimensionality equals the number of unique words in the corpus. • Each entry of the vector records the occurrence of a word, either as a 0/1 indicator or, as in the table below, as a count. • Kernel: the inner product between mapped documents is a sum over all common (weighted) words; here $K(\text{Doc 1}, \text{Doc 2}) = 2 \cdot 1 + 0 \cdot 1 + 1 \cdot 0 = 2$.

| | fish | tank | sea |
|---|---|---|---|
| Doc 1 | 2 | 0 | 1 |
| Doc 2 | 1 | 1 | 0 |
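A sketch of the word kernel on two hypothetical documents whose word counts reproduce the fish/tank/sea table. It splits on whitespace only, a simplification of the slide's definition of a word; `word_kernel` is an illustrative name.

```python
from collections import Counter

def word_kernel(doc1, doc2):
    """Bag-of-words kernel: sum over shared words of the product of their counts."""
    c1, c2 = Counter(doc1.split()), Counter(doc2.split())
    return sum(c1[w] * c2[w] for w in c1)

doc1 = "fish sea fish"  # hypothetical: fish 2, tank 0, sea 1
doc2 = "tank fish"      # hypothetical: fish 1, tank 1, sea 0
print(word_kernel(doc1, doc2))  # 2
```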

- 12. String Subsequence Kernels • Basic idea: use non-contiguous subsequences. For the subsequence “c-a-r”, the occurrence in card spans 3 characters, while the occurrence in custard spans 6. • The more subsequences (of length n) two strings have in common, the more similar they are considered. • Decay factor: each occurrence is weighted according to its degree of contiguity by a decay factor λ ∈ (0, 1) raised to the length of the occurrence, so gapped occurrences count for less.
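The weighting can be checked by brute force: the hypothetical helper `subsequence_feature` sums λ raised to the span of every occurrence of u in s, which is the coordinate φ_u(s) formalized in the algorithm definitions. It enumerates all index tuples, so it is only meant for short strings; λ = 0.5 is an arbitrary choice.

```python
from itertools import combinations

def subsequence_feature(u, s, lam):
    """Sum of lam**span over every index tuple where u occurs in s as a
    (possibly non-contiguous) subsequence. Brute force: short strings only."""
    total = 0.0
    for idx in combinations(range(len(s)), len(u)):
        if all(s[i] == c for i, c in zip(idx, u)):
            total += lam ** (idx[-1] - idx[0] + 1)  # span = last index - first + 1
    return total

lam = 0.5
print(subsequence_feature("car", "card", lam))     # 0.125    (lam**3)
print(subsequence_feature("car", "custard", lam))  # 0.015625 (lam**6)
```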

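- 13. Example: the documents we want to compare are car and cat, with n = 2. Each string is mapped to the weights of its length-2 subsequences:

| | c-a | c-t | a-t | c-r | a-r |
|---|---|---|---|---|---|
| φ(car) | $\lambda^2$ | 0 | 0 | $\lambda^3$ | $\lambda^2$ |
| φ(cat) | $\lambda^2$ | $\lambda^3$ | $\lambda^2$ | 0 | 0 |

$K(\text{car}, \text{car}) = 2\lambda^4 + \lambda^6$, $K(\text{cat}, \text{cat}) = 2\lambda^4 + \lambda^6$, and $K(\text{car}, \text{cat}) = \lambda^4$, since c-a is the only shared subsequence.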
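The same numbers can be reproduced by building the feature vectors explicitly. This brute-force sketch (illustrative names, arbitrary λ = 0.5) enumerates every length-n index tuple, so its cost grows combinatorially; it is meant to check the table, not to be used on real documents.

```python
from collections import defaultdict
from itertools import combinations

def ssk_features(s, n, lam):
    """Explicit SSK feature vector: each length-n subsequence u of s maps to
    the sum of lam**span over its occurrences."""
    feats = defaultdict(float)
    for idx in combinations(range(len(s)), n):
        u = "".join(s[i] for i in idx)
        feats[u] += lam ** (idx[-1] - idx[0] + 1)
    return feats

def ssk_explicit(s, t, n, lam):
    """Inner product of the two explicit feature vectors."""
    fs, ft = ssk_features(s, n, lam), ssk_features(t, n, lam)
    return sum(w * ft[u] for u, w in fs.items() if u in ft)

lam = 0.5
print(dict(ssk_features("cat", 2, lam)))  # {'ca': 0.25, 'ct': 0.125, 'at': 0.25}
print(ssk_explicit("car", "cat", 2, lam))  # 0.0625   = lam**4
print(ssk_explicit("car", "car", 2, lam))  # 0.140625 = 2*lam**4 + lam**6
```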

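- 15. Algorithm Definitions • Alphabet: let Σ be a finite alphabet. • String: a finite sequence of characters from Σ; the length of a string s is |s|. • Subsequence: u is a subsequence of s if there is a vector of indices $\mathbf{i} = (i_1, \dots, i_{|u|})$, sorted in ascending order ($1 \le i_1 < \dots < i_{|u|} \le |s|$), such that $u_j = s_{i_j}$; we write $u = s[\mathbf{i}]$. E.g., in ‘lancasters’, $\mathbf{i} = [4, 5, 9]$ picks out u = ‘car’. • The length of the subsequence in s is $l(\mathbf{i}) = i_{|u|} - i_1 + 1$; here $9 - 4 + 1 = 6$.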
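A small helper, hypothetical and 1-indexed to match the slide, that reads off $u = s[\mathbf{i}]$ and its length $l(\mathbf{i})$:

```python
def subsequence_at(s, idx):
    """Return (u, l(i)) for a strictly increasing, 1-based index vector idx (hypothetical helper)."""
    if any(a >= b for a, b in zip(idx, idx[1:])):
        raise ValueError("indices must be strictly increasing")
    u = "".join(s[i - 1] for i in idx)  # 1-based indices, as on the slide
    return u, idx[-1] - idx[0] + 1

print(subsequence_at("lancasters", [4, 5, 9]))  # ('car', 6)
```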

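- 16. Algorithm Definitions • Feature space: $F_n = \mathbb{R}^{\Sigma^n}$, with one coordinate for each string $u \in \Sigma^n$. • Feature mapping: the feature mapping φ for a string s is given by defining the u coordinate $\phi_u(s) = \sum_{\mathbf{i}:\, u = s[\mathbf{i}]} \lambda^{l(\mathbf{i})}$ for each $u \in \Sigma^n$. These features count the occurrences of each subsequence u in s, weighting each occurrence according to its length.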

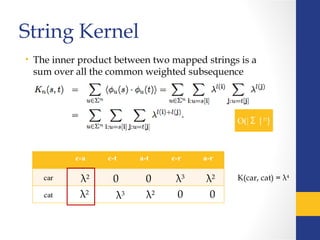

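- 17. String Kernel • The inner product between two mapped strings is a sum over all common weighted subsequences: $K_n(s, t) = \sum_{u \in \Sigma^n} \phi_u(s)\,\phi_u(t) = \sum_{u \in \Sigma^n} \sum_{\mathbf{i}:\, u = s[\mathbf{i}]} \sum_{\mathbf{j}:\, u = t[\mathbf{j}]} \lambda^{l(\mathbf{i}) + l(\mathbf{j})}$. • For the car/cat example, $K(\text{car}, \text{cat}) = \lambda^2 \cdot \lambda^2 = \lambda^4$.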

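- 18. Intermediate Kernel • $K'_i$ counts the length from the beginning of each subsequence through the end of the strings s and t: $K'_i(s, t) = \sum_{u \in \Sigma^i} \sum_{\mathbf{i}:\, u = s[\mathbf{i}]} \sum_{\mathbf{j}:\, u = t[\mathbf{j}]} \lambda^{|s| + |t| - i_1 - j_1 + 2}$. • For car and cat with subsequences of length 2:

| | c-a | c-t | a-t | c-r | a-r |
|---|---|---|---|---|---|
| car | $\lambda^3$ | 0 | 0 | $\lambda^3$ | $\lambda^2$ |
| cat | $\lambda^3$ | $\lambda^3$ | $\lambda^2$ | 0 | 0 |

so $K'_2(\text{car}, \text{cat}) = \lambda^3 \cdot \lambda^3 = \lambda^6$.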
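A brute-force sketch of $K'_i$ under the definition above, using 0-based indices, so the distance from $i_1$ to the end of s is `len(s) - a[0]`. It is a checking tool only (exponential in the string lengths); `k_prime_naive` is a hypothetical name, and λ = 0.5 is arbitrary.

```python
from itertools import combinations

def k_prime_naive(s, t, i, lam):
    """Intermediate kernel K'_i(s, t): each matched pair of occurrences is
    weighted by the distance from its first index to the END of each string."""
    if i == 0:
        return 1.0  # null subsequence
    total = 0.0
    for a in combinations(range(len(s)), i):
        for b in combinations(range(len(t)), i):
            if all(s[p] == t[q] for p, q in zip(a, b)):  # same subsequence u
                total += lam ** ((len(s) - a[0]) + (len(t) - b[0]))
    return total

lam = 0.5
print(k_prime_naive("car", "cat", 2, lam))   # 0.015625 = lam**6
print(k_prime_naive("cart", "cat", 2, lam))  # 0.046875 = 2*lam**7 + lam**5
```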

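- 19. Recursive Computation • Base cases: null subsequence, $K'_0(s, t) = 1$ for all s, t; target string shorter than the subsequence searched for, $K'_i(s, t) = 0$ and $K_i(s, t) = 0$ if $\min(|s|, |t|) < i$.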
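For reference, the recursive step that accompanies these base cases, written out as we understand Lodhi, Saunders, et al.'s formulation. Here sx denotes the string s followed by the character x, and $t[1:j-1]$ is the prefix of t before position j:

```latex
\begin{aligned}
K'_i(sx, t) &= \lambda K'_i(s, t) + \sum_{j:\, t_j = x} K'_{i-1}\bigl(s, t[1:j-1]\bigr)\,\lambda^{|t| - j + 2}, \qquad i = 1, \dots, n-1,\\
K_n(sx, t) &= K_n(s, t) + \sum_{j:\, t_j = x} K'_{n-1}\bigl(s, t[1:j-1]\bigr)\,\lambda^{2}.
\end{aligned}
```

Appending x to s stretches every existing occurrence in $K'_i$ by one position (the factor λ), while the sums add the new occurrences that end with x.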

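- 20. Worked example of the $K'$ recursion with s = car, x = t (so sx = cart) and t = cat, for length-2 subsequences (cart's r-t subsequence has no match in cat and is omitted):

| | c-a | c-t | a-t | c-r | a-r |
|---|---|---|---|---|---|
| car | $\lambda^3$ | 0 | 0 | $\lambda^3$ | $\lambda^2$ |
| cart | $\lambda^4$ | $\lambda^4$ | $\lambda^3$ | $\lambda^4$ | $\lambda^3$ |
| cat | $\lambda^3$ | $\lambda^3$ | $\lambda^2$ | 0 | 0 |

$K'(\text{car}, \text{cat}) = \lambda^6$ and $K'(\text{cart}, \text{cat}) = \lambda^4\lambda^3 + \lambda^4\lambda^3 + \lambda^3\lambda^2 = 2\lambda^7 + \lambda^5$, which the recursion reproduces: $\lambda K'_2(\text{car}, \text{cat}) + K'_1(\text{car}, \text{ca})\,\lambda^2 = \lambda^7 + (\lambda^5 + \lambda^3)\lambda^2$.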

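- 21. Worked example of the $K$ recursion with s = car, x = t (so sx = cart) and t = cat, for length-2 subsequences (cart's r-t subsequence has no match in cat and is omitted):

| | c-a | c-t | a-t | c-r | a-r |
|---|---|---|---|---|---|
| car | $\lambda^2$ | 0 | 0 | $\lambda^3$ | $\lambda^2$ |
| cart | $\lambda^2$ | $\lambda^4$ | $\lambda^3$ | $\lambda^3$ | $\lambda^2$ |
| cat | $\lambda^2$ | $\lambda^3$ | $\lambda^2$ | 0 | 0 |

$K(\text{car}, \text{cat}) = \lambda^4$ and $K(\text{cart}, \text{cat}) = \lambda^4 + \lambda^7 + \lambda^5$, matching the recursion $K_2(\text{cart}, \text{cat}) = K_2(\text{car}, \text{cat}) + K'_1(\text{car}, \text{ca})\,\lambda^2 = \lambda^4 + (\lambda^5 + \lambda^3)\lambda^2$.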

- 22. Recursive Computation: cost • Evaluating the recursion directly costs $O(n|s||t|^2)$, because each step sums over the positions j in t. • Dynamic programming that reuses these partial sums reduces the cost to $O(n|s||t|)$.
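A sketch of the $O(n|s||t|)$ dynamic program, assuming the auxiliary quantity $K''_i(sx, t) = \sum_{j: t_j = x} K'_{i-1}(s, t[1:j-1])\,\lambda^{|t|-j+2}$, which satisfies $K''_i(sx, tz) = \lambda K''_i(sx, t) + [z = x]\,\lambda^2 K'_{i-1}(s, t)$ and lets $K'_i(sx, t) = \lambda K'_i(s, t) + K''_i(sx, t)$ be filled in one pass per row. Function and variable names are illustrative. It reproduces the car/cat and cart/cat values from the worked examples.

```python
def ssk(s, t, n, lam):
    """String subsequence kernel K_n(s, t) by dynamic programming, O(n|s||t|).
    kp[i][a][b] holds K'_i(s[:a], t[:b]); kpp is the running K''_i value."""
    ls, lt = len(s), len(t)
    kp = [[[0.0] * (lt + 1) for _ in range(ls + 1)] for _ in range(n)]
    for a in range(ls + 1):
        for b in range(lt + 1):
            kp[0][a][b] = 1.0  # K'_0 = 1 (null subsequence)
    for i in range(1, n):
        for a in range(1, ls + 1):
            x = s[a - 1]
            kpp = 0.0  # K''_i(s[:a], empty prefix of t) = 0
            for b in range(1, lt + 1):
                # K''_i(sx, tz) = lam * K''_i(sx, t) + [z == x] * lam^2 * K'_{i-1}(s, t)
                match = lam * lam * kp[i - 1][a - 1][b - 1] if t[b - 1] == x else 0.0
                kpp = lam * kpp + match
                # K'_i(sx, t) = lam * K'_i(s, t) + K''_i(sx, t)
                kp[i][a][b] = lam * kp[i][a - 1][b] + kpp
    # K_n(sx, t) = K_n(s, t) + sum over j with t_j == x of lam^2 * K'_{n-1}(s, t[:j-1])
    total = 0.0
    for a in range(1, ls + 1):
        for b in range(1, lt + 1):
            if t[b - 1] == s[a - 1]:
                total += lam * lam * kp[n - 1][a - 1][b - 1]
    return total

lam = 0.5
print(ssk("car", "cat", 2, lam))   # 0.0625    = lam**4
print(ssk("cart", "cat", 2, lam))  # 0.1015625 = lam**4 + lam**5 + lam**7
```

The table uses $O(n|s||t|)$ memory; keeping only the previous layer in i would reduce that to $O(|s||t|)$ without changing the result.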

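- 23. Efficiency • Enumerating all subsequences of length n explicitly: $O(|\Sigma|^n)$. • Evaluating the recursion directly: $O(n|s||t|^2)$. • Recursion with dynamic programming: $O(n|s||t|)$.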

- 27. Kernel Approximation • Suppose we have some training points $(x_i, y_i) \in X \times Y$ and some kernel function $K(x, z)$ corresponding to a feature space mapping $\phi: X \to F$ such that $K(x, z) = \langle \phi(x), \phi(z) \rangle$. • Consider a set of vectors $S = \{s_i \in X\}$. If the cardinality of S equals the dimensionality of F and the vectors $\phi(s_i)$ are orthogonal, i.e. $K(s_i, s_j) = C\delta_{ij}$, then $K(x, z) = \frac{1}{C} \sum_{s_i \in S} K(x, s_i)\, K(z, s_i)$.

- 28. Kernel Approximation • If, instead of forming a complete orthonormal basis, the cardinality of $\tilde{S} \subseteq S$ is less than the dimensionality of F, or the vectors $\tilde{s}_i$ are not fully orthogonal, then we can construct an approximation to the kernel K: $K(x, z) \approx \frac{1}{C} \sum_{s_i \in \tilde{S}} K(x, s_i)\, K(z, s_i)$. • If the set $\tilde{S}$ is carefully constructed, a Gram matrix closely aligned to the true Gram matrix can be produced at a fraction of the computational cost. • Problem: choose the set $\tilde{S}$ so that the vectors $\phi(\tilde{s}_i)$ are orthogonal.
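A minimal numeric check of the approximation formula, using the plain linear kernel on $\mathbb{R}^4$ and scaled standard basis vectors as the orthogonal set; both are illustrative assumptions, chosen because $K(s_i, s_j) = C\delta_{ij}$ holds exactly. With the full basis the sum recovers the kernel; with a subset it only approximates it.

```python
def linear_kernel(x, z):
    return sum(a * b for a, b in zip(x, z))

def approx_kernel(x, z, basis, kernel, C):
    """(1/C) * sum_i K(x, s_i) K(z, s_i), assuming K(s_i, s_j) = C * delta_ij."""
    return sum(kernel(x, s) * kernel(z, s) for s in basis) / C

d, scale = 4, 2.0
basis = [[scale if k == i else 0.0 for k in range(d)] for i in range(d)]  # orthogonal s_i
C = scale ** 2  # K(s_i, s_i) for the linear kernel
x, z = [1.0, 2.0, 0.0, 3.0], [2.0, 1.0, 5.0, 1.0]

print(linear_kernel(x, z))                               # 7.0
print(approx_kernel(x, z, basis, linear_kernel, C))      # 7.0 (full basis: exact)
print(approx_kernel(x, z, basis[:2], linear_kernel, C))  # 4.0 (subset: approximation)
```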

- 29. Selecting Feature Subset • Heuristic for obtaining the set $\tilde{S}$: 1. Choose a substring size n. 2. Enumerate all possible contiguous strings of length n. 3. Choose the x strings of length n that occur most frequently in the dataset; these form $\tilde{S}$. • By definition, all such strings of length n are orthogonal (i.e. $K(s_i, s_j) = C\delta_{ij}$ for some constant C) when used in conjunction with the string kernel of degree n, because the only length-n subsequence of a length-n string is the string itself, with weight $\lambda^n$; hence $C = \lambda^{2n}$.
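A sketch of the heuristic for the string kernel. Since each selected n-gram $s_i$ has exactly one length-n subsequence (itself, with weight $\lambda^n$), $K(x, s_i) = \lambda^n \phi_{s_i}(x)$ and $C = \lambda^{2n}$, so the approximation reduces to $\sum_i \phi_{s_i}(x)\,\phi_{s_i}(z)$. `phi_u` computes one coordinate in a single left-to-right pass; all names and the toy corpus are hypothetical.

```python
from collections import Counter

def top_ngrams(corpus, n, x):
    """The x most frequent contiguous n-grams in the corpus."""
    counts = Counter(doc[i:i + n] for doc in corpus for i in range(len(doc) - n + 1))
    return [g for g, _ in counts.most_common(x)]

def phi_u(u, s, lam):
    """phi_u(s): sum of lam**span over occurrences of u in s, in O(|u||s|)."""
    n = len(u)
    w = [1.0] + [0.0] * (n - 1)  # w[k]: partial matches of u[:k], span measured to current char
    total = 0.0
    for c in s:
        if c == u[-1]:
            total += lam * w[n - 1]  # complete matches ending at this character
        for k in range(n - 1, 0, -1):  # high to low, so w[k - 1] is still the old value
            w[k] = lam * w[k] + (lam * w[k - 1] if c == u[k - 1] else 0.0)
    return total

def approx_ssk(s, t, feats, lam):
    """Approximate K_n(s, t) using only the selected n-gram coordinates."""
    return sum(phi_u(u, s, lam) * phi_u(u, t, lam) for u in feats)

corpus = ["cat", "car", "cart"]   # hypothetical toy corpus
feats = top_ngrams(corpus, 2, 2)  # ['ca', 'ar']
print(feats)
print(approx_ssk("car", "cat", feats, 0.5))  # 0.0625 (c-a is the only shared feature)
```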

- 32. Evaluation • Dataset: Reuters-21578, ModApte split. • Categories selected: • Precision = (relevant documents categorized as relevant) / (all documents categorized as relevant). • Recall = (relevant documents categorized as relevant) / (all relevant documents). • $F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$.
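The three measures for a single category, as a sketch over sets of document ids (the ids below are hypothetical):

```python
def precision_recall_f1(predicted, relevant):
    """predicted: ids categorized as relevant; relevant: ids that truly are."""
    tp = len(predicted & relevant)  # relevant documents categorized as relevant
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(relevant) if relevant else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1

p, r, f1 = precision_recall_f1({1, 2, 3, 4}, {2, 3, 5})
print(p, r, f1)  # precision 0.5, recall 2/3, F1 = 4/7
```

Returning 0 when there are no true positives avoids dividing by zero when precision and recall are both 0.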

- 33. Evaluation

- 34. Evaluation

- 35. Evaluation: Effectiveness of Sequence Length (figure panels annotated k = 7, k = 5, k = 6, k = 5, k = 5, k = 5, k = 5, k = 5)

- 36. Evaluation: Effectiveness of Decay Factor (figure panels annotated λ = 0.3, λ = 0.03, λ = 0.05, λ = 0.03)

- 38. Follow Up • String kernels over sequences of words rather than characters: less computationally demanding, no fixed decay factor, and combinations of string kernels. Cancedda, Nicola, et al. "Word sequence kernels." The Journal of Machine Learning Research 3 (2003): 1059–1082. • Extracting semantic relations between entities in natural-language text, based on a generalization of subsequence kernels. Bunescu, Razvan, and Raymond J. Mooney. "Subsequence kernels for relation extraction." NIPS 2005.

- 39. Follow Up • Homology: a computational biology method for identifying the ancestry of proteins. The model should tolerate up to m mismatches. The kernels used in this method measure sequence similarity based on shared occurrences of length-k subsequences, counted with up to m mismatches.
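A naive sketch of a (k, m)-mismatch kernel, assuming the common formulation over contiguous k-mers: each k-mer β over the alphabet gets a coordinate counting the k-mers of the sequence within Hamming distance m of β. The DNA alphabet `ACGT` keeps the example small (proteins would use 20 amino acids), and enumerating all |alphabet|^k k-mers is only practical for small k; the function names are hypothetical.

```python
from collections import Counter
from itertools import product

def mismatch_features(x, k, m, alphabet):
    """For each k-mer beta over the alphabet, count the k-mers of x within
    Hamming distance m of beta (naive: enumerates all len(alphabet)**k betas)."""
    kmers = [x[i:i + k] for i in range(len(x) - k + 1)]
    feats = Counter()
    for beta in map("".join, product(alphabet, repeat=k)):
        for a in kmers:
            if sum(p != q for p, q in zip(a, beta)) <= m:
                feats[beta] += 1
    return feats

def mismatch_kernel(x, y, k, m, alphabet):
    """Inner product of the two mismatch feature vectors."""
    fx, fy = mismatch_features(x, k, m, alphabet), mismatch_features(y, k, m, alphabet)
    return sum(c * fy[b] for b, c in fx.items())

print(mismatch_kernel("ACGT", "ACGA", 3, 0, "ACGT"))  # 1 (only ACG is shared exactly)
print(mismatch_kernel("AC", "AG", 2, 1, "ACGT"))      # 4 (AA, AC, AG, AT are near both)
```

With m = 0 the kernel reduces to counting exactly shared k-mers; raising m lets near-matches contribute, which is the tolerance the slide describes.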

- 41. Conclusion • Key idea: use non-contiguous string subsequences to compute similarity between documents, with a decay factor that discounts similarity according to the degree of contiguity. • The method is highly computationally intensive; the authors reduced the time complexity from $O(|\Sigma|^n)$ to $O(n|s||t|)$ with a dynamic programming approach. • An even less intensive alternative: kernel approximation by feature subset selection. • k and λ were estimated empirically from the experimental results. • The method showed promising results only for small datasets. • For small datasets, it seems to mimic stemming.

- 42. Any Q? Thank You :)
